Word embeddings generate fixed vector representations for words based on their overall usage in a corpus, capturing general semantic relationships but lacking sensitivity to word meaning shifts in different contexts. Contextual embeddings, derived from models like BERT or GPT, produce dynamic vectors reflecting the specific context in which a word appears, enabling nuanced understanding of polysemous words and improved performance in downstream natural language processing tasks. This distinction makes contextual embeddings crucial for applications requiring deep semantic comprehension and contextual relevance.

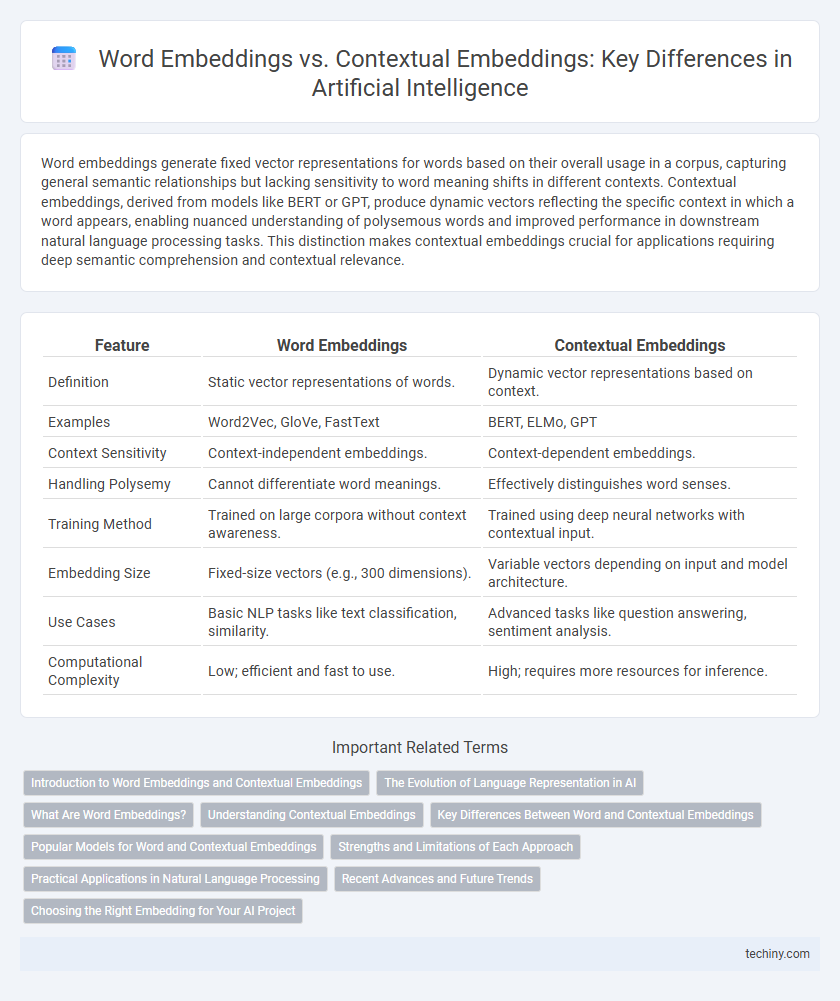

Table of Comparison

| Feature | Word Embeddings | Contextual Embeddings |
|---|---|---|
| Definition | Static vector representations of words. | Dynamic vector representations based on context. |
| Examples | Word2Vec, GloVe, FastText | BERT, ELMo, GPT |
| Context Sensitivity | Context-independent: one vector per word. | Context-dependent: one vector per word occurrence. |
| Handling Polysemy | All senses of a word share one vector. | Different senses receive different vectors. |
| Training Method | Trained on large corpora; context is used during training but not at lookup time. | Trained as deep neural networks that read the surrounding text at inference time. |
| Embedding Size | Fixed-size vectors (e.g., 300 dimensions). | Fixed size per token, set by the model architecture (e.g., 768 for BERT-base); the number of vectors grows with input length. |
| Use Cases | Basic NLP tasks like text classification, similarity. | Advanced tasks like question answering, named entity recognition. |
| Computational Complexity | Low; efficient and fast to use. | High; requires more resources for inference. |

Introduction to Word Embeddings and Contextual Embeddings

Word embeddings represent words as fixed vectors in a continuous vector space, capturing semantic relationships based on their co-occurrence in large text corpora. Contextual embeddings generate dynamic word representations that vary depending on the surrounding context within a sentence, enabling models to understand polysemy and nuanced meanings. Techniques like Word2Vec and GloVe exemplify static embeddings, while models such as BERT and ELMo revolutionize natural language processing by producing context-aware embeddings.
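To make "co-occurrence" concrete, the following is a minimal sketch that counts how often two words appear within a small window of each other. The corpus, the `window` size, and the function name `cooccurrence_counts` are assumptions chosen for illustration. GloVe is trained on counts of this kind, while Word2Vec learns from the same windowed word pairs without building the table explicitly.

```python
from collections import Counter

def cooccurrence_counts(sentences, window=2):
    """Count ordered word pairs that appear within `window` tokens of each other."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # a token does not co-occur with itself
                    counts[(word, tokens[j])] += 1
    return counts

# Toy corpus, far too small to learn useful vectors from.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
]
counts = cooccurrence_counts(corpus, window=2)
print(counts[("sat", "on")])   # pairs seen together in both sentences
print(counts[("cat", "dog")])  # never in the same window, so the count is 0
```

Words that share many neighbours ("cat" and "dog" both appear near "sat") end up with similar count rows, which is the signal static embedding methods compress into dense vectors.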

The Evolution of Language Representation in AI

Word embeddings such as Word2Vec and GloVe revolutionized natural language processing by representing words as fixed vectors capturing semantic similarity based on co-occurrence statistics. Contextual embeddings like BERT and GPT further advanced language representation by generating dynamic word vectors conditioned on surrounding text, enabling deeper understanding of polysemy and context-dependent meanings. This evolution from static to contextual embeddings has significantly enhanced AI capabilities in tasks like sentiment analysis, machine translation, and contextual language understanding.

What Are Word Embeddings?

Word embeddings are vector representations of words that capture semantic meaning based on co-occurrence in large text corpora. These fixed embeddings, such as Word2Vec or GloVe, map words to a continuous, relatively low-dimensional space in which semantically similar words have similar vectors. Unlike contextual embeddings, word embeddings do not change based on the word's context within a sentence.
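A static embedding behaves like a lookup table. The sketch below uses hypothetical, hand-written 3-dimensional vectors (real Word2Vec or GloVe vectors are learned and much larger) to show two properties described above: related words have a higher cosine similarity, and a word receives the same vector no matter which sentence it appears in.

```python
import math

# Hypothetical vectors, hand-picked for illustration only.
STATIC_VECTORS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
    "bank":  [0.5, 0.5, 0.5],
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def embed(sentence):
    """Look up one fixed vector per known word; the sentence plays no role."""
    words = sentence.lower().split()
    return {w: STATIC_VECTORS[w] for w in words if w in STATIC_VECTORS}

sim_royal = cosine(STATIC_VECTORS["king"], STATIC_VECTORS["queen"])
sim_fruit = cosine(STATIC_VECTORS["king"], STATIC_VECTORS["apple"])
print(f"king~queen: {sim_royal:.3f}, king~apple: {sim_fruit:.3f}")

# "bank" gets the identical vector in both sentences.
river = embed("the river bank")["bank"]
money = embed("the bank loan")["bank"]
print(river == money)
```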

Understanding Contextual Embeddings

Contextual embeddings generate dynamic word representations by considering the surrounding text, which lets them capture polysemy and supports word sense disambiguation. Models like BERT and GPT use transformers to produce embeddings that adapt to sentence context, improving tasks such as sentiment analysis, machine translation, and question answering. This approach contrasts with static word embeddings like Word2Vec, which assign a single vector to each word regardless of context, limiting their ability to represent nuanced meanings.
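The core idea can be illustrated with a deliberately simplified sketch: each token's output vector is a softmax-weighted mix of all token vectors in the sentence. This is unparameterized dot-product self-attention, not BERT or GPT; it has no learned projections, heads, or layers, and the 2-dimensional vectors in `STATIC` are hypothetical values chosen by hand.

```python
import math

# Hypothetical static vectors: dimension 0 loosely means "nature",
# dimension 1 loosely means "finance".
STATIC = {
    "river": [1.0, 0.0],
    "loan":  [0.0, 1.0],
    "bank":  [0.5, 0.5],
    "the":   [0.1, 0.1],
}

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def contextualize(tokens):
    """Return one vector per token, each a weighted mix of all token vectors."""
    vectors = [STATIC[t] for t in tokens]
    output = []
    for query in vectors:
        # Score every token against the current one with a dot product.
        scores = [sum(q * k for q, k in zip(query, key)) for key in vectors]
        weights = softmax(scores)
        mixed = [
            sum(w * v[d] for w, v in zip(weights, vectors))
            for d in range(len(query))
        ]
        output.append(mixed)
    return output

river_tokens = ["the", "river", "bank"]
loan_tokens = ["the", "bank", "loan"]
bank_by_river = contextualize(river_tokens)[river_tokens.index("bank")]
bank_by_loan = contextualize(loan_tokens)[loan_tokens.index("bank")]
print(bank_by_river)  # leans toward the "nature" dimension
print(bank_by_loan)   # leans toward the "finance" dimension
```

The same input word now yields two different vectors, each pulled toward its neighbours. Real transformer models do this with learned weights across many layers, which is where both their accuracy and their cost come from.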

Key Differences Between Word and Contextual Embeddings

Word embeddings represent each word as a fixed vector regardless of context, capturing general semantic meanings based on co-occurrence statistics from large text corpora. Contextual embeddings generate dynamic vectors for words depending on their surrounding context using deep learning models like BERT and GPT, enabling nuanced understanding of polysemous words. The key difference lies in static versus context-sensitive representations, where contextual embeddings significantly improve tasks such as word sense disambiguation and contextual language comprehension.

Popular Models for Word and Contextual Embeddings

Word embeddings such as Word2Vec, GloVe, and FastText generate fixed vector representations for words based on co-occurrence statistics in large corpora, capturing semantic similarity in a static manner. Contextual embeddings, pioneered by models like ELMo, BERT, and GPT, produce dynamic word vectors that vary depending on surrounding text, enabling a deeper understanding of polysemy and context-dependent meanings. Popular models like BERT leverage transformer architectures to deliver state-of-the-art performance in natural language understanding tasks through bidirectional context modeling.

Strengths and Limitations of Each Approach

Word embeddings like Word2Vec and GloVe efficiently capture semantic relationships between words through fixed vector representations but struggle with polysemy and context-dependent meanings. Contextual embeddings, exemplified by models such as BERT and GPT, dynamically generate word vectors based on sentence context, improving accuracy in tasks involving ambiguity and idiomatic expressions. However, contextual embeddings demand significantly more compute for both training and inference, which limits their deployment in resource-constrained environments.
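One part of the resource gap is easy to estimate by hand: a static model stores one vector per vocabulary entry, whereas contextual vectors exist per token occurrence. The sketch below compares storage only, under assumed sizes (a 100,000-word vocabulary, a 10-million-token corpus, 32-bit floats); it does not account for the forward pass a contextual model must run to produce each vector, and the function names are hypothetical.

```python
def static_table_bytes(vocab_size, dim, bytes_per_value=4):
    """Memory for a static lookup table: one vector per vocabulary entry."""
    return vocab_size * dim * bytes_per_value

def contextual_cache_bytes(num_tokens, dim, bytes_per_value=4):
    """Memory to store contextual vectors: one vector per token occurrence."""
    return num_tokens * dim * bytes_per_value

# Assumed, illustrative sizes; not measurements of any specific system.
static_mb = static_table_bytes(vocab_size=100_000, dim=300) / 1e6
contextual_mb = contextual_cache_bytes(num_tokens=10_000_000, dim=768) / 1e6

print(f"static table:     {static_mb:,.0f} MB")
print(f"contextual cache: {contextual_mb:,.0f} MB")
```

Under these assumptions the static table needs about 120 MB regardless of corpus size, while caching contextual vectors grows linearly with the amount of text processed.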

Practical Applications in Natural Language Processing

Word embeddings like Word2Vec and GloVe provide static vector representations of words, enabling efficient semantic similarity and clustering tasks in natural language processing applications such as sentiment analysis and information retrieval. Contextual embeddings from models like BERT and GPT capture dynamic word meanings based on sentence context, significantly improving performance in tasks like named entity recognition, machine translation, and question answering. Deploying contextual embeddings enhances understanding of polysemy and nuances in language, driving advancements in dialogue systems and context-aware search engines.
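As a sketch of how static vectors can support information retrieval, the example below averages word vectors into a sentence vector and ranks documents by cosine similarity to a query. Averaging is one common baseline, not the only option, and the vectors in `VECTORS` are hypothetical values written by hand rather than output from a trained model.

```python
import math

# Hypothetical 3-dimensional word vectors for illustration only.
VECTORS = {
    "cheap":   [0.9, 0.1, 0.0],
    "budget":  [0.8, 0.2, 0.0],
    "flights": [0.1, 0.9, 0.0],
    "airfare": [0.2, 0.8, 0.1],
    "pasta":   [0.0, 0.1, 0.9],
    "recipe":  [0.1, 0.0, 0.8],
}

def sentence_vector(text):
    """Average the vectors of known words; unknown words are skipped."""
    known = [VECTORS[w] for w in text.lower().split() if w in VECTORS]
    if not known:
        raise ValueError("no known words in text")
    dim = len(known[0])
    return [sum(v[d] for v in known) / len(known) for d in range(dim)]

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def rank(query, documents):
    """Return (score, document) pairs sorted from most to least similar."""
    q = sentence_vector(query)
    scored = [(cosine(q, sentence_vector(doc)), doc) for doc in documents]
    return sorted(scored, reverse=True)

documents = ["budget airfare", "pasta recipe"]
ranking = rank("cheap flights", documents)
print(ranking[0][1])  # prints "budget airfare"
```

The query shares no words with the top result; the match comes entirely from vector similarity, which is what keyword search alone cannot provide.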

Recent Advances and Future Trends

Recent advances in artificial intelligence highlight a clear shift from traditional word embeddings like Word2Vec and GloVe toward contextual embeddings generated by transformer models such as BERT and GPT, which capture nuanced meanings based on surrounding text. Contextual embeddings dynamically adjust representations for polysemous words, enhancing performance in downstream tasks like machine translation, sentiment analysis, and question answering. Future trends point to the integration of multimodal data and continual learning techniques to create embeddings that better understand context across diverse languages and real-world scenarios.

Choosing the Right Embedding for Your AI Project

Word embeddings such as Word2Vec and GloVe provide fixed vector representations that capture semantic relationships but lack context sensitivity, making them suitable for projects with static language needs or limited computational resources. Contextual embeddings like BERT and GPT dynamically generate vectors based on surrounding text, offering superior performance in tasks requiring nuanced understanding, such as sentiment analysis and question answering. Selecting the appropriate embedding hinges on the complexity of the language tasks, model interpretability requirements, and available computational power to balance accuracy and efficiency.

Word Embeddings vs Contextual Embeddings Infographic

techiny.com
