Overfitting occurs when an AI model learns the training data too well, capturing noise instead of underlying patterns, leading to poor generalization on new data. Underfitting happens when the model is too simple to capture the complexity of the data, resulting in high error rates on both training and testing datasets. Balancing model complexity and training data quality is crucial to avoid overfitting and underfitting in artificial intelligence applications.

Table of Comparison

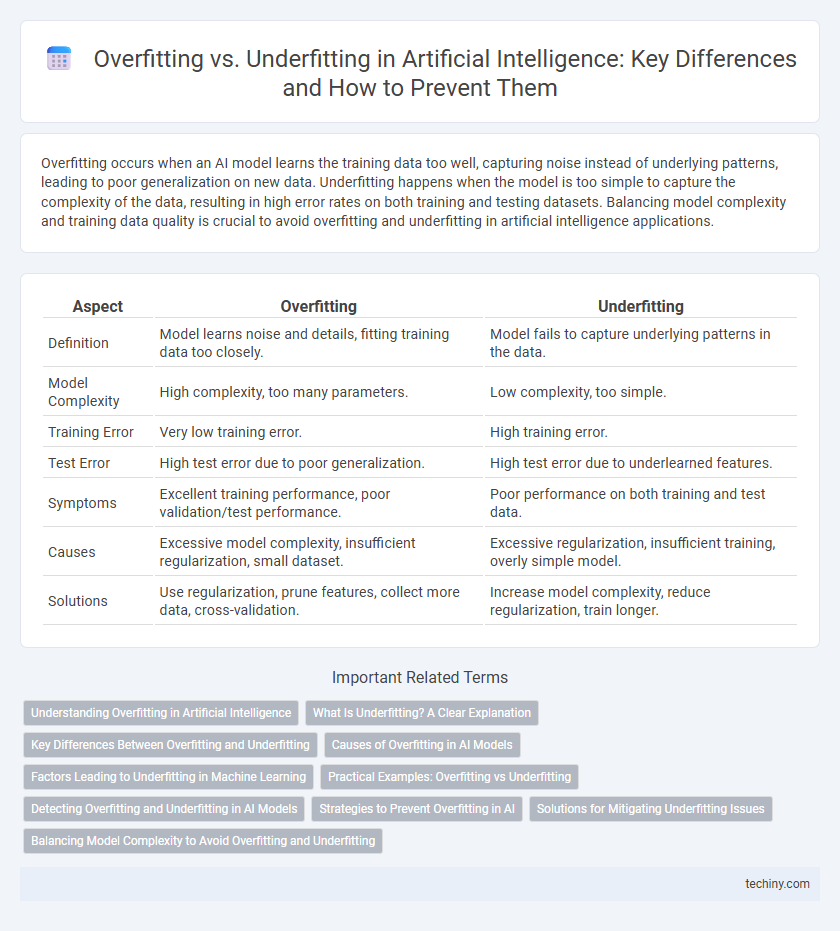

| Aspect | Overfitting | Underfitting |

|---|---|---|

| Definition | Model learns noise and details, fitting training data too closely. | Model fails to capture underlying patterns in the data. |

| Model Complexity | High complexity, too many parameters. | Low complexity, too simple. |

| Training Error | Very low training error. | High training error. |

| Test Error | High test error due to poor generalization. | High test error due to underlearned features. |

| Symptoms | Excellent training performance, poor validation/test performance. | Poor performance on both training and test data. |

| Causes | Excessive model complexity, insufficient regularization, small dataset. | Excessive regularization, insufficient training, overly simple model. |

| Solutions | Use regularization, prune features, collect more data, cross-validation. | Increase model complexity, reduce regularization, train longer. |

Understanding Overfitting in Artificial Intelligence

Overfitting in artificial intelligence occurs when a model learns the training data too well, capturing noise and outliers instead of the underlying patterns. This phenomenon leads to poor generalization on unseen data, causing high accuracy on training sets but significantly lower performance on validation or test sets. Techniques such as cross-validation, regularization, and pruning are essential to mitigate overfitting and enhance model robustness.

What Is Underfitting? A Clear Explanation

Underfitting occurs when an artificial intelligence model is too simple to capture the underlying patterns in the data, resulting in poor performance on both training and test datasets. This typically happens when the model has insufficient complexity, such as too few parameters or inadequate training iterations, leading to high bias and low variance. Detecting underfitting involves evaluating model metrics like high training error, indicating the need for a more complex model or additional features to improve accuracy.

Key Differences Between Overfitting and Underfitting

Overfitting occurs when a machine learning model captures noise in the training data, leading to high accuracy on training sets but poor generalization on unseen data. Underfitting happens when a model is too simple to capture the underlying patterns, resulting in low accuracy on both training and test datasets. Key differences include that overfitting relates to model complexity and variance, whereas underfitting relates to bias and insufficient model capacity.

Causes of Overfitting in AI Models

Overfitting in AI models commonly arises from using excessively complex algorithms that capture noise in the training data rather than underlying patterns. Limited or unrepresentative training data often causes models to memorize specific details, reducing generalization to new inputs. Insufficient regularization techniques, such as dropout or weight decay, also contribute to overfitting by failing to constrain model complexity during learning.

Factors Leading to Underfitting in Machine Learning

Underfitting in machine learning arises when a model is too simplistic to capture the underlying patterns of the training data, often caused by insufficient model complexity or inadequate feature representation. Factors such as a limited number of training epochs, excessive regularization, and using a model with too few parameters contribute to underfitting by preventing the algorithm from learning the necessary signal. Insufficient training data quality or ignoring relevant input features also leads to poor generalization, resulting in high bias and low predictive performance.

Practical Examples: Overfitting vs Underfitting

Overfitting occurs when a machine learning model captures noise instead of the underlying pattern, such as a deep neural network that achieves near-perfect accuracy on training data but performs poorly on new images. Underfitting happens when a model is too simple, like a linear regression applied to complex, nonlinear data, leading to high errors on both training and test sets. Practical examples include an overfit spam filter that flags legitimate emails as spam and an underfit house price predictor that fails to capture important factors, resulting in inaccurate price estimates.

Detecting Overfitting and Underfitting in AI Models

Detecting overfitting in AI models involves monitoring when the training error decreases while the validation error starts to increase, indicating the model is too closely tailored to the training data and fails to generalize. Underfitting is identified by persistently high errors in both training and validation datasets, showing the model lacks the complexity to capture underlying patterns. Techniques such as cross-validation, learning curves analysis, and evaluation of model performance metrics help accurately diagnose these issues in machine learning workflows.

Strategies to Prevent Overfitting in AI

Strategies to prevent overfitting in artificial intelligence include using regularization techniques such as L1 and L2 to penalize complex models, implementing dropout layers in neural networks to randomly deactivate neurons during training, and applying early stopping methods to halt training once performance on validation data ceases to improve. Cross-validation is also essential to ensure model generalization by evaluating against multiple data subsets. Collecting more diverse training data and simplifying model architecture help reduce the risk of capturing noise instead of underlying patterns.

Solutions for Mitigating Underfitting Issues

To mitigate underfitting in artificial intelligence models, increasing model complexity by adding more layers or neurons can enhance the model's capacity to capture underlying patterns. Employing feature engineering techniques to include more relevant variables and improving data quality ensures the model receives informative inputs. Additionally, training the model for more epochs or reducing regularization helps the model fit the data more accurately without oversimplifying its predictions.

Balancing Model Complexity to Avoid Overfitting and Underfitting

Balancing model complexity is crucial to avoid overfitting, where the AI model learns noise and details from training data, and underfitting, where the model fails to capture underlying patterns. Techniques such as cross-validation, regularization (L1, L2), and pruning optimize model parameters to improve generalization on unseen data. Properly tuning hyperparameters and selecting appropriate model architectures help maintain this balance for robust, accurate AI performance.

Overfitting vs Underfitting Infographic

techiny.com

techiny.com