LSTM and GRU are advanced recurrent neural network architectures designed to address the vanishing gradient problem in sequence modeling tasks. LSTM uses separate memory cells and three gates (input, forget, output) to capture long-term dependencies, while GRU combines the forget and input gates into a single update gate, resulting in a simpler structure with fewer parameters. GRU often achieves comparable performance to LSTM with faster training times, making it a preferred choice for applications with limited computational resources.

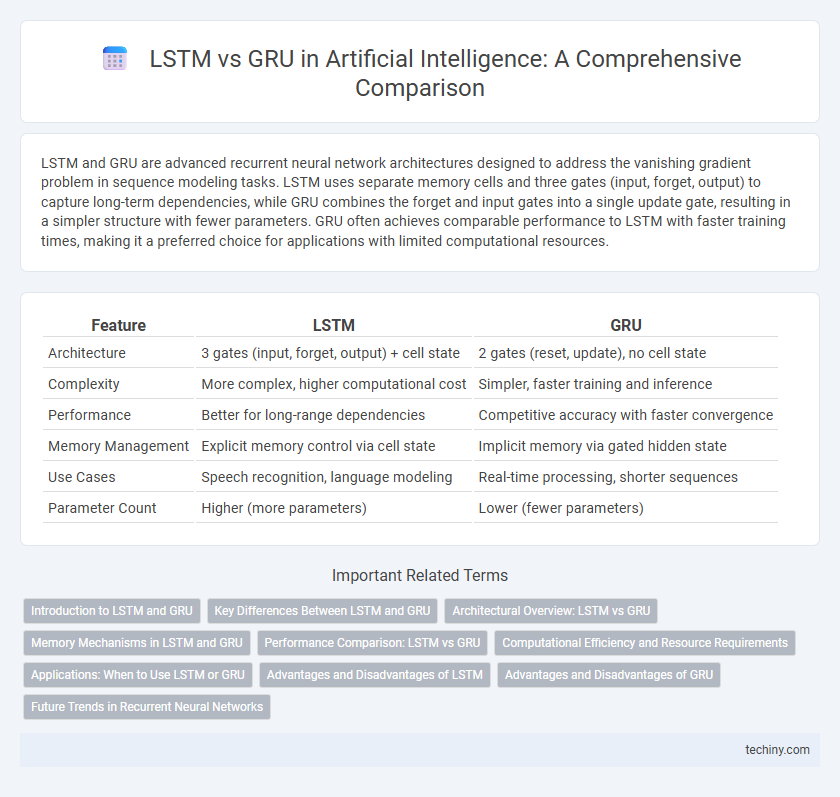

Table of Comparison

| Feature | LSTM | GRU |

|---|---|---|

| Architecture | 3 gates (input, forget, output) + cell state | 2 gates (reset, update), no cell state |

| Complexity | More complex, higher computational cost | Simpler, faster training and inference |

| Performance | Better for long-range dependencies | Competitive accuracy with faster convergence |

| Memory Management | Explicit memory control via cell state | Implicit memory via gated hidden state |

| Use Cases | Speech recognition, language modeling | Real-time processing, shorter sequences |

| Parameter Count | Higher (more parameters) | Lower (fewer parameters) |

Introduction to LSTM and GRU

Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRU) are advanced types of recurrent neural networks designed to capture long-range dependencies in sequential data. LSTMs utilize three gates--input, output, and forget gates--to regulate information flow, allowing them to maintain memory over extended sequences. GRUs simplify this architecture by combining the input and forget gates into a single update gate, resulting in fewer parameters and often faster training without significant loss in performance.

Key Differences Between LSTM and GRU

LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) are both advanced recurrent neural network architectures designed to address the vanishing gradient problem in sequence modeling. LSTM features three gates--input, forget, and output--that regulate memory cell states, providing fine-grained control over information flow, whereas GRU simplifies this mechanism with only two gates: reset and update, enabling faster training and fewer parameters. GRU tends to perform well on smaller datasets due to its simpler structure, while LSTM is often preferred for complex sequential data requiring longer memory retention.

Architectural Overview: LSTM vs GRU

Long Short-Term Memory (LSTM) networks feature a complex architecture with three gates: input, forget, and output, allowing selective memory retention and control over long-term dependencies. Gated Recurrent Units (GRU) simplify this design by combining the input and forget gates into a single update gate and incorporating a reset gate, resulting in fewer parameters and often faster training without significant loss in performance. Both architectures address the vanishing gradient problem in Recurrent Neural Networks but differ in computational efficiency and memory management due to their distinct gating mechanisms.

Memory Mechanisms in LSTM and GRU

LSTM (Long Short-Term Memory) networks utilize a complex memory cell structure with separate input, output, and forget gates that regulate information flow, allowing effective long-term dependency capture. GRU (Gated Recurrent Unit) simplifies this mechanism by combining the forget and input gates into a single update gate, reducing computational complexity while maintaining the ability to preserve relevant memory. Both architectures address the vanishing gradient problem but differ in memory management, with LSTM offering more control and GRU providing efficiency for shorter sequences.

Performance Comparison: LSTM vs GRU

Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) are prominent recurrent neural network architectures designed for sequential data processing. GRU often outperforms LSTM in terms of training speed and computational efficiency due to its simpler gating mechanism, while LSTM provides more effective handling of long-range dependencies in complex datasets. Benchmark studies reveal GRU achieves comparable accuracy with fewer parameters, making it suitable for real-time applications, whereas LSTM excels in scenarios demanding greater temporal depth and intricate pattern recognition.

Computational Efficiency and Resource Requirements

LSTM networks typically demand higher computational resources and memory due to their complex gating mechanisms involving input, output, and forget gates, which increases training time and model size. In contrast, GRU models feature a streamlined architecture with fewer gates, resulting in faster training and reduced computational overhead while maintaining comparable performance. This efficiency makes GRUs preferable for resource-constrained environments such as mobile devices and real-time applications.

Applications: When to Use LSTM or GRU

LSTM networks excel in applications requiring long-term dependency learning, such as language modeling and speech recognition, due to their ability to retain information over extended sequences. GRU models, with fewer parameters and faster training times, are preferred in real-time applications like online prediction and smaller datasets where computational efficiency is critical. Choosing between LSTM and GRU depends on the specific task complexity and resource constraints, with LSTM favored for intricate sequence patterns and GRU for lightweight, time-sensitive implementations.

Advantages and Disadvantages of LSTM

LSTM networks excel in capturing long-term dependencies in sequential data due to their complex gating mechanisms, which effectively mitigate the vanishing gradient problem common in traditional RNNs. However, their intricate structure results in higher computational cost and longer training times compared to simpler models like GRU. Despite this, LSTMs remain preferred in tasks requiring modeling of extensive context, such as speech recognition and language modeling.

Advantages and Disadvantages of GRU

Gated Recurrent Units (GRUs) offer a streamlined architecture compared to Long Short-Term Memory (LSTM) networks, featuring fewer parameters that lead to faster training and reduced computational complexity. GRUs effectively capture dependencies in sequential data while mitigating the vanishing gradient problem, making them advantageous for smaller datasets and real-time applications. However, GRUs may lack the fine-grained control over memory retention and forgetting present in LSTMs, which can limit performance in tasks requiring intricate temporal dynamics.

Future Trends in Recurrent Neural Networks

Future trends in recurrent neural networks emphasize the increasing adoption of Gated Recurrent Units (GRU) due to their computational efficiency and comparable performance to Long Short-Term Memory (LSTM) models. Advances in hybrid architectures that integrate attention mechanisms with GRUs and LSTMs are driving improvements in sequence modeling tasks across natural language processing and time-series analysis. Research is also exploring lightweight recurrent models optimized for edge computing, enhancing real-time inference capabilities in AI applications.

LSTM vs GRU Infographic

techiny.com

techiny.com