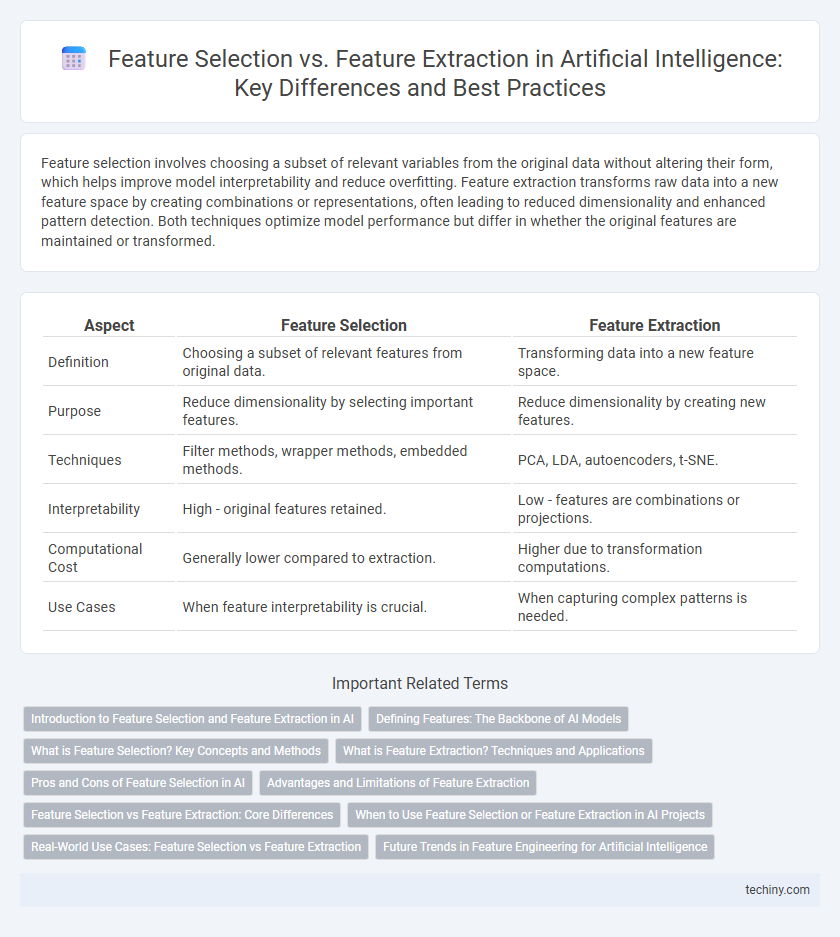

Feature selection involves choosing a subset of relevant variables from the original data without altering their form, which helps improve model interpretability and reduce overfitting. Feature extraction transforms raw data into a new feature space by creating combinations or representations of the original variables, often reducing dimensionality and making patterns easier to detect. Both techniques aim to improve model performance; they differ in whether the original features are kept as-is or transformed.

Table of Comparison

| Aspect | Feature Selection | Feature Extraction |
|---|---|---|
| Definition | Choosing a subset of relevant features from the original data. | Transforming the data into a new feature space. |
| Purpose | Reduce dimensionality by keeping the most important original features. | Reduce dimensionality by constructing new features. |
| Techniques | Filter methods, wrapper methods, embedded methods. | PCA, LDA, autoencoders; t-SNE is used mainly for visualization. |
| Interpretability | High: original features are retained. | Lower: new features are combinations or projections of the originals. |
| Computational Cost | Usually low for filter methods; wrapper methods can be expensive because they retrain the model on many feature subsets. | Depends on the method: linear projections such as PCA are relatively cheap, while autoencoders require training a neural network. |
| Use Cases | When feature interpretability is crucial. | When capturing complex patterns is needed. |

Introduction to Feature Selection and Feature Extraction in AI

Feature selection identifies the most relevant variables from the original dataset to improve model performance and reduce overfitting by eliminating redundant or irrelevant data. Feature extraction transforms raw data into new features through techniques like Principal Component Analysis (PCA) or autoencoders, capturing essential information in a lower-dimensional space. Both methods enhance machine learning efficiency by optimizing input data representation in AI models.

Defining Features: The Backbone of AI Models

Feature selection identifies the most relevant variables in the original data, improving model interpretability and reducing dimensionality while leaving the retained features unchanged. Feature extraction transforms raw data into new feature sets through techniques like PCA, enabling models to capture essential patterns in a compressed representation. Both processes shape the input features a model learns from, which can improve both accuracy and computational efficiency.

What is Feature Selection? Key Concepts and Methods

Feature selection in artificial intelligence involves identifying and choosing the most relevant features from a dataset to improve model performance and reduce complexity. Key methods include filter techniques such as the chi-square test and mutual information, which score each feature with a statistical measure independently of any model; wrapper methods such as recursive feature elimination, which evaluate feature subsets by retraining a model; and embedded approaches such as LASSO regularization, where the L1 penalty drives the coefficients of unhelpful features to zero during training. Eliminating redundant or irrelevant features in this way can improve accuracy, speed up training, and mitigate overfitting.
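To make the filter idea concrete, here is a minimal sketch of a mutual-information filter in plain Python. It assumes discrete feature values and class labels; the function names `mutual_information` and `select_top_k` and the toy columns are hypothetical. Libraries such as scikit-learn provide `SelectKBest` together with `mutual_info_classif` for the same purpose on real data.

```python
from collections import Counter
from math import log2


def mutual_information(feature, labels):
    """Mutual information I(X; Y) in bits between two discrete sequences."""
    n = len(labels)
    joint = Counter(zip(feature, labels))
    px = Counter(feature)
    py = Counter(labels)
    mi = 0.0
    for (x, y), count in joint.items():
        pxy = count / n
        mi += pxy * log2(pxy / ((px[x] / n) * (py[y] / n)))
    return mi


def select_top_k(columns, labels, k):
    """Filter method: rank features by MI with the labels and keep the k best names."""
    scores = {name: mutual_information(values, labels) for name, values in columns.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores


# Hypothetical toy data: one column copies the label, one partly tracks it,
# and one is unrelated alternating noise.
labels = [0, 0, 0, 0, 1, 1, 1, 1]
columns = {
    "copies_label": [0, 0, 0, 0, 1, 1, 1, 1],
    "noise": [0, 1, 0, 1, 0, 1, 0, 1],
    "partial": [0, 0, 0, 1, 1, 1, 1, 1],
}
selected, scores = select_top_k(columns, labels, k=2)
print(selected)  # ['copies_label', 'partial']
```

Because each feature is scored on its own, a filter like this is cheap, but it cannot detect features that are only useful in combination.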

What is Feature Extraction? Techniques and Applications

Feature extraction in artificial intelligence involves transforming raw data into a reduced set of representative features that retain essential information for model training. Common techniques include Principal Component Analysis (PCA), Independent Component Analysis (ICA), and autoencoders, which help improve computational efficiency and model performance. Applications span image recognition, natural language processing, and bioinformatics, where extracting meaningful patterns from complex datasets is critical.
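As an illustration of the first technique listed, the sketch below computes PCA with NumPy's singular value decomposition: it centers the data and projects it onto the leading right-singular vectors. The function name `pca_fit_transform` and the toy matrix are hypothetical; `sklearn.decomposition.PCA` is the usual production choice.

```python
import numpy as np


def pca_fit_transform(X, n_components):
    """Project rows of X onto the top principal components.

    Returns the projected data (n_samples x n_components) and the
    component directions (n_components x n_features).
    """
    X = np.asarray(X, dtype=float)
    X_centered = X - X.mean(axis=0)  # PCA assumes zero-mean columns
    # Rows of Vt are orthonormal directions ordered by decreasing singular value
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    return X_centered @ components.T, components


# Toy data: the second column is roughly twice the first, so a single
# direction carries nearly all of the variance.
X = np.array([[1.0, 2.1, 0.5],
              [2.0, 3.9, 0.4],
              [3.0, 6.2, 0.6],
              [4.0, 7.8, 0.5],
              [5.0, 10.1, 0.4]])
Z, components = pca_fit_transform(X, n_components=1)
print(Z.shape)  # (5, 1)
```

Note that the sign of each SVD direction is arbitrary, so `Z` may come out negated on a different platform; the retained variance is the same either way.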

Pros and Cons of Feature Selection in AI

Feature selection enhances AI model interpretability by retaining the original data features, leading to simpler and more transparent models. It reduces overfitting risk and computational cost by eliminating irrelevant or redundant data, improving model generalization and efficiency. However, improper feature selection can discard important information and lower model accuracy, and finding a good feature subset may require domain expertise or iterative testing.
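The iterative testing mentioned above is what wrapper methods automate. The sketch below shows greedy forward selection, a wrapper strategy different from the recursive feature elimination named earlier: it repeatedly adds the feature that most lowers validation error and stops when the gain falls below a threshold. The wrapped model (ordinary least squares via NumPy), the single train/validation split, the `min_improvement` value, and the function names are all assumptions for illustration; scikit-learn offers `RFE` and `SequentialFeatureSelector` for real use.

```python
import numpy as np


def validation_mse(X_train, y_train, X_val, y_val, features):
    """Fit ordinary least squares on the chosen columns and score on held-out data."""
    def design(X):
        # Prepend an intercept column to the selected feature columns
        return np.column_stack([np.ones(len(X))] + [X[:, j] for j in features])
    coef, *_ = np.linalg.lstsq(design(X_train), y_train, rcond=None)
    residuals = y_val - design(X_val) @ coef
    return float(np.mean(residuals ** 2))


def forward_selection(X_train, y_train, X_val, y_val, min_improvement=0.05):
    """Wrapper method: greedily add the feature that most lowers validation error."""
    selected = []
    remaining = list(range(X_train.shape[1]))
    # Baseline: intercept-only model
    best_error = validation_mse(X_train, y_train, X_val, y_val, selected)
    while remaining:
        errors = {j: validation_mse(X_train, y_train, X_val, y_val, selected + [j])
                  for j in remaining}
        best_j = min(errors, key=errors.get)
        if best_error - errors[best_j] < min_improvement:
            break  # no remaining feature helps enough; stop searching
        selected.append(best_j)
        remaining.remove(best_j)
        best_error = errors[best_j]
    return selected


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
# Only columns 0 and 2 influence the target; columns 1 and 3 are pure noise.
y = 3 * X[:, 0] - 2 * X[:, 2] + rng.normal(scale=0.1, size=200)
selected = forward_selection(X[:150], y[:150], X[150:], y[150:])
print(selected)
```

Each step refits the model once per remaining feature, which is why wrapper methods cost far more than filters on wide datasets.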

Advantages and Limitations of Feature Extraction

Feature extraction reduces dimensionality by transforming input data into a smaller set of informative features, which can improve model performance and computational efficiency in AI applications. It captures essential patterns and relationships, improving data representation and reducing noise, but the new features no longer correspond directly to the original variables, which reduces interpretability. Some extraction methods are computationally intensive, and choosing an appropriate technique may require domain expertise, limiting applicability in some scenarios.
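One common way to balance noise reduction against information loss is to keep just enough principal components to reach a cumulative explained-variance threshold. The sketch below assumes PCA as the extraction method and a 95% threshold chosen for illustration; the helper names are hypothetical, though scikit-learn's `PCA` exposes a comparable `explained_variance_ratio_` attribute.

```python
import numpy as np


def explained_variance_ratio(X):
    """Fraction of total variance captured by each principal component."""
    X_centered = np.asarray(X, dtype=float) - np.mean(X, axis=0)
    singular_values = np.linalg.svd(X_centered, compute_uv=False)
    variances = singular_values ** 2
    return variances / variances.sum()


def components_for_threshold(X, threshold=0.95):
    """Smallest number of components whose cumulative explained variance reaches threshold."""
    cumulative = np.cumsum(explained_variance_ratio(X))
    # searchsorted finds the first index where cumulative >= threshold
    return int(min(np.searchsorted(cumulative, threshold) + 1, len(cumulative)))


rng = np.random.default_rng(1)
x = rng.normal(size=100)
# Two strongly correlated columns plus one small-noise column
X = np.column_stack([x,
                     2 * x + rng.normal(scale=0.05, size=100),
                     rng.normal(scale=0.05, size=100)])
k = components_for_threshold(X, threshold=0.95)
print(k)  # 1
```

The single retained component is a weighted mix of all three original columns, which illustrates the interpretability cost described above.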

Feature Selection vs Feature Extraction: Core Differences

Feature selection involves choosing a subset of original variables based on importance criteria, preserving interpretability and original data meaning. Feature extraction transforms original features into new combinations or representations, often reducing dimensionality but losing direct interpretability. Core differences lie in preservation of original features in selection versus creation of new features in extraction, impacting model transparency and data representation.
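The core difference can be seen directly on a small matrix: selection indexes existing columns, while extraction multiplies by weights to produce new values. The column indices and projection weights below are hypothetical, picked by hand only to show the contrast.

```python
import numpy as np

X = np.array([[1.0, 10.0, 0.2],
              [2.0, 20.0, 0.1],
              [3.0, 30.0, 0.4]])

# Feature selection: keep original columns 0 and 2 with their values unchanged.
selected_columns = [0, 2]  # hypothetical choice
X_selected = X[:, selected_columns]

# Feature extraction: build one new feature as a weighted combination of all columns.
weights = np.array([0.5, 0.05, 1.0])  # hypothetical projection weights
X_extracted = X @ weights.reshape(-1, 1)

print(X_selected)   # still readable as "column 0" and "column 2"
print(X_extracted)  # a new quantity with no single original meaning
```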

When to Use Feature Selection or Feature Extraction in AI Projects

Feature selection is ideal when interpretability and retaining original feature meanings are crucial, especially in models sensitive to noise or when working with smaller datasets. Feature extraction suits complex datasets requiring dimensionality reduction while preserving essential information, often enhancing performance in deep learning and image recognition tasks. Choosing between them depends on the project's goal, dataset size, and need for computational efficiency or feature transparency.

Real-World Use Cases: Feature Selection vs Feature Extraction

Feature selection is commonly applied in medical diagnostics to identify the most relevant patient attributes, improving model interpretability and reducing dimensionality without altering the original data. Feature extraction is widely used in image recognition tasks, where techniques like Principal Component Analysis and autoencoders transform raw data into compact, informative representations to enhance classification accuracy. In natural language processing, feature selection helps pinpoint critical keywords, while extraction methods convert text into vectors, demonstrating each method's distinct advantage in practical AI deployments.
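For the text case, the sketch below first extracts bag-of-words count vectors and then selects keywords with a simple document-frequency filter. The toy documents, the `min_df` threshold of 2, and the `bag_of_words` helper are assumptions for illustration; document frequency is only one of many possible selection criteria, and scikit-learn's `CountVectorizer` offers a `min_df` parameter with a similar effect.

```python
from collections import Counter

docs = [
    "free prize claim now",
    "meeting agenda for monday",
    "claim your free prize",
    "monday project meeting notes",
]


def bag_of_words(doc, vocabulary):
    """Feature extraction: turn raw text into a vector of word counts."""
    counts = Counter(doc.split())
    return [counts[word] for word in vocabulary]


vocabulary = sorted({word for doc in docs for word in doc.split()})
vectors = [bag_of_words(doc, vocabulary) for doc in docs]

# Feature selection: keep only words that appear in at least min_df documents.
min_df = 2
doc_freq = Counter(word for doc in docs for word in set(doc.split()))
keywords = [word for word in vocabulary if doc_freq[word] >= min_df]
reduced = [bag_of_words(doc, keywords) for doc in docs]

print(len(vocabulary), keywords)
# 11 ['claim', 'free', 'meeting', 'monday', 'prize']
```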

Future Trends in Feature Engineering for Artificial Intelligence

Future trends in feature engineering for artificial intelligence emphasize automated feature selection techniques leveraging reinforcement learning to efficiently identify high-impact variables. Advances in unsupervised and self-supervised learning enable more robust feature extraction methods, capturing complex data representations without extensive labeled datasets. Integration of explainable AI frameworks promotes transparency in feature importance, aligning model interpretability with performance in evolving AI applications.

Feature Selection vs Feature Extraction Infographic

techiny.com