Hierarchical clustering creates a multi-level tree structure that reveals data relationships at various granularity levels, enabling better insight into nested groupings. Flat clustering partitions data into a single set of clusters without any inherent hierarchy, offering simplicity and faster computation. Choosing between hierarchical and flat clustering depends on the complexity of the data and the need for interpretability in AI applications.

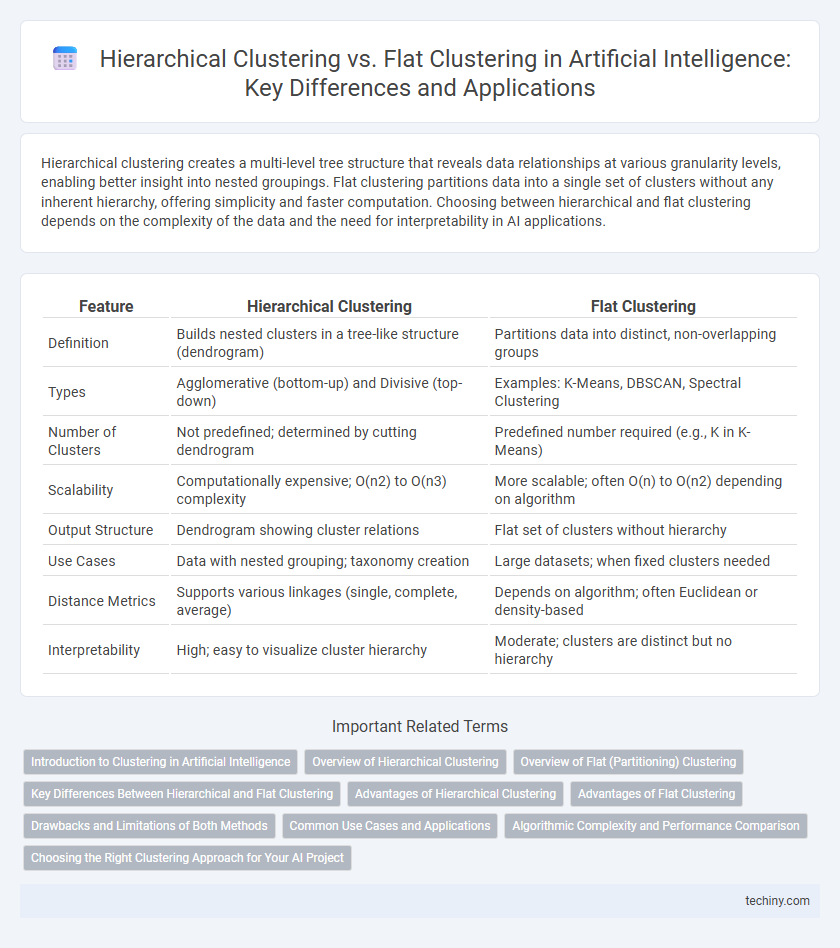

Table of Comparison

| Feature | Hierarchical Clustering | Flat Clustering |
|---|---|---|
| Definition | Builds nested clusters in a tree-like structure (dendrogram) | Partitions data into distinct, non-overlapping groups |
| Types | Agglomerative (bottom-up) and divisive (top-down) | Examples: K-Means, DBSCAN, Spectral Clustering |
| Number of Clusters | Not predefined; determined by cutting the dendrogram | Usually predefined (e.g., K in K-Means); density-based methods such as DBSCAN infer it from their parameters |
| Scalability | Computationally expensive; O(n²) to O(n³) complexity | More scalable; often O(n) to O(n²) depending on algorithm |
| Output Structure | Dendrogram showing cluster relations | Flat set of clusters without hierarchy |
| Use Cases | Data with nested grouping; taxonomy creation | Large datasets; when a fixed number of clusters is needed |
| Distance Metrics | Any pairwise distance, combined with a linkage criterion (single, complete, average) | Depends on algorithm; often Euclidean or density-based |
| Interpretability | High; easy to visualize cluster hierarchy | Moderate; clusters are distinct but show no hierarchy |

Introduction to Clustering in Artificial Intelligence

Hierarchical clustering and flat clustering are fundamental techniques in artificial intelligence for grouping data based on similarity measures. Hierarchical clustering builds nested clusters by either agglomerative merging or divisive splitting, producing a dendrogram that reveals multi-level relationships. In contrast, flat clustering partitions data into a predefined number of disjoint clusters, optimizing cluster centroids through algorithms like k-means for efficient pattern recognition.

Overview of Hierarchical Clustering

Hierarchical clustering organizes data into a tree-like structure called a dendrogram, enabling multi-level data analysis by grouping similar objects into nested clusters. This method provides flexibility in choosing the granularity of clusters without requiring a predefined number of clusters, making it suitable for exploratory data analysis in AI applications. It supports both agglomerative and divisive approaches, enhancing interpretability and insights into data relationships compared to flat clustering methods.
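The agglomerative (bottom-up) approach can be sketched in a few lines of plain Python. This is a naive illustration, not a production implementation: the function names `agglomerative` and `cut`, the sample points, and the choice of single linkage as the default are all assumptions made for the example. It records every merge, so the same run can be cut into any number of clusters afterwards.

```python
from itertools import combinations
from math import dist

def agglomerative(points, linkage=min):
    """Naive bottom-up clustering; returns the merge history.

    linkage=min gives single linkage, linkage=max gives complete linkage.
    """
    clusters = {i: [i] for i in range(len(points))}  # cluster id -> member indices
    merges = []                                       # (id_a, id_b, distance)
    next_id = len(points)
    while len(clusters) > 1:
        # Find the closest pair of clusters under the chosen linkage.
        a, b, d = min(
            ((a, b, linkage(dist(points[i], points[j])
                            for i in clusters[a] for j in clusters[b]))
             for a, b in combinations(clusters, 2)),
            key=lambda t: t[2],
        )
        clusters[next_id] = clusters.pop(a) + clusters.pop(b)
        merges.append((a, b, d))
        next_id += 1
    return merges

def cut(points, merges, k):
    """Replay the first n - k merges to obtain k flat clusters."""
    clusters = {i: [i] for i in range(len(points))}
    next_id = len(points)
    for a, b, _ in merges[:len(points) - k]:
        clusters[next_id] = clusters.pop(a) + clusters.pop(b)
        next_id += 1
    return sorted(sorted(c) for c in clusters.values())

# Hypothetical sample data: two tight pairs and one distant point.
points = [(0, 0), (0, 1), (5, 5), (5, 6), (20, 20)]
merges = agglomerative(points)      # single linkage by default
print(cut(points, merges, 3))       # [[0, 1], [2, 3], [4]]
print(cut(points, merges, 2))       # [[0, 1, 2, 3], [4]]
```

The merge history plays the role of the dendrogram here: choosing `k` after the fact corresponds to cutting the tree at a different height, without rerunning the clustering.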

Overview of Flat (Partitioning) Clustering

Flat clustering, also known as partitioning clustering, divides data into a predetermined number of distinct, non-overlapping clusters by optimizing a specific objective function such as minimizing within-cluster variance. Common flat clustering algorithms include K-means, K-medoids, and CLARANS, which assign each data point to exactly one cluster, facilitating straightforward interpretation and efficient computation. This approach contrasts with hierarchical clustering by producing a single-level partitioning, making it well-suited for large datasets requiring scalable and clear cluster assignments.
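As a rough illustration of how a partitioning method minimizes within-cluster variance, the sketch below implements the standard assignment and update loop of K-means (Lloyd's algorithm) in plain Python. The function name `k_means`, the sample points, and the hand-picked initial centroids are assumptions for the example; real implementations usually use a smarter initialization.

```python
from math import dist

def k_means(points, centroids, max_iter=100):
    """Lloyd's algorithm: alternate assignment and centroid update until stable."""
    centroids = list(centroids)
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid.
        labels = [min(range(len(centroids)), key=lambda c: dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(tuple(sum(x) / len(members) for x in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # assignments can no longer change
        centroids = new_centroids
    return labels, centroids

# Hypothetical sample data with two well-separated groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
labels, centroids = k_means(points, centroids=[points[0], points[3]])
print(labels)   # [0, 0, 0, 1, 1, 1]
```

Each point ends up with exactly one label, which is the single-level partition described above. The number of clusters is fixed by how many initial centroids are passed in.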

Key Differences Between Hierarchical and Flat Clustering

Hierarchical clustering builds a multi-level tree of clusters called a dendrogram, enabling analysis at various granularity levels, while flat clustering partitions data into a single set of distinct groups without nested structure. Hierarchical methods do not require specifying the number of clusters beforehand, unlike algorithms like K-means used in flat clustering that require a preset cluster count. Computational complexity is generally higher in hierarchical clustering due to iterative merging or splitting, whereas flat clustering offers faster performance but less flexibility in cluster shape and size.

Advantages of Hierarchical Clustering

Hierarchical clustering offers the advantage of creating a multi-level grouping structure that reveals data relationships at various granularity levels, which is essential for tasks requiring detailed pattern recognition. This method does not require specifying the number of clusters in advance, providing flexibility compared to flat clustering algorithms like K-means. Its dendrogram visualization aids in intuitive interpretation of cluster merges and splits, enhancing data analysis transparency and decision-making.

Advantages of Flat Clustering

Flat clustering offers straightforward implementation and scalability, making it suitable for large datasets where quick partitioning into distinct groups is required. Its simplicity allows for efficient memory usage and faster computation compared to hierarchical methods, which often involve complex tree structures. Flat clustering algorithms like k-means provide clear, non-overlapping cluster assignments that are easy to interpret in applications such as image segmentation and customer segmentation.

Drawbacks and Limitations of Both Methods

Hierarchical clustering suffers from high computational complexity, making it inefficient for large datasets, and it is sensitive to noise and outliers, which can distort the dendrogram structure; because merges and splits are greedy, an early poor decision cannot be undone later. Flat clustering methods like K-means require a predefined number of clusters, often resulting in suboptimal partitions if the chosen k does not match the data's intrinsic grouping. K-means also favors compact, roughly spherical clusters of similar size, and hierarchical results depend heavily on the chosen linkage, so both approaches can struggle with clusters of varying shape and density in complex real-world AI applications.
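To make the dependence on k concrete, the sketch below runs a one-dimensional K-means for several values of k and reports the within-cluster sum of squares (WCSS). The function name `kmeans_1d_wcss`, the sample values, and the evenly spaced initialization are assumptions chosen to keep the example deterministic. Looking for the k where the score stops improving sharply is a common heuristic, not a guarantee.

```python
def kmeans_1d_wcss(values, k, max_iter=100):
    """Run 1-D k-means and return the within-cluster sum of squares."""
    ordered = sorted(values)
    # Assumption: initialise centroids at evenly spaced positions in the sorted data.
    centroids = [ordered[(2 * i + 1) * len(ordered) // (2 * k)] for i in range(k)]
    for _ in range(max_iter):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centroids[c]))
            groups[nearest].append(v)
        updated = [sum(g) / len(g) if g else centroids[i]
                   for i, g in enumerate(groups)]
        if updated == centroids:
            break
        centroids = updated
    # Lower WCSS means tighter clusters, but it always shrinks as k grows.
    return sum((v - centroids[i]) ** 2 for i, g in enumerate(groups) for v in g)

# Hypothetical data with three obvious groups around 1, 5, and 9.
values = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8, 9.0, 9.2, 8.8]
wcss = {k: kmeans_1d_wcss(values, k) for k in range(1, 5)}
for k, score in wcss.items():
    print(k, round(score, 2))
```

On this data the score drops steeply up to k = 3 and barely changes afterwards, which matches the three groups built into the sample. With k = 2, two of the natural groups are forced into one cluster.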

Common Use Cases and Applications

Hierarchical clustering is widely used in bioinformatics, for example in gene expression analysis, and in customer segmentation when understanding data structure at multiple granularities is crucial. Flat clustering algorithms like K-means are common in image segmentation and large-scale customer segmentation, where a predefined number of clusters is acceptable and computational efficiency is the priority. Both approaches support anomaly detection and document clustering, with hierarchical methods offering dendrogram visualization and flat clustering offering fast convergence on large datasets.

Algorithmic Complexity and Performance Comparison

Hierarchical clustering generally has higher algorithmic complexity than flat clustering. A naive agglomerative implementation takes O(n^3) time and O(n^2) memory for the pairwise distance matrix, although optimized variants reach roughly O(n^2) to O(n^2 log n), and divisive methods that consider every possible split are costlier still. Flat clustering algorithms like K-Means, by comparison, run in O(nkt) time for n data points, k clusters, and t iterations. In terms of performance, hierarchical clustering provides more detailed insight into data structure through nested clusters but struggles to scale to large datasets, whereas flat clustering converges faster and suits big data applications but cannot reveal multi-level relationships. Choosing between these methods depends on dataset size, required granularity, and available computational resources.
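A quick back-of-the-envelope calculation shows what these growth rates mean in practice. The workload below (10,000 points, 10 clusters, 50 iterations) is hypothetical, and the helper names are made up for the example; the counts are order-of-magnitude estimates that ignore constant factors.

```python
def naive_agglomerative_ops(n):
    """Rough bound: about n merges, each scanning up to n^2 cluster pairs."""
    return n ** 3

def k_means_ops(n, k, t):
    """Point-to-centroid distance evaluations over t iterations."""
    return n * k * t

n, k, t = 10_000, 10, 50              # hypothetical workload
hier = naive_agglomerative_ops(n)     # 1_000_000_000_000
flat = k_means_ops(n, k, t)           # 5_000_000
print(f"hierarchical ~{hier:.1e}, k-means ~{flat:.1e}, ratio ~{hier // flat:,}x")
```

Under these assumptions the naive hierarchical run does about 200,000 times more work, and the gap widens as n grows because the hierarchical cost is cubic in n while the K-means cost is linear in n.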

Choosing the Right Clustering Approach for Your AI Project

Hierarchical clustering builds nested clusters by either merging or splitting them, making it suitable for datasets with inherent multi-level structures, while flat clustering partitions data into distinct groups without any hierarchy. Selecting the right clustering approach depends on the complexity of the data, interpretability requirements, and computational resources available for the AI project. Evaluating cluster granularity and scalability helps ensure the chosen method aligns with the AI model's objectives and data characteristics.

Hierarchical Clustering vs Flat Clustering Infographic

techiny.com
