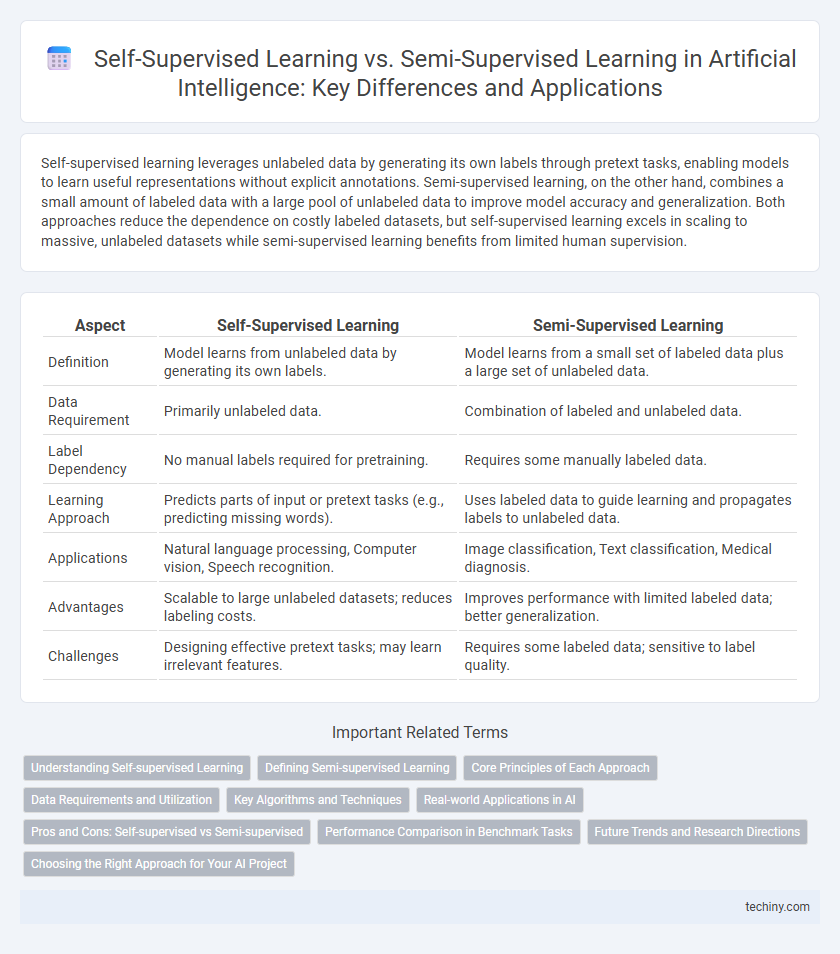

Self-supervised learning leverages unlabeled data by generating its own labels through pretext tasks, enabling models to learn useful representations without explicit annotations. Semi-supervised learning, on the other hand, combines a small amount of labeled data with a large pool of unlabeled data to improve model accuracy and generalization. Both approaches reduce the dependence on costly labeled datasets, but self-supervised learning excels in scaling to massive, unlabeled datasets while semi-supervised learning benefits from limited human supervision.

Table of Comparison

| Aspect | Self-Supervised Learning | Semi-Supervised Learning |

|---|---|---|

| Definition | Model learns from unlabeled data by generating its own labels. | Model learns from a small set of labeled data plus a large set of unlabeled data. |

| Data Requirement | Primarily unlabeled data. | Combination of labeled and unlabeled data. |

| Label Dependency | No manual labels required for pretraining. | Requires some manually labeled data. |

| Learning Approach | Predicts parts of input or pretext tasks (e.g., predicting missing words). | Uses labeled data to guide learning and propagates labels to unlabeled data. |

| Applications | Natural language processing, Computer vision, Speech recognition. | Image classification, Text classification, Medical diagnosis. |

| Advantages | Scalable to large unlabeled datasets; reduces labeling costs. | Improves performance with limited labeled data; better generalization. |

| Challenges | Designing effective pretext tasks; may learn irrelevant features. | Requires some labeled data; sensitive to label quality. |

Understanding Self-supervised Learning

Self-supervised learning leverages unlabeled data by generating supervisory signals from the data itself, enabling models to learn representations without explicit annotations. This contrasts with semi-supervised learning, which requires a small amount of labeled data combined with a large portion of unlabeled data to improve model performance. Self-supervised techniques, such as contrastive learning and masked prediction, have advanced fields like natural language processing and computer vision by reducing dependency on costly labeled datasets.

Defining Semi-supervised Learning

Semi-supervised learning combines a small amount of labeled data with a large volume of unlabeled data to improve model accuracy without the extensive cost of annotation. This approach leverages both supervised learning techniques on labeled samples and unsupervised methods on unlabeled data, optimizing feature representations for enhanced generalization. It is particularly effective in domains where acquiring complete labeled datasets is impractical, enabling more efficient and scalable AI model training.

Core Principles of Each Approach

Self-supervised learning leverages unlabeled data by generating surrogate labels through pretext tasks, enabling models to learn representations without explicit human annotations. Semi-supervised learning combines a small amount of labeled data with a large pool of unlabeled data to improve model performance by propagating label information through graph-based methods or consistency regularization. Core principles of self-supervised learning emphasize automatic label generation and representation learning, while semi-supervised learning centers on leveraging partial supervision and label propagation strategies.

Data Requirements and Utilization

Self-supervised learning leverages large volumes of unlabeled data by generating supervisory signals from the data itself, reducing the need for manual annotation and enabling models to learn rich representations autonomously. Semi-supervised learning requires a smaller labeled dataset complemented by a larger set of unlabeled data, enhancing learning accuracy by combining explicit label information with unsupervised data patterns. The efficiency of self-supervised methods in utilizing entirely unlabeled datasets contrasts with semi-supervised approaches that depend on partial labeling for effective model training.

Key Algorithms and Techniques

Self-supervised learning leverages pretext tasks such as contrastive learning, masked language modeling (e.g., BERT), and autoencoders to create meaningful data representations without explicit labels. Semi-supervised learning combines a small amount of labeled data with large unlabeled datasets using techniques like consistency regularization, pseudo-labeling, and graph-based methods to improve model generalization. Both approaches utilize neural networks, but self-supervised methods emphasize predictive tasks within data, whereas semi-supervised learning focuses on augmenting labeled data through unlabeled examples.

Real-world Applications in AI

Self-supervised learning enables AI models to leverage vast amounts of unlabeled data by creating pretext tasks, improving performance in natural language processing and computer vision without extensive labeled datasets. Semi-supervised learning excels in scenarios where small labeled datasets are augmented by larger unlabeled ones, enhancing medical imaging analysis and speech recognition accuracy. Both approaches address data scarcity in real-world AI applications, driving advancements in autonomous driving, healthcare diagnostics, and recommendation systems.

Pros and Cons: Self-supervised vs Semi-supervised

Self-supervised learning leverages large amounts of unlabeled data by automatically generating labels, reducing the need for costly manual annotation and enhancing scalability in AI model training. Semi-supervised learning combines a small set of labeled data with a larger pool of unlabeled data, improving model accuracy when labeled data is limited but still requiring some manual labeling efforts. While self-supervised learning excels in representation learning and robustness to domain shifts, semi-supervised approaches often achieve higher performance on specific tasks due to the use of explicit labeled guidance.

Performance Comparison in Benchmark Tasks

Self-supervised learning often outperforms semi-supervised learning on benchmark tasks by leveraging large amounts of unlabeled data to create robust feature representations without explicit labels. Semi-supervised learning depends on limited labeled data combined with unlabeled examples, which can lead to suboptimal generalization when labeled data is scarce. Recent studies demonstrate self-supervised models achieving higher accuracy and better generalization across datasets such as ImageNet and CIFAR-10 compared to traditional semi-supervised approaches.

Future Trends and Research Directions

Self-supervised learning is poised to dominate future AI research due to its ability to leverage vast amounts of unlabeled data, reducing reliance on labeled datasets typical in semi-supervised learning. Emerging trends highlight the integration of self-supervised techniques with transformer architectures to enhance natural language understanding and computer vision tasks. Research directions focus on developing scalable models that improve feature representation learning and generalization, enabling more efficient and robust AI systems.

Choosing the Right Approach for Your AI Project

Self-supervised learning leverages large volumes of unlabeled data by generating labels from the data itself, making it ideal for projects with scarce labeled datasets but abundant raw data. Semi-supervised learning combines a small set of labeled data with a larger pool of unlabeled data to improve model accuracy, offering a balance when some labeled examples are available. Selecting the right approach depends on your data availability, labeling resources, and project goals, as self-supervised methods excel in representation learning while semi-supervised techniques enhance performance with limited annotations.

Self-supervised learning vs Semi-supervised learning Infographic

techiny.com

techiny.com