Generative Adversarial Networks (GANs) excel at producing highly realistic images by pitting two neural networks against each other in a competitive learning process. This makes them well suited to applications that need sharp, detailed outputs. Variational Autoencoders (VAEs) take a more structured approach: they encode data into a probabilistic latent space, which enables smooth interpolation between samples and controlled generation of new data. GANs often achieve higher image fidelity, while VAEs offer a better-structured latent space and more stable training, so the right choice depends on the requirements of the task.

Table of Comparison

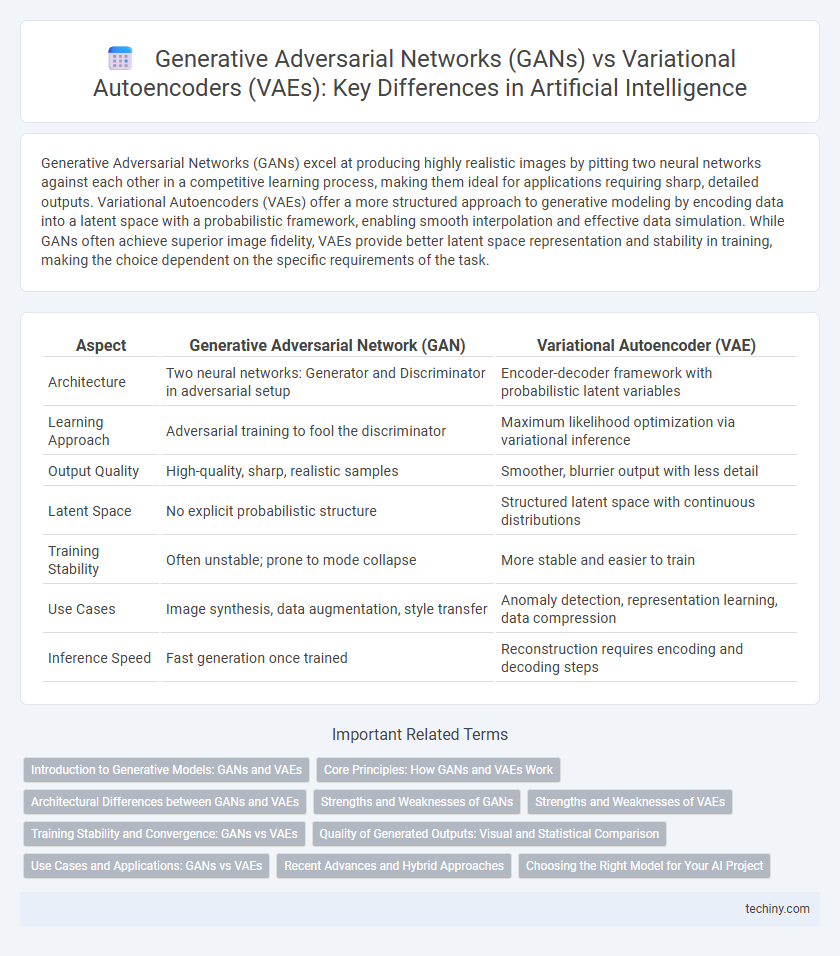

| Aspect | Generative Adversarial Network (GAN) | Variational Autoencoder (VAE) |
|---|---|---|
| Architecture | Two neural networks, a generator and a discriminator, in an adversarial setup | Encoder-decoder framework with probabilistic latent variables |
| Learning Approach | Adversarial training: the generator learns to fool the discriminator | Variational inference: maximizes a lower bound (ELBO) on the data log-likelihood |
| Output Quality | Sharp, realistic samples | Often smoother and blurrier, with less fine detail |
| Latent Space | Noise drawn from a fixed prior; the standard formulation has no encoder mapping data back into it | Structured, continuous latent space learned through an encoder |
| Training Stability | Often unstable; prone to mode collapse | More stable and easier to train |
| Use Cases | Image synthesis, data augmentation, style transfer | Anomaly detection, representation learning, data compression |
| Inference Speed | Fast: one generator pass per sample | Fast sampling with one decoder pass; reconstructing an input requires both encoding and decoding |

Introduction to Generative Models: GANs and VAEs

Generative Adversarial Networks (GANs) consist of a generator and a discriminator competing to produce realistic synthetic data, optimizing through adversarial training to capture complex data distributions. Variational Autoencoders (VAEs) leverage probabilistic encoding and decoding to learn latent representations, enabling efficient data generation with a focus on continuous latent variable modeling. Both models excel at unsupervised learning for image synthesis, anomaly detection, and data augmentation, yet differ fundamentally in training objectives and output diversity.

Core Principles: How GANs and VAEs Work

Generative Adversarial Networks (GANs) consist of two neural networks: a generator that synthesizes samples from random noise and a discriminator that tries to distinguish those samples from real data. Each network improves by competing against the other. Variational Autoencoders (VAEs) encode input data into a probabilistic latent space and decode it back to reconstruct the original data, training by maximizing the evidence lower bound (ELBO) on the data log-likelihood. GANs emphasize adversarial training for sample quality, while VAEs focus on latent variable modeling and reconstruction accuracy; this difference underlies their distinct generative behavior.
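
The two training objectives described above are usually written as follows, in the standard formulations from the original GAN and VAE work. The notation is introduced here for illustration: $G$ is the generator, $D$ the discriminator, $p_z$ the noise prior, $q_\phi(z \mid x)$ the VAE encoder, $p_\theta(x \mid z)$ the decoder, and $p(z)$ the latent prior.

```latex
% GAN: minimax game between generator G and discriminator D
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

% VAE: evidence lower bound (ELBO) on the log-likelihood
\log p_\theta(x) \ge
  \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right]
  - D_{\mathrm{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right)
```

The first ELBO term rewards accurate reconstruction; the second keeps the encoder's distribution close to the prior.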

Architectural Differences between GANs and VAEs

Generative Adversarial Networks (GANs) consist of two neural networks, a generator and a discriminator, that compete in a zero-sum game; adversarial training pushes the generator toward realistic synthetic data. Variational Autoencoders (VAEs) use an encoder-decoder architecture that learns a probabilistic latent representation. They optimize a reconstruction loss plus a regularization term: the Kullback-Leibler (KL) divergence between the encoder's approximate posterior and a prior over the latent variables, typically a standard normal. The fundamental architectural difference is that the GAN's adversarial framework drives data realism, whereas the VAE's probabilistic latent model supports latent space interpolation and smooth generation.
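
The VAE side of this description can be made concrete with a small NumPy sketch of the loss: a reconstruction term plus the closed-form KL divergence between a diagonal Gaussian posterior and a standard normal prior, with latent samples drawn via the reparameterization trick. The function names, the squared-error reconstruction term, and the optional `beta` weight are assumptions of this sketch rather than a reference implementation; a real model would produce `mu` and `log_var` with a trained encoder.

```python
import numpy as np


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I) (reparameterization trick)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dims."""
    return 0.5 * np.sum(mu**2 + np.exp(log_var) - 1.0 - log_var, axis=-1)


def vae_loss(x, x_recon, mu, log_var, beta=1.0):
    """Per-example negative ELBO: reconstruction error + beta * KL.

    Squared error stands in for -log p(x|z); this matches a Gaussian decoder
    with fixed variance up to a scale factor and additive constants (an
    assumption of this sketch). beta=1.0 gives the standard VAE objective.
    """
    recon = np.sum((x - x_recon) ** 2, axis=-1)
    return recon + beta * kl_to_standard_normal(mu, log_var)


# Usage with made-up encoder outputs: a batch of 4 examples, 2 latent dims.
rng = np.random.default_rng(0)
mu = np.zeros((4, 2))
log_var = np.zeros((4, 2))
z = reparameterize(mu, log_var, rng)  # a decoder would consume z
print(kl_to_standard_normal(mu, log_var))  # all zeros: posterior equals prior
```

Sampling through `mu + sigma * eps` keeps the randomness in `eps`, so gradients can flow back to the encoder outputs when the same computation is written in an autodiff framework.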

Strengths and Weaknesses of GANs

Generative Adversarial Networks (GANs) excel at producing highly realistic images because their adversarial training mechanism tends to yield sharper, more detailed outputs than Variational Autoencoders (VAEs). However, GANs face challenges such as training instability, mode collapse, and sensitivity to hyperparameters, which can hinder consistent convergence and reduce the diversity of generated samples. Despite these weaknesses, GANs remain powerful in applications requiring high-fidelity image synthesis, where they typically outperform VAEs in visual quality at the cost of more careful tuning.
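
Part of GANs' training sensitivity shows up directly in the loss functions. The NumPy sketch below writes the standard discriminator loss and two generator losses in terms of discriminator logits (function names are hypothetical). The "saturating" generator loss taken straight from the minimax objective gives almost no gradient when the discriminator confidently rejects fakes, which is why the non-saturating variant, maximizing $\log D(G(z))$ instead, is commonly used in practice.

```python
import numpy as np


def log_sigmoid(t):
    """Numerically stable log(sigmoid(t)) = -log(1 + exp(-t))."""
    return -np.logaddexp(0.0, -t)


def discriminator_loss(real_logits, fake_logits):
    """Binary cross-entropy: -[log D(x) + log(1 - D(G(z)))], averaged.

    Uses the identity log(1 - sigmoid(t)) == log_sigmoid(-t).
    """
    return -np.mean(log_sigmoid(real_logits)) - np.mean(log_sigmoid(-fake_logits))


def generator_loss_saturating(fake_logits):
    """Minimize log(1 - D(G(z))), taken directly from the minimax objective."""
    return np.mean(log_sigmoid(-fake_logits))


def generator_loss_nonsaturating(fake_logits):
    """Minimize -log D(G(z)) instead: same fixed point, stronger early gradients."""
    return -np.mean(log_sigmoid(fake_logits))


def grad_saturating(t):
    """d/dt log(1 - sigmoid(t)) = -sigmoid(t)."""
    return -np.exp(log_sigmoid(t))


def grad_nonsaturating(t):
    """d/dt -log(sigmoid(t)) = -sigmoid(-t)."""
    return -np.exp(log_sigmoid(-t))


# Early in training the discriminator confidently rejects fakes (very negative logit).
t = -10.0
print(grad_saturating(t), grad_nonsaturating(t))  # roughly -4.5e-05 vs -1.0
```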

Strengths and Weaknesses of VAEs

Variational Autoencoders (VAEs) learn continuous latent representations that support diverse sampling and smooth interpolation, and their probabilistic framework acts as a built-in regularizer. They often produce blurrier images than Generative Adversarial Networks (GANs); this is commonly attributed to their pixel-wise reconstruction loss, which rewards averaging over plausible outputs and so limits fine detail. VAEs are more stable during training and better suited to representation learning, despite generating lower-quality visual outputs than GANs.
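
The averaging effect can be shown with a toy calculation. When several sharp outputs are plausible for the same input, the single prediction that minimizes squared error is their per-pixel mean, which has intermediate gray values wherever the candidates disagree. This sketch assumes a squared-error reconstruction loss and uses made-up 1-D "images"; blur in real VAEs has more contributing factors than this simplification captures.

```python
import numpy as np

# Toy 1-D "images": the same input could plausibly map to an edge at either of
# two positions (an illustrative assumption, not real data).
target_a = np.array([0.0, 0.0, 1.0, 1.0, 1.0, 1.0])
target_b = np.array([0.0, 0.0, 0.0, 0.0, 1.0, 1.0])
targets = np.stack([target_a, target_b])


def expected_mse(prediction):
    """Mean squared error of one prediction against both equally likely targets."""
    return np.mean((targets - prediction) ** 2)


# The MSE-optimal single prediction is the per-pixel mean: 0, 0, 0.5, 0.5, 1, 1.
blurred = targets.mean(axis=0)
print(expected_mse(blurred), expected_mse(target_a))  # the gray average scores better
```

Committing to either sharp edge is penalized heavily whenever the other one was correct, so the loss prefers the hedged, blurred answer.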

Training Stability and Convergence: GANs vs VAEs

Generative Adversarial Networks (GANs) often face challenges with training stability due to the adversarial nature of the generator and discriminator, which can result in mode collapse or oscillatory behavior during convergence. Variational Autoencoders (VAEs) benefit from a more stable and theoretically grounded optimization process by maximizing a variational lower bound on data likelihood, leading to more consistent convergence. While GANs excel in generating sharp and realistic samples, VAEs provide more reliable training dynamics, making them preferable when stable convergence is critical.
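
A classic toy problem, not itself a GAN, shows how adversarial updates can oscillate instead of converging. In the bilinear game $\min_x \max_y xy$ the equilibrium is $(0, 0)$, yet simultaneous gradient steps rotate around it and drift outward, because each step scales the distance from the origin by $\sqrt{1 + \eta^2}$ for learning rate $\eta$. Plain gradient descent on a single objective, loosely analogous to a VAE minimizing one loss, has no such rotation. The learning rate and step count below are arbitrary choices for the sketch.

```python
import numpy as np


def simultaneous_gda(x, y, lr, steps):
    """Descent on x and ascent on y for the bilinear game min_x max_y x * y."""
    for _ in range(steps):
        grad_x, grad_y = y, x  # d(xy)/dx = y, d(xy)/dy = x
        x, y = x - lr * grad_x, y + lr * grad_y
    return x, y


def gradient_descent(x, y, lr, steps):
    """Plain gradient descent on the single objective (x**2 + y**2) / 2."""
    for _ in range(steps):
        x, y = x - lr * x, y - lr * y
    return x, y


start = (1.0, 1.0)
gda_end = simultaneous_gda(*start, lr=0.1, steps=100)
gd_end = gradient_descent(*start, lr=0.1, steps=100)

# Distance from the equilibrium / minimum at the origin.
print(np.hypot(*start), np.hypot(*gda_end), np.hypot(*gd_end))
# GDA ends about 2.33 from the origin (farther than it started); GD ends near 0.
```

Real GAN losses are non-convex and far richer than this game, so the example only illustrates the rotational dynamics, not a full account of GAN instability.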

Quality of Generated Outputs: Visual and Statistical Comparison

Generative Adversarial Networks (GANs) typically produce sharper and more realistic visual outputs because their adversarial objective rewards samples that the discriminator cannot distinguish from real data, which pushes the generator toward fine details. Variational Autoencoders (VAEs) often generate smoother but blurrier images: they maximize a likelihood lower bound, which favors covering the whole data distribution over reproducing fine-grained texture. Statistically, GANs may suffer from mode collapse that reduces sample diversity, while VAEs tend to cover the data distribution better but with less visual precision.
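
On toy data, mode collapse can be quantified by counting how many modes of a known mixture the samples actually reach. The sketch below uses a ring of eight Gaussians, a common 2-D toy setting for studying mode collapse. The `full` and `collapsed` sample sets are synthetic stand-ins for generator outputs, not results from trained models, and the radius is an arbitrary choice.

```python
import numpy as np


def mode_coverage(samples, centers, radius, min_count=1):
    """Count modes that have at least `min_count` samples within `radius`
    of their center (a simple mode-collapse diagnostic)."""
    # Pairwise distances, shape (n_samples, n_modes).
    d = np.linalg.norm(samples[:, None, :] - centers[None, :, :], axis=-1)
    nearest = d.argmin(axis=1)
    close = d.min(axis=1) <= radius
    counts = np.bincount(nearest[close], minlength=len(centers))
    return int(np.sum(counts >= min_count))


# Eight modes evenly spaced on a circle of radius 2.
angles = np.linspace(0, 2 * np.pi, 8, endpoint=False)
centers = np.stack([np.cos(angles), np.sin(angles)], axis=1) * 2.0

rng = np.random.default_rng(0)
# Synthetic samples: spread over all 8 modes vs. concentrated on only 2.
full = centers[rng.integers(0, 8, 1000)] + 0.05 * rng.standard_normal((1000, 2))
collapsed = centers[rng.integers(0, 2, 1000)] + 0.05 * rng.standard_normal((1000, 2))
print(mode_coverage(full, centers, 0.3), mode_coverage(collapsed, centers, 0.3))
```

Image models have no known list of modes, so this kind of count only applies to synthetic benchmarks; it does, however, separate diversity from per-sample sharpness.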

Use Cases and Applications: GANs vs VAEs

Generative Adversarial Networks (GANs) excel at generating highly realistic images and videos, making them well suited to entertainment, art creation, and data augmentation for training machine learning models. Variational Autoencoders (VAEs) are better suited to tasks that require a robust latent space representation, such as anomaly detection, medical imaging, and data compression. While GANs focus on producing sharp, high-fidelity outputs, VAEs provide a learned encoder and a structured latent space, enabling smooth interpolation and unsupervised representation learning.
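
Anomaly detection with a VAE is often done by scoring each input by how poorly the model reconstructs it and flagging scores above a threshold fitted on normal data. The sketch below assumes that scheme. `reconstruct` is a hypothetical linear projection standing in for a trained VAE's encode-decode pass, and the 99th-percentile threshold is an arbitrary choice.

```python
import numpy as np


def reconstruction_scores(x, x_recon):
    """Per-example squared reconstruction error, used as the anomaly score."""
    return np.sum((x - x_recon) ** 2, axis=1)


def fit_threshold(normal_scores, percentile=99.0):
    """Choose a cut-off from scores on held-out data assumed to be normal."""
    return np.percentile(normal_scores, percentile)


def flag_anomalies(scores, threshold):
    """True where the score exceeds the threshold."""
    return scores > threshold


# Hypothetical stand-in for a trained VAE: "normal" data lies near the line
# x1 == x0, and reconstruct() projects onto that line. A real VAE would
# encode and decode instead.
direction = np.array([1.0, 1.0]) / np.sqrt(2.0)


def reconstruct(x):
    return np.outer(x @ direction, direction)


rng = np.random.default_rng(0)
normal = np.outer(rng.standard_normal(500), direction) + 0.05 * rng.standard_normal((500, 2))
threshold = fit_threshold(reconstruction_scores(normal, reconstruct(normal)))

test_points = np.array([[1.0, 1.0], [1.0, -1.0]])  # on the line, far off it
flags = flag_anomalies(reconstruction_scores(test_points, reconstruct(test_points)), threshold)
print(flags)
```

Some setups score with the full negative ELBO rather than reconstruction error alone; the thresholding logic stays the same.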

Recent Advances and Hybrid Approaches

Recent advances in Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) have led to hybrid models that combine the strengths of both architectures. These hybrids pair the sharp image synthesis of GANs with the structured latent space of VAEs, aiming to improve both sample quality and diversity. Techniques such as VAE-GAN and adversarially trained VAEs have been reported to improve training stability and to produce richer, more controllable outputs in domains like image generation and data augmentation.
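
One such hybrid, the VAE-GAN, is commonly described as training on a sum of three terms. The sketch below follows that description with notation introduced here for illustration: $\mathcal{L}_{\text{prior}}$ is the VAE's KL term, $\mathcal{L}_{\text{llike}}^{\text{Dis}_l}$ is a reconstruction loss measured on the features of an intermediate discriminator layer $l$ rather than on raw pixels, and $\mathcal{L}_{\text{GAN}}$ is the usual adversarial loss.

```latex
\mathcal{L} = \mathcal{L}_{\text{prior}} + \mathcal{L}_{\text{llike}}^{\text{Dis}_l} + \mathcal{L}_{\text{GAN}},
\qquad
\mathcal{L}_{\text{prior}} = D_{\mathrm{KL}}\left(q(z \mid x) \,\|\, p(z)\right)
```

Measuring reconstruction in discriminator feature space is the step intended to reduce the blur that pixel-wise losses tend to produce.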

Choosing the Right Model for Your AI Project

Generative Adversarial Networks (GANs) excel in generating high-quality, realistic images and complex data distributions, making them ideal for projects requiring sharp, detailed outputs like image synthesis or style transfer. Variational Autoencoders (VAEs) offer robust performance in learning latent representations with better interpretability and stability, suitable for tasks involving data compression, anomaly detection, or generating diverse samples with smooth interpolations. Selecting between GANs and VAEs depends on the specific project goals: prioritize GANs for realism and detail, while VAEs are preferred for stable training and meaningful latent space exploration.

Generative Adversarial Network (GAN) vs Variational Autoencoder (VAE) Infographic

techiny.com
