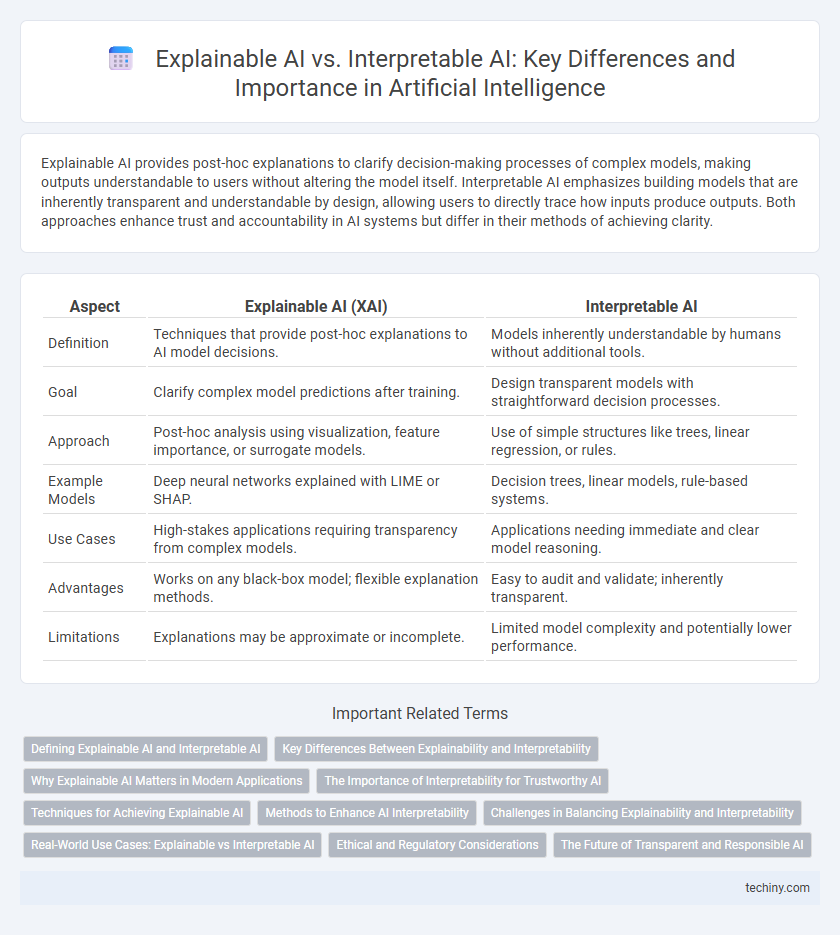

Explainable AI provides post-hoc explanations that clarify the decision-making processes of complex models, making their outputs understandable to users without altering the models themselves. Interpretable AI emphasizes models that are transparent by design, so users can directly trace how inputs produce outputs. Both approaches enhance trust and accountability in AI systems, but they achieve clarity in different ways.

Table of Comparison

| Aspect | Explainable AI (XAI) | Interpretable AI |
|---|---|---|
| Definition | Techniques that provide post-hoc explanations for AI model decisions. | Models that humans can understand directly, without additional tools. |
| Goal | Clarify complex model predictions after training. | Design transparent models with straightforward decision processes. |
| Approach | Post-hoc analysis using visualization, feature importance, or surrogate models. | Simple structures such as decision trees, linear regression, or rules. |
| Example Models | Deep neural networks explained with LIME or SHAP. | Decision trees, linear models, rule-based systems. |
| Use Cases | High-stakes applications that need transparency from complex models. | Applications that need immediate, clear model reasoning. |
| Advantages | Model-agnostic methods work on any black-box model; flexible explanation methods. | Easy to audit and validate; inherently transparent. |
| Limitations | Explanations may be approximate or incomplete. | Limited model complexity and potentially lower predictive performance. |

Defining Explainable AI and Interpretable AI

Explainable AI (XAI) refers to methods and techniques that make the decision-making of complex AI models understandable to humans by providing human-readable justifications. Interpretable AI focuses on models that are transparent by construction, such as linear regression or shallow decision trees, so users can directly see how inputs relate to outputs. Both aim to enhance trust and accountability in AI systems, but they differ in approach: explainability is usually applied post hoc to complex models, while interpretability is built into the model design.
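
As a minimal sketch of what "directly comprehend" means for an interpretable model, the Python below scores an input with a linear model whose weights double as its explanation: each feature's contribution is simply its weight times its value. The feature names, weights, and bias are hypothetical illustration values, not learned from any dataset.

```python
# Hypothetical weights for an illustrative linear model (not fitted to real data).
WEIGHTS = {"income": 0.4, "debt_ratio": -1.5, "years_employed": 0.2}
BIAS = -0.5


def score(x: dict[str, float]) -> float:
    """Linear score: bias plus the weighted sum of the inputs."""
    return BIAS + sum(weight * x[name] for name, weight in WEIGHTS.items())


def contributions(x: dict[str, float]) -> dict[str, float]:
    """Each feature's additive share of the score, read straight off the model."""
    return {name: weight * x[name] for name, weight in WEIGHTS.items()}


if __name__ == "__main__":
    applicant = {"income": 3.0, "debt_ratio": 0.4, "years_employed": 5.0}
    print(f"score = {score(applicant):.2f}")
    for name, value in contributions(applicant).items():
        print(f"{name}: {value:+.2f}")
```

Because the score is exactly the bias plus these contributions, no separate explanation step is needed; that is the property post-hoc methods try to approximate for models that lack it.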

Key Differences Between Explainability and Interpretability

Explainable AI generates human-readable explanations of model decisions, helping users understand why and how a given output was produced. Interpretable AI relies on transparent models whose simplicity gives direct insight into their mechanics, with no post-hoc interpretation required. In short, explainability adds analysis after the fact for complex algorithms, while interpretability builds clarity into the model structure itself.
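
To illustrate "direct insight into model mechanics", here is a small sketch of a decision tree that returns both its prediction and the exact sequence of tests that produced it. The tree, its features, and its thresholds are hypothetical and written by hand; a real tree would be learned from data, but reading it works the same way.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    label: str


@dataclass
class Node:
    feature: str
    threshold: float
    left: "Tree"   # followed when the feature value is <= threshold
    right: "Tree"  # followed when the feature value is > threshold


Tree = Union[Leaf, Node]

# Hypothetical hand-written tree, for illustration only.
TREE = Node(
    "debt_ratio", 0.5,
    left=Node("income", 2.0, left=Leaf("review"), right=Leaf("approve")),
    right=Leaf("decline"),
)


def predict_with_path(tree: Tree, x: dict[str, float]) -> tuple[str, list[str]]:
    """Return the predicted label and the tests that led to it."""
    path: list[str] = []
    node = tree
    while isinstance(node, Node):
        value = x[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature}={value} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature}={value} > {node.threshold}")
            node = node.right
    return node.label, path


if __name__ == "__main__":
    label, path = predict_with_path(TREE, {"debt_ratio": 0.3, "income": 3.0})
    print(label, path)
```

The returned path is the model's reasoning itself, not an approximation of it, which is the distinction the paragraph above draws.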

Why Explainable AI Matters in Modern Applications

Explainable AI provides transparent insights into complex model decisions, enhancing trust and accountability in critical sectors like healthcare, finance, and autonomous systems. It enables stakeholders to understand, verify, and challenge AI outcomes, reducing risks associated with biased or erroneous predictions. Emphasizing explainability supports regulatory compliance and fosters user acceptance by demystifying black-box models in modern AI applications.

The Importance of Interpretability for Trustworthy AI

Interpretability ensures that a model's decisions can be understood directly from its structure, fostering user trust and supporting regulatory compliance. Explainable AI produces post-hoc explanations, which may not fully reveal a model's internal workings, whereas interpretable AI uses inherently transparent models that prioritize simplicity and clarity. Trustworthy AI depends on interpretability to validate outcomes, detect and reduce biases, and enable accountability in critical applications such as healthcare and finance.

Techniques for Achieving Explainable AI

Techniques for achieving Explainable AI (XAI) include model-agnostic approaches such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations), which explain individual predictions; SHAP values can also be aggregated across many predictions to summarize global feature importance. In contrast, interpretable AI relies on inherently transparent models such as decision trees, linear regression, and rule-based systems, whose decision process can be read directly. Combining post-hoc explanation methods with interpretable models enhances transparency, promoting trust and accountability in AI deployments.
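
To show what SHAP-style attributions compute, here is a brute-force sketch of exact Shapley values for a single prediction. It is not the `shap` package's API: it assumes a single baseline input stands in for "absent" features (SHAP's explainers typically average over background data and use approximations), and it enumerates every coalition, so cost grows as 2^n and it only suits a handful of features. The `black_box` function is a hypothetical model.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley values for one prediction, enumerating every feature coalition.

    Features outside a coalition take their baseline value, so the values
    explain model(x) - model(baseline).
    """
    n = len(x)

    def value(coalition: frozenset[int]) -> float:
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to coalition s.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def black_box(z: Sequence[float]) -> float:
    # Hypothetical model with an interaction between features 1 and 2.
    return 2.0 * z[0] + z[1] * z[2]


if __name__ == "__main__":
    x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
    print(shapley_values(black_box, x, baseline))
```

For this model, feature 0 acts additively and receives 2.0, while the interaction term `z[1] * z[2]` (worth 6.0 at `x`) is split evenly between features 1 and 2, giving 3.0 each. The values sum to `model(x) - model(baseline)`, the efficiency property that makes Shapley values an additive explanation.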

Methods to Enhance AI Interpretability

Methods to enhance AI interpretability include model-agnostic techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations), which provide post-hoc explanations for complex models. Interpretable AI often relies on inherently transparent models such as decision trees, linear regression, and rule-based systems that allow direct understanding of decision processes. Explainable AI integrates visualization tools, feature importance analysis, and counterfactual explanations to make black-box models more comprehensible to users and stakeholders.
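
A counterfactual explanation answers "what is the smallest change that would flip this decision?" The sketch below is a deliberately simple grid search that changes one feature at a time in fixed increments; the `approve` rule, feature names, and step sizes are hypothetical. Practical counterfactual methods typically search over several features at once and add distance and plausibility constraints.

```python
from typing import Callable, Optional


def approve(a: dict[str, float]) -> bool:
    # Hypothetical black-box decision rule, for illustration only.
    return a["income"] - 2.0 * a["debt_ratio"] >= 2.0


def single_feature_counterfactual(
    classify: Callable[[dict[str, float]], bool],
    x: dict[str, float],
    steps: dict[str, float],
    max_steps: int = 50,
) -> Optional[tuple[str, float]]:
    """Find the smallest change to a single feature (counted in whole steps) that
    flips the classifier's decision. Returns (feature, new_value) or None."""
    original = classify(x)
    for k in range(1, max_steps + 1):
        for feature, step in steps.items():
            for direction in (1, -1):
                candidate = dict(x)
                candidate[feature] = x[feature] + direction * k * step
                if classify(candidate) != original:
                    return feature, candidate[feature]
    return None


if __name__ == "__main__":
    applicant = {"income": 2.0, "debt_ratio": 0.5}
    print(approve(applicant))
    print(single_feature_counterfactual(approve, applicant, {"income": 0.5, "debt_ratio": 0.1}))
```

Ties between features at the same number of steps are broken by the order of `steps`, which is one of several design choices a real method would need to make explicit.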

Challenges in Balancing Explainability and Interpretability

Balancing explainability and interpretability in Artificial Intelligence presents challenges due to the complexity of models like deep neural networks, which often sacrifice transparency for performance. Explainable AI aims to provide understandable justifications for decisions, but these explanations may oversimplify or obscure the underlying model behavior. Interpretable AI focuses on inherently transparent models, yet achieving high accuracy while maintaining straightforward interpretability remains a significant hurdle in real-world applications.
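
One common way to explain a black box is a global surrogate: fit a simple model to the black box's own predictions and present the simple model as the explanation. Fidelity, the fraction of inputs on which the two agree, measures how faithfully the surrogate stands in. The sketch below assumes a hypothetical circle-shaped black box and a single-split "decision stump" surrogate; since no single axis-aligned split can trace a circle, fidelity stays below 1, which is one concrete way an explanation can oversimplify.

```python
import random


def black_box(p: tuple[float, float]) -> int:
    # Hypothetical nonlinear model: predicts 1 inside the unit circle.
    return int(p[0] ** 2 + p[1] ** 2 < 1.0)


def fit_stump(
    points: list[tuple[float, float]], labels: list[int]
) -> tuple[float, int, float, int]:
    """Exhaustively pick the single split (feature, threshold, label for the <= side)
    that best reproduces the labels. Returns (agreement, feature, threshold, left_label)."""
    best = (-1.0, 0, 0.0, 0)
    for feature in range(2):
        for threshold in sorted({p[feature] for p in points}):
            for left_label in (0, 1):
                preds = [left_label if p[feature] <= threshold else 1 - left_label for p in points]
                agreement = sum(a == b for a, b in zip(preds, labels)) / len(labels)
                if agreement > best[0]:
                    best = (agreement, feature, threshold, left_label)
    return best


def surrogate_fidelity(n: int = 300, seed: int = 0) -> float:
    """Fraction of sampled inputs on which the stump surrogate agrees with the black box."""
    rng = random.Random(seed)
    points = [(rng.uniform(-1.5, 1.5), rng.uniform(-1.5, 1.5)) for _ in range(n)]
    labels = [black_box(p) for p in points]  # surrogate is trained on model outputs
    return fit_stump(points, labels)[0]


if __name__ == "__main__":
    print(f"surrogate fidelity: {surrogate_fidelity():.2f}")
```

A surrogate that mostly predicts the majority class can still reach respectable fidelity while saying little about how the model decides, so fidelity is best read alongside the explanation itself.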

Real-World Use Cases: Explainable vs Interpretable AI

Explainable AI (XAI) provides detailed insights into complex model decisions, making it valuable in high-stakes fields such as healthcare diagnostics and financial risk assessment, where transparency is often required. Interpretable AI, being simpler by design, makes the decision logic easy to follow, which benefits regulated settings such as credit scoring and compliance monitoring. In practice, Explainable AI suits scenarios that demand accountability for complex models, while Interpretable AI fits tasks that require quick, reliable explanations of each decision.
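
Credit scoring is a classic setting for interpretable models, often in the form of points-based scorecards. The sketch below assumes a hypothetical scorecard (the bands, points, and cutoff are invented for illustration): every decision decomposes into a few table lookups that a reviewer or regulator can recheck by hand.

```python
# Hypothetical scorecard: for each feature, (lower_bound, points) bands in ascending order.
SCORECARD = {
    "years_at_address": [(0, 5), (2, 15), (5, 25)],
    "missed_payments": [(0, 30), (1, 10), (3, 0)],
    "annual_income": [(0, 0), (30_000, 20), (60_000, 35)],
}
CUTOFF = 60  # hypothetical approval threshold


def band_points(bands: list[tuple[float, int]], value: float) -> int:
    """Points for the highest band whose lower bound the value reaches."""
    awarded = bands[0][1]
    for lower, points in bands:
        if value >= lower:
            awarded = points
    return awarded


def score_applicant(applicant: dict[str, float]) -> tuple[bool, int, dict[str, int]]:
    """Return (approved, total points, per-feature points)."""
    breakdown = {name: band_points(bands, applicant[name]) for name, bands in SCORECARD.items()}
    total = sum(breakdown.values())
    return total >= CUTOFF, total, breakdown


if __name__ == "__main__":
    approved, total, breakdown = score_applicant(
        {"years_at_address": 3, "missed_payments": 0, "annual_income": 45_000}
    )
    print(approved, total, breakdown)
```

The per-feature breakdown doubles as a reason statement: a declined applicant can be told which bands cost them points.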

Ethical and Regulatory Considerations

Explainable AI provides justifications for model decisions, enhancing accountability and supporting compliance with ethical standards and data protection regulations such as the GDPR. Interpretable AI relies on inherently understandable models, which make algorithmic bias easier to detect and let regulators audit decision-making processes directly. Both approaches address ethical concerns by promoting fairness, transparency, and responsible AI deployment in sensitive sectors such as healthcare and finance.

The Future of Transparent and Responsible AI

Explainable AI (XAI) and Interpretable AI are pivotal in advancing transparent and responsible artificial intelligence systems by enhancing model accountability and user trust. XAI focuses on providing post-hoc explanations for complex models, while Interpretable AI prioritizes designing inherently understandable models from the ground up. The future of transparent AI hinges on integrating these approaches to balance predictive performance with clarity, ensuring ethical deployment and regulatory compliance in AI-driven decision-making.

Explainable AI vs Interpretable AI Infographic

techiny.com
