Bag of Words represents text data by counting word frequency, ignoring grammar and word order, which makes it simple but less context-aware. Word2Vec captures semantic relationships by converting words into dense vectors based on their context in large corpora, enhancing understanding of meaning and similarity. Word2Vec outperforms Bag of Words in natural language processing tasks requiring nuanced interpretation and context preservation.

Table of Comparison

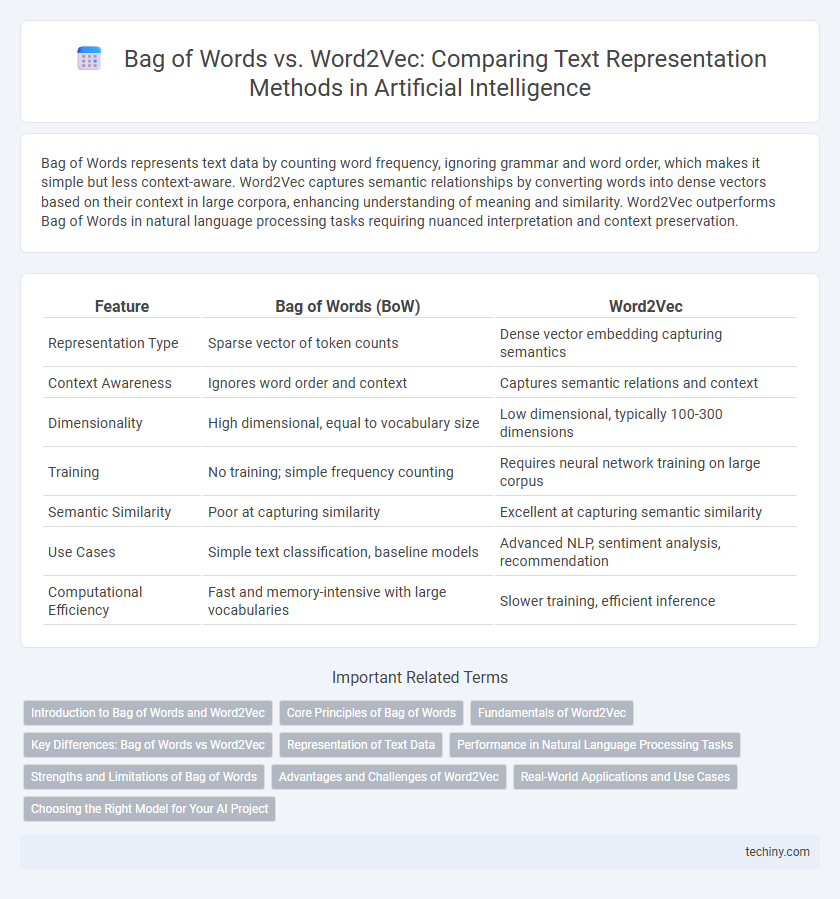

| Feature | Bag of Words (BoW) | Word2Vec |

|---|---|---|

| Representation Type | Sparse vector of token counts | Dense vector embedding capturing semantics |

| Context Awareness | Ignores word order and context | Captures semantic relations and context |

| Dimensionality | High dimensional, equal to vocabulary size | Low dimensional, typically 100-300 dimensions |

| Training | No training; simple frequency counting | Requires neural network training on large corpus |

| Semantic Similarity | Poor at capturing similarity | Excellent at capturing semantic similarity |

| Use Cases | Simple text classification, baseline models | Advanced NLP, sentiment analysis, recommendation |

| Computational Efficiency | Fast and memory-intensive with large vocabularies | Slower training, efficient inference |

Introduction to Bag of Words and Word2Vec

Bag of Words (BoW) is a simple and widely used text representation technique that converts text into numerical features by counting the frequency of each word in a document, without preserving word order or context. Word2Vec, developed by Google, generates dense vector representations by capturing semantic relationships between words through training on large text corpora using neural networks. While BoW focuses on word occurrence, Word2Vec encodes deeper linguistic meaning, enabling improved performance in natural language processing tasks.

Core Principles of Bag of Words

Bag of Words (BoW) is a fundamental text representation technique that transforms text into fixed-length vectors by counting the frequency of each word, disregarding grammar and word order. This model emphasizes word occurrence but ignores semantic relationships and context, making it simple yet limited for capturing meaning. BoW's core principle relies on treating text as an unordered collection of words, enabling straightforward feature extraction for machine learning algorithms in natural language processing tasks.

Fundamentals of Word2Vec

Word2Vec leverages neural networks to generate dense vector representations of words, capturing semantic relationships by placing similar words closer in vector space. Unlike the Bag of Words model that treats words as independent tokens, Word2Vec considers word context, enabling it to encode syntactic and semantic meaning effectively. This approach enhances downstream tasks such as natural language processing, machine translation, and sentiment analysis by providing richer linguistic representations.

Key Differences: Bag of Words vs Word2Vec

Bag of Words (BoW) represents text by counting word frequencies without capturing word order or context, resulting in sparse and high-dimensional vectors. Word2Vec generates dense, continuous vector embeddings by training neural networks to capture semantic relationships and contextual similarity between words. Unlike BoW, Word2Vec preserves syntactic and semantic information, enabling more effective natural language processing tasks such as sentiment analysis and machine translation.

Representation of Text Data

Bag of Words represents text by counting word frequency without capturing context or semantic relationships, resulting in sparse and high-dimensional vectors. Word2Vec generates dense, continuous vector embeddings that encode semantic meaning by analyzing word co-occurrence in context windows, allowing it to capture syntactic and semantic similarities. This makes Word2Vec more effective for natural language processing tasks requiring understanding of word relationships and context.

Performance in Natural Language Processing Tasks

Bag of Words (BoW) offers simplicity and speed but often struggles with capturing context or semantic meaning, leading to lower accuracy in tasks like sentiment analysis and document classification. Word2Vec generates dense vector representations that encode semantic relationships between words, significantly improving performance in natural language processing tasks such as named entity recognition and machine translation. Empirical results show Word2Vec often outperforms BoW by enabling models to understand word similarities and context, resulting in higher precision and recall metrics.

Strengths and Limitations of Bag of Words

Bag of Words (BoW) excels in simplicity and efficiency, transforming text into fixed-length vectors based on word frequency, which makes it easy to implement for basic text classification tasks. However, BoW lacks semantic understanding, ignoring word order and context, leading to potential loss of meaning and inability to capture synonyms or polysemy. Its high dimensionality and sparsity pose challenges for scalability and performance on large or complex datasets compared to embedding methods like Word2Vec.

Advantages and Challenges of Word2Vec

Word2Vec offers significant advantages over Bag of Words by capturing semantic relationships between words through continuous vector representations, enabling better context understanding and improved performance in natural language processing tasks. It effectively reduces dimensionality and addresses sparsity issues inherent in Bag of Words models while providing the ability to identify similarities and analogies between words. However, challenges of Word2Vec include the need for large datasets to train accurate embeddings, sensitivity to hyperparameter tuning, and difficulties in capturing polysemy where words have multiple meanings.

Real-World Applications and Use Cases

Bag of Words (BoW) excels in text classification tasks where interpretability and simplicity are crucial, such as spam detection and document retrieval systems, due to its straightforward frequency-based representation. Word2Vec captures semantic relationships and contextual meanings, making it ideal for complex natural language processing applications like sentiment analysis, machine translation, and recommendation engines. Industries leveraging Word2Vec benefit from improved understanding of user intent and nuanced language interpretation in chatbots and voice assistants.

Choosing the Right Model for Your AI Project

Selecting between Bag of Words and Word2Vec depends on your AI project's complexity and data type. Bag of Words is ideal for simple text classification tasks with clear keyword importance, while Word2Vec excels in capturing semantic relationships and context in large text corpora. Prioritize Word2Vec for projects requiring nuanced understanding and continuous vector representations to enhance natural language processing accuracy.

Bag of Words vs Word2Vec Infographic

techiny.com

techiny.com