Bag-of-Words (BoW) represents text by counting word occurrences, treating each term independently without considering context or importance. TF-IDF (Term Frequency-Inverse Document Frequency) enhances text representation by weighting terms based on their frequency in a document relative to their rarity across all documents, improving relevance detection. While BoW is simpler and faster, TF-IDF provides a more refined feature set that boosts performance in tasks like information retrieval and text classification.

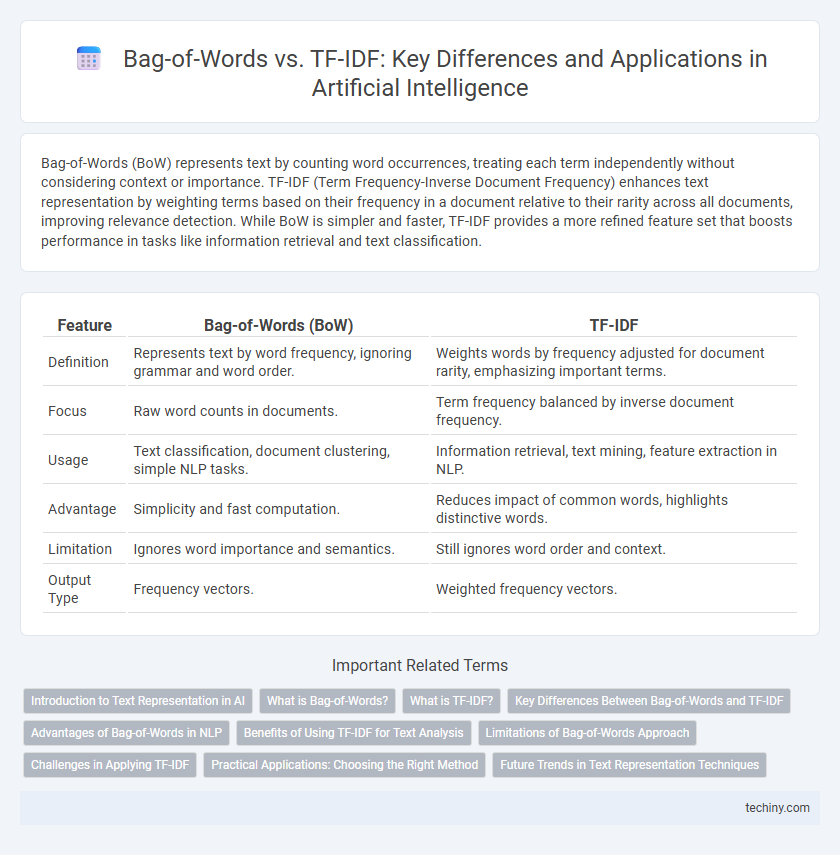

Table of Comparison

| Feature | Bag-of-Words (BoW) | TF-IDF |

|---|---|---|

| Definition | Represents text by word frequency, ignoring grammar and word order. | Weights words by frequency adjusted for document rarity, emphasizing important terms. |

| Focus | Raw word counts in documents. | Term frequency balanced by inverse document frequency. |

| Usage | Text classification, document clustering, simple NLP tasks. | Information retrieval, text mining, feature extraction in NLP. |

| Advantage | Simplicity and fast computation. | Reduces impact of common words, highlights distinctive words. |

| Limitation | Ignores word importance and semantics. | Still ignores word order and context. |

| Output Type | Frequency vectors. | Weighted frequency vectors. |

Introduction to Text Representation in AI

Bag-of-Words (BoW) represents text by counting word frequency, ignoring word order, making it simple but sometimes less informative for AI models. TF-IDF (Term Frequency-Inverse Document Frequency) enhances text representation by weighing words based on their importance across documents, improving feature relevance in natural language processing tasks. Both techniques serve as foundational methods for transforming textual data into numerical vectors for machine learning algorithms in AI.

What is Bag-of-Words?

Bag-of-Words (BoW) is a fundamental text representation technique in Natural Language Processing that converts text into fixed-length vectors by counting the frequency of each word within a document. BoW disregards grammar and word order, focusing solely on the presence or absence of words to capture document features. This method is widely used for tasks such as text classification and information retrieval due to its simplicity and effectiveness in representing textual data.

What is TF-IDF?

TF-IDF, or Term Frequency-Inverse Document Frequency, is a statistical measure used in natural language processing to evaluate the importance of a word within a specific document relative to a corpus. It combines term frequency, which counts how often a word appears in a document, with inverse document frequency, which reduces the weight of common words across all documents. This weighting mechanism helps improve the accuracy of text classification, information retrieval, and sentiment analysis by emphasizing relevant terms and diminishing background noise.

Key Differences Between Bag-of-Words and TF-IDF

Bag-of-Words (BoW) represents text as a simple frequency count of words, ignoring word order and context, which can lead to high-dimensional sparse vectors. TF-IDF (Term Frequency-Inverse Document Frequency) adjusts word frequency by the rarity of terms in the entire corpus, highlighting more informative words and reducing the weight of common terms. While BoW emphasizes raw word counts, TF-IDF enhances feature relevance, improving text classification and information retrieval tasks by focusing on significant words across documents.

Advantages of Bag-of-Words in NLP

Bag-of-Words (BoW) offers simplicity and efficiency in natural language processing by representing text as fixed-length vectors based on word frequency, making it computationally lightweight. BoW effectively captures the presence and importance of words in small to medium-sized datasets, enabling straightforward implementation in sentiment analysis and document classification tasks. Its interpretability allows easy debugging and model analysis compared to more complex embeddings like TF-IDF or word2vec.

Benefits of Using TF-IDF for Text Analysis

TF-IDF enhances text analysis by weighting terms based on their importance across documents, reducing the influence of common words and emphasizing unique, informative keywords. This approach improves the accuracy of document classification and clustering by capturing the relevance of terms within the entire corpus rather than mere frequency counts. TF-IDF is especially beneficial in tasks like information retrieval, sentiment analysis, and topic modeling where distinguishing significant words enhances model performance.

Limitations of Bag-of-Words Approach

The Bag-of-Words (BoW) approach disregards word order and context, leading to loss of semantic meaning in text analysis. It treats all words with equal importance, which can cause common but irrelevant words to dominate the representation. This limitation reduces BoW's effectiveness in capturing the nuanced understanding required for complex natural language processing tasks compared to TF-IDF.

Challenges in Applying TF-IDF

TF-IDF faces challenges in handling large vocabularies, leading to sparse and high-dimensional feature spaces that increase computational complexity. It struggles to account for semantic meaning and context, often treating synonyms and polysemous words as distinct features. Additionally, TF-IDF is sensitive to common but domain-specific terms, which can skew relevance scores if not properly managed.

Practical Applications: Choosing the Right Method

Bag-of-Words (BoW) is effective for simple text classification tasks where word presence and frequency are sufficient, such as spam detection or sentiment analysis, due to its straightforward representation. TF-IDF excels in information retrieval and document ranking by emphasizing distinctive words, improving search engine accuracy and topic modeling. Selecting between BoW and TF-IDF depends on the importance of word frequency normalization and the need to reduce the impact of common words in the specific natural language processing application.

Future Trends in Text Representation Techniques

Future trends in text representation techniques emphasize moving beyond traditional Bag-of-Words and TF-IDF models toward advanced embeddings like Transformer-based models (e.g., BERT, GPT) that capture context and semantics more effectively. These models utilize deep learning architectures to generate dynamic, context-aware vector representations, improving performance in natural language understanding tasks. Integration of hybrid approaches combining classical frequency-based methods with neural embeddings is anticipated to enhance efficiency and interpretability in text analysis.

Bag-of-Words vs TF-IDF Infographic

techiny.com

techiny.com