Hard attention focuses on selecting discrete parts of the input for processing, making it computationally efficient but non-differentiable, which complicates training. Soft attention assigns continuous weights to all input elements, enabling end-to-end differentiability and smoother gradient flow for model optimization. This trade-off influences the choice between hard and soft attention mechanisms depending on the specific requirements of accuracy and computational efficiency in artificial intelligence applications.

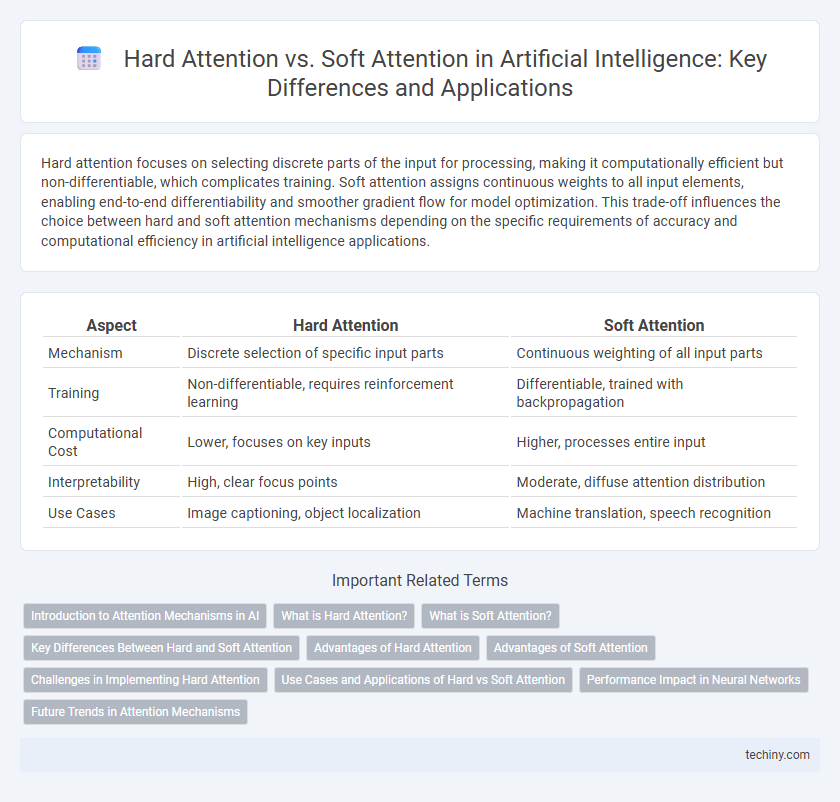

Table of Comparison

| Aspect | Hard Attention | Soft Attention |

|---|---|---|

| Mechanism | Discrete selection of specific input parts | Continuous weighting of all input parts |

| Training | Non-differentiable, requires reinforcement learning | Differentiable, trained with backpropagation |

| Computational Cost | Lower, focuses on key inputs | Higher, processes entire input |

| Interpretability | High, clear focus points | Moderate, diffuse attention distribution |

| Use Cases | Image captioning, object localization | Machine translation, speech recognition |

Introduction to Attention Mechanisms in AI

Hard attention selectively focuses on discrete parts of input data by making non-differentiable choices, leading to efficient computation but requiring reinforcement learning for training. Soft attention computes weighted averages over all input features, enabling differentiable end-to-end training with gradient descent and smoother learning dynamics. Attention mechanisms enhance model interpretability and performance by dynamically highlighting relevant information in tasks such as natural language processing and computer vision.

What is Hard Attention?

Hard Attention is a mechanism in artificial intelligence where the model selectively focuses on discrete parts of the input, making hard, binary decisions about which information to attend to. This approach involves stochastic sampling, often implemented through reinforcement learning techniques, to choose specific regions or features, improving computational efficiency. Unlike Soft Attention, Hard Attention does not compute weighted averages over all inputs but instead processes only the selected segments, enhancing interpretability in tasks like image captioning and natural language processing.

What is Soft Attention?

Soft Attention is a neural mechanism that assigns continuous probability weights to all elements in the input sequence, allowing the model to focus on multiple relevant parts simultaneously during training. Unlike Hard Attention, which selects a single discrete element to attend to, Soft Attention enables end-to-end differentiability and efficient gradient-based optimization. This approach enhances performance in tasks such as machine translation, image captioning, and natural language understanding by dynamically highlighting important features across the entire input data.

Key Differences Between Hard and Soft Attention

Hard attention selects discrete, specific parts of the input data for processing, making it computationally efficient but non-differentiable and harder to train. Soft attention assigns continuous, differentiable weights to all input elements, allowing end-to-end training through gradient descent but with higher computational costs. The choice between hard and soft attention impacts model interpretability, training complexity, and application suitability in tasks like natural language processing and computer vision.

Advantages of Hard Attention

Hard Attention offers the advantage of efficient computation by selectively focusing on a discrete subset of input elements, reducing memory usage and speeding up processing. It enables interpretable decision-making by highlighting specific regions or features, facilitating better model explainability. This approach also minimizes noise interference from irrelevant data, improving overall performance in tasks like image recognition and natural language processing.

Advantages of Soft Attention

Soft attention offers improved differentiability, enabling end-to-end training of neural networks using gradient-based optimization techniques. It enhances model interpretability by providing weighted importance scores over all input features, facilitating better understanding of decision-making processes. Additionally, soft attention mechanisms allow flexible handling of variable-length inputs without the need for explicit alignment, improving performance in natural language processing and image recognition tasks.

Challenges in Implementing Hard Attention

Implementing Hard Attention in artificial intelligence models presents significant challenges due to its non-differentiable nature, which makes gradient-based optimization difficult. This requires the use of reinforcement learning or approximation techniques like the REINFORCE algorithm, often leading to higher variance and unstable training processes. Furthermore, Hard Attention's discrete selection mechanism can result in suboptimal focus on critical input features compared to Soft Attention's continuous weighting, impacting model interpretability and performance.

Use Cases and Applications of Hard vs Soft Attention

Hard attention excels in scenarios requiring discrete selection, such as object detection and image captioning, where pinpointing specific regions is crucial. Soft attention suits natural language processing tasks like machine translation and sentiment analysis, enabling models to weigh all input elements continuously for contextual understanding. Combining both methods enhances multi-modal systems, improving accuracy in complex tasks like visual question answering and speech recognition.

Performance Impact in Neural Networks

Hard attention selectively focuses on discrete parts of input data, which can improve interpretability but often results in non-differentiable models requiring reinforcement learning or sampling techniques, potentially slowing training and increasing complexity. Soft attention computes weighted averages across all input elements, enabling end-to-end differentiability and smoother gradient flow, typically leading to faster convergence and better performance in tasks like machine translation and image captioning. Neural networks employing soft attention generally exhibit higher accuracy and stability compared to hard attention, especially in large-scale datasets and real-time applications.

Future Trends in Attention Mechanisms

Future trends in attention mechanisms emphasize the integration of hybrid models combining hard attention's discrete selection with soft attention's differentiable weighting to enhance computational efficiency and interpretability. Advances in dynamic attention allocation aim to optimize resource usage in large-scale neural networks, significantly improving performance in natural language processing and computer vision tasks. Emerging research explores adaptive attention frameworks that evolve during training, offering robust scalability for real-time AI applications.

Hard Attention vs Soft Attention Infographic

techiny.com

techiny.com