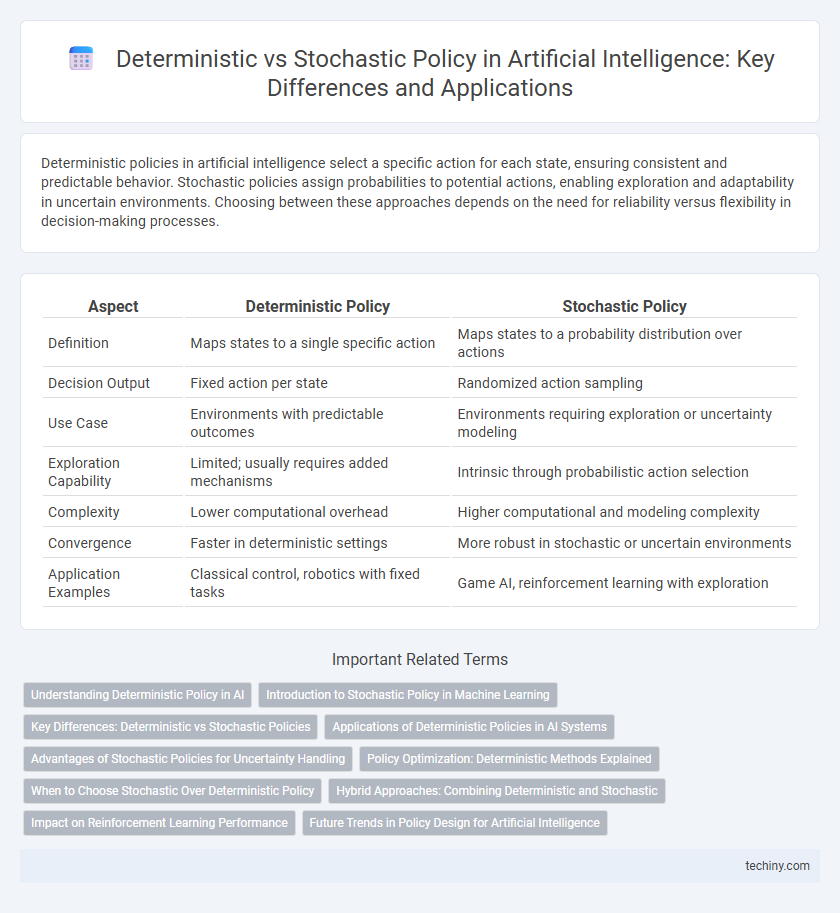

Deterministic policies in artificial intelligence select a specific action for each state, ensuring consistent and predictable behavior. Stochastic policies assign probabilities to potential actions, enabling exploration and adaptability in uncertain environments. Choosing between these approaches depends on the need for reliability versus flexibility in decision-making processes.

Table of Comparison

| Aspect | Deterministic Policy | Stochastic Policy |
|---|---|---|
| Definition | Maps each state to a single specific action | Maps each state to a probability distribution over actions |
| Decision Output | Fixed action per state | Action sampled from the distribution |
| Use Case | Environments with predictable outcomes | Environments requiring exploration or uncertainty modeling |
| Exploration Capability | Limited; usually requires added mechanisms | Intrinsic through probabilistic action selection |
| Complexity | Lower computational overhead | Higher computational and modeling complexity |
| Convergence | Faster in deterministic settings | More robust in stochastic or uncertain environments |
| Application Examples | Classical control, robotics with fixed tasks | Game AI, reinforcement learning with exploration |

Understanding Deterministic Policy in AI

A deterministic policy in artificial intelligence maps each state to exactly one action, so the agent behaves identically every time it encounters that state. Unlike stochastic policies, which assign probabilities to actions, deterministic policies favor predictability and simplify policy evaluation, because no expectation over actions is needed. This approach suits environments with clear state-action mappings and is widely used in reinforcement learning and control applications.
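A minimal sketch of a deterministic policy, assuming a small tabular problem whose action values have already been estimated. The state names, actions, and the `q_values` table are hypothetical. The policy is simply a function: the same state always returns the same action.

```python
# Hypothetical action-value estimates Q(s, a) for a toy task.
q_values = {
    "low_battery": {"recharge": 1.0, "explore": -0.5},
    "full_battery": {"recharge": 0.1, "explore": 0.8},
}

def deterministic_policy(state: str) -> str:
    """Return the single highest-valued action for `state` (greedy mapping).

    Ties are broken by dictionary order, so the result is still fixed.
    """
    actions = q_values[state]
    return max(actions, key=actions.get)

print(deterministic_policy("low_battery"))   # recharge
print(deterministic_policy("full_battery"))  # explore
```

Calling `deterministic_policy("low_battery")` a thousand times yields `"recharge"` every time, which is the predictability the paragraph describes.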

Introduction to Stochastic Policy in Machine Learning

A stochastic policy in machine learning chooses actions by sampling from a probability distribution rather than by following fixed rules, which lets models handle uncertainty and explore effectively. Unlike deterministic policies that map states to specific actions, stochastic policies enable more flexible decision-making in environments with inherent randomness. They are central to reinforcement learning algorithms such as policy gradient methods (for example, REINFORCE), where sampling actions from the policy balances exploration and exploitation and improves learning performance.
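To make "choosing actions from a probability distribution" concrete, here is a minimal sketch assuming a discrete action set and a softmax over per-action preferences, a common parameterization in policy gradient methods. The preference values and the `temperature` parameter are illustrative assumptions; a learning algorithm would adjust the preferences, whereas here they are fixed.

```python
import math
import random

def softmax(prefs: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Convert action preferences into a probability distribution."""
    top = max(prefs.values())  # subtract the max for numerical stability
    exps = {a: math.exp((p - top) / temperature) for a, p in prefs.items()}
    total = sum(exps.values())
    return {a: e / total for a, e in exps.items()}

def stochastic_policy(prefs: dict[str, float], rng: random.Random,
                      temperature: float = 1.0) -> str:
    """Sample one action in proportion to its softmax probability."""
    probs = softmax(prefs, temperature)
    actions = list(probs)
    return rng.choices(actions, weights=[probs[a] for a in actions], k=1)[0]

# Hypothetical preferences for a single state; a policy gradient method would learn these.
prefs = {"left": 1.0, "right": 0.0}
print(softmax(prefs))  # roughly {'left': 0.731, 'right': 0.269}

rng = random.Random(0)
samples = [stochastic_policy(prefs, rng) for _ in range(10_000)]
print(samples.count("left") / len(samples))  # near 0.73; exact value depends on the seed
```

Lowering `temperature` sharpens the distribution toward the single best action (approaching deterministic behavior); raising it spreads probability more evenly and increases exploration.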

Key Differences: Deterministic vs Stochastic Policies

Deterministic policies select a specific action for each state, providing consistent and predictable behavior in reinforcement learning environments, whereas stochastic policies assign probabilities to possible actions, enabling exploration and adaptability. Deterministic approaches often excel in environments with low uncertainty by optimizing a fixed strategy, while stochastic policies are advantageous when managing uncertainty and balancing exploration-exploitation trade-offs. The choice between deterministic and stochastic policies significantly impacts learning efficiency, convergence stability, and overall performance in artificial intelligence models.
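In standard reinforcement learning notation (the symbols $\mu$, $\pi$, $\mathcal{S}$, and $\mathcal{A}$ are conventional choices, not taken from this article), the two kinds of policy over a discrete action set can be written as:

$$
\begin{aligned}
\text{Deterministic:}\quad & a = \mu(s), \qquad \mu : \mathcal{S} \to \mathcal{A} \\
\text{Stochastic:}\quad & a \sim \pi(\cdot \mid s), \qquad \pi(a \mid s) \ge 0, \quad \sum_{a \in \mathcal{A}} \pi(a \mid s) = 1
\end{aligned}
$$

A deterministic policy is the special case of a stochastic one that puts probability $1$ on $\mu(s)$ and $0$ on every other action, so every deterministic policy can be expressed as a stochastic policy, but not the reverse.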

Applications of Deterministic Policies in AI Systems

Deterministic policies in AI systems are widely used in robotics and autonomous vehicles, where consistent and predictable actions are crucial for safety and efficiency. These policies generate a specific action for each state, enabling real-time decision-making in control systems such as robotic manipulators and industrial automation. Deterministic approaches perform well in environments with well-defined dynamics and generally carry lower computational overhead than stochastic methods.
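As a sketch of the kind of deterministic controller used in these settings, the following assumes a hypothetical one-dimensional point mass driven toward the origin by a proportional-derivative (PD) feedback law. The gains `kp` and `kd`, the force limit `u_max`, and the time step are illustrative values, not taken from any real system.

```python
def pd_policy(position: float, velocity: float,
              kp: float = 2.0, kd: float = 3.0, u_max: float = 5.0) -> float:
    """Deterministic control policy: the same state always yields the same force.

    kp, kd, and u_max are hypothetical tuning values for this toy example.
    """
    u = -kp * position - kd * velocity
    return max(-u_max, min(u_max, u))  # clip to the actuator limits

def rollout(x0: float, steps: int = 2000, dt: float = 0.01) -> list[float]:
    """Simulate a unit point mass (x'' = u) with semi-implicit Euler integration."""
    x, v = x0, 0.0
    trajectory = [x]
    for _ in range(steps):
        u = pd_policy(x, v)
        v += u * dt   # update velocity first...
        x += v * dt   # ...then position, using the new velocity
        trajectory.append(x)
    return trajectory

traj = rollout(1.0)
print(abs(traj[-1]) < 1e-3)      # True: the mass settles at the origin
print(rollout(1.0) == traj)      # True: identical runs, no randomness involved
```

Because the policy has no random component, two rollouts from the same initial state are bit-for-bit identical, which is what makes such controllers easy to test and certify.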

Advantages of Stochastic Policies for Uncertainty Handling

Stochastic policies excel in handling uncertainty by allowing exploration through probabilistic action selections, enhancing robustness in dynamic and unpredictable environments. They enable better adaptation to noisy or incomplete data by maintaining a distribution over possible actions rather than committing to a single deterministic choice. This flexibility improves long-term learning and decision-making in complex artificial intelligence applications.
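One common way to "maintain a distribution over possible actions" for a continuous action is a Gaussian policy. The sketch below assumes a one-dimensional state and action and a hypothetical linear mean `weight * state`; the `std` parameter controls how spread out, and therefore how exploratory, the policy is. Its `log_prob` is the quantity that policy gradient methods differentiate.

```python
import math
import random

class GaussianPolicy:
    """Stochastic policy over a 1-D continuous action: a ~ N(mean(s), std^2)."""

    def __init__(self, weight: float, std: float):
        self.weight = weight  # hypothetical linear mean: mean(s) = weight * s
        self.std = std        # spread of the action distribution

    def mean(self, state: float) -> float:
        return self.weight * state

    def sample(self, state: float, rng: random.Random) -> float:
        return rng.gauss(self.mean(state), self.std)

    def log_prob(self, state: float, action: float) -> float:
        """Log density of `action` under N(mean(state), std^2)."""
        z = (action - self.mean(state)) / self.std
        return -0.5 * z * z - math.log(self.std * math.sqrt(2 * math.pi))

    def entropy(self) -> float:
        """Differential entropy 0.5 * ln(2*pi*e*std^2); larger means more spread."""
        return 0.5 * math.log(2 * math.pi * math.e * self.std ** 2)

narrow = GaussianPolicy(weight=0.5, std=0.1)  # confident, little exploration
wide = GaussianPolicy(weight=0.5, std=1.0)    # uncertain, explores more
rng = random.Random(0)
print(narrow.sample(2.0, rng), wide.sample(2.0, rng))  # both centered on 1.0
print(narrow.entropy() < wide.entropy())  # True
```

In practice `std` is often learned alongside the mean, so the policy can stay broad while the agent is uncertain and narrow as it becomes confident.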

Policy Optimization: Deterministic Methods Explained

Deterministic policy optimization in artificial intelligence maps states directly to specific actions without randomness, enabling precise and efficient decision-making, particularly in environments with continuous action spaces. Methods such as the Deterministic Policy Gradient (DPG) algorithm and its deep-learning variant DDPG improve the policy by following the gradient of a learned action-value function (the critic) with respect to the action, which increases the expected return. Because this gradient is an expectation over states only, rather than over both states and actions, deterministic methods can produce lower-variance gradient estimates and converge faster than stochastic policy gradients, making them suitable for tasks requiring consistent and reliable action outputs.
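The core of a deterministic policy gradient update can be shown on a toy problem. This sketch assumes a hypothetical linear actor `mu(s) = theta * s` and, to keep it short, a critic that is already known exactly, `Q(s, a) = -(a - 2s)^2`; in DPG and DDPG the critic is itself learned, and training uses an exploratory behavior policy. The update averages `dmu/dtheta * dQ/da` evaluated at `a = mu(s)`, which is the deterministic policy gradient.

```python
import random

def critic_grad_action(state: float, action: float) -> float:
    """dQ/da for an assumed, known critic Q(s, a) = -(a - 2s)^2."""
    return -2.0 * (action - 2.0 * state)

def dpg_train(steps: int = 500, lr: float = 0.1, batch: int = 32, seed: int = 0) -> float:
    """Gradient ascent on expected Q for the actor mu(s) = theta * s."""
    rng = random.Random(seed)
    theta = 0.0
    for _ in range(steps):
        states = [rng.uniform(-1.0, 1.0) for _ in range(batch)]
        # Deterministic policy gradient: mean of dmu/dtheta (= s) * dQ/da at a = mu(s).
        grad = sum(s * critic_grad_action(s, theta * s) for s in states) / batch
        theta += lr * grad
    return theta

print(dpg_train())  # very close to 2.0, the slope that maximizes this critic
```

No actions are sampled inside the gradient: only states are drawn, which is the source of the variance advantage mentioned above.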

When to Choose Stochastic Over Deterministic Policy

Stochastic policies are preferred in environments with high uncertainty or partial observability, where committing to a single fixed action per state can be suboptimal, for example when different underlying states look identical to the agent. In reinforcement learning tasks involving exploration-exploitation trade-offs, stochastic policies enable diverse action selection, improving learning efficiency and robustness. They are particularly effective in multi-agent settings and games like poker, where unpredictability prevents opponents from exploiting a fixed strategy.
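Poker is too large for a short example, so this sketch substitutes rock-paper-scissors to show why unpredictability matters against an adversary. It computes the worst-case expected payoff of a policy when the opponent knows the policy and picks the best counter-action. Exact fractions are used so the results are not affected by rounding.

```python
from fractions import Fraction

ACTIONS = ["rock", "paper", "scissors"]
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}

def payoff(a: str, b: str) -> int:
    """+1 if a beats b, -1 if b beats a, 0 on a tie (from a's perspective)."""
    if a == b:
        return 0
    return 1 if BEATS[a] == b else -1

def expected_payoff(policy: dict[str, Fraction], opponent_action: str) -> Fraction:
    return sum(p * payoff(a, opponent_action) for a, p in policy.items())

def worst_case(policy: dict[str, Fraction]) -> Fraction:
    """Expected payoff when the opponent knows the policy and best-responds."""
    return min(expected_payoff(policy, b) for b in ACTIONS)

always_rock = {"rock": Fraction(1), "paper": Fraction(0), "scissors": Fraction(0)}
uniform = {a: Fraction(1, 3) for a in ACTIONS}

print(worst_case(always_rock))  # -1: the opponent simply always plays paper
print(worst_case(uniform))      # 0: no counter-action gains anything
```

Every deterministic policy in this game has a worst case of -1, while the uniform stochastic policy guarantees an expected payoff of 0 regardless of what the opponent does.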

Hybrid Approaches: Combining Deterministic and Stochastic

Hybrid approaches in artificial intelligence combine the strengths of deterministic and stochastic policies by pairing deterministic decision rules with stochastic exploration mechanisms, for example epsilon-greedy action selection, or a deterministic actor whose actions are perturbed by exploration noise during training, as in DDPG. Such methods can improve robustness and sample efficiency by taking predictable actions in well-understood states while retaining adaptability through probabilistic choices where the environment is uncertain. This combination supports more effective learning in complex reinforcement learning tasks by balancing exploitation and exploration dynamically.
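A minimal sketch of the DDPG-style hybrid pattern: a deterministic actor produces the action, Gaussian noise is added only while exploring, and the result is clipped to the valid action range. The linear `actor`, the `noise_std` value, and the action bounds are hypothetical choices for illustration.

```python
import random

def actor(state: float) -> float:
    """Hypothetical deterministic actor: a fixed linear map from state to action."""
    return 0.8 * state

def act(state: float, rng: random.Random, noise_std: float = 0.2,
        explore: bool = True, low: float = -1.0, high: float = 1.0) -> float:
    """Deterministic action plus optional Gaussian exploration noise, clipped to bounds."""
    action = actor(state)
    if explore:
        action += rng.gauss(0.0, noise_std)  # stochastic perturbation during training
    return max(low, min(high, action))

rng = random.Random(42)
train_actions = [act(0.5, rng) for _ in range(5)]                 # scattered around 0.4
eval_actions = [act(0.5, rng, explore=False) for _ in range(5)]   # always exactly 0.4
print(train_actions)
print(eval_actions)
```

The same actor serves both phases: switching `explore` off recovers a purely deterministic policy for deployment, while the noise supplies exploration during learning.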

Impact on Reinforcement Learning Performance

Deterministic policies select actions consistently, which can lead to faster convergence and greater learning efficiency in environments with low stochasticity. Stochastic policies introduce exploration by sampling actions, which improves performance in complex or dynamic environments by reducing the risk of settling on a suboptimal policy (a local optimum). Balancing deterministic and stochastic approaches is critical for optimizing reinforcement learning outcomes across varied task settings.
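A small experiment illustrates how a purely greedy (deterministic) learner can lock onto a local optimum while a stochastic one escapes it. The sketch assumes a hypothetical two-armed bandit with fixed rewards (arm 0 pays 0.5, arm 1 pays 1.0) and compares greedy selection with epsilon-greedy selection, a simple stochastic policy.

```python
import random

def run_bandit(epsilon: float, steps: int = 1000, seed: int = 0) -> tuple[float, list[int]]:
    """Two-armed bandit with fixed, hypothetical rewards: arm 0 -> 0.5, arm 1 -> 1.0."""
    rewards = [0.5, 1.0]
    rng = random.Random(seed)
    q = [0.0, 0.0]       # running-average value estimates
    counts = [0, 0]
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(2)             # explore: pick a random arm
        else:
            arm = 0 if q[0] >= q[1] else 1     # exploit: greedy, ties go to arm 0
        r = rewards[arm]
        counts[arm] += 1
        q[arm] += (r - q[arm]) / counts[arm]   # incremental mean update
        total += r
    return total, counts

greedy_total, greedy_counts = run_bandit(epsilon=0.0)
eps_total, eps_counts = run_bandit(epsilon=0.1)
print(greedy_counts)              # [1000, 0]: the better arm is never tried
print(eps_total > greedy_total)   # True
```

The greedy learner's first reward of 0.5 makes arm 0 look better than the untried arm forever; a single random exploratory pull of arm 1 is enough for the epsilon-greedy learner to switch to the better arm.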

Future Trends in Policy Design for Artificial Intelligence

Future trends in policy design for artificial intelligence emphasize hybrid models that integrate deterministic and stochastic approaches to balance predictability and adaptability. Advances in reinforcement learning algorithms are driving the development of policies capable of dynamic decision-making under uncertainty, enhancing AI robustness in real-world scenarios. A growing focus on explainability and ethics is also shaping policies that ensure transparent, fair, and accountable AI behavior across diverse applications.

Deterministic Policy vs Stochastic Policy Infographic

techiny.com
