Adversarial robustness in artificial intelligence refers to a model's ability to maintain performance when exposed to maliciously crafted inputs designed to deceive it. It is often contrasted with generalization, which measures how well a model performs on unseen, natural data. Balancing the two objectives matters because improving adversarial robustness can reduce accuracy on clean data, which is why techniques such as adversarial training and regularization are used to manage the trade-off.

Table of Comparison

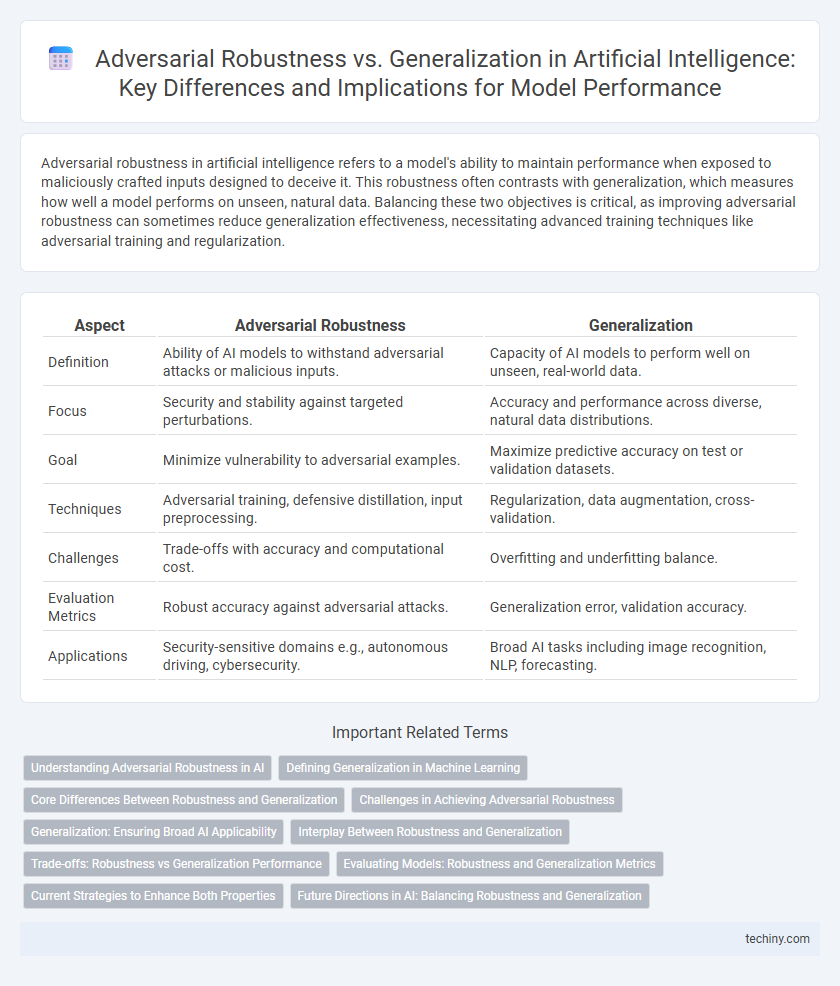

| Aspect | Adversarial Robustness | Generalization |
|---|---|---|
| Definition | Ability of AI models to withstand adversarial attacks or malicious inputs. | Capacity of AI models to perform well on unseen, real-world data. |
| Focus | Security and stability against targeted perturbations. | Accuracy and performance across diverse, natural data distributions. |
| Goal | Minimize vulnerability to adversarial examples. | Maximize predictive accuracy on test or validation datasets. |
| Techniques | Adversarial training, defensive distillation, input preprocessing. | Regularization, data augmentation, cross-validation. |
| Challenges | Trade-offs with accuracy and computational cost. | Overfitting and underfitting balance. |
| Evaluation Metrics | Robust accuracy against adversarial attacks. | Generalization error, validation accuracy. |
| Applications | Security-sensitive domains e.g., autonomous driving, cybersecurity. | Broad AI tasks including image recognition, NLP, forecasting. |

Understanding Adversarial Robustness in AI

Adversarial robustness in AI refers to a model's ability to maintain performance when exposed to intentionally perturbed inputs designed to deceive it. Robustness is usually measured by attacking the model with perturbations that approximate the worst case within a small allowed budget, which reveals inputs where a prediction can be flipped. Understanding the trade-offs between robustness and generalization helps develop AI systems that resist adversarial examples while performing well on unseen, naturally occurring data.
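To make "intentionally perturbed inputs" concrete, here is a minimal sketch of one widely used attack, the fast gradient sign method (FGSM), applied to a toy logistic-regression model. FGSM is not named in this article; it is swapped in here purely as an illustration, and the weights, input, and `epsilon` value are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_perturb(x, y, w, b, epsilon):
    """Shift x by epsilon per feature in the direction that increases the loss.

    x: (d,) input, y: label in {0, 1}, w: (d,) weights, b: bias.
    All values are illustrative assumptions, not taken from the article.
    """
    p = sigmoid(np.dot(w, x) + b)
    grad_x = (p - y) * w  # gradient of the cross-entropy loss w.r.t. x
    return x + epsilon * np.sign(grad_x)

# Toy model and input, chosen only for illustration.
w = np.array([1.0, -2.0, 0.5])
b = 0.0
x = np.array([0.2, -0.1, 0.4])
y = 1

x_adv = fgsm_perturb(x, y, w, b, epsilon=0.3)
clean_score = sigmoid(np.dot(w, x) + b)      # above 0.5: predicted class 1
adv_score = sigmoid(np.dot(w, x_adv) + b)    # below 0.5: prediction flipped
```

Each feature moves by at most `epsilon`, yet the predicted class changes, which is the kind of vulnerability that robustness evaluations look for.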

Defining Generalization in Machine Learning

Generalization in machine learning refers to a model's ability to perform accurately on unseen data, extending beyond the training set. It involves capturing underlying patterns without overfitting to noise or specific examples, enabling reliable predictions in real-world scenarios. Generalization is typically evaluated with metrics computed on held-out validation and test datasets, which show how consistently the model performs on inputs it was not trained on.
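One simple way to quantify this is the generalization gap: accuracy on the training set minus accuracy on held-out data. The sketch below assumes a fixed linear classifier and tiny hand-made datasets; the function names and all numbers are hypothetical.

```python
import numpy as np

def accuracy(w, b, X, y):
    """Fraction of rows in X whose predicted label (0 or 1) matches y."""
    preds = (X @ w + b > 0).astype(int)
    return float(np.mean(preds == y))

def generalization_gap(w, b, X_train, y_train, X_test, y_test):
    """Training accuracy minus held-out accuracy; larger means more overfitting."""
    return accuracy(w, b, X_train, y_train) - accuracy(w, b, X_test, y_test)

# Hypothetical linear model and data, for illustration only.
w = np.array([1.0, 1.0])
b = 0.0
X_train = np.array([[1.0, 1.0], [2.0, 0.0], [-1.0, -1.0], [0.0, -2.0]])
y_train = np.array([1, 1, 0, 0])
X_test = np.array([[1.0, 0.0], [-1.0, 0.0], [0.5, -1.0], [-0.5, 1.0]])
y_test = np.array([1, 0, 1, 0])

gap = generalization_gap(w, b, X_train, y_train, X_test, y_test)
```

Here the model fits every training point but gets only half of the held-out points right, so the gap is large. A gap near zero would suggest the model has learned patterns that carry over to unseen data.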

Core Differences Between Robustness and Generalization

Adversarial robustness in artificial intelligence focuses on a model's ability to maintain performance when faced with intentionally perturbed inputs designed to mislead it, emphasizing resistance to worst-case scenarios. Generalization measures how well a model performs on unseen, naturally occurring data drawn from the same distribution as the training set, stressing adaptability and predictive accuracy. The core difference is that robustness concerns stability under adversarial manipulation of an input, whereas generalization concerns consistent performance on typical, clean data.
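The distinction can be written as two risk definitions. The notation is an assumption introduced here, not taken from the article: $f$ is the model, $\ell$ a loss function, $\mathcal{D}$ the data distribution, and $\epsilon$ the perturbation budget under some norm $\|\cdot\|_p$.

$$
R_{\text{std}}(f) = \mathbb{E}_{(x,y)\sim\mathcal{D}}\big[\ell(f(x), y)\big]
$$

$$
R_{\text{adv}}(f) = \mathbb{E}_{(x,y)\sim\mathcal{D}}\Big[\max_{\|\delta\|_p \le \epsilon} \ell(f(x+\delta), y)\Big]
$$

Generalization is about how close the empirical version of $R_{\text{std}}$ on the training set is to its value on unseen data. Robustness is about keeping $R_{\text{adv}}$ low. Because $\delta = 0$ is always allowed, $R_{\text{adv}}(f) \ge R_{\text{std}}(f)$ for every model.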

Challenges in Achieving Adversarial Robustness

Achieving adversarial robustness in artificial intelligence systems presents significant challenges due to the inherent trade-offs with model generalization and the complexity of crafting defenses against adaptive attackers. Models that resist adversarial perturbations often experience reduced performance on clean data, highlighting difficulties in balancing robustness with overall accuracy. Moreover, the high dimensionality of input spaces and evolving attack strategies complicate the development of scalable and reliable robustness techniques.

Generalization: Ensuring Broad AI Applicability

Generalization in artificial intelligence means that models perform accurately on unseen data, which is what makes them applicable across diverse real-world scenarios. A model generalizes well when it has learned underlying patterns rather than memorizing training examples, so good generalization goes hand in hand with low overfitting. This capability is especially important when deploying AI in dynamic environments where data distributions continually evolve, a harder setting than unseen data drawn from the training distribution.

Interplay Between Robustness and Generalization

Adversarial robustness and generalization in artificial intelligence interact in complex ways: enhancing robustness against adversarial attacks can sometimes reduce accuracy on clean data. Research suggests that robustness techniques like adversarial training push models toward stable, perturbation-invariant features, and discarding less stable but still predictive features can affect how well they generalize across diverse input distributions. Understanding this trade-off is critical for developing AI systems that maintain high accuracy while resisting adversarial perturbations in real-world scenarios.

Trade-offs: Robustness vs Generalization Performance

Adversarial robustness in artificial intelligence often involves modifying models to withstand malicious perturbations, which can compromise generalization performance on clean data. The trade-off arises because a model trained to stay stable around every input tends to learn more conservative decision boundaries, which typically lowers accuracy on standard benchmarks. Optimizing this balance requires techniques like adversarial training combined with regularization strategies to limit the loss in generalization while enhancing resilience.

Evaluating Models: Robustness and Generalization Metrics

Evaluating models in artificial intelligence requires metrics that cover both adversarial robustness and generalization, such as accuracy under adversarial attacks and out-of-distribution performance. Robustness metrics often include adversarial accuracy and perturbation-based tests, while generalization is measured through validation accuracy on diverse datasets and domain adaptation benchmarks. Reporting both kinds of metric side by side shows whether a system maintains high performance against adversarial inputs as well as across varied real-world conditions.
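As a hedged illustration of reporting clean and adversarial accuracy together, the sketch below uses a linear classifier with labels in {-1, +1}. For a linear model, the worst-case L-infinity perturbation of size `epsilon` reduces each margin by exactly `epsilon` times the L1 norm of the weights, so robust accuracy can be computed without running an attack. The model, data, and budgets are hypothetical.

```python
import numpy as np

def clean_accuracy(w, b, X, y):
    """Fraction of points with a positive margin y * (w.x + b), y in {-1, +1}."""
    margins = y * (X @ w + b)
    return float(np.mean(margins > 0))

def robust_accuracy(w, b, X, y, epsilon):
    """Fraction of points still correct under any L-infinity shift <= epsilon.

    For a linear model the worst case lowers each margin by epsilon * ||w||_1.
    """
    margins = y * (X @ w + b)
    worst_case = margins - epsilon * np.sum(np.abs(w))
    return float(np.mean(worst_case > 0))

# Hypothetical model and evaluation set, for illustration only.
w = np.array([1.0, -1.0])
b = 0.0
X = np.array([[2.0, 0.0], [0.3, 0.0], [0.0, 1.0], [-1.0, 0.5]])
y = np.array([1, 1, -1, -1])

acc_clean = clean_accuracy(w, b, X, y)
acc_small = robust_accuracy(w, b, X, y, epsilon=0.25)
acc_large = robust_accuracy(w, b, X, y, epsilon=0.6)
```

On this toy set the model is perfect on clean inputs, while robust accuracy drops as the budget grows. Clean accuracy alone would hide that difference, which is why the two numbers are usually reported together. Non-linear models generally need an actual attack or a certification method to estimate the robust figure.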

Current Strategies to Enhance Both Properties

Current strategies to enhance adversarial robustness and generalization in artificial intelligence include adversarial training, which integrates adversarial examples into the training process to improve model resilience against perturbations. Robust optimization, together with regularization methods like weight decay and dropout, helps manage the trade-off between robustness and generalization by reducing overfitting. Recent advances also explore hybrid approaches that combine feature denoising with certified defenses, aiming to strengthen robustness while maintaining high accuracy on clean data.
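The following is a minimal sketch of adversarial training combined with weight decay, using logistic regression and FGSM-style perturbations as stand-ins. The article does not specify an attack or a model, so these choices, the hyperparameters, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def adversarial_train(X, y, epsilon=0.5, lr=0.1, weight_decay=1e-3, steps=200):
    """Train logistic regression on FGSM-perturbed inputs with L2 weight decay.

    X: (n, d) inputs, y: (n,) labels in {0, 1}. Hyperparameters are illustrative.
    """
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(steps):
        # Craft adversarial examples against the current parameters.
        p = sigmoid(X @ w + b)
        grad_x = (p - y)[:, None] * w[None, :]
        X_adv = X + epsilon * np.sign(grad_x)

        # Gradient step on the adversarial batch, plus weight decay on w.
        err = sigmoid(X_adv @ w + b) - y
        grad_w = X_adv.T @ err / n + weight_decay * w
        grad_b = float(np.mean(err))
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Synthetic two-class data, generated only for this example.
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(2.0, 0.5, size=(50, 2)),
    rng.normal(-2.0, 0.5, size=(50, 2)),
])
y = np.concatenate([np.ones(50), np.zeros(50)])

w, b = adversarial_train(X, y)
train_acc = float(np.mean((X @ w + b > 0) == (y == 1)))
```

The inner step plays the attacker and the outer step updates the model on the perturbed batch, which is the basic pattern of adversarial training. The `weight_decay` term is the regularization piece. Stronger attacks and deep networks follow the same loop structure but are considerably more expensive.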

Future Directions in AI: Balancing Robustness and Generalization

Future directions in AI emphasize developing models that balance adversarial robustness and generalization to ensure reliable performance across diverse, real-world scenarios. Research into advanced regularization techniques and adaptive training algorithms aims to harden models against adversarial attacks while preserving their ability to generalize to unseen data. Integrating robust optimization with meta-learning frameworks is one promising pathway toward AI systems that maintain high accuracy and stability in dynamic environments.

Adversarial Robustness vs Generalization Infographic

techiny.com
