Regularization techniques, such as L1 and L2, prevent overfitting by adding penalty terms to the loss function, encouraging simpler models. Normalization methods, like batch normalization and layer normalization, improve training stability and speed by re-centering and re-scaling input features or activations. Both approaches enhance model generalization but target different aspects: regularization controls model complexity, while normalization adjusts data distribution during training.

Table of Comparison

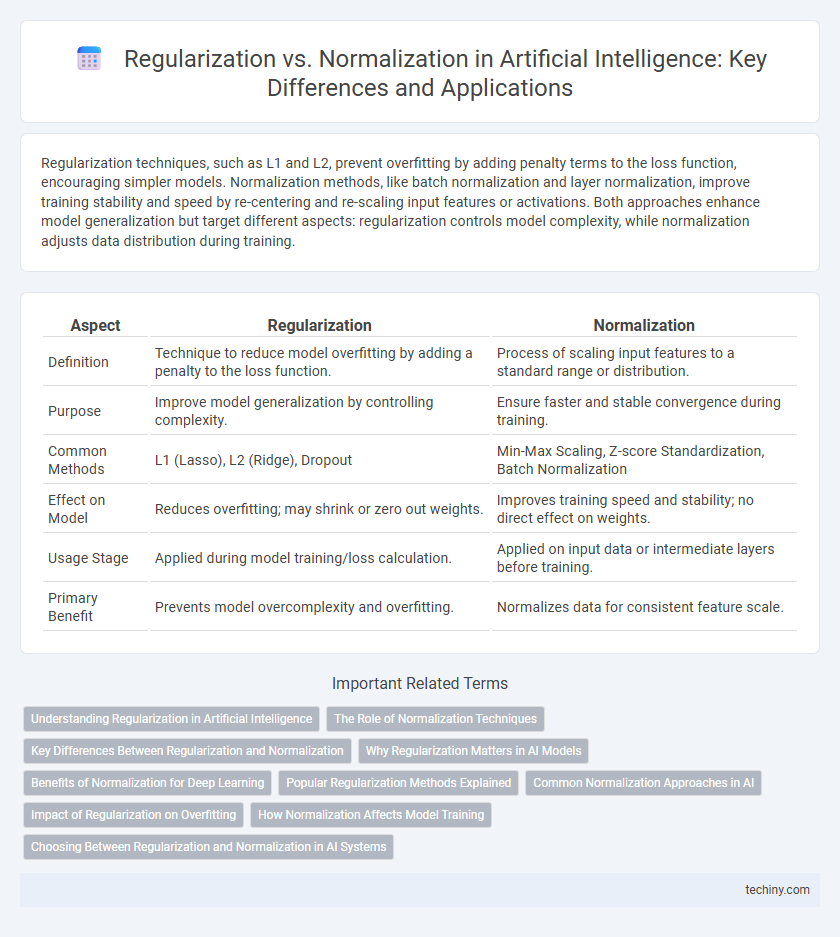

| Aspect | Regularization | Normalization |
|---|---|---|
| Definition | Technique that reduces overfitting by adding a penalty to the loss function or otherwise constraining the model. | Process of rescaling input features or layer activations to a standard range or distribution. |
| Purpose | Improve generalization by controlling model complexity. | Make training converge faster and more stably. |
| Common Methods | L1 (Lasso), L2 (Ridge), Dropout | Min-Max Scaling, Z-score Standardization, Batch Normalization |
| Effect on Model | Reduces overfitting; may shrink weights or drive them to zero. | Improves training speed and stability; does not penalize weights directly. |
| Usage Stage | Applied during training, typically in the loss calculation. | Applied to input data during preprocessing, or to intermediate layers during both training and inference. |
| Primary Benefit | Prevents excessive model complexity and overfitting. | Keeps features and activations on a consistent scale. |

Understanding Regularization in Artificial Intelligence

Regularization in artificial intelligence mitigates overfitting by adding a penalty term to the loss function, promoting simpler models that generalize better to unseen data. Common regularization techniques include L1 (Lasso), L2 (Ridge), and Elastic Net, which constrain model complexity through weight shrinkage. Effective regularization improves model robustness and predictive performance, especially in deep learning and complex neural networks.
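
As a minimal sketch of how these penalties enter the loss, the NumPy example below adds an L1, L2, or mixed penalty to a mean squared error. The function names, the `lam` strength, and the `l1_ratio` mixing parameter are illustrative assumptions, not part of any particular library.

```python
import numpy as np

def mse(w, X, y):
    """Mean squared error of a linear model y_hat = X @ w."""
    residual = X @ w - y
    return float(np.mean(residual ** 2))

def l1_penalty(w):
    """Sum of absolute weights (Lasso-style penalty)."""
    return float(np.sum(np.abs(w)))

def l2_penalty(w):
    """Sum of squared weights (Ridge-style penalty)."""
    return float(np.sum(w ** 2))

def regularized_loss(w, X, y, lam=0.1, l1_ratio=0.0):
    """Data loss plus a weighted penalty.

    l1_ratio=1.0 gives a pure L1 penalty, l1_ratio=0.0 a pure L2 penalty,
    and values in between give an Elastic-Net-style mix (hypothetical
    parameterization chosen for this sketch).
    """
    penalty = l1_ratio * l1_penalty(w) + (1.0 - l1_ratio) * l2_penalty(w)
    return mse(w, X, y) + lam * penalty

# Tiny hand-checkable example.
X = np.array([[1.0, 2.0], [3.0, 4.0]])
y = np.array([1.0, 2.0])
w = np.array([0.5, -0.5])

plain = mse(w, X, y)
with_l2 = regularized_loss(w, X, y, lam=0.1, l1_ratio=0.0)
with_l1 = regularized_loss(w, X, y, lam=0.1, l1_ratio=1.0)
```

The penalized losses are always at least as large as the plain data loss, so an optimizer minimizing them is pushed toward smaller weights.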

The Role of Normalization Techniques

Normalization techniques in artificial intelligence improve model training by rescaling input features or layer activations to a common scale, which helps stabilize gradients. Methods such as Batch Normalization and Layer Normalization speed up convergence by keeping the distribution of activations consistent across layers; Batch Normalization was originally motivated as a way to reduce internal covariate shift. These techniques can also have a mild regularizing effect, although their main purpose is stable and efficient training rather than preventing overfitting.

Key Differences Between Regularization and Normalization

Regularization and normalization serve distinct purposes in artificial intelligence model training. Regularization aims to prevent overfitting by adding a penalty to the loss function, while normalization rescales input features or activations to improve convergence and training stability. L1 regularization encourages sparse weights and L2 regularization applies weight decay, whereas normalization methods such as Batch Normalization or Layer Normalization standardize activations within the network layers. Understanding these differences enables more effective model optimization and better generalization on unseen data.

Why Regularization Matters in AI Models

Regularization is crucial in AI models because it prevents overfitting: by adding a penalty for complexity, it helps the model generalize to unseen data. Techniques like L1 and L2 regularization reduce model variance, improving robustness and predictive accuracy. Unlike normalization, which rescales data or activations, regularization changes the training objective itself, making it essential for building reliable AI systems.

Benefits of Normalization for Deep Learning

Normalization enhances deep learning by stabilizing the learning process and accelerating convergence, which improves model performance and generalization. Techniques like Batch Normalization reduce internal covariate shift, allowing higher learning rates and mitigating the risk of vanishing or exploding gradients. This results in more robust neural networks with faster training times and improved accuracy across various AI applications.

Popular Regularization Methods Explained

Popular regularization methods in artificial intelligence include L1 and L2 regularization, which control model complexity by adding penalty terms to the loss function. Dropout is another widely used technique that randomly deactivates neurons during training to prevent overfitting. These methods enhance model generalization by reducing variance and improving robustness in neural network learning.
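
The dropout idea can be sketched in a few lines of NumPy. This is a simplified "inverted dropout" variant, assuming a `drop_prob` strictly below 1; the function name and parameters are hypothetical and not taken from a specific framework.

```python
import numpy as np

def dropout(activations, drop_prob, rng, training=True):
    """Randomly zero out units during training (inverted dropout).

    Surviving units are scaled by 1 / keep_prob so the expected activation
    is unchanged, which lets inference skip dropout entirely.
    """
    if not training or drop_prob == 0.0:
        return activations
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    keep_prob = 1.0 - drop_prob
    # Boolean mask: True where the unit is kept.
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
a = np.ones((4, 5))

train_out = dropout(a, drop_prob=0.5, rng=rng, training=True)
eval_out = dropout(a, drop_prob=0.5, rng=rng, training=False)
```

With `drop_prob=0.5`, each entry of `train_out` is either 0 (dropped) or 2 (kept and rescaled), while `eval_out` is returned unchanged.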

Common Normalization Approaches in AI

Batch normalization, layer normalization, and instance normalization are common techniques used to stabilize and accelerate neural network training by normalizing layer activations. Batch normalization normalizes each feature across the examples in a mini-batch, which improves gradient flow. Layer normalization instead normalizes across the features of a single training example, so it does not depend on batch size and works well for recurrent neural networks. Instance normalization, used primarily in style transfer, normalizes each channel of each individual example over its spatial dimensions, which helps preserve content while altering style.
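
The three approaches differ mainly in which axes the mean and variance are computed over. The NumPy sketch below illustrates that difference only; it assumes inputs shaped `(batch, features)` or `(batch, channels, height, width)` and deliberately omits the learned scale and shift parameters and the running statistics that real implementations use at inference time.

```python
import numpy as np

EPS = 1e-5  # small constant for numerical stability (assumed value)

def batch_norm(x):
    """x: (batch, features). Statistics per feature, across the batch."""
    mean = x.mean(axis=0, keepdims=True)
    var = x.var(axis=0, keepdims=True)
    return (x - mean) / np.sqrt(var + EPS)

def layer_norm(x):
    """x: (batch, features). Statistics per example, across its features."""
    mean = x.mean(axis=1, keepdims=True)
    var = x.var(axis=1, keepdims=True)
    return (x - mean) / np.sqrt(var + EPS)

def instance_norm(x):
    """x: (batch, channels, height, width).

    Statistics per example and per channel, across spatial positions.
    """
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + EPS)

rng = np.random.default_rng(0)
x2d = rng.normal(loc=3.0, scale=2.0, size=(8, 16))
x4d = rng.normal(loc=3.0, scale=2.0, size=(2, 3, 4, 4))

bn = batch_norm(x2d)
ln = layer_norm(x2d)
inorm = instance_norm(x4d)
```

After each call, the mean along the normalized axes is approximately zero: per column for `bn`, per row for `ln`, and per `(example, channel)` pair for `inorm`.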

Impact of Regularization on Overfitting

Regularization techniques such as L1 and L2 add penalty terms to the loss function, effectively controlling model complexity and reducing overfitting in artificial intelligence models. By constraining the magnitudes of model parameters, regularization improves generalization on unseen data, leading to more robust predictions. This approach is critical in deep learning, where large-scale models with numerous parameters are prone to overfitting without proper regularization.
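
To illustrate how a penalty constrains parameter magnitudes, the sketch below fits a linear model with an L2 penalty using the closed-form ridge solution and records the weight norm for increasing penalty strengths. The synthetic data, the "true" weights, and the `lam` values are assumptions made up for this example.

```python
import numpy as np

def ridge_weights(X, y, lam):
    """Closed-form minimizer of ||X w - y||^2 + lam * ||w||^2."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

# Synthetic regression problem (illustrative only).
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 5))
true_w = np.array([3.0, -2.0, 0.0, 1.0, 0.5])
y = X @ true_w + 0.1 * rng.normal(size=20)

lams = (0.0, 1.0, 10.0, 100.0)
norms = [float(np.linalg.norm(ridge_weights(X, y, lam))) for lam in lams]
```

The entries of `norms` decrease as `lam` grows: a stronger penalty pulls the fitted weights closer to zero, trading some fit on the training data for a simpler model.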

How Normalization Affects Model Training

Normalization adjusts feature scales to a consistent range, enhancing gradient descent efficiency and leading to faster convergence during model training. It reduces internal covariate shift by ensuring input distributions maintain stability across layers, which improves the model's ability to learn generalized patterns. This process ultimately results in improved training stability and often higher accuracy in deep learning models.
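
For input features, two common ways to bring scales into a consistent range are min-max scaling and z-score standardization. The NumPy sketch below applies both column by column; it assumes no column is constant (which would cause a division by zero), and the sample values are invented for illustration.

```python
import numpy as np

def min_max_scale(X):
    """Rescale each column linearly to the range [0, 1]."""
    lo = X.min(axis=0)
    hi = X.max(axis=0)
    return (X - lo) / (hi - lo)

def z_score(X):
    """Shift each column to zero mean and scale it to unit standard deviation."""
    return (X - X.mean(axis=0)) / X.std(axis=0)

# Two features on very different scales.
X = np.array([
    [1.0, 1000.0],
    [2.0, 3000.0],
    [3.0, 5000.0],
])

X_minmax = min_max_scale(X)
X_z = z_score(X)
```

Before scaling, the second feature is thousands of times larger than the first and would dominate gradient updates; after either transformation, both columns occupy the same range. In practice the statistics are computed on the training set and reused for validation and test data.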

Choosing Between Regularization and Normalization in AI Systems

Choosing between regularization and normalization in AI systems depends on the specific challenge being addressed, and the two are not mutually exclusive. Regularization techniques such as L1 and L2 reduce overfitting by penalizing large weights, improving generalization on unseen data. Normalization methods like batch normalization and layer normalization stabilize and accelerate training by standardizing input features or activations, thereby improving convergence. Optimal model performance often requires combining both approaches and tuning their hyperparameters to the dataset characteristics and the complexity of the model architecture.

Regularization vs Normalization Infographic

techiny.com
