Adversarial training enhances model robustness by exposing neural networks to deliberately crafted perturbations, improving their ability to withstand malicious inputs. Regularization techniques, such as L2 and dropout, reduce overfitting by constraining model complexity and promoting generalization on unseen data. Combining adversarial training with regularization offers a balanced approach, strengthening defense mechanisms while maintaining overall model performance.

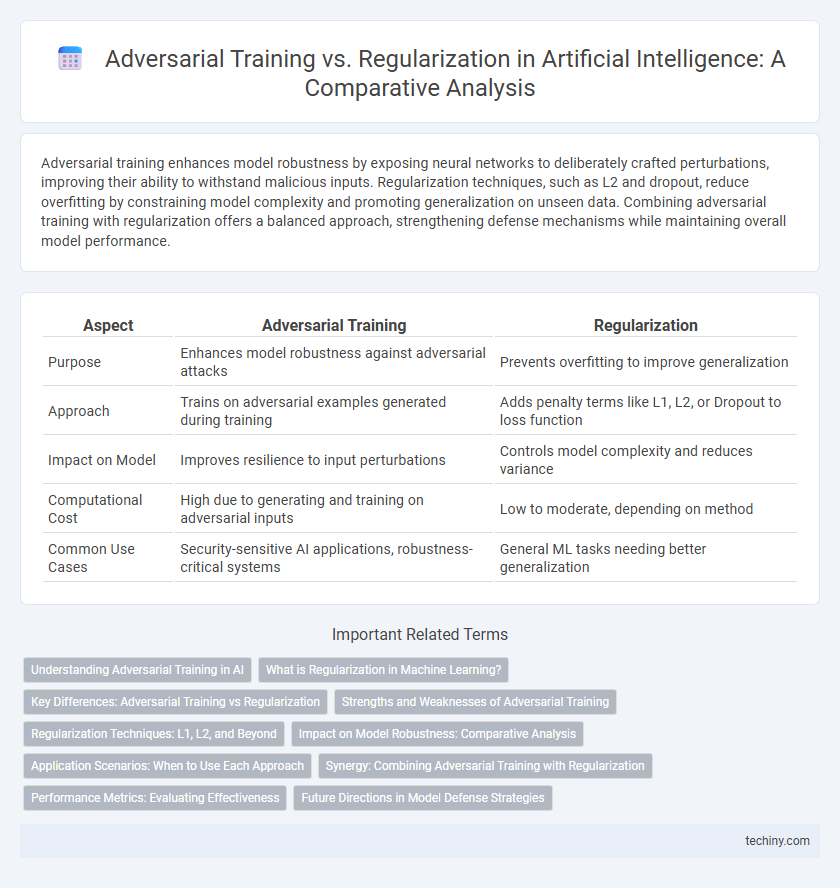

Table of Comparison

| Aspect | Adversarial Training | Regularization |
|---|---|---|
| Purpose | Enhances model robustness against adversarial attacks | Prevents overfitting to improve generalization |
| Approach | Trains on adversarial examples generated during training | Adds penalty terms such as L1 or L2 to the loss function, or injects noise during training, as dropout does |
| Impact on Model | Improves resilience to input perturbations | Controls model complexity and reduces variance |
| Computational Cost | High, due to generating and training on adversarial inputs | Low to moderate, depending on method |
| Common Use Cases | Security-sensitive AI applications, robustness-critical systems | General ML tasks needing better generalization |

Understanding Adversarial Training in AI

Adversarial training enhances AI robustness by incorporating adversarial examples into the training data, forcing models to learn features that remain stable under malicious perturbations. Unlike regularization, which primarily prevents overfitting through techniques like L2 weight penalties and dropout, adversarial training directly targets model vulnerability to adversarial inputs, improving security and accuracy under attack. This approach is critical in domains such as computer vision and natural language processing, where adversarial manipulation can compromise model performance and reliability.
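To make "adversarial example" concrete, here is a minimal sketch of one well-known way to craft one, the fast gradient sign method (FGSM), applied to a tiny logistic-regression model. The weights, the input, the budget `eps`, and the helper names (`predict_proba`, `fgsm`) are illustrative assumptions, not taken from any particular library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a linear logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(g):
    return (g > 0) - (g < 0)

def fgsm(w, b, x, y, eps):
    """One-step attack: shift every feature by eps in the direction
    that increases the binary cross-entropy loss."""
    p = predict_proba(w, b, x)
    # For this model, d(loss)/d(x_i) = (p - y) * w_i
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input (true label y = 1)
w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1

x_adv = fgsm(w, b, x, y, eps=0.5)
clean_p = predict_proba(w, b, x)     # above 0.5: predicted class 1
adv_p = predict_proba(w, b, x_adv)   # below 0.5: prediction flips
print(x_adv, round(clean_p, 3), round(adv_p, 3))
```

Adversarial training then feeds inputs like `x_adv`, paired with the original label, back into the training step.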

What is Regularization in Machine Learning?

Regularization in machine learning is a technique used to prevent overfitting by adding a penalty term to the loss function, which constrains model complexity. Common types include L1 (Lasso) and L2 (Ridge) regularization, which promote sparsity and reduce coefficient magnitude respectively. This approach improves generalization by discouraging the model from fitting noise in the training data.
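As a rough sketch, the penalized objective described above can be written as follows. The symbols are assumptions introduced here for illustration: $\theta$ for the model parameters, $\mathcal{L}$ for the unpenalized training loss, and $\lambda \ge 0$ for the penalty strength.

$$
\mathcal{L}_{\text{reg}}(\theta) = \mathcal{L}(\theta) + \lambda\,\Omega(\theta),
\qquad
\Omega_{L1}(\theta) = \sum_i |\theta_i|,
\qquad
\Omega_{L2}(\theta) = \sum_i \theta_i^2
$$

Setting $\lambda = 0$ recovers the unpenalized loss; larger values trade training fit for smaller or sparser weights.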

Key Differences: Adversarial Training vs Regularization

Adversarial training enhances model robustness by exposing the model to intentionally perturbed inputs during training, aiming to defend against adversarial attacks; it may also help under some distribution shifts, though that is not its primary goal. Regularization techniques, such as L1 or L2 regularization, focus on preventing overfitting by penalizing model complexity and encouraging simpler representations for better generalization on unseen data. The key difference lies in adversarial training's focus on robustness through worst-case perturbations, while regularization emphasizes model simplicity to mitigate overfitting.
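One common way to formalize this contrast is to compare the two training objectives side by side. The notation is an assumption made here for illustration: $f_\theta$ is the model, $\ell$ the per-example loss, $\delta$ a perturbation bounded by a budget $\epsilon$ (an $\ell_\infty$ bound is used as an example), $\Omega$ a penalty on the parameters, and $\lambda \ge 0$ its strength.

$$
\underbrace{\min_\theta \; \mathbb{E}_{(x,y)}\Big[\max_{\|\delta\|_\infty \le \epsilon} \ell\big(f_\theta(x+\delta),\, y\big)\Big]}_{\text{adversarial training}}
\qquad\text{versus}\qquad
\underbrace{\min_\theta \; \mathbb{E}_{(x,y)}\big[\ell\big(f_\theta(x),\, y\big)\big] + \lambda\,\Omega(\theta)}_{\text{regularization}}
$$

The left objective changes the data term by replacing each input with its worst case inside the budget; the right objective leaves the data untouched and adds a cost on the parameters.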

Strengths and Weaknesses of Adversarial Training

Adversarial training enhances model robustness by exposing neural networks to perturbed inputs during training, significantly improving resistance to adversarial attacks. Its main strength lies in improving generalization to unseen adversarial examples of the kind used during training, but it often requires substantial computational resources, since adversarial examples must be generated at every training step, and it can degrade performance on clean data. Despite these challenges, adversarial training remains a critical method for developing secure AI systems in high-stakes environments.

Regularization Techniques: L1, L2, and Beyond

Regularization techniques such as L1 and L2 play a crucial role in preventing overfitting by adding penalty terms to the loss function, encouraging simpler models with better generalization. L1 regularization promotes sparsity by driving some weights to zero, effectively performing feature selection, while L2 regularization shrinks the magnitude of all weights, leading to smoother models. Beyond these, Elastic Net combines L1 and L2 penalties, dropout regularizes by randomly deactivating units during training, and batch normalization, although mainly an optimization aid, can have a mild regularizing effect.
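The difference between L1 and L2 is easiest to see on the weight update itself. The sketch below isolates the effect of each penalty on a hypothetical weight vector, ignoring the data loss: L2 is applied as a plain gradient step, and L1 as a soft-thresholding (proximal) step, which is one standard way to handle its non-differentiable point at zero. The function names, weights, learning rate, and penalty strengths are illustrative assumptions.

```python
import math

def l2_step(w, lr, lam):
    """Gradient step on lam * sum(w_i^2): every weight is scaled
    by the same factor, so small weights shrink but stay nonzero."""
    return [wi * (1.0 - 2.0 * lr * lam) for wi in w]

def l1_step(w, lr, lam):
    """Soft-thresholding step for lam * sum(|w_i|): every magnitude
    drops by lr * lam, and weights below that threshold become zero."""
    t = lr * lam
    return [math.copysign(max(abs(wi) - t, 0.0), wi) for wi in w]

def elastic_net_penalty(w, lam1, lam2):
    """Elastic Net: weighted sum of the L1 and squared L2 penalties."""
    return lam1 * sum(abs(wi) for wi in w) + lam2 * sum(wi * wi for wi in w)

# Hypothetical weights: one large, two small
w = [1.0, 0.05, -0.02]
after_l2 = l2_step(w, lr=0.1, lam=1.0)
after_l1 = l1_step(w, lr=0.1, lam=1.0)

print("L2:", after_l2)   # all three weights remain nonzero
print("L1:", after_l1)   # the two small weights are zeroed out
print("Elastic Net penalty:", elastic_net_penalty([1.0, -2.0], 0.5, 0.25))
```

Repeated over many updates, the L1 step is what produces the sparse, feature-selecting solutions described above, while the L2 step only scales weights toward zero.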

Impact on Model Robustness: Comparative Analysis

Adversarial training significantly enhances model robustness by exposing neural networks to perturbations during training, enabling them to resist adversarial attacks more effectively than models relying on traditional regularization techniques such as L2 or dropout. Regularization methods primarily prevent overfitting by penalizing model complexity, but they often fail to address vulnerabilities against carefully crafted adversarial examples. Comparative studies reveal that adversarial training improves robustness metrics substantially, reducing adversarial error rates while maintaining acceptable standard accuracy, a balance regularization alone struggles to achieve.

Application Scenarios: When to Use Each Approach

Adversarial training excels in security-critical applications such as autonomous driving and fraud detection, where models must withstand malicious inputs. Regularization is better suited to improving generalization in tasks like image recognition and natural language processing when overfitting is the primary concern. Choosing between adversarial training and regularization depends on whether the primary goal is defense against adversarial examples or improving standard predictive performance.

Synergy: Combining Adversarial Training with Regularization

Combining adversarial training with regularization techniques enhances model robustness and generalization by mitigating overfitting and improving resistance to adversarial attacks. Regularization methods like weight decay and dropout complement adversarial perturbations by enforcing smoother decision boundaries and reducing model complexity. This synergy leverages the strengths of both approaches, resulting in AI systems capable of maintaining high accuracy under adversarial conditions while retaining stability on clean data.
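A minimal sketch of how the two can be combined in one training loop, assuming a toy logistic-regression model, a one-step FGSM attack as the source of adversarial examples, and L2 weight decay as the regularizer. The dataset, the hyperparameters, and the function names (`train`, `accuracy`) are hypothetical choices for illustration, not a recommended recipe.

```python
import math

def sigmoid(z):
    # Numerically stable for large |z|
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def sign(g):
    return (g > 0) - (g < 0)

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def train(data, eps, weight_decay, lr=0.1, epochs=200):
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            # Attack step: FGSM perturbation against the current model
            p = sigmoid(dot(w, x) + b)
            x_adv = [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]
            # Training step: SGD on the adversarial example,
            # with an L2 weight-decay term added to the gradient
            p_adv = sigmoid(dot(w, x_adv) + b)
            w = [wi - lr * ((p_adv - y) * xi + weight_decay * wi)
                 for wi, xi in zip(w, x_adv)]
            b -= lr * (p_adv - y)
    return w, b

def accuracy(w, b, data):
    hits = sum((dot(w, x) + b > 0) == (y == 1) for x, y in data)
    return hits / len(data)

# Hypothetical toy data: class 1 near (1, 1), class 0 near (-1, -1)
data = []
for dx in (-0.3, 0.0, 0.3):
    for dy in (-0.3, 0.0, 0.3):
        data.append(([1 + dx, 1 + dy], 1))
        data.append(([-1 - dx, -1 - dy], 0))

w, b = train(data, eps=0.2, weight_decay=0.01)
print("weights:", w, "bias:", b, "clean accuracy:", accuracy(w, b, data))
```

Raising `weight_decay` keeps the weights smaller, while `eps` controls how aggressive the training-time perturbations are; in practice both would be tuned against clean and robust validation metrics.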

Performance Metrics: Evaluating Effectiveness

Adversarial training enhances model robustness by explicitly incorporating adversarial examples during training, which improves resilience to perturbations as measured by metrics like robust accuracy (accuracy on adversarially perturbed inputs) and perturbation sensitivity. Regularization techniques such as L2 penalties and dropout primarily reduce overfitting, improving generalization as reflected in validation accuracy and loss stability, but they may not address adversarial vulnerability directly. Evaluations that report robust accuracy, clean accuracy, and adversarial loss together show that adversarial training often outperforms regularization in defending against adversarial attacks while maintaining competitive predictive performance.
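To illustrate how clean and robust accuracy differ, the sketch below evaluates a hypothetical linear classifier with labels in {-1, +1}. It relies on a property specific to linear models: the worst-case perturbation with every feature changed by at most `eps` lowers the signed margin by exactly `eps` times the L1 norm of the weights, so robust accuracy can be computed in closed form. For deep networks no such formula exists, and robust accuracy is instead estimated by running attacks. The data, weights, and function names are illustrative assumptions.

```python
def margin(w, b, x, y):
    """Signed margin: positive when the example is classified correctly."""
    return y * (sum(wi * xi for wi, xi in zip(w, x)) + b)

def clean_accuracy(w, b, data):
    return sum(margin(w, b, x, y) > 0 for x, y in data) / len(data)

def robust_accuracy(w, b, data, eps):
    """Fraction of examples still correct under the worst-case
    perturbation where each feature moves by at most eps."""
    l1_norm = sum(abs(wi) for wi in w)
    return sum(margin(w, b, x, y) - eps * l1_norm > 0
               for x, y in data) / len(data)

# Hypothetical model and evaluation set
w, b = [1.0, -1.0], 0.0
data = [
    ([2.0, 0.0], 1),     # far from the decision boundary
    ([0.3, 0.1], 1),     # close to the boundary
    ([-1.5, 0.5], -1),   # far from the boundary
    ([-0.2, 0.0], -1),   # close to the boundary
]

print("clean accuracy: ", clean_accuracy(w, b, data))         # 1.0
print("robust accuracy:", robust_accuracy(w, b, data, 0.25))  # 0.5
```

The two examples near the boundary are classified correctly on clean inputs but can be flipped within the budget, which is the gap between clean and robust accuracy that these metrics are meant to expose.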

Future Directions in Model Defense Strategies

Future directions in model defense strategies emphasize enhancing adversarial training by integrating dynamic regularization techniques that adapt to evolving attack patterns. Research explores combining robust optimization with novel regularization frameworks to improve model generalization under adversarial conditions. Advances in meta-learning and self-supervised defense mechanisms aim to create resilient AI systems capable of anticipating and mitigating emerging threats effectively.

Adversarial Training vs Regularization Infographic

techiny.com
