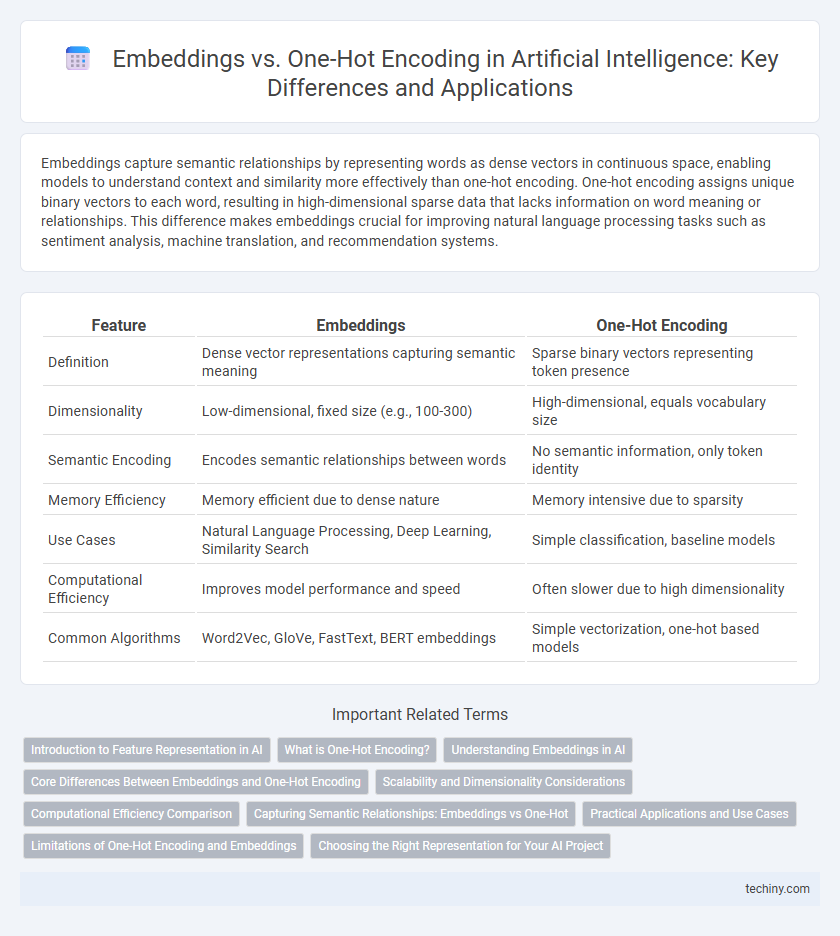

Embeddings capture semantic relationships by representing words as dense vectors in continuous space, enabling models to understand context and similarity more effectively than one-hot encoding. One-hot encoding assigns unique binary vectors to each word, resulting in high-dimensional sparse data that lacks information on word meaning or relationships. This difference makes embeddings crucial for improving natural language processing tasks such as sentiment analysis, machine translation, and recommendation systems.

Table of Comparison

| Feature | Embeddings | One-Hot Encoding |

|---|---|---|

| Definition | Dense vector representations capturing semantic meaning | Sparse binary vectors representing token presence |

| Dimensionality | Low-dimensional, fixed size (e.g., 100-300) | High-dimensional, equals vocabulary size |

| Semantic Encoding | Encodes semantic relationships between words | No semantic information, only token identity |

| Memory Efficiency | Memory efficient due to dense nature | Memory intensive due to sparsity |

| Use Cases | Natural Language Processing, Deep Learning, Similarity Search | Simple classification, baseline models |

| Computational Efficiency | Improves model performance and speed | Often slower due to high dimensionality |

| Common Algorithms | Word2Vec, GloVe, FastText, BERT embeddings | Simple vectorization, one-hot based models |

Introduction to Feature Representation in AI

Embeddings transform categorical data into dense, continuous vectors that capture semantic relationships, enabling AI models to understand context and similarity effectively. One-hot encoding represents categories as sparse vectors with binary values, which can lead to high-dimensionality and lack of relational information between features. Leveraging embeddings in feature representation enhances model performance by providing richer, compact data representations crucial for natural language processing and recommendation systems.

What is One-Hot Encoding?

One-hot encoding is a technique used in artificial intelligence to represent categorical data as binary vectors, where each category is converted into a vector with a single high (1) value and all other positions set to low (0). This method helps algorithms process categorical variables by transforming them into a numerical format suitable for machine learning models. However, one-hot encoding often results in sparse, high-dimensional data, which can lead to inefficiencies in storage and computation compared to dense representations like embeddings.

Understanding Embeddings in AI

Embeddings in AI transform categorical data into dense vectors that capture semantic relationships, enabling models to understand context and similarity between items more effectively than sparse one-hot encoding. Unlike one-hot vectors, embeddings reduce dimensionality and enhance generalization by placing related entities closer in the vector space. This approach is fundamental in natural language processing tasks, such as word representation, where capturing nuanced meanings improves model performance.

Core Differences Between Embeddings and One-Hot Encoding

Embeddings convert words into dense, continuous vector representations that capture semantic relationships and contextual meaning, while one-hot encoding represents words as sparse, high-dimensional vectors with a single active element and no inherent semantic information. Embeddings enable models to generalize better by learning similarities between words, whereas one-hot encoding treats each word as completely distinct and unrelated. Embeddings reduce dimensionality and improve computational efficiency compared to one-hot encoding's large and sparse vectors.

Scalability and Dimensionality Considerations

Embeddings offer superior scalability compared to one-hot encoding by representing words in dense, low-dimensional vectors that capture semantic relationships, reducing memory consumption significantly. One-hot encoding results in sparse, high-dimensional vectors equal to the vocabulary size, which leads to computational inefficiency and poor scalability in large datasets. Dimensionality reduction in embeddings enables faster training and improved model generalization, making them preferable for handling extensive corpora and complex AI tasks.

Computational Efficiency Comparison

Embeddings significantly reduce dimensionality by representing words as dense vectors, enabling faster processing and lower memory consumption compared to one-hot encoding's sparse and high-dimensional vectors. One-hot encoding requires computational resources that grow linearly with vocabulary size, leading to scalability issues in large datasets. Embeddings leverage continuous vector spaces, which optimize operations like similarity calculation and model training, resulting in enhanced computational efficiency for natural language processing tasks.

Capturing Semantic Relationships: Embeddings vs One-Hot

Embeddings capture semantic relationships by representing words as dense vectors in continuous space, enabling algorithms to identify contextual similarities and analogies. One-hot encoding represents words as sparse, high-dimensional vectors with no inherent semantic meaning, making it unable to capture relationships between words. Consequently, embeddings significantly enhance natural language processing tasks by modeling word semantics more effectively than one-hot encoding.

Practical Applications and Use Cases

Embeddings provide dense vector representations that capture semantic relationships between words, making them ideal for natural language processing tasks such as sentiment analysis, machine translation, and recommendation systems. One-hot encoding, while simple and interpretable, is limited by high dimensionality and sparsity, often used in smaller vocabulary settings or when interpretability is crucial, such as categorical feature encoding in traditional machine learning models. Practical applications favor embeddings in deep learning frameworks for text classification and search engines due to their efficiency in handling contextual meaning and analogies.

Limitations of One-Hot Encoding and Embeddings

One-Hot Encoding suffers from high dimensionality and sparsity, making it inefficient for large vocabularies and unable to capture semantic relationships between words. Embeddings mitigate these issues by representing words in dense, low-dimensional vectors that encode semantic meaning and contextual similarity, but they can be computationally expensive to train and require large datasets to generate accurate representations. Both methods face challenges in handling out-of-vocabulary words and context-dependent meanings, impacting their effectiveness in complex natural language processing tasks.

Choosing the Right Representation for Your AI Project

Embeddings capture semantic relationships by mapping words into dense vectors in continuous vector space, enhancing performance in natural language processing tasks. One-hot encoding represents words as sparse vectors with binary values, suitable for simpler models with smaller vocabularies but often leads to high dimensionality and inefficiency. Selecting the right representation depends on dataset size, computational resources, and the complexity required to capture contextual meaning in AI projects.

Embeddings vs One-Hot Encoding Infographic

techiny.com

techiny.com