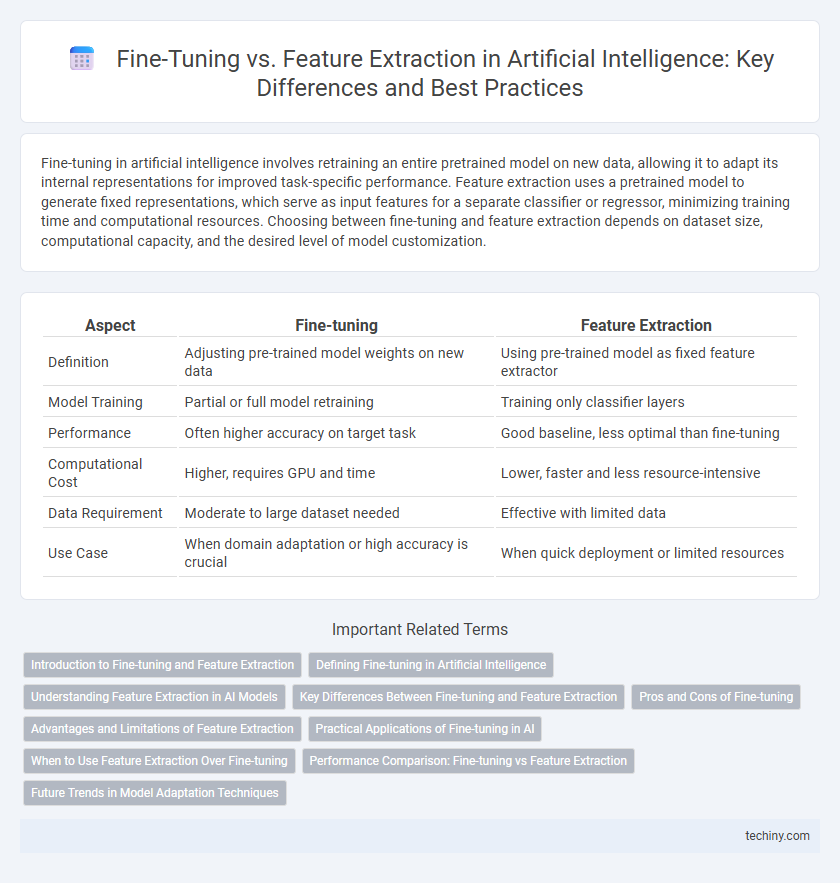

Fine-tuning in artificial intelligence updates some or all of a pretrained model's weights on new data, allowing it to adapt its internal representations for better task-specific performance. Feature extraction instead keeps the pretrained model frozen and uses its outputs as fixed representations, which serve as input features for a separate classifier or regressor; this minimizes training time and computational cost. Choosing between the two depends on dataset size, available compute, and how much the model needs to be customized.

Table of Comparison

| Aspect | Fine-tuning | Feature Extraction |
|---|---|---|
| Definition | Adjusting pre-trained model weights on new data | Using a pre-trained model as a fixed feature extractor |
| Model Training | Partial or full retraining of the model | Training only the new classifier layers |
| Performance | Often higher accuracy on the target task | Good baseline, usually below fine-tuning |
| Computational Cost | Higher; typically requires GPUs and more time | Lower; faster and less resource-intensive |
| Data Requirement | Moderate to large dataset | Effective with limited data |
| Use Case | When domain adaptation or high accuracy is crucial | When quick deployment is needed or resources are limited |

Introduction to Fine-tuning and Feature Extraction

Fine-tuning adapts a pre-trained neural network by updating its weights on a new dataset to improve task-specific performance, which makes it highly effective for transfer learning in artificial intelligence. Feature extraction leverages the pre-trained model's learned representations by freezing its layers and feeding their outputs as input features to a new classifier, which reduces training time and computational cost. Both techniques play critical roles in optimizing AI models: fine-tuning offers deeper customization, while feature extraction makes efficient use of established feature hierarchies.

Defining Fine-tuning in Artificial Intelligence

Fine-tuning in artificial intelligence adjusts a pre-trained model on a new, specific dataset to improve performance on a related task. This process updates the model's weights, allowing it to learn task-specific features while retaining previously learned knowledge. Fine-tuning is widely used to adapt large models like BERT or GPT to specialized applications such as medical diagnosis or sentiment analysis.
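To make the weight-update step concrete, here is a minimal sketch assuming a toy linear model `y = w·x + b` trained with plain gradient descent on mean squared error. The "pretrained" weights and the target task are made up for illustration; the point is only that fine-tuning starts from existing weights and keeps updating all of them on the new data.

```python
def predict(w, b, x):
    """Linear model: dot(w, x) + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def mse(w, b, data):
    """Mean squared error of the linear model over (x, y) pairs."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(w, b, data, lr=0.05, epochs=200):
    """Full-batch gradient descent that updates every parameter, starting from (w, b)."""
    w = list(w)  # copy, so the original pretrained weights are not mutated
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            err = predict(w, b, x) - y
            for i, xi in enumerate(x):
                grad_w[i] += 2 * err * xi / n
            grad_b += 2 * err / n
        # All weights move: the pretrained values are only the starting point.
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical "pretrained" weights from a source task.
pretrained_w, pretrained_b = [1.0, 0.5], 0.0
# Made-up target task: y = 1.2*x0 + 0.8*x1 + 0.3 on a small grid of inputs.
target_data = [((x0, x1), 1.2 * x0 + 0.8 * x1 + 0.3)
               for x0 in (-1.0, 0.0, 1.0) for x1 in (-1.0, 0.0, 1.0)]

w, b = fine_tune(pretrained_w, pretrained_b, target_data)
print(f"MSE before: {mse(pretrained_w, pretrained_b, target_data):.4f}")
print(f"MSE after:  {mse(w, b, target_data):.6f}")
```

In a real network the same idea applies layer by layer: the optimizer receives every pretrained parameter, typically with a small learning rate.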

Understanding Feature Extraction in AI Models

Feature extraction in AI models reuses a pre-trained neural network to produce relevant data representations without updating the network's parameters. Reusing learned features this way captures important patterns while significantly reducing computational cost and training time compared to full fine-tuning. Freezing the pretrained layers preserves the generic features they have learned, while a newly added final layer or classifier adapts the model to the specific downstream task.
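A small sketch of this workflow, assuming a toy "pretrained" backbone made of one fixed ReLU layer (the weights in `BACKBONE_W` are hypothetical) and a new logistic-regression head trained by gradient descent. The backbone's outputs are computed once and never change; only the head's parameters are learned. The XOR-style data is not linearly separable in the raw inputs, but it is in the frozen features, so the head alone can fit it.

```python
import math

# Hypothetical frozen weights of a one-layer "pretrained" backbone (3 ReLU units).
BACKBONE_W = [[1.0, -1.0], [-1.0, 1.0], [1.0, 1.0]]


def backbone(x):
    """Frozen feature extractor: ReLU(W x). BACKBONE_W is read, never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(features, labels, lr=0.5, epochs=1000):
    """Logistic-regression head trained with full-batch gradient descent."""
    v = [0.0] * len(features[0])
    b = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_v = [0.0] * len(v)
        grad_b = 0.0
        for f, y in zip(features, labels):
            err = sigmoid(sum(vi * fi for vi, fi in zip(v, f)) + b) - y
            for i, fi in enumerate(f):
                grad_v[i] += err * fi / n
            grad_b += err / n
        v = [vi - lr * gi for vi, gi in zip(v, grad_v)]
        b -= lr * grad_b
    return v, b


# XOR-style toy data: not linearly separable in x, but separable in the backbone's features.
data = [((1, 1), 0), ((1, -1), 1), ((-1, 1), 1), ((-1, -1), 0)]
features = [backbone(x) for x, _ in data]  # computed once; the backbone never changes
labels = [y for _, y in data]

v, b = train_head(features, labels)
preds = [int(sigmoid(sum(vi * fi for vi, fi in zip(v, f)) + b) > 0.5) for f in features]
print(preds)  # → [0, 1, 1, 0]
```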

Key Differences Between Fine-tuning and Feature Extraction

Fine-tuning involves updating the weights of a pre-trained model on a new dataset, allowing the model to adapt deeply to the specific task with higher accuracy but requiring more computational resources and data. Feature extraction, on the other hand, keeps the pre-trained model's weights fixed and only trains a new classifier on top, making it faster and less prone to overfitting when limited data is available. Key differences lie in the trade-off between training time, data requirements, and the level of adaptation achieved in the model's representations.
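One way to see the difference in adaptation and cost is to count how many parameters each strategy actually trains. The sketch below uses a hypothetical model whose layer names and sizes are invented; `unfreeze_last` models the "partial" fine-tuning mentioned in the comparison table.

```python
# (name, parameter count, taken from the pretrained model?); all values hypothetical
LAYERS = [
    ("block1", 1_000_000, True),
    ("block2", 2_000_000, True),
    ("block3", 4_000_000, True),
    ("new_head", 10_000, False),
]


def trainable_params(layers, strategy, unfreeze_last=0):
    """Count parameters that receive gradient updates under each strategy.

    unfreeze_last=0 with "fine_tuning" means full fine-tuning; a positive value
    unfreezes only that many of the last pretrained layers (partial fine-tuning).
    """
    pretrained = [(name, n) for name, n, is_pretrained in layers if is_pretrained]
    head = [(name, n) for name, n, is_pretrained in layers if not is_pretrained]
    if strategy == "feature_extraction":
        trainable = head  # pretrained layers stay frozen
    elif strategy == "fine_tuning":
        unfrozen = pretrained[-unfreeze_last:] if unfreeze_last else pretrained
        trainable = head + unfrozen
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return sum(n for _, n in trainable)


for label, kwargs in [("feature extraction", {"strategy": "feature_extraction"}),
                      ("partial fine-tuning", {"strategy": "fine_tuning", "unfreeze_last": 1}),
                      ("full fine-tuning", {"strategy": "fine_tuning"})]:
    print(label, trainable_params(LAYERS, **kwargs))
```

With these made-up sizes, feature extraction trains 10,000 parameters, partial fine-tuning 4,010,000, and full fine-tuning 7,010,000, which is the trade-off in training cost and flexibility described above.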

Pros and Cons of Fine-tuning

Fine-tuning in artificial intelligence adapts pre-trained networks to new tasks by updating some or all of their layers, which often yields higher accuracy and better task-specific performance. It requires substantial computational resources and sufficiently large labeled datasets to avoid overfitting and learn effectively. Fine-tuning offers more flexibility than feature extraction but demands careful hyperparameter tuning and longer training times.
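As an example of the hyperparameter choices involved, one common practice (an assumption here, not something this article prescribes) is to give the pretrained layers a much smaller learning rate than the newly added head, so that learned features are only nudged. A minimal SGD step with per-group learning rates might look like this; the groups, gradients, and learning rates are all hypothetical.

```python
def sgd_step(params, grads, lr_by_group):
    """One plain SGD step with a separate learning rate per parameter group.

    params and grads map a group name to a list of floats; lr_by_group maps
    the same group names to learning rates.
    """
    return {
        group: [p - lr_by_group[group] * g for p, g in zip(values, grads[group])]
        for group, values in params.items()
    }


params = {"backbone": [0.8, -0.3], "head": [0.0, 0.0]}
grads = {"backbone": [0.5, 0.5], "head": [0.5, 0.5]}
lrs = {"backbone": 1e-4, "head": 1e-2}  # hypothetical values; tune per task

new_params = sgd_step(params, grads, lrs)
print(new_params)
```

With identical gradients, the head moves 100 times further than the backbone, which is the intended effect of this kind of setting.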

Advantages and Limitations of Feature Extraction

Feature extraction makes efficient use of pre-trained models by freezing the pretrained layers and training only a final classifier, which reduces computational cost and the need for large datasets. It leverages learned representations to improve performance on specific tasks but may adapt less well than fine-tuning to highly domain-specific features. Its limitations include features that may be only partly relevant to the target task and less flexibility in capturing patterns unique to the target dataset.
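Part of the cost saving comes from the fact that a frozen backbone produces the same output every epoch, so its features can be computed once and cached. The sketch below counts backbone forward passes under each strategy; `ToyBackbone` is a hypothetical stand-in for a real pretrained network.

```python
class ToyBackbone:
    """Hypothetical stand-in for a pretrained network that counts forward passes."""

    def __init__(self):
        self.forward_calls = 0

    def __call__(self, x):
        self.forward_calls += 1
        return [2.0 * x, x * x]  # fixed, hand-picked "features"


def feature_extraction_passes(inputs, epochs):
    backbone = ToyBackbone()
    cached = [backbone(x) for x in inputs]  # frozen backbone: run once per example
    for _ in range(epochs):
        for features in cached:
            pass  # a head update would reuse the cached features here
    return backbone.forward_calls


def fine_tuning_passes(inputs, epochs):
    backbone = ToyBackbone()
    for _ in range(epochs):
        for x in inputs:
            features = backbone(x)  # weights change every step, so recompute
            # a joint backbone + head update would use `features` here
    return backbone.forward_calls


inputs = [float(i) for i in range(100)]
print(feature_extraction_passes(inputs, epochs=10))  # → 100
print(fine_tuning_passes(inputs, epochs=10))         # → 1000
```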

Practical Applications of Fine-tuning in AI

Fine-tuning in artificial intelligence allows pretrained models to adapt to specific tasks with improved accuracy by retraining on smaller, domain-specific datasets. It is widely used in natural language processing for sentiment analysis, where models like BERT are fine-tuned to recognize nuanced emotional expressions in text. In computer vision, fine-tuning enables models such as ResNet to excel in specialized image classification tasks, including medical image diagnosis, by leveraging learned visual features and adapting them to new visual domains.

When to Use Feature Extraction Over Fine-tuning

Feature extraction is preferred when computational resources are limited or the dataset size is small, as it leverages pre-trained models to extract relevant features without updating all model parameters. It is ideal for scenarios where the target task is similar to the source task, ensuring effective transfer learning without the complexity of full model retraining. Feature extraction reduces training time and mitigates overfitting risks compared to fine-tuning, making it suitable for rapid deployment in resource-constrained environments.
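The rules of thumb above can be written as a small decision helper. This is purely illustrative: `choose_strategy` is hypothetical, and its `small_dataset` threshold is an invented default, since real cut-offs depend on the model, the data, and the hardware.

```python
def choose_strategy(n_labeled, has_gpu, similar_to_source, need_max_accuracy,
                    small_dataset=1_000):
    """Hypothetical helper; `small_dataset` is an illustrative threshold, not a standard."""
    # Limited compute or little data: reuse frozen features.
    if not has_gpu or n_labeled < small_dataset:
        return "feature_extraction"
    # Enough resources and either a domain shift or a hard accuracy target: fine-tune.
    if need_max_accuracy or not similar_to_source:
        return "fine_tuning"
    # Similar task and no strict accuracy target: the cheaper option is usually enough.
    return "feature_extraction"


print(choose_strategy(500, has_gpu=True, similar_to_source=True, need_max_accuracy=True))
print(choose_strategy(50_000, has_gpu=True, similar_to_source=False, need_max_accuracy=False))
```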

Performance Comparison: Fine-tuning vs Feature Extraction

Fine-tuning typically achieves higher performance because it updates the pre-trained weights, letting the model adapt to the specific task and dataset. Feature extraction uses fixed pre-trained representations with a separate classifier, which usually trains faster but often reaches lower accuracy. Fine-tuning is commonly reported to outperform feature extraction on complex tasks such as natural language understanding and image recognition, where task-specific nuances are crucial. However, feature extraction remains effective when data is limited or compute is constrained, so the choice is a trade-off between performance and efficiency.
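A toy illustration (not a benchmark) of why fine-tuning can win when task-specific nuances matter: suppose the frozen features simply do not contain the signal the new task needs. The assumptions are a one-unit linear "backbone" `h = w·x` whose hypothetical pretrained weights `w = (1, 0)` ignore `x[1]`, a scalar logistic head `p = sigmoid(v * h)`, and labels that depend only on `x[1]`. With the backbone frozen, no head can do better than chance; letting gradients flow into `w` fixes that.

```python
import math

# Labels depend only on x[1]; the "pretrained" backbone only looks at x[0].
DATA = [((1.0, 1.0), 1), ((1.0, -1.0), 0), ((-1.0, 1.0), 1), ((-1.0, -1.0), 0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(update_backbone, lr=0.5, epochs=500):
    w = [1.0, 0.0]  # hypothetical pretrained backbone weights
    v = 1.0         # head weight
    n = len(DATA)
    for _ in range(epochs):
        gw, gv = [0.0, 0.0], 0.0
        for x, y in DATA:
            h = w[0] * x[0] + w[1] * x[1]
            err = sigmoid(v * h) - y  # d(log-loss)/dz for the logit z = v*h
            gv += err * h / n
            gw[0] += err * v * x[0] / n
            gw[1] += err * v * x[1] / n
        v -= lr * gv
        if update_backbone:  # fine-tuning also moves the backbone weights
            w = [w[0] - lr * gw[0], w[1] - lr * gw[1]]
    return w, v


def accuracy(w, v):
    hits = sum((sigmoid(v * (w[0] * x[0] + w[1] * x[1])) > 0.5) == bool(y) for x, y in DATA)
    return hits / len(DATA)


fe_w, fe_v = train(update_backbone=False)  # feature extraction: backbone frozen
ft_w, ft_v = train(update_backbone=True)   # fine-tuning: backbone updated too
print(accuracy(fe_w, fe_v), accuracy(ft_w, ft_v))  # → 0.5 1.0
```

The example is deliberately extreme; in practice pretrained features usually carry much of the needed signal, which is why feature extraction is often a strong baseline.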

Future Trends in Model Adaptation Techniques

Future trends in model adaptation point toward hybrid approaches that combine fine-tuning and feature extraction to balance performance and computational efficiency. Advances in transfer learning and parameter-efficient tuning methods, such as adapters, LoRA (Low-Rank Adaptation), and prompt tuning, let models adapt quickly to new tasks while minimizing resource usage. Integrating automated neural architecture search and continual learning frameworks may further accelerate the refinement of personalized AI models across diverse applications.
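LoRA sits between the two strategies: the pretrained weight matrix `W0` (d × k) stays frozen, and the update is learned as a low-rank product `B @ A`, with `B` of shape d × r and `A` of shape r × k, scaled by `alpha / r`. The sketch below uses plain Python lists, tiny illustrative sizes, and random initial values; `B` starts at zero so the adapted weight initially equals `W0`.

```python
import random


def matmul(X, Y):
    """Plain-list matrix product X @ Y."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def lora_weight(W0, A, B, alpha, r):
    """Effective weight W0 + (alpha / r) * (B @ A); W0 itself is never modified."""
    BA = matmul(B, A)
    scale = alpha / r
    return [[W0[i][j] + scale * BA[i][j] for j in range(len(W0[0]))]
            for i in range(len(W0))]


def param_counts(d, k, r):
    full = d * k        # parameters updated by fully fine-tuning this matrix
    lora = r * (d + k)  # trainable parameters in A (r x k) and B (d x r)
    return full, lora


d, k, r, alpha = 4, 3, 1, 2.0  # illustrative sizes
random.seed(0)
W0 = [[random.gauss(0, 1) for _ in range(k)] for _ in range(d)]   # frozen
A = [[random.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # trainable
B = [[0.0] * r for _ in range(d)]                                   # trainable, zero-init

print(lora_weight(W0, A, B, alpha, r) == W0)  # → True
print(param_counts(4096, 4096, 8))            # → (16777216, 65536)
```

For a 4096 × 4096 matrix with rank 8, only 65,536 of the 16,777,216 entries' worth of parameters are trained, which is the resource saving the paragraph refers to.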

Fine-tuning vs Feature Extraction Infographic

techiny.com