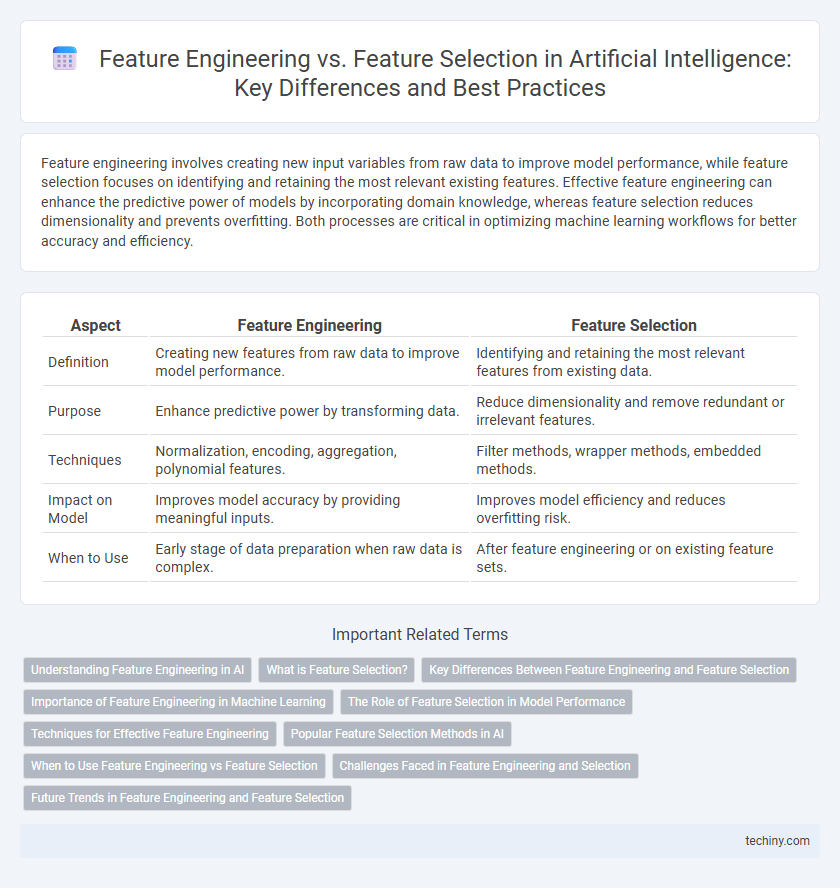

Feature engineering involves creating new input variables from raw data to improve model performance, while feature selection focuses on identifying and retaining the most relevant existing features. Effective feature engineering can enhance the predictive power of models by incorporating domain knowledge, whereas feature selection reduces dimensionality and prevents overfitting. Both processes are critical in optimizing machine learning workflows for better accuracy and efficiency.

Table of Comparison

| Aspect | Feature Engineering | Feature Selection |

|---|---|---|

| Definition | Creating new features from raw data to improve model performance. | Identifying and retaining the most relevant features from existing data. |

| Purpose | Enhance predictive power by transforming data. | Reduce dimensionality and remove redundant or irrelevant features. |

| Techniques | Normalization, encoding, aggregation, polynomial features. | Filter methods, wrapper methods, embedded methods. |

| Impact on Model | Improves model accuracy by providing meaningful inputs. | Improves model efficiency and reduces overfitting risk. |

| When to Use | Early stage of data preparation when raw data is complex. | After feature engineering or on existing feature sets. |

Understanding Feature Engineering in AI

Feature engineering in AI involves transforming raw data into meaningful inputs to improve model performance, often through techniques like normalization, encoding, and creation of new features. It requires domain knowledge to extract relevant patterns and enhance predictive accuracy before model training. Understanding feature engineering is crucial for optimizing data representation and enabling AI algorithms to learn more effectively.

What is Feature Selection?

Feature selection is the process of identifying and selecting the most relevant variables or features from a dataset to improve the performance of machine learning models. This technique reduces dimensionality, enhances model accuracy, and decreases computational complexity by eliminating redundant or irrelevant data. Common methods of feature selection include filter methods, wrapper methods, and embedded methods, each optimizing model efficiency in different ways.

Key Differences Between Feature Engineering and Feature Selection

Feature engineering involves creating new input features from raw data to improve model performance, whereas feature selection focuses on identifying and retaining the most relevant features to reduce dimensionality and prevent overfitting. Key differences include that feature engineering transforms or synthesizes data attributes, while feature selection evaluates and ranks existing features based on their predictive power. Effective use of both techniques enhances model accuracy and computational efficiency in machine learning workflows.

Importance of Feature Engineering in Machine Learning

Feature engineering transforms raw data into meaningful inputs that enhance machine learning model accuracy and predictive power. By creating relevant features through domain knowledge and data preprocessing, it captures essential patterns that improve model interpretability and performance. Effective feature engineering reduces dimensionality and mitigates overfitting, making it critical for developing robust AI systems.

The Role of Feature Selection in Model Performance

Feature selection plays a crucial role in enhancing model performance by identifying the most relevant attributes, reducing dimensionality, and mitigating overfitting in machine learning algorithms. By eliminating redundant or noisy features, it improves computational efficiency and helps models generalize better to unseen data. Effective feature selection leads to more accurate predictions and robust artificial intelligence systems.

Techniques for Effective Feature Engineering

Effective feature engineering techniques include data transformation methods such as normalization, scaling, and encoding categorical variables to enhance model performance. Creating interaction features and polynomial features helps capture complex relationships within data, improving predictive accuracy. Dimensionality reduction methods like PCA complement feature engineering by simplifying data representation, facilitating more effective learning.

Popular Feature Selection Methods in AI

Popular feature selection methods in artificial intelligence include filter, wrapper, and embedded techniques, each optimizing model performance differently. Filters rely on statistical measures like correlation and mutual information to evaluate feature relevance independently of any learning algorithm. Wrapper methods utilize predictive models to assess feature subsets, enhancing accuracy by selecting features that improve the model's cross-validation scores, while embedded methods perform feature selection during the model training process, as seen in LASSO regression or tree-based algorithms.

When to Use Feature Engineering vs Feature Selection

Feature engineering is essential when creating new variables or transforming raw data to improve model performance, especially in complex datasets requiring domain knowledge for meaningful feature creation. Feature selection is more appropriate when the dataset contains many redundant or irrelevant features, helping to reduce dimensionality and enhance model interpretability and efficiency. Use feature engineering early in the pipeline to enrich data representation, while feature selection should be applied before model training to optimize feature subsets.

Challenges Faced in Feature Engineering and Selection

Feature engineering and selection face challenges such as handling high-dimensional data, which can lead to overfitting and increased computational complexity. Identifying relevant features from noisy or redundant datasets requires sophisticated algorithms and domain expertise to enhance model accuracy. Balancing feature diversity while maintaining interpretability remains a critical obstacle in developing efficient machine learning models.

Future Trends in Feature Engineering and Feature Selection

Future trends in feature engineering and feature selection emphasize automation through advanced machine learning techniques like deep learning and reinforcement learning, enabling dynamic and context-aware feature extraction. Integration of explainable AI (XAI) methods ensures transparency and interpretability in feature relevance, enhancing model trustworthiness and regulatory compliance. Emphasis on real-time feature adaptation driven by streaming data facilitates continuous learning and optimization in rapidly evolving environments.

Feature Engineering vs Feature Selection Infographic

techiny.com

techiny.com