Convolutional Neural Networks (CNNs) excel at processing grid-like data such as images by capturing spatial hierarchies through convolutional layers. Recurrent Neural Networks (RNNs), on the other hand, specialize in sequential data by maintaining hidden states that capture temporal dependencies. While CNNs are preferred for tasks like image recognition, RNNs are more effective in natural language processing and time series analysis.

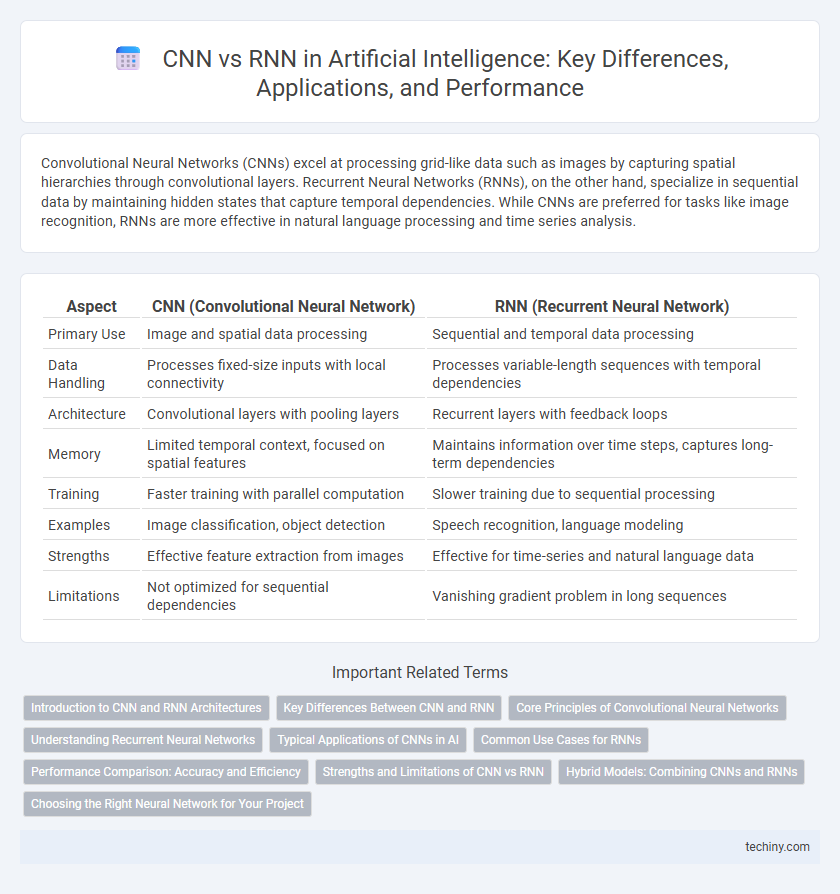

Table of Comparison

| Aspect | CNN (Convolutional Neural Network) | RNN (Recurrent Neural Network) |
|---|---|---|
| Primary Use | Image and spatial data processing | Sequential and temporal data processing |
| Data Handling | Processes fixed-size inputs with local connectivity | Processes variable-length sequences with temporal dependencies |
| Architecture | Convolutional layers with pooling layers | Recurrent layers with feedback loops |
| Memory | Limited temporal context, focused on spatial features | Maintains information over time steps, captures long-term dependencies |
| Training | Faster training with parallel computation | Slower training due to sequential processing |
| Examples | Image classification, object detection | Speech recognition, language modeling |
| Strengths | Effective feature extraction from images | Effective for time-series and natural language data |
| Limitations | Not optimized for sequential dependencies | Vanishing gradient problem in long sequences |

Introduction to CNN and RNN Architectures

Convolutional Neural Networks (CNNs) excel in processing grid-like data structures such as images by utilizing convolutional layers to automatically and adaptively learn spatial hierarchies of features. Recurrent Neural Networks (RNNs), in contrast, handle sequential data by maintaining hidden states that capture temporal dependencies, making them suitable for tasks like natural language processing and time series analysis. Both architectures form the foundation of deep learning models tailored for distinct data types--CNNs for spatial feature extraction and RNNs for temporal pattern recognition.

Key Differences Between CNN and RNN

Convolutional Neural Networks (CNNs) excel at processing spatial data through convolutional layers that capture local patterns in images, making them ideal for computer vision tasks. Recurrent Neural Networks (RNNs) specialize in handling sequential data by maintaining temporal dependencies via recurrent connections, which is crucial for natural language processing and time-series analysis. CNNs typically operate on fixed-size inputs and focus on spatial hierarchies, whereas RNNs accommodate variable-length sequences and emphasize temporal dynamics.

Core Principles of Convolutional Neural Networks

Convolutional Neural Networks (CNNs) leverage spatial hierarchies by applying convolutional filters that capture local patterns in data such as images, enabling efficient feature extraction. Their core principle involves shared weights and receptive fields, which reduce computational complexity and preserve spatial relationships. Unlike Recurrent Neural Networks (RNNs), CNNs excel in processing grid-like data structures by exploiting spatial correlations through layers of convolution and pooling.
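As a rough illustration of shared weights and receptive fields, the sketch below slides one small filter over a toy image and then applies max pooling. It uses plain Python lists to stay dependency-free; the function names, the 5x5 image, and the 2x2 edge filter are all assumptions made for this example, not part of any particular library.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 cross-correlation, the operation most
    deep learning libraries call "convolution"."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            # Each output value only sees a kh x kw patch: its receptive field.
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows or columns that do not fill a
    complete window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(
                feature_map[i * size + di][j * size + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hypothetical vertical-edge filter: the same 4 weights are reused at
# every position, which is what "shared weights" refers to.
edge_kernel = [[-1, 1], [-1, 1]]

features = conv2d(image, edge_kernel)  # 4x4 map, responds only at the edge
pooled = max_pool2d(features)          # 2x2 map after pooling

print(features)  # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(pooled)    # [[2, 0], [2, 0]]
```

The filter has only four parameters regardless of image size, and the pooled map still records that the edge sits in the left half of the image, which is the sense in which spatial relationships are preserved.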

Understanding Recurrent Neural Networks

Recurrent Neural Networks (RNNs) excel at processing sequential data by maintaining hidden states that capture temporal dependencies, making them ideal for tasks like language modeling and time series prediction. Unlike Convolutional Neural Networks (CNNs), which specialize in spatial feature extraction through convolutional layers, RNNs use feedback loops that feed the previous hidden state back into the network, giving them a memory of earlier inputs. Plain RNNs suffer from vanishing gradients on long sequences; gated variants such as LSTM and GRU were designed to mitigate this problem and generally improve sequence learning performance.
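The feedback loop can be sketched as a single update rule applied once per time step. The example below is a minimal plain (Elman-style) RNN in pure Python, not an LSTM or GRU; the weight values, the helper names `rnn_step` and `run_rnn`, and the toy sequences are assumptions chosen only for illustration.

```python
import math


def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One recurrent step: h_t = tanh(W_xh @ x_t + W_hh @ h_{t-1} + b_h)."""
    hidden_size = len(h_prev)
    h = []
    for i in range(hidden_size):
        pre = b_h[i]
        pre += sum(W_xh[i][k] * x[k] for k in range(len(x)))
        pre += sum(W_hh[i][j] * h_prev[j] for j in range(hidden_size))
        h.append(math.tanh(pre))
    return h


def run_rnn(sequence, W_xh, W_hh, b_h):
    """Fold a sequence of any length into one hidden state vector."""
    h = [0.0] * len(b_h)  # initial hidden state
    for x in sequence:
        # The same weights are reused at every time step.
        h = rnn_step(x, h, W_xh, W_hh, b_h)
    return h


# Hypothetical weights: 1 input feature, 2 hidden units.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

short_seq = [[1.0], [0.5]]
long_seq = [[1.0], [0.5], [-1.0], [0.25], [0.0]]

h_short = run_rnn(short_seq, W_xh, W_hh, b_h)
h_long = run_rnn(long_seq, W_xh, W_hh, b_h)
print(len(h_short), len(h_long))  # both hidden states have 2 entries
```

Both sequences end up as a hidden state of the same size even though their lengths differ, and feeding the same inputs in a different order produces a different state, which is what distinguishes this from a simple average over time.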

Typical Applications of CNNs in AI

Convolutional Neural Networks (CNNs) excel in image recognition, object detection, and facial recognition, making them vital for computer vision tasks. Their ability to capture spatial hierarchies and patterns enables effective processing of visual data in medical imaging, autonomous vehicles, and video surveillance. CNNs are also applied in natural language processing and speech, where one-dimensional convolutions extract local features from text and audio signals.
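For text and speech, the convolution runs along one axis (time or token position) instead of two. The sketch below is a simplified illustration under stated assumptions: each time step is a single number rather than an embedding vector, and the filter and signals are made up. It pairs a 1D convolution with max-over-time pooling, one common way to get a fixed-size feature from inputs of different lengths.

```python
def conv1d(sequence, kernel):
    """Valid, stride-1 1D cross-correlation of a scalar sequence."""
    k = len(kernel)
    return [
        sum(sequence[i + j] * kernel[j] for j in range(k))
        for i in range(len(sequence) - k + 1)
    ]


def max_over_time(sequence, kernel):
    """Strongest filter response anywhere in the sequence.

    Assumes the sequence is at least as long as the kernel.
    """
    return max(conv1d(sequence, kernel))


# Hypothetical filter that responds to a sudden rise between neighbors.
rise_kernel = [-1.0, 1.0]

short_signal = [0.0, 0.0, 1.0]
long_signal = [0.2, 0.1, 0.1, 0.9, 0.8, 0.7, 0.1]

# One number per input, regardless of input length.
print(max_over_time(short_signal, rise_kernel))
print(max_over_time(long_signal, rise_kernel))
```

The pooled value says that a rise occurred but not where, so this kind of feature captures local patterns while discarding most ordering information.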

Common Use Cases for RNNs

Recurrent Neural Networks (RNNs) excel in processing sequential data, making them ideal for natural language processing tasks such as language modeling, machine translation, and sentiment analysis. They are widely used in speech recognition systems and time series prediction due to their ability to maintain context through temporal dependencies. Applications in video analysis and music generation also leverage RNN architectures to capture dynamic patterns over time.

Performance Comparison: Accuracy and Efficiency

Convolutional Neural Networks (CNNs) excel in image recognition tasks due to their ability to capture spatial hierarchies, often achieving higher accuracy and faster convergence than Recurrent Neural Networks (RNNs) in visual domains. RNNs, designed to handle sequential data, are typically the stronger of the two in natural language processing and time-series prediction, but they suffer from longer training times and from vanishing gradients on long sequences. CNNs parallelize well because the convolution at one position does not depend on the output at any other position, whereas an RNN must process time steps in order, which limits throughput and increases latency on long sequences.
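The vanishing gradient issue can be made concrete with a one-unit RNN. The sketch below assumes a scalar recurrence h_t = tanh(w * h_{t-1} + x_t) with a made-up weight and constant input, and tracks how strongly the final state depends on the initial state by multiplying the per-step derivatives from the chain rule.

```python
import math


def gradient_through_time(w, inputs, h0=0.0):
    """Return |d h_t / d h_0| after each step of h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    history = []
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t ** 2).
        grad *= w * (1.0 - h * h)
        history.append(abs(grad))
    return history


# Hypothetical setup: recurrent weight 0.9, constant input 0.5, 50 steps.
grads = gradient_through_time(w=0.9, inputs=[0.5] * 50)

for t in (1, 5, 10, 50):
    print(f"step {t:2d}: |dh_t/dh_0| = {grads[t - 1]:.3e}")
```

Because every factor here has magnitude below 1, the product shrinks geometrically with sequence length, so early inputs receive almost no learning signal. The loop also shows why the computation is sequential: step t cannot start until step t-1 has produced its hidden state.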

Strengths and Limitations of CNN vs RNN

Convolutional Neural Networks (CNNs) excel at spatial data analysis, such as image recognition, due to their ability to capture local patterns through convolutional layers and pooling. Recurrent Neural Networks (RNNs) are designed for sequential data, excelling in tasks like language modeling and time series prediction by maintaining temporal dependencies through recurrent connections. CNNs struggle with sequential dependencies and variable-length inputs, whereas RNNs face challenges like vanishing gradients and slower training compared to CNNs.

Hybrid Models: Combining CNNs and RNNs

Hybrid models that combine Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) pair CNNs' ability to extract spatial features with RNNs' capacity to capture temporal dependencies, which suits tasks such as video analysis and speech recognition where each time step is itself a structured input. These architectures use convolutional layers for per-step feature extraction and recurrent layers for sequence modeling, and can improve accuracy on problems that involve both kinds of structure. Hybrid CNN-RNN models have also been applied to natural language processing and time-series prediction.
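A minimal sketch of this pattern, assuming a toy "video" made of 3x3 frames: a convolution plus global max pooling turns each frame into one feature, and a one-unit recurrent layer folds those features over time. The filter, the weights `w_in` and `w_rec`, and the helper names are hypothetical values picked for illustration.

```python
import math


def conv2d_valid(frame, kernel):
    """Valid, stride-1 2D cross-correlation on nested lists."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(
                frame[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(len(frame[0]) - kw + 1)
        ]
        for i in range(len(frame) - kh + 1)
    ]


def frame_feature(frame, kernel):
    """CNN part: convolve, then global max pool to a single number."""
    return max(max(row) for row in conv2d_valid(frame, kernel))


def encode_clip(frames, kernel, w_in, w_rec):
    """RNN part: fold per-frame features into one hidden state over time."""
    h = 0.0
    for frame in frames:
        h = math.tanh(w_in * frame_feature(frame, kernel) + w_rec * h)
    return h


edge_kernel = [[-1, 1], [-1, 1]]                 # hypothetical edge filter
edge_frame = [[0, 0, 1], [0, 0, 1], [0, 0, 1]]   # frame containing an edge
blank_frame = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]  # frame with no edge

clip_edge_last = [blank_frame, blank_frame, edge_frame]
clip_edge_first = [edge_frame, blank_frame, blank_frame]

state_last = encode_clip(clip_edge_last, edge_kernel, w_in=0.5, w_rec=0.8)
state_first = encode_clip(clip_edge_first, edge_kernel, w_in=0.5, w_rec=0.8)
print(state_last, state_first)
```

Both clips contain the same frames, but the final state is larger when the edge appears in the last frame than when it appears in the first, since the memory of an early event decays through the recurrent weight. The convolution detects what is in each frame; the recurrence encodes when it happened.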

Choosing the Right Neural Network for Your Project

Convolutional Neural Networks (CNNs) excel in processing spatial data such as images, making them ideal for computer vision tasks and image recognition projects. Recurrent Neural Networks (RNNs) are designed for sequential data, proving effective for natural language processing, speech recognition, and time-series analysis. Selecting the right neural network depends on the data structure and project goals, where CNNs prioritize spatial hierarchies and RNNs emphasize temporal dependencies.

CNN vs RNN Infographic

techiny.com
