Convolutional Neural Networks (CNNs) excel at processing spatial data: their convolutional layers capture hierarchical patterns, which makes them well suited to image recognition and other computer vision tasks. Recurrent Neural Networks (RNNs) are designed for sequential data, such as time series or natural language, and maintain hidden states that capture temporal dependencies. The choice between CNNs and RNNs depends on the structure of the data and the application: CNNs prioritize spatial feature extraction, while RNNs focus on context within sequences.

Table of Comparison

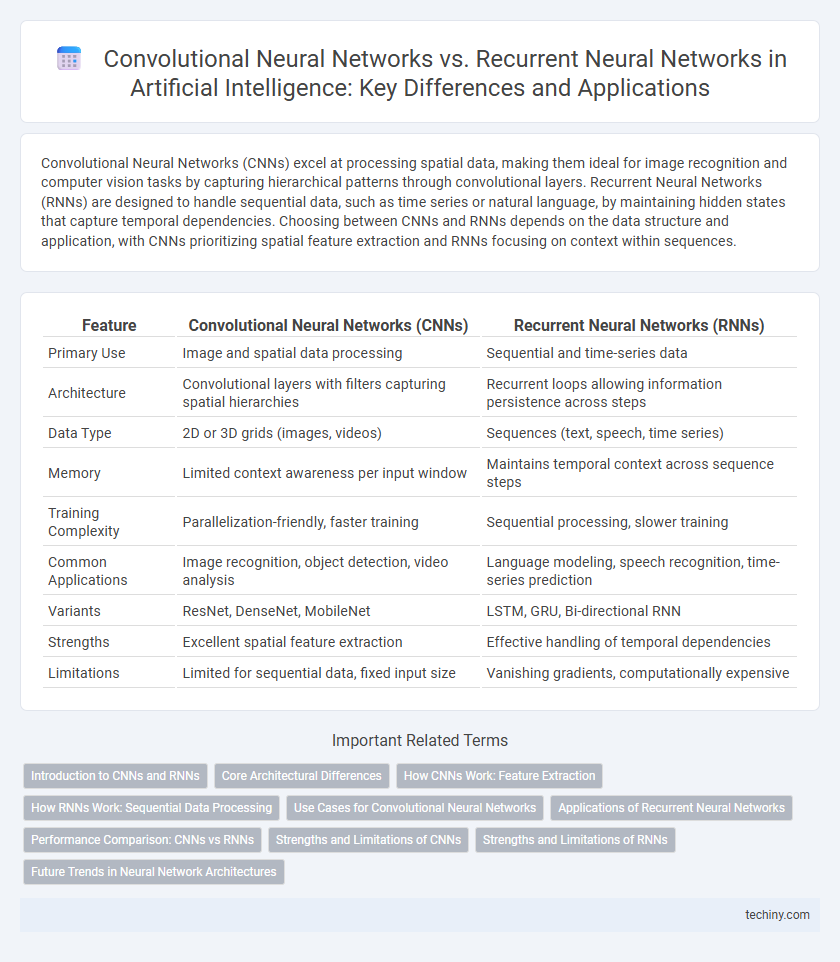

| Feature | Convolutional Neural Networks (CNNs) | Recurrent Neural Networks (RNNs) |
|---|---|---|
| Primary Use | Image and spatial data processing | Sequential and time-series data |
| Architecture | Convolutional layers with shared filters capturing spatial hierarchies | Recurrent connections that carry a hidden state from one step to the next |
| Data Type | Grids such as 2D images or 3D video volumes | Sequences (text, speech, time series) |
| Memory | Context limited to each unit's receptive field | Maintains temporal context across sequence steps via the hidden state |
| Training Complexity | Parallelization-friendly, faster training | Sequential processing, slower training |
| Common Applications | Image recognition, object detection, video analysis | Language modeling, speech recognition, time-series prediction |
| Variants | ResNet, DenseNet, MobileNet | LSTM, GRU, Bidirectional RNN |
| Strengths | Excellent spatial feature extraction | Effective handling of temporal dependencies |
| Limitations | Less natural fit for long-range sequential dependencies; often a fixed input size when followed by fully connected layers | Vanishing and exploding gradients; slow, hard-to-parallelize computation |

Introduction to CNNs and RNNs

Convolutional Neural Networks (CNNs) specialize in processing grid-like data such as images. They use convolutional layers to learn spatial hierarchies of features automatically from data. Recurrent Neural Networks (RNNs) specialize in sequential data such as time series or natural language, and they maintain hidden states that capture temporal dependencies. Both architectures underpin many AI applications: CNNs dominate computer vision, while RNNs have long been a standard tool for speech recognition and language modeling.

Core Architectural Differences

CNNs exploit spatial structure. Each convolutional layer applies the same small filters at every position of a grid-like input such as an image, which captures local patterns, and stacking layers builds up spatial hierarchies. RNNs instead have cyclic connections. They process a sequence one step at a time and update a hidden state that carries information from earlier steps. This leads to the core architectural difference. A CNN computes all positions of an input in parallel and is often built for a fixed input size. An RNN reuses the same weights at every step, so it handles sequences of any length and retains temporal context, at the cost of strictly sequential computation.
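The two core update rules can be written side by side. This is a sketch in common textbook notation, and the symbols are our own choices rather than the article's:

- x is the input.
- K is a k x k kernel with bias b.
- sigma is an elementwise activation.
- W_xh, W_hh and b_h are the RNN's input, recurrent and bias parameters.

As in most deep-learning libraries, the convolution is written as cross-correlation (no kernel flip).

```latex
% CNN: output position (i, j) depends only on a local k x k patch, and the same
% kernel K is used everywhere, so all positions can be computed in parallel
y_{i,j} = \sigma\Big( b + \sum_{u=0}^{k-1} \sum_{v=0}^{k-1} K_{u,v}\, x_{i+u,\, j+v} \Big)

% RNN: h_t depends on h_{t-1}, so steps must be computed in order;
% the same W_{xh}, W_{hh}, b_h are reused at every step t = 1, ..., T
h_t = \tanh\big( W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h \big)
```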

How CNNs Work: Feature Extraction

CNNs use convolutional layers that slide small learned filters (kernels) across an input image and compute a weighted sum over each local region. Because the same filter is applied everywhere, a pattern can be detected wherever it appears. Early layers typically respond to edges and textures, and deeper layers combine these into more complex patterns. Nonlinear activation functions are applied after each convolution, and pooling layers downsample the feature maps between stages. This hierarchical structure makes CNNs robust for image recognition and particularly effective for tasks like object detection and classification.
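The feature-extraction steps above can be sketched in plain Python: convolution, then a ReLU activation, then 2x2 max pooling. This is a minimal illustration, not how production libraries implement it. The function names, the toy 5x6 image and the hand-picked vertical-edge kernel are assumptions for the example. A trained CNN learns its kernels from data and stacks many such layers.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution as deep-learning libraries define it (cross-correlation, no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            # Weighted sum of the kh x kw patch whose top-left corner is (i, j)
            sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(x: Matrix) -> Matrix:
    """Elementwise nonlinearity: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool_2x2(x: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling; a trailing odd row or column is dropped."""
    return [
        [max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
         for j in range(0, len(x[0]) - 1, 2)]
        for i in range(0, len(x) - 1, 2)
    ]


# Toy 5x6 "image": dark left half, bright right half (one vertical edge)
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# Hand-picked vertical-edge kernel (hypothetical; a real CNN learns this)
edge_kernel = [[-1.0, 1.0], [-1.0, 1.0]]

feature_map = relu(conv2d(image, edge_kernel))  # 4x5 map; the edge shows up as a column of 2.0s
pooled = max_pool_2x2(feature_map)              # 2x2 map; the edge response survives downsampling
print(feature_map)
print(pooled)
```

The same kernel is reused at every position, which is why the edge is detected in every row. Pooling halves the resolution but keeps the strongest response in each 2x2 window.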

How RNNs Work: Sequential Data Processing

Recurrent Neural Networks (RNNs) process sequential data by maintaining a hidden state that summarizes information from previous time steps, which lets them model temporal dependencies. Because the same update is applied at every step, RNNs handle variable-length sequences naturally. This suits tasks like speech recognition and language modeling. Unlike Convolutional Neural Networks (CNNs), which excel at spatial data, RNNs specialize in patterns across time or other ordered data points.
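A minimal sketch of a vanilla (Elman) RNN forward pass makes the hidden-state mechanism concrete. It uses plain Python lists instead of a tensor library. The function names and the 1-dimensional, hand-picked weights are assumptions for illustration. Real RNNs learn W_xh, W_hh and b_h by backpropagation through time.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Matrix-vector product using plain lists."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rnn_forward(xs: List[Vector], W_xh: Matrix, W_hh: Matrix,
                b_h: Vector, h0: Vector) -> List[Vector]:
    """Run a vanilla RNN over a sequence and return the hidden state after each step.

    h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h). The same weights are reused at every
    step, so the loop works for a sequence of any length.
    """
    h = list(h0)
    states = []
    for x_t in xs:  # steps must run in order: h_t needs h_{t-1}
        pre = [a + b + c for a, b, c in zip(matvec(W_xh, x_t), matvec(W_hh, h), b_h)]
        h = [math.tanh(v) for v in pre]
        states.append(h)
    return states


# Hypothetical 1-dimensional input and hidden state with hand-picked weights
W_xh, W_hh, b_h, h0 = [[1.0]], [[0.5]], [0.0], [0.0]

# One "impulse" followed by zeros: the hidden state remembers it, fading each step
states = rnn_forward([[1.0], [0.0], [0.0]], W_xh, W_hh, b_h, h0)
print([round(h[0], 4) for h in states])
```

After the input returns to zero, the hidden state still carries a decaying trace of the impulse. That trace is the "memory" the paragraph describes. Calling `rnn_forward` on a longer list needs no change to the weights.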

Use Cases for Convolutional Neural Networks

Convolutional Neural Networks (CNNs) excel in image recognition, object detection, and video analysis due to their ability to capture spatial hierarchies in visual data. CNN architectures are widely used in medical imaging diagnostics, autonomous vehicle vision systems, and facial recognition technologies. Their effectiveness in processing grid-like data makes them the preferred choice for tasks involving high-dimensional image inputs.

Applications of Recurrent Neural Networks

Recurrent Neural Networks (RNNs) excel in processing sequential data, making them ideal for applications such as natural language processing, speech recognition, and time series forecasting. Their ability to maintain hidden states allows RNNs to capture temporal dependencies essential for tasks like language translation, sentiment analysis, and stock price prediction. Unlike Convolutional Neural Networks (CNNs), which are optimized for spatial data, RNNs specialize in handling variable-length sequences and contextual information across time steps.

Performance Comparison: CNNs vs RNNs

Convolutional Neural Networks (CNNs) excel at spatial data such as images through hierarchical feature extraction. Because every position in a layer can be computed in parallel, they train efficiently on modern hardware and achieve high accuracy in visual pattern recognition. Recurrent Neural Networks (RNNs) are designed for sequential data such as text or time series and maintain temporal dependencies. However, plain RNNs often suffer from vanishing gradients, which slows convergence and limits how far back they can learn. Variants such as LSTM and GRU were introduced to mitigate this problem. In practice, CNNs outperform RNNs on image-related tasks, helped by built-in spatial assumptions (locality and weight sharing) and a parallelizable architecture. RNNs are the more natural fit for sequence prediction and language modeling, despite the higher computational cost of step-by-step processing.
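The parallelism argument comes down to dependency structure, which a small sketch can show. Each output of a 1D convolution depends only on its own input window, so positions can be handed to workers in any order. Each RNN hidden state needs the previous one. The function names, the toy signal and the thread pool are assumptions for illustration. In CPython, threads will not actually speed up this CPU-bound arithmetic. The point is only that the convolution *may* be split up while the recurrence may not; GPUs exploit the same independence.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List


def conv1d_at(x: List[float], kernel: List[float], i: int) -> float:
    """Output at position i depends only on the input window x[i : i + len(kernel)]."""
    return sum(x[i + u] * kernel[u] for u in range(len(kernel)))


def conv1d_parallel(x: List[float], kernel: List[float], workers: int = 4) -> List[float]:
    """Positions are independent, so they can be farmed out to a pool of workers."""
    positions = range(len(x) - len(kernel) + 1)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in input order, whatever order they finish in
        return list(pool.map(lambda i: conv1d_at(x, kernel, i), positions))


def rnn_sequential(x: List[float], w_x: float, w_h: float) -> List[float]:
    """Each hidden state needs the previous one, so T steps form a chain of length T."""
    h, out = 0.0, []
    for x_t in x:
        h = math.tanh(w_x * x_t + w_h * h)
        out.append(h)
    return out


signal = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0]
print(conv1d_parallel(signal, [1.0, -1.0]))  # x[i] - x[i + 1] at each position
print(rnn_sequential(signal, w_x=0.5, w_h=0.5))
```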

Strengths and Limitations of CNNs

Convolutional Neural Networks (CNNs) excel at processing spatial data, particularly images, because convolutional layers and pooling operations capture hierarchical patterns. This makes them ideal for tasks like image recognition and object detection. Their main limitation is that each output depends only on a finite receptive field, and the architecture carries no hidden state between inputs. As a result, modeling long-range temporal dependencies requires deep stacks or dilated convolutions, which makes CNNs a less natural fit than Recurrent Neural Networks (RNNs) for natural language processing or time-series analysis. CNNs also need substantial computational resources to train on large datasets. However, they parallelize well, which improves efficiency for large-scale vision applications.
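The receptive-field limitation can be quantified with the standard recurrence for stacked convolution and pooling layers. Each layer adds (effective kernel size - 1) times the current jump (the product of earlier strides) to the receptive field. The function name and the (kernel_size, stride, dilation) tuple format are our own conventions for this sketch. Padding does not change the receptive field, so it is ignored.

```python
from typing import List, Tuple


def receptive_field(layers: List[Tuple[int, int, int]]) -> int:
    """Receptive field (input positions per axis) of one output unit after a stack of layers.

    Each layer is (kernel_size, stride, dilation), and the recurrence is:
      effective_kernel = dilation * (kernel_size - 1) + 1
      r_out = r_in + (effective_kernel - 1) * jump_in
      jump_out = jump_in * stride
    """
    r, jump = 1, 1
    for k, s, d in layers:
        k_eff = d * (k - 1) + 1
        r += (k_eff - 1) * jump
        jump *= s
    return r


# Three 3x3 convolutions with stride 1: each output "sees" only a 7x7 input patch
print(receptive_field([(3, 1, 1)] * 3))  # 7
# Dilated 1D stack, kernel 2 with dilations 1, 2, 4, 8: grows exponentially with depth
print(receptive_field([(2, 1, d) for d in (1, 2, 4, 8)]))  # 16
```

With ordinary stride-1 layers, the receptive field grows only linearly with depth. This is why sequence-oriented CNNs rely on dilation or downsampling to cover long contexts that an RNN's hidden state can, in principle, span.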

Strengths and Limitations of RNNs

Recurrent Neural Networks (RNNs) excel at processing sequential data by maintaining temporal dependencies through hidden states. This makes them ideal for tasks like language modeling, speech recognition, and time-series prediction. Because they handle variable-length input sequences, they can capture context over time. However, they face challenges such as vanishing and exploding gradients, which make long-term dependencies hard to learn. Gated variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) mitigate these issues, improving stability and performance in complex sequential tasks.
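Vanishing and exploding gradients follow from the chain rule, which a scalar RNN shows most clearly. For h_t = tanh(w * h_{t-1} + x_t), the gradient of the last state with respect to the first is a product of one factor w * (1 - h_t^2) per step. That product shrinks or grows geometrically with sequence length. This is a sketch under simplifying assumptions: the function name, scalar state, zero inputs and zero initial state are all chosen to keep the arithmetic checkable. The zero initial state keeps tanh in its linear region, so each factor is exactly w.

```python
import math
from typing import List


def grad_through_time(w: float, xs: List[float], h0: float = 0.0) -> float:
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t).

    By the chain rule this is the product over steps of dh_t/dh_{t-1} = w * (1 - h_t**2).
    """
    h, grad = h0, 1.0
    for x_t in xs:
        h = math.tanh(w * h + x_t)
        grad *= w * (1.0 - h * h)  # w times the tanh derivative at this step
    return grad


zeros = [0.0] * 50
print(grad_through_time(0.5, zeros))  # 0.5**50, about 8.9e-16: vanishing
print(grad_through_time(3.0, zeros))  # 3**50, about 7.2e23: exploding
```

Over 50 steps, a recurrent weight only slightly away from 1 makes the learning signal from early inputs either negligible or numerically unstable. LSTMs and GRUs address the vanishing case with gates and an additive state path. Exploding gradients are commonly handled with gradient clipping.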

Future Trends in Neural Network Architectures

Hybrid models that combine Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) pair spatial feature extraction with temporal sequence modeling, for example by running a CNN on each video frame and an RNN across frames. Newer architectures borrow ideas from both families. Transformers replace recurrence with self-attention, which models dependencies across a whole sequence while remaining parallelizable, and they have displaced RNNs in many natural language tasks. Graph Neural Networks generalize convolution-style local aggregation to graph-structured data. Research on explainability and energy-efficient networks is also shaping how these models are optimized for deployment, from edge AI devices to large-scale cloud environments.
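The CNN-plus-RNN hybrid pattern can be sketched end to end in a few lines. A convolutional stage turns each frame into a feature, and a recurrent stage models how that feature evolves over time. Everything here is an illustrative assumption: the function names, the tiny 3x4 frames, the hand-picked edge kernel, the scalar feature from global average pooling, and the fixed RNN weights. Real hybrids learn both stages jointly and use many channels.

```python
import math
from typing import List

Frame = List[List[float]]


def conv_feature(frame: Frame, kernel: Frame) -> float:
    """Spatial stage: valid 2D cross-correlation, ReLU, then global average pooling to one number."""
    kh, kw = len(kernel), len(kernel[0])
    responses = [
        max(0.0, sum(frame[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw)))
        for i in range(len(frame) - kh + 1)
        for j in range(len(frame[0]) - kw + 1)
    ]
    return sum(responses) / len(responses)


def cnn_rnn(frames: List[Frame], kernel: Frame, w_x: float = 1.0, w_h: float = 0.5) -> float:
    """Temporal stage: a scalar tanh RNN over per-frame CNN features; returns the final hidden state."""
    h = 0.0
    for frame in frames:
        h = math.tanh(w_x * conv_feature(frame, kernel) + w_h * h)
    return h


edge_kernel = [[-1.0, 1.0], [-1.0, 1.0]]         # hand-picked, not learned
blank = [[0.0] * 4 for _ in range(3)]            # frame with no edge
edge = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]  # frame with one vertical edge

print(cnn_rnn([edge, blank], edge_kernel))  # edge seen first, then faded by the recurrence
print(cnn_rnn([blank, edge], edge_kernel))  # edge seen last: larger final state
```

The CNN alone would give the same per-frame features for both clips. Only the recurrent stage distinguishes the order in which they appear, which is the motivation for combining the two.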

Convolutional Neural Networks vs Recurrent Neural Networks Infographic

techiny.com
