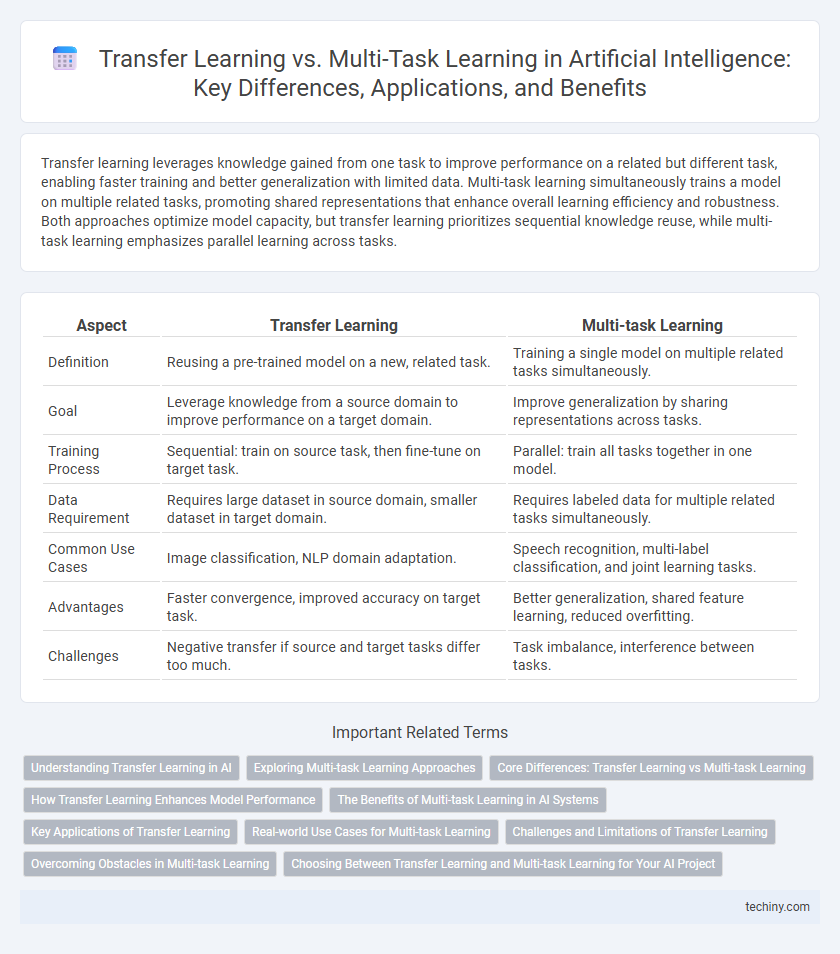

Transfer learning reuses knowledge gained on one task to improve performance on a related but different task, which typically speeds up training and improves generalization when target data is limited. Multi-task learning trains a single model on several related tasks at once, so the tasks share representations that can make learning more data-efficient and robust. Both approaches get more out of a model's capacity than training each task in isolation, but transfer learning reuses knowledge sequentially, while multi-task learning learns the tasks jointly.

Table of Comparison

| Aspect | Transfer Learning | Multi-task Learning |
|---|---|---|
| Definition | Reusing a pre-trained model on a new, related task. | Training a single model on multiple related tasks simultaneously. |
| Goal | Leverage knowledge from a source domain to improve performance on a target domain. | Improve generalization by sharing representations across tasks. |
| Training Process | Sequential: train on the source task, then fine-tune on the target task. | Parallel: train all tasks together in one model. |
| Data Requirement | A large dataset in the source domain and a smaller dataset in the target domain. | Labeled data for several related tasks, available at training time. |
| Common Use Cases | Image classification, NLP domain adaptation. | Speech recognition, multi-label classification, and other joint learning tasks. |
| Advantages | Faster convergence, improved accuracy on the target task. | Better generalization, shared feature learning, reduced overfitting. |
| Challenges | Negative transfer if the source and target tasks differ too much. | Task imbalance, interference between tasks. |

Understanding Transfer Learning in AI

Transfer learning in AI enables models to leverage knowledge gained from one task to improve performance on related but distinct tasks, reducing the need for extensive labeled data. This technique involves pre-training a neural network on a large dataset and fine-tuning it on a smaller, task-specific dataset, enhancing efficiency and accuracy. Transfer learning is particularly effective in domains like natural language processing and computer vision, where labeled data scarcity poses significant challenges.
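To make the pre-train-then-fine-tune recipe concrete, here is a minimal sketch under deliberately toy assumptions: a one-variable linear model y ≈ w·x + b trained with plain gradient descent, a large synthetic source task, and a three-point target task whose intercept differs slightly. The datasets, the helper names `train` and `mse`, and the step counts are all hypothetical; real transfer learning uses deep networks, but the mechanism of reusing learned weights as the starting point is the same.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(w, b, data, lr=0.1, steps=100):
    """Full-batch gradient descent on MSE, starting from the given (w, b)."""
    n = len(data)
    for _ in range(steps):
        gw = sum(2 * (w * x + b - y) * x for x, y in data) / n
        gb = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * gw, b - lr * gb
    return w, b


# Hypothetical tasks: a large source dataset and a tiny, related target dataset.
source_data = [(i / 50, 3.0 * (i / 50) + 0.5) for i in range(-50, 51)]
target_data = [(x, 3.0 * x + 0.8) for x in (-1.0, 0.0, 1.0)]

# 1) Pre-train on the source task.
pretrained = train(0.0, 0.0, source_data, steps=500)

# 2) Fine-tune the pre-trained weights on the target task with a small budget...
finetuned = train(*pretrained, target_data, steps=5)
# ...and compare with training from scratch on the same budget.
scratch = train(0.0, 0.0, target_data, steps=5)

print(f"fine-tuned MSE:   {mse(*finetuned, target_data):.4f}")
print(f"from-scratch MSE: {mse(*scratch, target_data):.4f}")
```

With the same five-step budget, the fine-tuned model ends up much closer to the target data than the one trained from scratch, because it starts with the slope already learned on the source task and only has to adjust the intercept.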

Exploring Multi-task Learning Approaches

Multi-task learning (MTL) improves generalization by training on multiple related tasks simultaneously, using shared representations so that what the model learns for one task can help the others. Compared with training a separate model per task, the shared parameters act as a regularizer that reduces the risk of overfitting, and tasks with little labeled data can benefit from the training signal of the others. The two most common designs are hard parameter sharing, where all tasks share the hidden layers and each task has its own output head, and soft parameter sharing, where each task keeps its own model and the parameters are encouraged to stay similar (for example through a regularization term). Both are widely used in complex domains such as natural language processing and computer vision.
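Hard parameter sharing, in which all tasks share the same hidden layers and each task gets its own output head, can be illustrated with a deliberately tiny model: a shared trunk that computes h = s·x and, per task, a head that computes a·h + c. The two regression tasks, their targets, and all names below are hypothetical; the point is that the shared parameter `s` receives gradient from both tasks' losses, while each head is updated only by its own task.

```python
# Toy hard parameter sharing: one shared trunk parameter, one head per task.
xs = [i / 10 for i in range(-10, 11)]
targets = {
    "task1": [2 * x + 1 for x in xs],  # hypothetical task 1: y = 2x + 1
    "task2": [-4 * x for x in xs],     # hypothetical task 2: y = -4x
}

shared = {"s": 1.0}                                           # trunk: h = s * x
heads = {name: {"a": 0.1, "c": 0.0} for name in targets}     # head: y = a * h + c
task_weights = {"task1": 1.0, "task2": 1.0}                   # loss weight per task


def predict(task, x):
    h = shared["s"] * x                 # shared representation
    head = heads[task]
    return head["a"] * h + head["c"]    # task-specific output


def joint_loss():
    """Weighted sum of per-task mean squared errors."""
    return sum(
        task_weights[t] * sum((predict(t, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
        for t, ys in targets.items()
    )


def train_step(lr=0.1):
    grad_s = 0.0
    head_grads = {}
    for t, ys in targets.items():
        head = heads[t]
        ga = gc = 0.0
        for x, y in zip(xs, ys):
            err = predict(t, x) - y
            scale = 2 * task_weights[t] * err / len(xs)
            ga += scale * shared["s"] * x    # d(loss)/da for this task's head
            gc += scale                      # d(loss)/dc for this task's head
            grad_s += scale * head["a"] * x  # every task adds to the shared gradient
        head_grads[t] = (ga, gc)
    # Apply all updates after the gradients are computed from the same parameters.
    shared["s"] -= lr * grad_s
    for t, (ga, gc) in head_grads.items():
        heads[t]["a"] -= lr * ga
        heads[t]["c"] -= lr * gc


initial = joint_loss()
for _ in range(1000):
    train_step()
final = joint_loss()
print(f"joint loss: {initial:.3f} -> {final:.2e}")
```

Changing `task_weights` is the simplest knob for the task-imbalance problem listed in the comparison table; adaptive weighting schemes adjust these values automatically during training.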

Core Differences: Transfer Learning vs Multi-task Learning

Transfer learning takes a model pre-trained on a large source dataset and adapts it to a related but different target task, transferring knowledge from the source domain to the target domain. Multi-task learning trains a single model on multiple related tasks at the same time, aiming to improve generalization by sharing representations across them. The core difference is that transfer learning adapts sequentially (first the source task, then the target task), whereas multi-task learning optimizes the shared parameters jointly for all tasks.
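The contrast can be written as two kinds of optimization problem. The notation here is assumed for illustration: $\mathcal{L}_S$ and $\mathcal{L}_T$ are the source and target losses, $\mathcal{L}_k$ is the loss of task $k$ among $K$ tasks, $\theta_{\mathrm{sh}}$ are the shared parameters, $\theta_k$ the task-specific ones, and $w_k$ are task weights chosen by the practitioner.

```latex
% Transfer learning: two stages, the second initialized from the first
\theta_S^{*} = \arg\min_{\theta} \mathcal{L}_S(\theta), \qquad
\theta_T^{*} = \arg\min_{\theta} \mathcal{L}_T(\theta)
\quad \text{(optimization initialized at } \theta = \theta_S^{*}\text{)}

% Multi-task learning: one joint objective over shared and task-specific parameters
\min_{\theta_{\mathrm{sh}},\, \theta_1, \dots, \theta_K} \; \sum_{k=1}^{K} w_k \, \mathcal{L}_k(\theta_{\mathrm{sh}}, \theta_k)
```

In transfer learning the second problem is usually solved only approximately (a limited amount of fine-tuning), and the source model's output layer is often replaced with one suited to the target task.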

How Transfer Learning Enhances Model Performance

Transfer learning improves model performance by starting from a model pre-trained on a large dataset, which speeds up learning and often improves accuracy on related but distinct tasks. Because the pre-trained features already capture general patterns, the model needs less labeled data and less training time to perform well in the target domain. This is especially valuable where data is scarce, since it helps the model generalize instead of overfitting a small training set.
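A common low-data variant keeps the pre-trained layers frozen and trains only a new output head on the target examples (often called feature extraction or linear probing). The sketch below assumes a hypothetical frozen backbone given as fixed weights (`BACKBONE`) and four made-up labeled target examples; only the head's weights and bias are updated.

```python
import copy

# Hypothetical "pre-trained" backbone: fixed weights standing in for learned features.
BACKBONE = [
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, 1.0],
]


def features(x):
    """Frozen feature extractor: a linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE]


def head_mse(head, data):
    """MSE of a linear head (weights, bias) applied on top of the frozen features."""
    weights, bias = head
    total = 0.0
    for x, y in data:
        f = features(x)
        pred = sum(w * fi for w, fi in zip(weights, f)) + bias
        total += (pred - y) ** 2
    return total / len(data)


def train_head(data, lr=0.05, steps=2000):
    """Gradient descent on the head only; BACKBONE is never modified."""
    weights, bias = [0.0] * len(BACKBONE), 0.0
    n = len(data)
    for _ in range(steps):
        gw, gb = [0.0] * len(weights), 0.0
        for x, y in data:
            f = features(x)
            err = sum(w * fi for w, fi in zip(weights, f)) + bias - y
            gw = [g + 2 * err * fi / n for g, fi in zip(gw, f)]
            gb += 2 * err / n
        weights = [w - lr * g for w, g in zip(weights, gw)]
        bias -= lr * gb
    return weights, bias


# A handful of labeled target examples (hypothetical).
target_data = [([1.0, 0.0], 2.5), ([0.0, 1.0], -0.5), ([1.0, 1.0], 2.0), ([2.0, 1.0], 4.5)]

backbone_before = copy.deepcopy(BACKBONE)
head = train_head(target_data)
print("head MSE after training:", head_mse(head, target_data))
```

Because only a few head parameters are trained, this needs far fewer labeled target examples than training the whole network; when more target data is available, a common next step is to unfreeze and fine-tune the backbone as well.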

The Benefits of Multi-task Learning in AI Systems

Multi-task learning lets an AI system learn several related tasks at once, which can improve generalization and reduce overfitting compared with separate single-task models. Because the tasks share representations, each task benefits from the training signal of the others, which improves data efficiency and can speed up convergence. This is particularly useful when some tasks have little labeled data, since knowledge shared between tasks boosts overall model performance.
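One concrete way to see what sharing buys is to count parameters. The sketch below assumes hypothetical layer sizes (a 100-dimensional input, a two-layer trunk of width 64, and a one-unit output head per task) and compares three separate networks with one network whose trunk is shared under hard parameter sharing. It is an illustration of why each task has fewer parameters to fit, not a measurement of data efficiency.

```python
def dense_params(sizes):
    """Count weights plus biases in a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


# Hypothetical sizes: 100-dim input, two trunk layers of width 64, one output per task.
TRUNK = [100, 64, 64]
HEAD = [64, 1]
NUM_TASKS = 3

trunk_params = dense_params(TRUNK)
head_params = dense_params(HEAD)

separate_models = NUM_TASKS * (trunk_params + head_params)  # one full network per task
shared_trunk = trunk_params + NUM_TASKS * head_params       # hard parameter sharing

print(separate_models, shared_trunk)  # 32067 10819
```

The trunk dominates the count, so adding a task to the shared model costs only one extra head rather than a whole new network.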

Key Applications of Transfer Learning

Transfer learning excels where labeled data is scarce: models pre-trained on large datasets can be adapted efficiently to new, related tasks such as image recognition, natural language processing, and medical diagnostics. In domains like autonomous driving and speech recognition, starting from general-purpose pre-trained models can substantially improve performance over training from scratch. For industry, the main benefits are shorter training times and better accuracy in specialized settings.

Real-world Use Cases for Multi-task Learning

Multi-task learning lets AI models tackle multiple related tasks at once by sharing representations, improving efficiency and performance in domains such as autonomous driving, where perception, prediction, and planning must operate together. In healthcare diagnostics, a single model can handle several medical imaging tasks concurrently, which can improve accuracy and reduce the amount of training data each task needs. Unlike transfer learning's sequential adaptation, this joint approach is well suited to complex problems whose subtasks are closely interconnected.

Challenges and Limitations of Transfer Learning

Transfer learning faces challenges from domain mismatch: a model trained on a source task may generalize poorly to a target task whose data distribution differs. It also relies on a large, high-quality source dataset (or a model already pre-trained on one), which limits its use in domains where no such related data exists. Finally, negative transfer occurs when knowledge from an insufficiently related source task degrades target performance, which makes choosing an appropriate source task difficult.
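A cheap first check for domain mismatch, before committing to a source model, is to compare the distribution of backbone features on source and target data. The sketch below uses the squared maximum mean discrepancy (MMD) with a linear kernel, which reduces to the squared distance between the two feature means. The feature values are hypothetical, and a small score does not rule out negative transfer.

```python
def mean_vector(samples):
    """Column-wise mean of a list of equal-length feature vectors."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]


def linear_mmd2(source_feats, target_feats):
    """Squared MMD with a linear kernel: squared distance between feature means.

    Only sensitive to shifts in the mean; richer kernels (e.g. Gaussian) catch more.
    """
    ms, mt = mean_vector(source_feats), mean_vector(target_feats)
    return sum((a - b) ** 2 for a, b in zip(ms, mt))


# Hypothetical 2-D features produced by a pre-trained backbone.
source = [[0.0, 0.0], [1.0, 1.0]]
same_mean_target = [[0.0, 1.0], [1.0, 0.0]]
shifted_target = [[3.0, 3.0], [4.0, 4.0]]

print(linear_mmd2(source, same_mean_target))  # 0.0
print(linear_mmd2(source, shifted_target))    # 18.0
```

The first comparison shows the limitation: the two sets contain different points but have identical means, so the linear-kernel score is zero. A characteristic kernel such as a Gaussian (RBF) kernel is needed to detect differences beyond the mean.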

Overcoming Obstacles in Multi-task Learning

Multi-task learning faces challenges such as task interference and negative transfer, where gradients from different tasks point in conflicting directions and hurt overall performance. Transfer learning can help here: initializing the shared layers from a model pre-trained on a large source task gives every task a stronger starting feature extractor. Techniques such as gradient surgery (which removes the conflicting component of one task's gradient) and adaptive loss weighting (which rebalances the tasks during training) further reduce these conflicts, making multi-task learning more efficient and robust.
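Gradient surgery can be sketched in a few lines. The version below follows the idea of PCGrad (projecting conflicting gradients): whenever one task's gradient has a negative dot product with another task's gradient, the conflicting component is projected away before the gradients are summed. It assumes the per-task gradients of the shared parameters are already available as plain lists of floats; in a real framework they would come from a separate backward pass per task. Adaptive loss weighting is a different technique and is not shown.

```python
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def pcgrad(task_grads, rng=None):
    """PCGrad-style gradient surgery on per-task gradients of the shared parameters.

    For each task gradient, subtract its projection onto any other task's gradient it
    conflicts with (negative dot product), then sum the adjusted gradients.
    """
    adjusted = []
    for i, g_i in enumerate(task_grads):
        g = list(g_i)
        others = [g_j for j, g_j in enumerate(task_grads) if j != i]
        if rng is not None:
            rng.shuffle(others)  # PCGrad visits the other tasks in random order
        for g_j in others:
            conflict = dot(g, g_j)
            norm_sq = dot(g_j, g_j)
            if conflict < 0 and norm_sq > 0:
                scale = conflict / norm_sq
                g = [a - scale * b for a, b in zip(g, g_j)]  # drop the conflicting part
        adjusted.append(g)
    return [sum(parts) for parts in zip(*adjusted)]


grads = [[1.0, 0.0], [-1.0, 1.0]]
print(pcgrad(grads))                          # [0.5, 1.5]
print([sum(p) for p in zip(*grads)])          # plain sum: [0.0, 1.0]
print(pcgrad(grads, rng=random.Random(0)))    # same here: each task has one other task
```

Under the plain sum, the update has zero dot product with the first task's gradient, so that task makes no progress; the PCGrad update has a positive dot product with both task gradients.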

Choosing Between Transfer Learning and Multi-task Learning for Your AI Project

Choosing between transfer learning and multi-task learning depends on the availability of labeled data and the similarity between tasks in your AI project. Transfer learning excels when adapting a pre-trained model to a new but related task with limited data, leveraging knowledge from a source domain to improve performance. Multi-task learning is ideal when multiple related tasks can be learned simultaneously, enabling shared representations that enhance generalization across all tasks.

Transfer Learning vs Multi-task Learning Infographic

techiny.com
