Transfer learning reuses knowledge from a model pre-trained on one task to improve performance on a related but different task, often substantially reducing training time and data requirements. Multitask learning trains one model on several related tasks at once, encouraging shared representations that can improve generalization and robustness across those tasks. Both approaches exploit commonalities among tasks, but transfer learning moves knowledge sequentially from a source task to a target task, whereas multitask learning learns all tasks concurrently to improve efficiency across them.

Table of Comparison

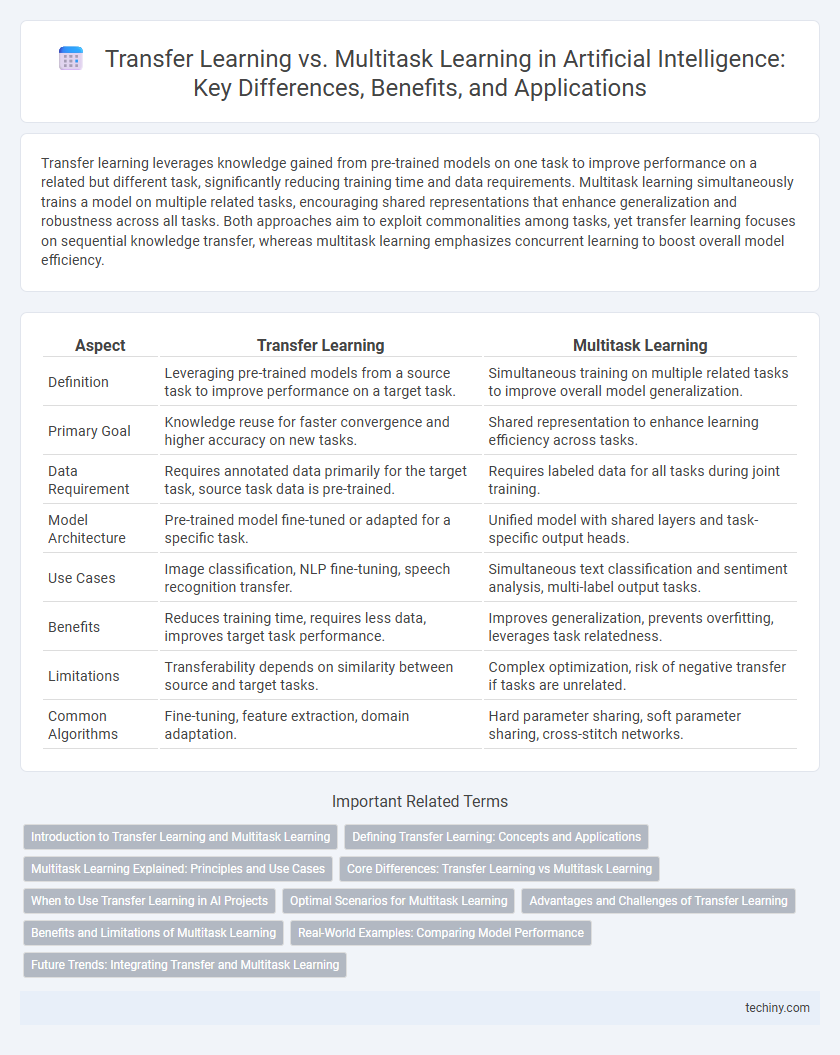

| Aspect | Transfer Learning | Multitask Learning |
|---|---|---|
| Definition | Reusing a model pre-trained on a source task to improve performance on a target task. | Training on multiple related tasks simultaneously to improve overall model generalization. |
| Primary Goal | Knowledge reuse for faster convergence and higher accuracy on new tasks. | Shared representations that improve learning efficiency across tasks. |
| Data Requirement | Requires labeled data mainly for the target task; the source-task data has already been used to pre-train the model. | Requires labeled data for all tasks during joint training. |
| Model Architecture | Pre-trained model fine-tuned or adapted for a specific task. | Unified model with shared layers and task-specific output heads. |
| Use Cases | Image classification, NLP fine-tuning, speech recognition. | Joint text classification and sentiment analysis, multi-label output tasks. |
| Benefits | Reduces training time, requires less data, improves target-task performance. | Improves generalization, reduces overfitting, leverages task relatedness. |
| Limitations | Transferability depends on similarity between source and target tasks. | Complex optimization; risk of negative transfer if tasks are unrelated. |
| Common Algorithms | Fine-tuning, feature extraction, domain adaptation. | Hard parameter sharing, soft parameter sharing, cross-stitch networks. |
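Two of the multitask techniques named in the table can be sketched in a few lines of plain Python. This is a minimal illustration under simplifying assumptions (activations and parameters as flat lists, a single pair of tasks), not a reference implementation: a cross-stitch unit linearly mixes the same-layer activations of two task networks, and soft parameter sharing keeps separate weights per task while penalizing their distance. The function names `cross_stitch` and `soft_sharing_penalty` are hypothetical.

```python
def cross_stitch(x_a, x_b, alpha):
    """Cross-stitch unit: linearly mix same-layer activations of two task networks.

    alpha is a 2x2 matrix [[a_aa, a_ab], [a_ba, a_bb]]; in a real network these
    mixing weights are learned together with the rest of the model.
    """
    out_a = [alpha[0][0] * a + alpha[0][1] * b for a, b in zip(x_a, x_b)]
    out_b = [alpha[1][0] * a + alpha[1][1] * b for a, b in zip(x_a, x_b)]
    return out_a, out_b


def soft_sharing_penalty(params_a, params_b, strength):
    """Soft parameter sharing: each task keeps its own weights, and an L2
    penalty on their difference pulls corresponding weights toward each other."""
    return strength * sum((pa - pb) ** 2 for pa, pb in zip(params_a, params_b))


# Identity mixing leaves each task's activations unchanged (no sharing).
print(cross_stitch([1.0, 2.0], [3.0, 4.0], [[1.0, 0.0], [0.0, 1.0]]))
# -> ([1.0, 2.0], [3.0, 4.0])
# Equal mixing gives both tasks the same averaged activations.
print(cross_stitch([1.0, 2.0], [3.0, 4.0], [[0.5, 0.5], [0.5, 0.5]]))
# -> ([2.0, 3.0], [2.0, 3.0])
print(soft_sharing_penalty([1.0, 2.0], [1.0, 4.0], strength=0.5))
# -> 2.0
```

The mixing matrix spans a range between the two extremes: the identity corresponds to fully separate networks, while equal weights force both tasks onto a common representation at that layer. The penalty would be added to the sum of the task losses during training.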

Introduction to Transfer Learning and Multitask Learning

Transfer learning uses models pre-trained on large datasets to improve performance on related but distinct tasks that have limited data, improving both efficiency and accuracy. Multitask learning trains a single model on multiple related tasks at the same time, so that shared feature representations improve generalization across all of them. Both approaches share knowledge across tasks, reducing the need for extensive labeled data in new or concurrent learning scenarios.

Defining Transfer Learning: Concepts and Applications

Transfer learning involves leveraging knowledge gained from training a model on one task and applying it to improve learning in a related but different task, significantly reducing the need for large datasets and computational resources. Common applications include natural language processing, where pre-trained language models like BERT are fine-tuned for specific tasks such as sentiment analysis or question answering. This approach enhances model efficiency and accuracy by reusing learned features and representations across domains.
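The feature-extraction form of transfer learning can be sketched in plain Python. The weights in `PRETRAINED_W` are hypothetical stand-ins for weights learned on a source task, and the toy target dataset is invented for illustration. Only a new logistic-regression head is trained on the target data while the extractor stays frozen; full fine-tuning would also update the extractor, usually with a small learning rate.

```python
import math

# Hypothetical "pretrained" feature extractor: in practice these weights would
# come from a model trained on a large source task; here they are fixed numbers.
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    # Frozen layer: linear map followed by ReLU; these weights are never updated.
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, lr=0.5, epochs=200):
    """Fit a new logistic-regression head on top of the frozen features."""
    w, b = [0.0] * len(PRETRAINED_W), 0.0
    for _ in range(epochs):
        for x, y in data:
            h = extract_features(x)
            p = sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)
            grad = p - y  # derivative of binary cross-entropy w.r.t. the logit
            w = [wi - lr * grad * hi for wi, hi in zip(w, h)]
            b -= lr * grad
    return w, b


def predict(x, w, b):
    h = extract_features(x)
    return sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)


# Small, invented target-task dataset: label 1 when the first input is larger.
target_data = [([2.0, 0.0], 1), ([0.0, 2.0], 0), ([3.0, 1.0], 1), ([1.0, 3.0], 0)]
w, b = train_head(target_data)
for x, y in target_data:
    print(x, y, round(predict(x, w, b), 3))
```

Because only the head's few parameters are learned, four labeled examples are enough here; this is the data-saving effect the paragraph describes, in miniature.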

Multitask Learning Explained: Principles and Use Cases

Multitask learning (MTL) is a machine learning paradigm in which a single model is trained simultaneously on multiple related tasks, using shared representations to improve generalization and efficiency. By exploiting commonalities among tasks, MTL can reduce overfitting and often improves performance on each task compared with training separate models. Applications of multitask learning span natural language processing, computer vision, and recommendation systems, where models benefit from learning complementary information across tasks.
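A minimal hard-parameter-sharing sketch in plain Python, assuming two toy regression tasks invented for illustration (task "a" is x0 + x1, task "b" is x0 - x1). One shared linear layer feeds a separate linear head per task; each SGD step minimizes a weighted sum of the task losses, so the shared layer receives gradient from both tasks. The class name `HardSharingModel` and its parameters are hypothetical, and the layers are kept linear for brevity.

```python
import random


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


class HardSharingModel:
    """Hard parameter sharing: one shared linear layer feeding one linear head
    per task. All tasks are regression tasks with squared-error loss here."""

    def __init__(self, n_in, n_hidden, tasks, seed=0):
        rng = random.Random(seed)
        self.shared = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.heads = {t: [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for t in tasks}

    def forward(self, x):
        h = matvec(self.shared, x)  # shared representation used by every task
        return h, {t: dot(v, h) for t, v in self.heads.items()}

    def step(self, x, targets, weights, lr):
        """One SGD step on the joint loss sum_t weights[t] * (pred_t - y_t)^2."""
        h, preds = self.forward(x)
        loss = sum(weights[t] * (preds[t] - targets[t]) ** 2 for t in self.heads)
        grad_h = [0.0] * len(h)  # gradient w.r.t. h, summed over all task heads
        for t, v in list(self.heads.items()):
            g = 2 * weights[t] * (preds[t] - targets[t])
            for j in range(len(h)):
                grad_h[j] += g * v[j]  # uses the head weights from before this step
            self.heads[t] = [vj - lr * g * hj for vj, hj in zip(v, h)]  # task-specific update
        # The shared layer is updated with the combined gradient of all tasks.
        self.shared = [
            [w - lr * grad_h[j] * xi for w, xi in zip(row, x)]
            for j, row in enumerate(self.shared)
        ]
        return loss


data = [([1.0, 0.0], {"a": 1.0, "b": 1.0}),
        ([0.0, 1.0], {"a": 1.0, "b": -1.0}),
        ([1.0, 1.0], {"a": 2.0, "b": 0.0}),
        ([1.0, -1.0], {"a": 0.0, "b": 2.0})]
model = HardSharingModel(n_in=2, n_hidden=2, tasks=("a", "b"))
weights = {"a": 1.0, "b": 1.0}  # equal task weighting
history = []
for epoch in range(300):
    history.append(sum(model.step(x, y, weights, lr=0.05) for x, y in data))
print(round(history[0], 3), round(history[-1], 3))
```

Training two separate models would duplicate the shared layer; here both tasks shape a single representation, which is where the regularizing effect described above comes from.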

Core Differences: Transfer Learning vs Multitask Learning

Transfer learning takes a model pre-trained on a source task and adapts it, typically through fine-tuning, to a different but related target task. In contrast, multitask learning trains a single model on multiple related tasks at the same time, optimizing shared representations to improve generalization across all of them. The core difference is sequential adaptation in transfer learning versus concurrent, joint optimization in multitask learning.
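One common way to write this contrast, using notation introduced here for illustration: $\mathcal{L}_s$ and $\mathcal{L}_t$ are the source and target losses, $\theta_{sh}$ are shared parameters, $\theta_k$ are the head parameters for task $k$, and $\lambda_k \ge 0$ are task weights chosen by the practitioner. Transfer learning solves two problems in sequence, the second starting from the solution of the first:

$$
\theta_s^{*} = \arg\min_{\theta} \mathcal{L}_s(\theta), \qquad
\theta_t^{*} = \arg\min_{\theta} \mathcal{L}_t(\theta) \ \text{ with optimization initialized at } \theta = \theta_s^{*}
$$

Multitask learning solves a single joint problem over all $K$ tasks:

$$
\min_{\theta_{sh},\, \theta_1, \dots, \theta_K} \; \sum_{k=1}^{K} \lambda_k \, \mathcal{L}_k(\theta_{sh}, \theta_k)
$$

In practice, fine-tuning usually also replaces the source task's output layer, so only part of $\theta_s^{*}$ is carried over.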

When to Use Transfer Learning in AI Projects

Transfer learning is well suited to AI projects in which labeled data for the target task is scarce but abundant in a related source domain: models pretrained on large datasets, such as ImageNet-trained vision models or GPT-style language models, can adapt quickly with minimal fine-tuning. It excels in scenarios requiring rapid development and deployment, such as medical imaging diagnostics or natural language understanding, where reusing pretrained knowledge reduces training time and computational cost. Transfer learning also supports domain adaptation, where a model must generalize from one domain to another with a different feature distribution.

Optimal Scenarios for Multitask Learning

Multitask learning works best when related tasks share common features, allowing a model to use shared representations for better generalization and less overfitting. It is particularly effective when labeled data is scarce for each individual task but abundant across the tasks combined, since joint training pools that supervision. Natural language processing, computer vision, and healthcare benefit from multitask learning by jointly optimizing related tasks within each domain, such as sentiment analysis in NLP, object detection in vision, and disease diagnosis in healthcare.

Advantages and Challenges of Transfer Learning

Transfer learning uses pre-trained models to significantly reduce training time and data requirements for new tasks, improving efficiency in domains with limited labeled data. It enables knowledge transfer across related tasks, improving model performance and generalization when the domains are sufficiently similar. Challenges include negative transfer when the source and target domains differ substantially, and the need for careful adaptation so that fine-tuning neither overfits the small target dataset nor erases useful pre-trained features.
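One established way to make fine-tuning "careful" in this sense is L2-SP regularization (Li, Grandvalet, and Davoine, 2018), which penalizes distance from the pre-trained starting point rather than from zero. In the sketch below, $\theta_{\text{pre}}$ are the parameters of the pre-trained layers, $\theta_{\text{pre}}^{0}$ their values before fine-tuning, $\theta_{\text{new}}$ the parameters of any newly added head, and $\alpha, \beta \ge 0$ regularization strengths to be tuned; the exact layer split is an assumption that depends on the model.

$$
\mathcal{L}(\theta) = \mathcal{L}_t(\theta) + \frac{\alpha}{2} \left\lVert \theta_{\text{pre}} - \theta_{\text{pre}}^{0} \right\rVert_2^{2} + \frac{\beta}{2} \left\lVert \theta_{\text{new}} \right\rVert_2^{2}
$$

Setting $\alpha$ very large approaches freezing the pre-trained layers (feature extraction), while $\alpha = 0$ recovers unconstrained fine-tuning.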

Benefits and Limitations of Multitask Learning

Multitask learning enhances model efficiency by simultaneously training on multiple related tasks, which improves generalization and reduces overfitting through shared representations. It mitigates the need for large labeled datasets in individual tasks, accelerating learning in data-scarce environments. However, challenges include negative transfer where unrelated tasks can degrade performance, and increased complexity in model design and optimization, requiring careful task selection and balancing.
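Task balancing and negative transfer can be made concrete with two small helpers, sketched here in plain Python. The first forms the weighted joint loss; the second flags task pairs whose gradients on the shared parameters have negative cosine similarity, a heuristic signal of conflict that underlies gradient-surgery methods such as PCGrad. The function names, the threshold, and the example gradients are hypothetical, and a negative cosine on one batch does not by itself prove that tasks are unrelated.

```python
import math


def weighted_joint_loss(task_losses, weights):
    """Joint multitask objective: sum of per-task losses times task weights."""
    return sum(weights[t] * task_losses[t] for t in task_losses)


def cosine_similarity(g1, g2):
    dot = sum(a * b for a, b in zip(g1, g2))
    n1 = math.sqrt(sum(a * a for a in g1))
    n2 = math.sqrt(sum(b * b for b in g2))
    if n1 == 0.0 or n2 == 0.0:
        return 0.0  # treat a zero gradient as neither aligned nor conflicting
    return dot / (n1 * n2)


def conflicting_pairs(task_grads, threshold=0.0):
    """Return task pairs whose gradients w.r.t. the shared parameters point in
    opposing directions (cosine similarity below the threshold)."""
    names = sorted(task_grads)
    pairs = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if cosine_similarity(task_grads[a], task_grads[b]) < threshold:
                pairs.append((a, b))
    return pairs


print(weighted_joint_loss({"a": 2.0, "b": 4.0}, {"a": 0.5, "b": 0.25}))
# -> 2.0
grads = {"a": [1.0, 0.0], "b": [-1.0, 0.1], "c": [1.0, 1.0]}
print(conflicting_pairs(grads))
# -> [('a', 'b'), ('b', 'c')]
```

Persistent conflicts between a pair of tasks are one reason to reweight them, move the split between shared and task-specific layers, or drop a task, in line with the task selection and balancing mentioned above.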

Real-World Examples: Comparing Model Performance

Transfer learning performs well in real-world applications such as medical imaging, where models pretrained on large datasets adapt quickly to specific tasks with limited labeled data. Multitask learning excels in areas like natural language processing, where jointly training on related tasks such as sentiment analysis and entity recognition can improve performance on each through shared representations. In general, transfer learning tends to need less target-task data for fine-tuning, while multitask learning tends to improve generalization by optimizing multiple objectives jointly.

Future Trends: Integrating Transfer and Multitask Learning

Future trends in artificial intelligence emphasize the integration of transfer learning and multitask learning to create more adaptable and efficient models. Combining transfer learning's ability to leverage pre-trained knowledge with multitask learning's capacity to simultaneously handle diverse tasks can significantly improve generalization and reduce training time. This synergy is expected to drive advancements in applications such as natural language processing and computer vision, enabling AI systems to perform complex tasks with greater accuracy and less data dependency.

Transfer Learning vs Multitask Learning Infographic

techiny.com