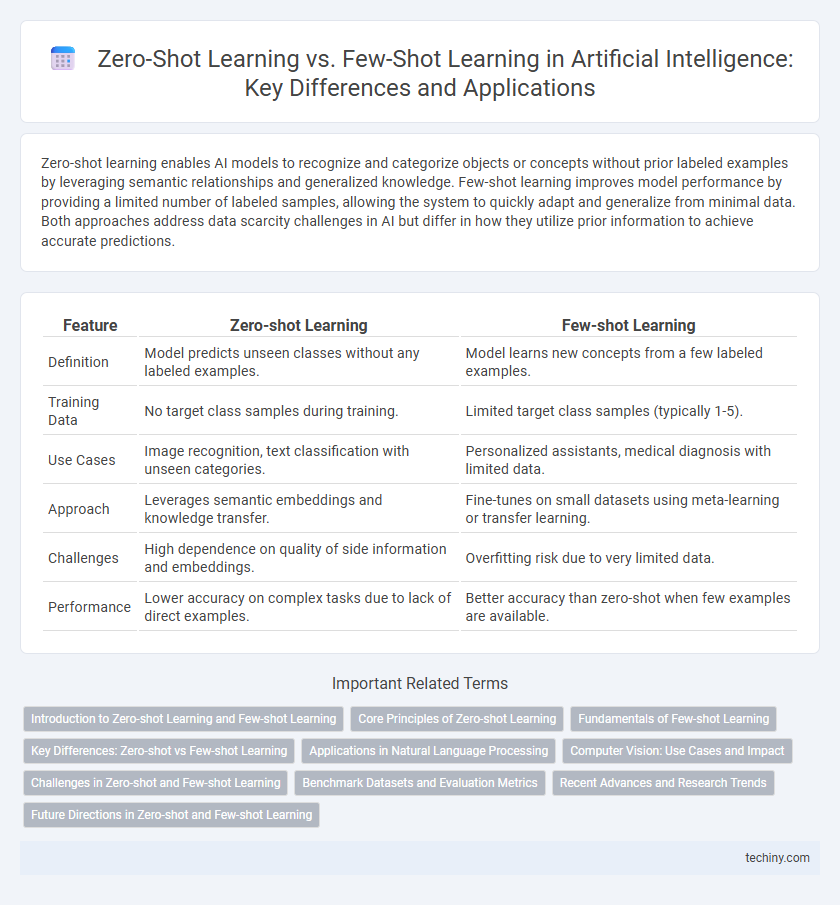

Zero-shot learning enables AI models to recognize and categorize objects or concepts without prior labeled examples by leveraging semantic relationships and generalized knowledge. Few-shot learning improves model performance by providing a limited number of labeled samples, allowing the system to quickly adapt and generalize from minimal data. Both approaches address data scarcity challenges in AI but differ in how they utilize prior information to achieve accurate predictions.

Table of Comparison

| Feature | Zero-shot Learning | Few-shot Learning |

|---|---|---|

| Definition | Model predicts unseen classes without any labeled examples. | Model learns new concepts from a few labeled examples. |

| Training Data | No target class samples during training. | Limited target class samples (typically 1-5). |

| Use Cases | Image recognition, text classification with unseen categories. | Personalized assistants, medical diagnosis with limited data. |

| Approach | Leverages semantic embeddings and knowledge transfer. | Fine-tunes on small datasets using meta-learning or transfer learning. |

| Challenges | High dependence on quality of side information and embeddings. | Overfitting risk due to very limited data. |

| Performance | Lower accuracy on complex tasks due to lack of direct examples. | Better accuracy than zero-shot when few examples are available. |

Introduction to Zero-shot Learning and Few-shot Learning

Zero-shot learning enables artificial intelligence models to recognize and classify objects or concepts without prior examples by leveraging semantic embeddings and knowledge transfer from related classes. Few-shot learning improves model adaptability by training on only a limited number of labeled samples, using techniques like meta-learning or data augmentation to enhance performance. Both approaches address challenges in data scarcity for AI, facilitating better generalization and faster adaptation in tasks such as image recognition, natural language processing, and robotics.

Core Principles of Zero-shot Learning

Zero-shot learning enables AI models to recognize and classify objects or concepts without prior exposure during training by leveraging semantic embeddings and knowledge transfer. It relies on mapping visual or textual features to a shared representation space, allowing inference on unseen classes through auxiliary information like attributes or natural language descriptions. This core principle distinguishes zero-shot learning from few-shot learning, which requires limited labeled examples for each new class.

Fundamentals of Few-shot Learning

Few-shot learning enables artificial intelligence models to recognize new concepts or tasks with minimal labeled examples, leveraging prior knowledge through meta-learning or transfer learning techniques. It focuses on building robust feature representations and parameter-efficient adaptation to generalize from limited data. This approach contrasts with zero-shot learning, which relies on semantic embeddings and external knowledge to classify unseen categories without any labeled samples.

Key Differences: Zero-shot vs Few-shot Learning

Zero-shot learning enables AI models to recognize and classify objects without any prior examples, leveraging semantic embeddings and knowledge transfer. Few-shot learning requires a limited number of labeled examples to adapt and generalize to new tasks, often using meta-learning or fine-tuning techniques. The key difference lies in data dependency: zero-shot operates with no training instances for the target class, while few-shot depends on minimal annotated samples to improve accuracy.

Applications in Natural Language Processing

Zero-shot learning enables natural language processing models to classify or generate text for unseen categories without prior labeled examples, improving adaptability in tasks like sentiment analysis and language translation. Few-shot learning leverages minimal labeled data to fine-tune models rapidly, enhancing performance in domain-specific question answering and named entity recognition. Both approaches reduce the dependence on extensive annotated datasets, accelerating deployment in multilingual and low-resource environments.

Computer Vision: Use Cases and Impact

Zero-shot learning enables computer vision systems to recognize objects or scenes without prior exposure to labeled examples, significantly enhancing scalability in image classification tasks for emerging categories. Few-shot learning improves model adaptability by requiring only a minimal number of annotated images to achieve high accuracy, proving vital for medical imaging diagnostics and personalized retail applications. Both approaches reduce dependency on large labeled datasets, accelerating deployment in real-world scenarios like autonomous driving and wildlife monitoring.

Challenges in Zero-shot and Few-shot Learning

Zero-shot learning faces significant challenges due to its reliance on transferring knowledge from seen classes to completely unseen classes without any labeled examples, often resulting in poor generalization and semantic gap issues. Few-shot learning struggles with overfitting and limited data scarcity, as models must quickly adapt to new classes with only a handful of labeled samples, making robust feature extraction and efficient use of prior knowledge essential. Both paradigms demand advanced embedding techniques and inherently face difficulties in achieving accurate class representation and minimizing bias towards seen categories.

Benchmark Datasets and Evaluation Metrics

Zero-shot learning and few-shot learning are evaluated using benchmark datasets such as ImageNet, CIFAR-100, and Omniglot, which provide diverse classes and varying sample sizes to test model generalization. Evaluation metrics commonly include accuracy, precision, recall, and F1-score, with specialized measures like mean per-class accuracy used in zero-shot learning to handle unseen categories. These benchmarks and metrics enable standardized comparison of models' ability to recognize novel classes with limited or no training examples.

Recent Advances and Research Trends

Recent advances in Artificial Intelligence have propelled zero-shot and few-shot learning techniques to new heights, enabling models to generalize from minimal or no labeled data effectively. Cutting-edge research emphasizes enhancing semantic embeddings and leveraging large-scale pre-trained models like GPT and CLIP to bridge the gap between unseen and seen classes. Emerging trends also explore hybrid architectures that integrate meta-learning with transfer learning to improve robustness and scalability in real-world applications.

Future Directions in Zero-shot and Few-shot Learning

Future directions in zero-shot and few-shot learning emphasize enhancing model generalization across diverse domains with limited labeled data. Integrating advanced techniques like meta-learning, self-supervised learning, and knowledge distillation can improve performance in unseen tasks. Research is also focused on developing scalable architectures that efficiently transfer knowledge from large pre-trained models to novel scenarios while minimizing data dependence.

Zero-shot Learning vs Few-shot Learning Infographic

techiny.com

techiny.com