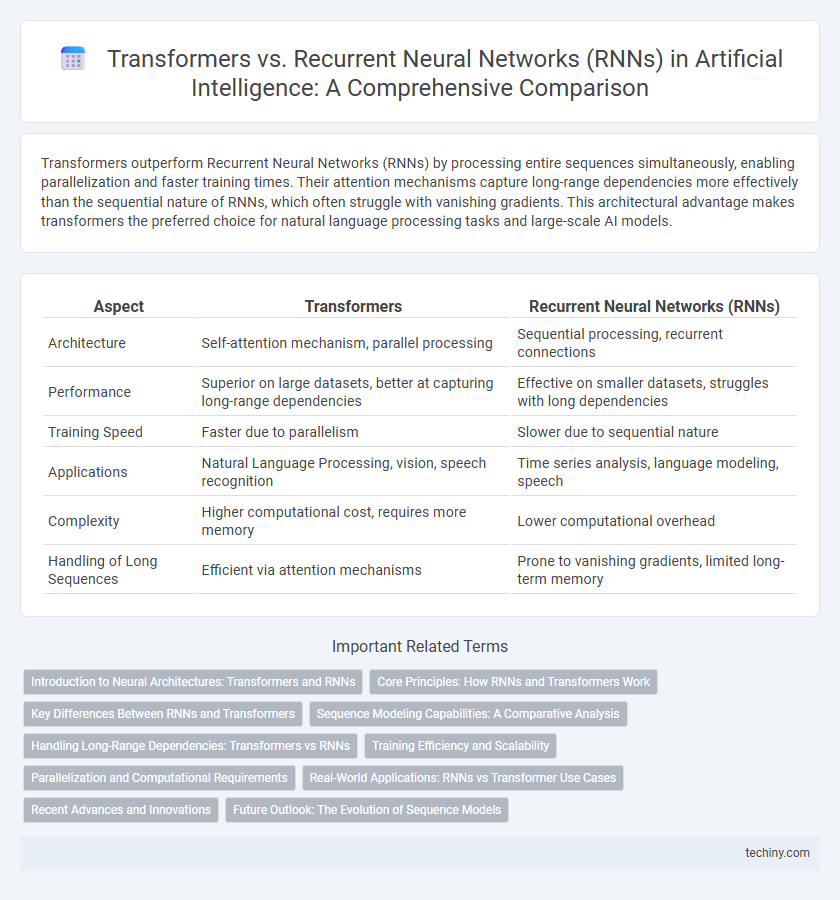

Transformers outperform Recurrent Neural Networks (RNNs) by processing entire sequences simultaneously, enabling parallelization and faster training times. Their attention mechanisms capture long-range dependencies more effectively than the sequential nature of RNNs, which often struggle with vanishing gradients. This architectural advantage makes transformers the preferred choice for natural language processing tasks and large-scale AI models.

Table of Comparison

| Aspect | Transformers | Recurrent Neural Networks (RNNs) |

|---|---|---|

| Architecture | Self-attention mechanism, parallel processing | Sequential processing, recurrent connections |

| Performance | Superior on large datasets, better at capturing long-range dependencies | Effective on smaller datasets, struggles with long dependencies |

| Training Speed | Faster due to parallelism | Slower due to sequential nature |

| Applications | Natural Language Processing, vision, speech recognition | Time series analysis, language modeling, speech |

| Complexity | Higher computational cost, requires more memory | Lower computational overhead |

| Handling of Long Sequences | Efficient via attention mechanisms | Prone to vanishing gradients, limited long-term memory |

Introduction to Neural Architectures: Transformers and RNNs

Transformers revolutionize neural architectures by utilizing self-attention mechanisms that process entire sequences simultaneously, enabling parallelization and capturing long-range dependencies more effectively than Recurrent Neural Networks (RNNs). RNNs process data sequentially, maintaining hidden states that limit their efficiency with long sequences due to vanishing gradient problems and slower training times. The shift towards Transformers has significantly advanced natural language processing tasks by improving performance in translation, summarization, and language modeling.

Core Principles: How RNNs and Transformers Work

Recurrent Neural Networks (RNNs) process sequences by maintaining hidden states that capture temporal dependencies through iterative steps, enabling them to handle sequential input with a form of memory. Transformers utilize self-attention mechanisms to weigh the importance of different parts of the input sequence simultaneously, allowing parallel processing and better modeling of long-range dependencies. The core difference lies in RNNs' sequential data flow versus Transformers' parallel attention-based architecture, which significantly improves efficiency and scalability in natural language processing tasks.

Key Differences Between RNNs and Transformers

Transformers leverage self-attention mechanisms to process entire input sequences simultaneously, enabling parallelization and capturing long-range dependencies more effectively than Recurrent Neural Networks (RNNs), which process data sequentially and struggle with vanishing gradients over long sequences. Unlike RNNs that maintain hidden states to encode temporal information, Transformers use positional encodings to retain sequence order without recursion. These architectural differences result in Transformers achieving superior performance on large-scale language tasks and faster training times compared to traditional RNN models.

Sequence Modeling Capabilities: A Comparative Analysis

Transformers excel in sequence modeling by leveraging self-attention mechanisms that capture long-range dependencies more efficiently than Recurrent Neural Networks (RNNs), which process sequences sequentially and often struggle with vanishing gradient issues. The parallelizable architecture of Transformers allows for faster training on large datasets, improving performance on tasks such as natural language processing and time series analysis. RNNs, including variants like LSTM and GRU, remain effective for modeling sequential data with shorter dependencies but are generally outperformed by Transformers in handling complex, long-range sequence relationships.

Handling Long-Range Dependencies: Transformers vs RNNs

Transformers excel at handling long-range dependencies by leveraging self-attention mechanisms that allow direct connections between distant tokens, overcoming the vanishing gradient problem typical in Recurrent Neural Networks (RNNs). RNNs process sequences sequentially, which limits their ability to capture dependencies over extended context lengths due to gradient decay during backpropagation through time. This makes Transformers more effective for tasks requiring understanding of global context, such as language modeling and machine translation.

Training Efficiency and Scalability

Transformers outperform Recurrent Neural Networks (RNNs) in training efficiency due to their parallel processing capabilities, which eliminate sequential data dependencies inherent in RNNs. The self-attention mechanism in Transformers enables better scalability by allowing models to handle longer sequences without degradation in performance or exponential growth in computational resources. Large-scale applications in natural language processing and computer vision demonstrate that Transformers achieve faster convergence and improved accuracy compared to traditional RNN architectures.

Parallelization and Computational Requirements

Transformers significantly outperform Recurrent Neural Networks (RNNs) in parallelization due to their self-attention mechanism, which enables simultaneous processing of input sequences, reducing training time on large datasets. RNNs require sequential data processing, leading to increased computational requirements and slower training speeds, especially for long sequences. The transformer architecture's scalability on modern GPUs and TPUs enhances model efficiency and supports extensive language modeling tasks more effectively than RNNs.

Real-World Applications: RNNs vs Transformer Use Cases

Transformers excel in natural language processing tasks such as language translation, text summarization, and sentiment analysis due to their ability to model long-range dependencies efficiently. Recurrent Neural Networks (RNNs) remain effective for sequential data like time series forecasting, speech recognition, and video analysis where temporal dynamics are crucial. Industry adoption favors Transformers for large-scale applications like chatbots and recommendation systems, while RNNs continue to power real-time sequence prediction in resource-constrained environments.

Recent Advances and Innovations

Transformer architectures have revolutionized natural language processing by enabling parallel processing of tokens, significantly outperforming Recurrent Neural Networks (RNNs) in handling long-range dependencies and scaling to massive datasets. Recent advances include the development of efficient transformer variants like Longformer and Reformer, which reduce computational complexity while preserving performance in large contexts. Innovations such as sparse attention mechanisms and enhanced pre-training strategies have further pushed the boundaries of transformer models, setting new benchmarks in tasks like language understanding and generation.

Future Outlook: The Evolution of Sequence Models

Transformers are poised to dominate future sequence modeling due to their superior parallel processing capabilities and ability to capture long-range dependencies, outperforming traditional Recurrent Neural Networks (RNNs) in various applications. The integration of advanced attention mechanisms within Transformers enables more efficient handling of complex sequences, driving innovations in natural language processing, speech recognition, and time-series forecasting. As research progresses, hybrid models combining the strengths of Transformers and RNNs are expected to emerge, further enhancing the evolution of sequence models for diverse AI challenges.

Transformers vs Recurrent Neural Networks (RNNs) Infographic

techiny.com

techiny.com