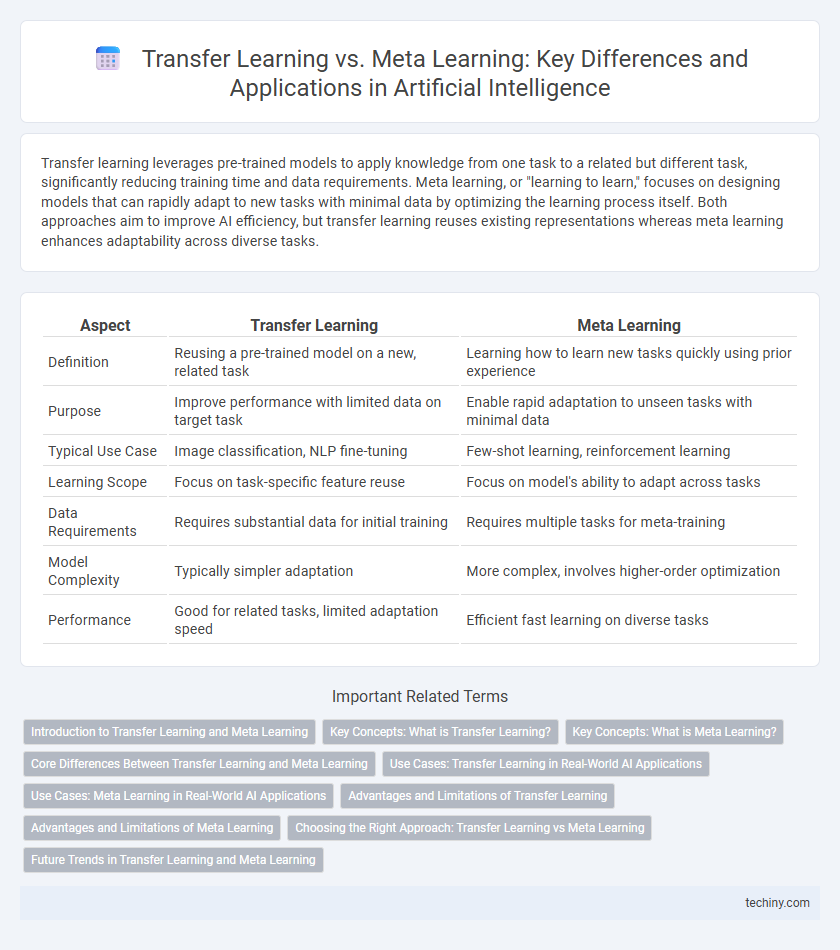

Transfer learning leverages pre-trained models to apply knowledge from one task to a related but different task, significantly reducing training time and data requirements. Meta learning, or "learning to learn," focuses on designing models that can rapidly adapt to new tasks with minimal data by optimizing the learning process itself. Both approaches aim to improve AI efficiency, but transfer learning reuses existing representations whereas meta learning enhances adaptability across diverse tasks.

Table of Comparison

| Aspect | Transfer Learning | Meta Learning |
|---|---|---|
| Definition | Reusing a pre-trained model on a new, related task | Learning how to learn new tasks quickly using prior experience |
| Purpose | Improve performance with limited data on target task | Enable rapid adaptation to unseen tasks with minimal data |
| Typical Use Case | Image classification, NLP fine-tuning | Few-shot learning, reinforcement learning |
| Learning Scope | Focus on task-specific feature reuse | Focus on model's ability to adapt across tasks |
| Data Requirements | Requires substantial data for initial training | Requires multiple tasks for meta-training |
| Model Complexity | Typically simpler adaptation | More complex, involves higher-order optimization |
| Performance | Good for related tasks, limited adaptation speed | Efficient fast learning on diverse tasks |

Introduction to Transfer Learning and Meta Learning

Transfer learning leverages knowledge gained from a pre-trained model on one task to improve learning efficiency and performance on a related, but different task, reducing the need for large datasets. Meta learning, also known as "learning to learn," focuses on designing models that can quickly adapt to new tasks with minimal data by extracting meta-knowledge across various tasks. Both approaches address the challenge of data scarcity in AI, but transfer learning emphasizes reusing learned features, while meta learning optimizes the model's adaptability and generalization capabilities.

Key Concepts: What is Transfer Learning?

Transfer learning is a machine learning technique in which a model pre-trained on a large dataset is reused as the starting point for a related but different task, enabling faster training and improved performance with limited data. This approach leverages knowledge gained from one domain to boost learning efficiency in another, reducing the need for extensive labeled data. Transfer learning is widely applied in computer vision and natural language processing, where models pre-trained on massive datasets serve as foundations for specialized tasks.
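The warm-start idea can be illustrated with a deliberately tiny sketch. It assumes a one-parameter model `y = w * x`, a data-rich source task with slope 2.0, and a target task with slope 2.2 and only three labeled examples. All names and numbers here are made up for illustration; a real pipeline would fine-tune a deep network rather than a single weight.

```python
def mse(w, data):
    """Mean squared error of the model y_hat = w * x on (x, y) pairs."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def mse_grad(w, data):
    """Derivative of the mean squared error with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def train(w, data, lr, steps):
    """Plain gradient descent starting from the given weight."""
    for _ in range(steps):
        w -= lr * mse_grad(w, data)
    return w


# Source task (assumed): 100 examples with slope 2.0.
source_data = [(k / 10, 2.0 * k / 10) for k in range(1, 101)]
# Target task (assumed): only three labeled examples with slope 2.2.
target_data = [(1.0, 2.2), (2.0, 4.4), (3.0, 6.6)]

# "Pre-training" on the source task.
pretrained_w = train(0.0, source_data, lr=0.01, steps=500)

# Same small training budget on the target task, two different starting points.
fine_tuned_w = train(pretrained_w, target_data, lr=0.01, steps=5)
scratch_w = train(0.0, target_data, lr=0.01, steps=5)

print("fine-tuned target loss:", mse(fine_tuned_w, target_data))
print("from-scratch target loss:", mse(scratch_w, target_data))
```

With the same five update steps, the run that starts from the pre-trained weight ends much closer to the target slope than the run that starts from zero, which is the effect the paragraph above describes.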

Key Concepts: What is Meta Learning?

Meta learning, often described as "learning to learn," enables AI models to adapt rapidly to new tasks by drawing on experience from related tasks, minimizing the need for extensive retraining. Unlike conventional transfer learning, which reuses a pre-trained model for a similar task, meta learning optimizes the learning process itself, improving adaptability across diverse tasks with limited data. Algorithms such as Model-Agnostic Meta-Learning (MAML), which learns an initialization that can be adapted to a new task in a few gradient steps, are used to improve generalization and accelerate learning in dynamic environments.
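To make the MAML idea more concrete, here is a minimal sketch on a toy problem. It assumes a one-parameter model `y = w * x` and a family of regression tasks whose slopes are drawn from the range 1.0 to 3.0. The task family, learning rates, and function names are illustrative assumptions, not part of any library. Because the model is a single scalar, the second-order term in the outer gradient can be written out by hand.

```python
import random


def loss(w, data):
    """Mean squared error of y_hat = w * x on (x, y) pairs."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def loss_grad(w, data):
    """First derivative of the loss with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def loss_hessian(data):
    """Second derivative of the loss with respect to w (constant in w here)."""
    return sum(2 * x * x for x, _ in data) / len(data)


def sample_task(rng, n_points=5):
    """Draw one task (a random slope) and return its support and query sets."""
    slope = rng.uniform(1.0, 3.0)

    def draw():
        return [(x, slope * x) for x in (rng.uniform(-1.0, 1.0) for _ in range(n_points))]

    return draw(), draw()


def adapt(w, support, inner_lr=0.1):
    """Inner loop: one gradient step on the task's support set."""
    return w - inner_lr * loss_grad(w, support)


def maml_train(meta_steps=2000, tasks_per_step=4, inner_lr=0.1, outer_lr=0.05, seed=0):
    """Outer loop: update the initialization so that one inner step works well."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(meta_steps):
        meta_grad = 0.0
        for _ in range(tasks_per_step):
            support, query = sample_task(rng)
            adapted = adapt(w, support, inner_lr)
            # Chain rule through the inner step: d(adapted)/dw = 1 - inner_lr * hessian.
            d_adapted_dw = 1.0 - inner_lr * loss_hessian(support)
            meta_grad += loss_grad(adapted, query) * d_adapted_dw
        w -= outer_lr * meta_grad / tasks_per_step
    return w


def mean_query_loss_after_adapting(w_init, n_tasks=200, seed=1):
    """Average query loss over fresh tasks after one inner step from w_init."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_tasks):
        support, query = sample_task(rng)
        total += loss(adapt(w_init, support), query)
    return total / n_tasks


meta_w = maml_train()
print("meta-learned initialization:", meta_w)
print("loss after one step from meta init:", mean_query_loss_after_adapting(meta_w))
print("loss after one step from zero init:", mean_query_loss_after_adapting(0.0))
```

In this toy setting the meta-learned initialization settles near the middle of the slope range, so a single adaptation step on a new task leaves far less error than the same step taken from zero. Real MAML implementations rely on automatic differentiation to carry the second-order term through deep networks.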

Core Differences Between Transfer Learning and Meta Learning

Transfer learning takes the knowledge in a model pre-trained on one task and applies it to a related but different task, primarily by reusing or fine-tuning learned features and representations. Meta learning, or "learning to learn," trains models that can quickly adapt to new tasks by optimizing the learning process itself, emphasizing rapid generalization across diverse tasks. The core difference is that transfer learning carries over representations learned on a single source task, whereas meta learning trains across many tasks to develop an adaptation strategy that generalizes to new ones.

Use Cases: Transfer Learning in Real-World AI Applications

Transfer learning accelerates model training in real-world AI applications such as image recognition, natural language processing, and medical diagnostics by starting from models pre-trained on large datasets such as ImageNet, or from large pre-trained language models such as GPT. It enables efficient adaptation to specific tasks with limited labeled data, improving performance in sectors like autonomous driving, healthcare, and customer service chatbots. Meta learning, by contrast, focuses on learning to learn across multiple tasks for rapid adaptation, but transfer learning remains the more common choice for practical deployment in industry.

Use Cases: Meta Learning in Real-World AI Applications

Meta learning excels in few-shot learning settings, enabling AI systems to adapt quickly to new tasks with minimal data, which is critical in healthcare for personalized treatment recommendations, where data for any individual patient is scarce. In robotics, meta learning facilitates rapid adaptation to dynamic conditions, enhancing autonomous navigation and manipulation capabilities. These applications illustrate meta learning's ability to transfer and generalize across diverse, changing scenarios.
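Few-shot settings like these are commonly organized into "N-way K-shot" episodes: each episode picks N classes, provides K labeled support examples per class for adaptation, and holds out a few query examples per class for evaluation. The sketch below shows one way such an episode could be sampled. The function name, the toy dataset, and its string labels are hypothetical placeholders.

```python
import random


def sample_episode(examples_by_class, n_way, k_shot, n_query, rng):
    """Sample one N-way K-shot episode as (support, query) lists of (example, label)."""
    classes = rng.sample(sorted(examples_by_class), n_way)
    support, query = [], []
    for label in classes:
        # Draw disjoint support and query examples for this class.
        picked = rng.sample(examples_by_class[label], k_shot + n_query)
        support += [(x, label) for x in picked[:k_shot]]
        query += [(x, label) for x in picked[k_shot:]]
    return support, query


# Hypothetical toy dataset: 5 classes with 4 examples each.
toy_data = {
    f"class_{c}": [f"class_{c}_item_{i}" for i in range(4)]
    for c in range(5)
}

support_set, query_set = sample_episode(
    toy_data, n_way=3, k_shot=2, n_query=1, rng=random.Random(0)
)
print("support:", support_set)
print("query:", query_set)
```

A meta-learner would adapt on `support_set` and be scored on `query_set`, repeating this over many episodes so that it is trained on the act of adapting rather than on any single task.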

Advantages and Limitations of Transfer Learning

Transfer learning leverages pre-trained models to reduce training time and improve performance on related tasks, especially when labeled data is scarce. It excels in scenarios with limited domain-specific data but may suffer from negative transfer, where the source knowledge degrades rather than improves target performance, if the source and target tasks are poorly aligned. Despite its efficiency, transfer learning adapts less readily to highly diverse or rapidly changing environments because it relies on knowledge from a fixed source task.

Advantages and Limitations of Meta Learning

Meta learning excels at adapting models quickly to new tasks with limited data, enhancing efficiency in diverse AI applications. Its ability to learn learning strategies allows generalization across tasks, but it often requires complex model design and substantial computational resources. Limitations include sensitivity to task distribution shifts and challenges in scalability for large-scale problems.

Choosing the Right Approach: Transfer Learning vs Meta Learning

Selecting between transfer learning and meta learning depends on the availability of labeled data and on task similarity. Transfer learning excels when a model pre-trained on a large dataset can be fine-tuned for a related but different task, reducing training time and resource requirements. Meta learning is preferred when a model must adapt rapidly to new, diverse tasks with minimal data, which it achieves by learning an underlying strategy for learning itself.

Future Trends in Transfer Learning and Meta Learning

Transfer learning is expected to advance through improved domain adaptation techniques and efficient knowledge transfer across heterogeneous tasks, enabling AI models to learn with fewer labeled examples. Meta learning will likely deepen its focus on rapid adaptation and generalization across diverse environments by leveraging higher-order optimization algorithms and few-shot learning paradigms. Future trends emphasize hybrid approaches combining transfer and meta learning to enhance model robustness, scalability, and real-world applicability in complex AI systems.

techiny.com
