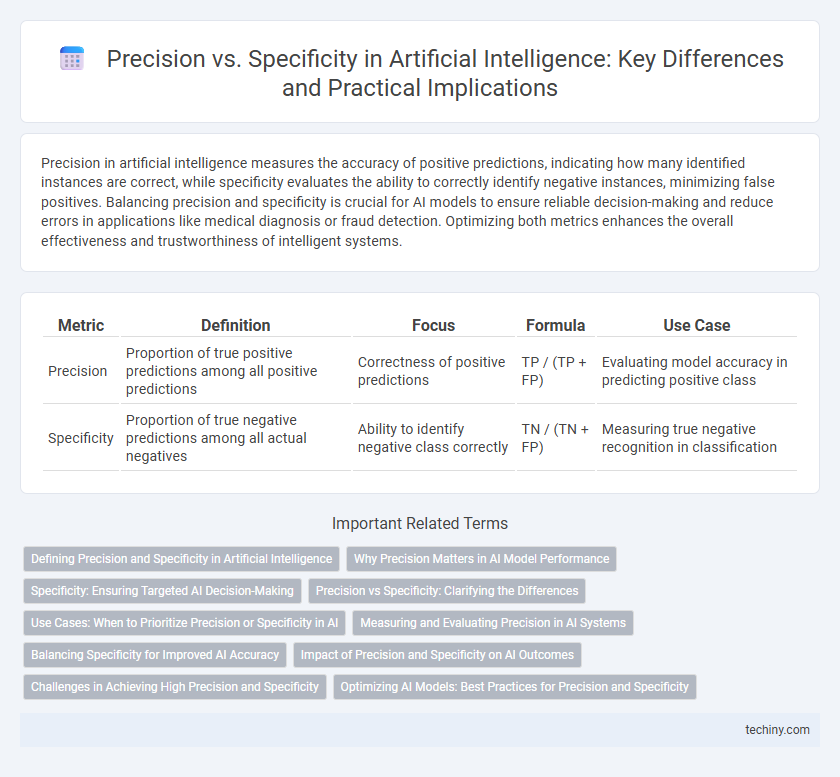

Precision in artificial intelligence measures how reliable a model's positive predictions are: of all the instances the model labels positive, the share that truly are positive. Specificity measures how well the model recognizes negative instances: of all actual negatives, the share it correctly labels negative, which keeps false positives low. Balancing precision and specificity is crucial for reliable decision-making and fewer errors in applications like medical diagnosis or fraud detection, and optimizing both metrics improves the overall effectiveness and trustworthiness of intelligent systems.

Table of Comparison

| Metric | Definition | Focus | Formula | Use Case |
|---|---|---|---|---|
| Precision | Proportion of true positive predictions among all positive predictions | Correctness of positive predictions | TP / (TP + FP) | Evaluating how accurately the model predicts the positive class |
| Specificity (true negative rate) | Proportion of true negative predictions among all actual negatives | Ability to identify the negative class correctly | TN / (TN + FP) | Measuring true negative recognition in classification |

TP, FP, and TN denote the counts of true positives, false positives, and true negatives.
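The two formulas in the table translate directly into code. The following Python sketch is illustrative only: the function names are our own, and returning `None` when a denominator is zero is an assumed convention (libraries handle this case differently).

```python
from typing import Optional


def precision(tp: int, fp: int) -> Optional[float]:
    """TP / (TP + FP): share of predicted positives that are actually positive."""
    predicted_positives = tp + fp
    if predicted_positives == 0:
        return None  # undefined: the model made no positive predictions
    return tp / predicted_positives


def specificity(tn: int, fp: int) -> Optional[float]:
    """TN / (TN + FP): share of actual negatives that the model labels negative."""
    actual_negatives = tn + fp
    if actual_negatives == 0:
        return None  # undefined: the data contain no negative examples
    return tn / actual_negatives


# Illustrative counts: 40 true positives, 10 false positives, 940 true negatives.
print(precision(tp=40, fp=10))     # 0.8
print(specificity(tn=940, fp=10))  # about 0.989
```

With these counts, the same 10 false positives make 1 in 5 positive calls wrong (precision 0.8) but misflag only about 1 in 95 actual negatives (specificity about 0.989), because the two metrics divide by different totals.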

Defining Precision and Specificity in Artificial Intelligence

Precision in artificial intelligence measures the accuracy of positive predictions by calculating the ratio of true positive results to the total predicted positives, emphasizing the reduction of false positives. Specificity, or true negative rate, quantifies the model's ability to correctly identify negative cases by dividing true negatives by the sum of true negatives and false positives, thus focusing on minimizing false alarms. Both metrics are crucial for evaluating classification models, particularly in applications like medical diagnosis and fraud detection, where accurate differentiation between classes is essential.
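Both metrics are computed from the counts in a confusion matrix. A minimal sketch, assuming binary labels encoded as 1 (positive) and 0 (negative); `ConfusionCounts` and `confusion_counts` are hypothetical names used for illustration:

```python
from typing import NamedTuple, Sequence


class ConfusionCounts(NamedTuple):
    tp: int  # actual 1, predicted 1
    fp: int  # actual 0, predicted 1
    tn: int  # actual 0, predicted 0
    fn: int  # actual 1, predicted 0


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> ConfusionCounts:
    """Tally binary outcomes, treating 1 as the positive class and 0 as negative."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual not in (0, 1) or predicted not in (0, 1):
            raise ValueError("labels must be 0 or 1")
        if predicted == 1:
            if actual == 1:
                tp += 1
            else:
                fp += 1
        elif actual == 0:
            tn += 1
        else:
            fn += 1
    return ConfusionCounts(tp, fp, tn, fn)


y_true = [1, 1, 0, 0, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 0, 0]
c = confusion_counts(y_true, y_pred)
print(c)  # ConfusionCounts(tp=2, fp=1, tn=4, fn=1)
print("precision:", c.tp / (c.tp + c.fp))    # 2 of 3 positive calls are correct
print("specificity:", c.tn / (c.tn + c.fp))  # 4 of 5 actual negatives are cleared
```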

Why Precision Matters in AI Model Performance

Precision in AI model performance measures the accuracy of positive predictions, directly impacting model reliability and user trust. High precision minimizes false positives, ensuring that the AI system delivers relevant and actionable results, especially critical in applications like medical diagnosis and fraud detection. Optimizing precision enhances decision-making processes and reduces costly errors, making it a key metric for evaluating AI effectiveness.

Specificity: Ensuring Targeted AI Decision-Making

Specificity in AI refers to the model's ability to correctly identify negative cases, minimizing false positives in decision-making processes. High specificity is crucial for applications like medical diagnostics and fraud detection, where incorrectly flagging benign instances can lead to unnecessary interventions or costs. Prioritizing specificity makes AI decisions more targeted: interventions are reserved for cases that warrant them, which builds trust in the system's alerts.

Precision vs Specificity: Clarifying the Differences

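Precision measures the proportion of true positive predictions among all positive predictions made by an AI model, reflecting the accuracy of its positive results. Specificity, also known as the true negative rate, quantifies the model's ability to correctly identify negative cases, minimizing false positives. Both metrics penalize false positives, but against different denominators: precision divides by the number of predicted positives, specificity by the number of actual negatives. As a result, precision depends on how common the positive class is while specificity does not, and neither metric reflects missed positives (false negatives). Understanding this distinction is crucial for choosing which metric to optimize given the relative costs of each type of error.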
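The dependence on class prevalence can be made concrete. Writing sensitivity (recall) for the share of actual positives the model flags, the definitions give TP = sensitivity * actual positives and FP = (1 - specificity) * actual negatives, so precision follows from sensitivity, specificity, and prevalence. The sketch below evaluates that identity; the 90% sensitivity and 95% specificity are illustrative numbers, not measurements from any real model.

```python
def precision_from_rates(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Precision implied by sensitivity, specificity, and the share of positives.

    Per example, true positives occur at rate sensitivity * prevalence and
    false positives at rate (1 - specificity) * (1 - prevalence).
    """
    for name, value in (("sensitivity", sensitivity),
                        ("specificity", specificity),
                        ("prevalence", prevalence)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1]")
    tp_rate = sensitivity * prevalence
    fp_rate = (1.0 - specificity) * (1.0 - prevalence)
    if tp_rate + fp_rate == 0.0:
        raise ValueError("precision is undefined: no positive predictions are expected")
    return tp_rate / (tp_rate + fp_rate)


# The same hypothetical classifier (90% sensitivity, 95% specificity) at two prevalences.
for prevalence in (0.50, 0.01):
    p = precision_from_rates(0.90, 0.95, prevalence)
    print(f"prevalence={prevalence:.2f}  precision={p:.3f}")
# prevalence=0.50  precision=0.947
# prevalence=0.01  precision=0.154
```

At 1% prevalence, roughly 85% of positive predictions are false alarms even though specificity is unchanged at 95%.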

Use Cases: When to Prioritize Precision or Specificity in AI

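In AI use cases such as fraud detection or medical diagnosis, prioritizing precision minimizes false positives among flagged cases, so that a positive result is highly reliable and unnecessary interventions are reduced. Specificity matters most when the negative class is large and wrongly flagging it is costly, as in spam filtering, where high specificity means legitimate emails are rarely sent to the spam folder, or confirmatory medical testing, where healthy patients should not be told they are ill. Because both metrics penalize false positives, neither accounts for missed positives (false negatives); when those are costly, recall (sensitivity) must be tracked as well, and the final balance depends on the relative cost of each type of error within the application's domain.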
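Because the right balance depends on what each type of error costs, one common approach is to choose the decision threshold that minimizes total misclassification cost on labeled validation data. The sketch below assumes the model outputs a score per example and flags it as positive when the score is at least the threshold; the toy data and the costs `cost_fp` and `cost_fn` are hypothetical values you would replace with your own.

```python
from typing import Sequence


def total_cost(scores: Sequence[float], labels: Sequence[int], threshold: float,
               cost_fp: float, cost_fn: float) -> float:
    """Misclassification cost when every example with score >= threshold is flagged positive."""
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return cost_fp * fp + cost_fn * fn


def cheapest_threshold(scores: Sequence[float], labels: Sequence[int],
                       cost_fp: float, cost_fn: float) -> float:
    """Return the candidate threshold with the lowest total cost (first one on ties)."""
    # Candidates: each observed score, plus one value above the maximum (flag nothing).
    candidates = sorted(set(scores)) + [max(scores) + 1.0]
    return min(candidates, key=lambda t: total_cost(scores, labels, t, cost_fp, cost_fn))


# Toy validation data: model scores and true labels (1 = positive).
scores = [0.95, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 0, 1, 0]

# False alarms costly (e.g. a legitimate email sent to spam): a strict threshold wins.
print(cheapest_threshold(scores, labels, cost_fp=10.0, cost_fn=1.0))  # 0.8
# Misses costly (e.g. undetected fraud): a permissive threshold wins.
print(cheapest_threshold(scores, labels, cost_fp=1.0, cost_fn=10.0))  # 0.2
```

In this example, raising the false positive cost pushes the chosen threshold up, which favors specificity and precision; raising the false negative cost pushes it down, which favors recall.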

Measuring and Evaluating Precision in AI Systems

Precision in AI systems measures the proportion of true positive results among all positive predictions, reflecting how accurately the system identifies relevant instances. Evaluating precision requires a representative test dataset, held out from training and accurately labeled, so that model predictions can be benchmarked against ground truth. High precision minimizes false positives, which is crucial in applications like medical diagnosis and fraud detection where incorrect positive predictions carry significant consequences.
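Since precision is estimated from a finite test set, it helps to report how much the estimate could vary. The sketch below uses a simple percentile bootstrap, a standard resampling technique chosen here for illustration rather than one the text prescribes; the 1,000 resamples, the fixed seed, the 95% level, and the test data are all assumptions.

```python
import random
from typing import List, Optional, Sequence, Tuple


def precision_of(pairs: Sequence[Tuple[int, int]]) -> Optional[float]:
    """Precision over (actual, predicted) pairs; None if nothing was predicted positive."""
    tp = sum(1 for actual, predicted in pairs if predicted == 1 and actual == 1)
    fp = sum(1 for actual, predicted in pairs if predicted == 1 and actual == 0)
    return tp / (tp + fp) if tp + fp else None


def bootstrap_precision_ci(y_true: Sequence[int], y_pred: Sequence[int],
                           n_resamples: int = 1000, level: float = 0.95,
                           seed: int = 0) -> Tuple[float, float]:
    """Simple percentile-bootstrap interval for precision on a labeled test set."""
    rng = random.Random(seed)  # fixed seed so the interval is reproducible
    pairs = list(zip(y_true, y_pred))
    estimates: List[float] = []
    for _ in range(n_resamples):
        sample = rng.choices(pairs, k=len(pairs))  # draw test cases with replacement
        value = precision_of(sample)
        if value is not None:  # skip resamples that contain no positive predictions
            estimates.append(value)
    if not estimates:
        raise ValueError("no resample contained a positive prediction")
    estimates.sort()
    alpha = (1.0 - level) / 2.0
    lower = estimates[int(alpha * (len(estimates) - 1))]
    upper = estimates[int((1.0 - alpha) * (len(estimates) - 1))]
    return lower, upper


# Hypothetical test set of 100 cases: 20 positive predictions, 15 of them correct.
y_pred = [1] * 20 + [0] * 80
y_true = [1] * 15 + [0] * 5 + [0] * 75 + [1] * 5
print("precision:", precision_of(list(zip(y_true, y_pred))))  # 0.75
print("95% interval:", bootstrap_precision_ci(y_true, y_pred))
```

Only the positive predictions enter the precision estimate, so the interval's width is driven mainly by how many positive predictions the test set contains, not by its total size.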

Balancing Specificity for Improved AI Accuracy

Balancing specificity is critical to overall AI accuracy, because specificity can usually be raised only at the expense of something else: a stricter decision threshold flags fewer cases, which increases specificity but lowers recall (sensitivity). Specificity as a metric should also not be confused with a model being overly "specific" to its training data; an overfitted model may show high specificity on the training set and noticeably lower specificity on unseen data. Precision measures the proportion of true positive predictions among all positive results, and it too is only meaningful when measured on held-out data. Techniques such as regularization and careful feature engineering reduce overfitting, so that the specificity and precision measured during development carry over to deployment and the system reliably identifies relevant patterns while keeping false positives low.
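The trade-off between specificity and recall can be seen by sweeping the decision threshold over a model's scores. A minimal sketch, assuming a score counts as positive when it is at least the threshold; the toy scores and labels are made up for illustration:

```python
from typing import List, Sequence, Tuple


def sweep(scores: Sequence[float], labels: Sequence[int],
          thresholds: Sequence[float]) -> List[Tuple[float, float, float]]:
    """(threshold, recall, specificity) per threshold; score >= threshold counts as positive."""
    positives = sum(1 for y in labels if y == 1)
    negatives = sum(1 for y in labels if y == 0)
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative label")
    rows = []
    for t in thresholds:
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        tn = sum(1 for s, y in zip(scores, labels) if s < t and y == 0)
        rows.append((t, tp / positives, tn / negatives))
    return rows


scores = [0.95, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 0, 1, 0]
for t, rec, spec in sweep(scores, labels, [0.15, 0.50, 0.90]):
    print(f"threshold={t:.2f}  recall={rec:.2f}  specificity={spec:.2f}")
# threshold=0.15  recall=1.00  specificity=0.25
# threshold=0.50  recall=0.75  specificity=0.75
# threshold=0.90  recall=0.25  specificity=1.00
```

As the threshold rises, specificity can only stay the same or increase while recall can only stay the same or decrease, which is why specificity is usually balanced against recall rather than maximized on its own.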

Impact of Precision and Specificity on AI Outcomes

High precision means a model's positive predictions can be trusted because few of them are false positives, which improves decision reliability in applications like medical diagnosis or fraud detection. High specificity means negative cases are correctly identified and false alarms are rare, which is critical in safety-sensitive environments such as autonomous driving or security systems, where a false alarm can trigger an unnecessary and disruptive response. Balancing precision and specificity optimizes model performance, yielding more trustworthy and effective AI outcomes across diverse industries.

Challenges in Achieving High Precision and Specificity

Achieving high precision and specificity in artificial intelligence models requires extensive, high-quality labeled data, so that the model learns to avoid false positives while still identifying true positives. Algorithms must be tuned carefully to avoid overfitting, which makes both metrics look better on training data than they are on diverse, unseen datasets. Class imbalance adds a further difficulty: when positives are rare, actual negatives vastly outnumber them, so even a model with high specificity can produce more false positives than true positives and end up with low precision. Complex feature selection and variability in real-world data further complicate optimizing these metrics.

Optimizing AI Models: Best Practices for Precision and Specificity

Optimizing AI models for precision and specificity involves tuning algorithms to reduce false positives while keeping the true positive rate high, so that outputs are accurate and relevant. Techniques such as cross-validation, decision-threshold adjustment, and confusion-matrix analysis help strike the balance between detecting relevant instances and avoiding irrelevant classifications. Domain-specific feature engineering and continuous model evaluation further improve both precision and specificity in AI systems.
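Threshold adjustment is often framed as a constraint: meet a required specificity and keep as much recall as possible. The sketch below picks the lowest threshold that meets a hypothetical `min_specificity` target and reports the resulting rates from the confusion matrix. In practice the threshold should be chosen on validation data (for example, within cross-validation folds) rather than on the final test set, so that reported metrics are not optimistically biased; `OperatingPoint`, `pick_threshold`, and the toy data are illustrative.

```python
from typing import NamedTuple, Optional, Sequence


class OperatingPoint(NamedTuple):
    threshold: float
    recall: float
    specificity: float
    precision: float


def pick_threshold(scores: Sequence[float], labels: Sequence[int],
                   min_specificity: float) -> Optional[OperatingPoint]:
    """Lowest observed-score threshold whose specificity meets the target.

    Raising the threshold never lowers specificity and never raises recall, so the
    lowest qualifying threshold keeps the most recall. Returns None if none qualifies.
    """
    positives = sum(1 for y in labels if y == 1)
    negatives = sum(1 for y in labels if y == 0)
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative label")
    for t in sorted(set(scores)):  # most permissive threshold first
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        spec = (negatives - fp) / negatives
        if spec >= min_specificity:
            # t is an observed score, so at least one example is flagged: tp + fp >= 1.
            return OperatingPoint(t, tp / positives, spec, tp / (tp + fp))
    return None


scores = [0.95, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 0, 1, 0]
print(pick_threshold(scores, labels, min_specificity=0.75))
# OperatingPoint(threshold=0.6, recall=0.75, specificity=0.75, precision=0.75)
```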

Precision vs Specificity Infographic

techiny.com
