AI bias occurs when algorithms produce unfair outcomes due to prejudiced data or flawed assumptions, leading to discrimination against certain groups. AI fairness aims to identify and mitigate these biases, ensuring equitable treatment and decisions across diverse populations. Developing transparent models and inclusive datasets is crucial to promoting fairness and reducing bias in artificial intelligence systems.

Comparison Table

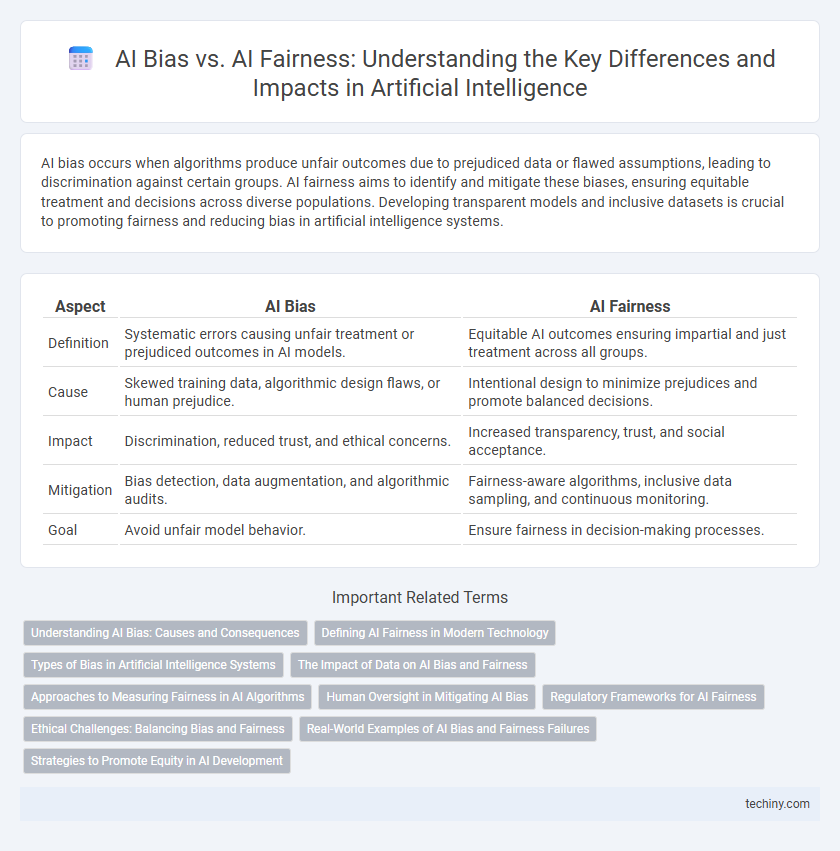

| Aspect | AI Bias | AI Fairness |
|---|---|---|
| Definition | Systematic errors causing unfair treatment or prejudiced outcomes in AI models. | Equitable AI outcomes ensuring impartial and just treatment across all groups. |
| Source | Skewed training data, algorithmic design flaws, or human prejudice. | Intentional design to minimize prejudice and promote balanced decisions. |
| Impact | Discrimination, reduced trust, and ethical concerns. | Greater transparency, trust, and social acceptance. |
| Key techniques | Bias detection, data augmentation, and algorithmic audits. | Fairness-aware algorithms, inclusive data sampling, and continuous monitoring. |
| Goal | Detect and eliminate unfair model behavior. | Ensure fairness in decision-making processes. |

Understanding AI Bias: Causes and Consequences

AI bias originates from training data that reflects historical inequalities, flawed algorithmic design choices, and limited diversity in development teams. These biases can lead to unfair treatment of individuals or groups, perpetuating social stereotypes and discrimination. Understanding where bias comes from is crucial for building fair AI systems that promote equity rather than reinforce harmful prejudices.

Defining AI Fairness in Modern Technology

AI fairness in modern technology refers to the design and deployment of algorithms that avoid discrimination against any individual or group based on race, gender, age, or other protected attributes. Ensuring AI fairness requires continuous evaluation of training data, model transparency, and the implementation of bias mitigation techniques to promote equitable outcomes. This concept is essential to building trust and accountability in AI systems across diverse applications such as hiring, lending, and criminal justice.

Types of Bias in Artificial Intelligence Systems

Bias in artificial intelligence systems takes several forms. Data bias arises when training datasets are unrepresentative or skewed; algorithmic bias stems from flawed model design; and societal bias reflects prejudiced human decisions that become embedded in AI outputs. Measurement bias occurs when the features, labels, or performance metrics used are poor proxies that fail to capture outcomes accurately across diverse populations, while interaction bias arises when real-time user interactions shape AI behavior. Addressing these biases is crucial for achieving AI fairness, ensuring equitable treatment across demographic groups, and minimizing harm.
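
A single aggregate metric can hide exactly the kind of group-level gap described above. The sketch below, using hypothetical toy data and a helper name (`accuracy_by_group`) chosen for illustration, reports accuracy overall and per group so that a disparity the aggregate conceals becomes visible.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return overall accuracy and accuracy computed separately for each group."""
    if not (len(y_true) == len(y_pred) == len(groups)) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    overall = sum(correct.values()) / len(y_true)
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group


# Hypothetical toy data: group "A" dominates the evaluation set, group "B" is small.
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 1, 0, 1]
groups = ["A"] * 8 + ["B"] * 2

overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(f"overall={overall:.2f}", per_group)  # overall=0.80 {'A': 1.0, 'B': 0.0}
```

An 80% overall accuracy looks acceptable, yet the model is wrong on every example from the under-represented group.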

The Impact of Data on AI Bias and Fairness

Data has a profound effect on AI bias and fairness: biased or unrepresentative datasets can lead machine learning models to produce discriminatory outcomes. Ensuring diversity, accuracy, and inclusiveness in training data is crucial to mitigating bias and promoting fairness in AI decision-making. Data governance frameworks and continuous monitoring are essential to identify and correct biases that arise during data collection, preprocessing, and model training.
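
One simple data check is to compare each group's share of the training set with its share of a reference population. The sketch below assumes hypothetical group labels, assumed reference shares, and an illustrative tolerance of 10 percentage points; none of these values are standards.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Return {group: share_in_dataset - reference_share} for each reference group.

    Negative values mean the group is under-represented relative to the reference.
    Groups present in the data but absent from reference_shares are ignored.
    """
    if not sample_groups:
        raise ValueError("sample_groups must be non-empty")
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {g: counts.get(g, 0) / n - ref for g, ref in reference_shares.items()}


# Hypothetical group labels for 100 training examples and assumed population shares.
train_groups = ["A"] * 70 + ["B"] * 25 + ["C"] * 5
reference = {"A": 0.5, "B": 0.3, "C": 0.2}

gaps = representation_gaps(train_groups, reference)
TOLERANCE = 0.10  # illustrative threshold, not a standard
flagged = [g for g, gap in gaps.items() if gap < -TOLERANCE]
print(flagged)  # ['C']
```

In a real pipeline the reference shares would come from census data or the population the model is meant to serve, and the check would run whenever the dataset changes.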

Approaches to Measuring Fairness in AI Algorithms

Measuring fairness in AI algorithms relies on quantitative metrics. Demographic parity and disparate impact compare the rate of positive outcomes across groups defined by sensitive attributes such as race or gender, as a difference and as a ratio respectively. Equal opportunity instead compares true positive rates, asking whether qualified individuals in each group are correctly identified at similar rates. These statistical approaches analyze outcome distributions across groups to identify bias and check for equitable treatment. More advanced techniques include explainability methods and fairness-aware machine learning models designed to minimize bias while maintaining predictive accuracy.
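
The three metrics named above can be computed directly from binary labels and predictions. The sketch below is a minimal, dependency-free illustration with hypothetical data; the function and parameter names (`fairness_metrics`, `reference_group`, `comparison_group`) are chosen for this example and do not come from any particular library.

```python
def _rate(values):
    """Mean of a list of 0/1 values, or NaN when the list is empty."""
    return sum(values) / len(values) if values else float("nan")


def fairness_metrics(y_true, y_pred, groups, reference_group, comparison_group):
    """Compare two groups on three common group-fairness metrics.

    y_true and y_pred are sequences of 0/1 labels and predictions; groups holds
    the sensitive-attribute value for each example.
    """
    def selection_rate(group):
        # Share of the group's members that receive a positive prediction.
        return _rate([p for p, g in zip(y_pred, groups) if g == group])

    def true_positive_rate(group):
        # Share of the group's truly positive members that are predicted positive.
        return _rate([p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1])

    sr_ref, sr_cmp = selection_rate(reference_group), selection_rate(comparison_group)
    return {
        # Demographic parity difference: 0 means equal selection rates.
        "demographic_parity_diff": sr_cmp - sr_ref,
        # Disparate impact ratio: 1 means equal selection rates.
        "disparate_impact_ratio": sr_cmp / sr_ref if sr_ref else float("nan"),
        # Equal opportunity difference: 0 means equal true positive rates.
        "equal_opportunity_diff": true_positive_rate(comparison_group)
        - true_positive_rate(reference_group),
    }


# Hypothetical labels and predictions for two groups of four people each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4

metrics = fairness_metrics(y_true, y_pred, groups, reference_group="A", comparison_group="B")
print(metrics)
```

Here group B is selected at one third of group A's rate (a disparate impact ratio of about 0.33), and only half of B's qualified members are identified versus all of A's. A ratio below 0.8 is often flagged in practice, following the "four-fifths rule" from US employment-selection guidelines.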

Human Oversight in Mitigating AI Bias

Human oversight plays a critical role in mitigating AI bias by continuously monitoring algorithms and outcomes to identify and correct discriminatory patterns. Incorporating diverse human perspectives during the data annotation, model training, and evaluation phases helps ensure AI fairness by addressing systemic biases that automated processes alone may overlook. Effective oversight combines technical audits with ethical reviews to create transparent AI systems aligned with societal values and equitable treatment for all users.
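
Continuous monitoring with a human in the loop can be sketched as a check that runs on each batch of production decisions and escalates suspicious batches to a reviewer rather than acting on them automatically. The `FairnessMonitor` class and its 0.1 tolerance below are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class FairnessMonitor:
    """Hypothetical monitor that routes suspicious decision batches to human reviewers.

    The default tolerance of 0.1 on the selection-rate gap is an illustrative
    assumption, not a regulatory or industry standard.
    """
    tolerance: float = 0.1
    review_queue: list = field(default_factory=list)

    def check_batch(self, batch_id, decisions, groups):
        """Compute the largest gap in positive-decision rates between groups in a batch."""
        totals, positives = {}, {}
        for decision, group in zip(decisions, groups):
            totals[group] = totals.get(group, 0) + 1
            positives[group] = positives.get(group, 0) + decision
        if not totals:
            return 0.0
        rates = {g: positives[g] / totals[g] for g in totals}
        gap = max(rates.values()) - min(rates.values())
        if gap > self.tolerance:
            # Escalate to a person rather than adjusting the model automatically.
            self.review_queue.append({"batch": batch_id, "rates": rates, "gap": gap})
        return gap


monitor = FairnessMonitor()
groups = ["A"] * 4 + ["B"] * 4
monitor.check_batch("week-1", [1, 0, 1, 0, 1, 0, 1, 0], groups)  # equal rates, not flagged
monitor.check_batch("week-2", [1, 1, 1, 0, 1, 0, 0, 0], groups)  # gap of 0.5, flagged
print([item["batch"] for item in monitor.review_queue])  # ['week-2']
```

Keeping the decision to intervene with a reviewer reflects the point above: the automated check only surfaces candidates, and people judge whether a gap reflects discrimination.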

Regulatory Frameworks for AI Fairness

Regulatory frameworks for AI fairness establish clear guidelines and standards to mitigate AI bias and promote equitable outcomes across diverse populations. These frameworks incorporate principles such as transparency, accountability, and inclusivity, ensuring that AI systems are audited and designed to prevent discriminatory behavior. Enforcement mechanisms include compliance checks, impact assessments, and penalties, which encourage the development of responsible AI technologies aligned with ethical norms and human rights.

Ethical Challenges: Balancing Bias and Fairness

AI bias stems from prejudiced data or flawed algorithms that lead to unfair treatment of individuals or groups, raising significant ethical challenges in technology deployment. Ensuring AI fairness demands rigorous evaluation methods and transparency to mitigate discriminatory outcomes and promote equitable decision-making. Balancing bias and fairness in AI systems requires ongoing vigilance, ethical guidelines, and diverse data representation to uphold social justice and trust.

Real-World Examples of AI Bias and Fairness Failures

AI bias manifests in real-world examples such as facial recognition systems misidentifying minority groups, leading to wrongful arrests and discrimination. Fairness failures are evident in hiring algorithms that disproportionately disadvantage women or marginalized communities by perpetuating historical inequalities. These issues highlight the critical need for transparency, diverse training data, and rigorous testing to mitigate biases in AI applications.

Strategies to Promote Equity in AI Development

Mitigating AI bias requires diverse, representative training datasets, regular algorithmic audits, and multidisciplinary teams that can identify and address potential discrimination. Promoting AI fairness involves transparency in model development, clear ethical guidelines, and bias detection tools that help ensure equitable outcomes. Integrating stakeholder feedback and prioritizing inclusivity throughout the AI lifecycle further supports the development of fair artificial intelligence systems.
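
As one concrete example of a data-level mitigation, the sketch below implements reweighing, a preprocessing technique described by Kamiran and Calders that is not itself named in the text. Each training example receives the weight P(group) x P(label) / P(group, label), so that group membership and label look statistically independent to the learner. The data and helper names are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights that make group membership and label independent.

    weight(g, y) = P(g) * P(y) / P(g, y), with probabilities estimated from counts,
    which simplifies to count(g) * count(y) / (n * count(g, y)).
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Weighted share of positive labels within one group."""
    pairs = [(w, y) for w, g, y in zip(weights, groups, labels) if g == group]
    return sum(w * y for w, y in pairs) / sum(w for w, _ in pairs)


# Hypothetical training data in which group "B" rarely has the positive label.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for g in ("A", "B"):
    print(g, weighted_positive_rate(weights, groups, labels, g))
```

Before reweighing, 75% of group A's examples are positive versus 25% of group B's; after weighting, both groups have a positive rate of 0.5, up to floating-point rounding. Many scikit-learn estimators accept such per-example weights through the `sample_weight` argument of `fit`.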

AI Bias vs AI Fairness Infographic

techiny.com