Depth sensing uses cameras and infrared sensors to build 3D maps by measuring the distance between the sensor and objects, which lets augmented reality (AR) applications detect objects and surfaces. Lidar scanning uses laser pulses to measure distance and typically delivers higher depth accuracy over longer ranges, which suits larger or more complex scenes. Depth sensing works best for close-range interaction and real-time adjustment, while lidar scanning offers greater precision and range, making it well suited to outdoor and large-scale AR applications.

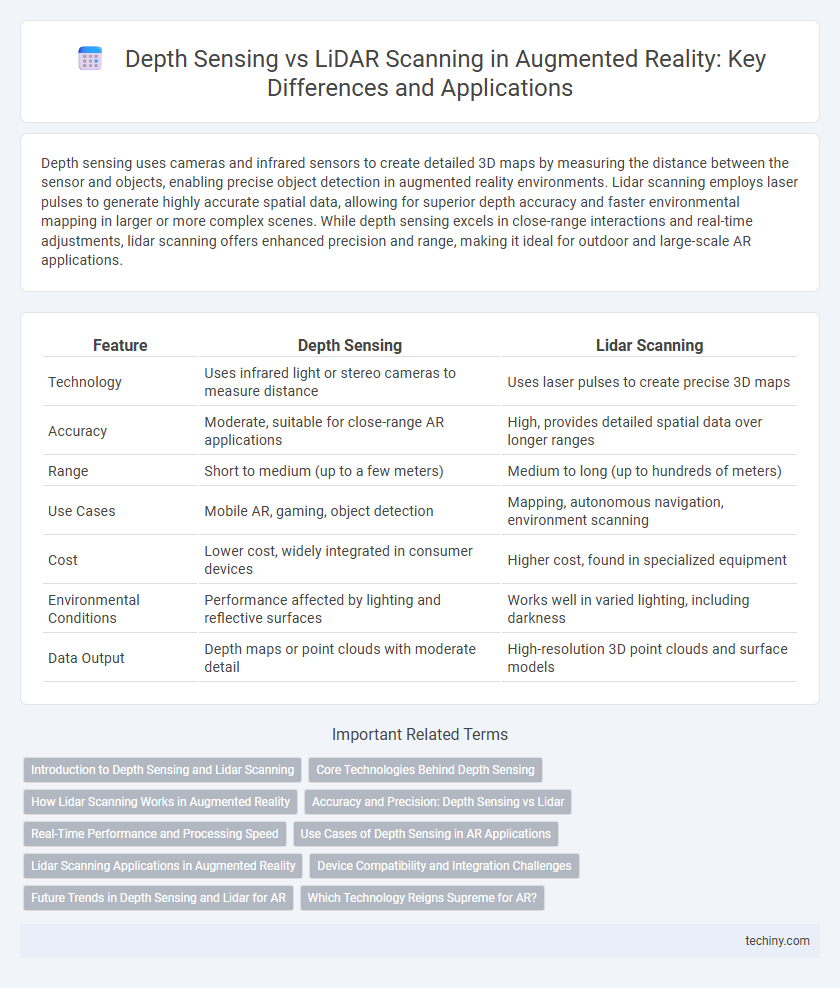

Table of Comparison

| Feature | Depth Sensing | Lidar Scanning |
|---|---|---|
| Technology | Uses infrared light or stereo cameras to measure distance | Uses laser pulses to create precise 3D maps |
| Accuracy | Moderate, suitable for close-range AR applications | High, provides detailed spatial data over longer ranges |
| Range | Short to medium (up to a few meters) | Medium to long (up to hundreds of meters) |
| Use Cases | Mobile AR, gaming, object detection | Mapping, autonomous navigation, environment scanning |
| Cost | Lower cost, widely integrated in consumer devices | Higher cost, found in specialized equipment |
| Environmental Conditions | Performance affected by lighting and reflective surfaces | Works well in varied lighting, including darkness |
| Data Output | Depth maps or point clouds with moderate detail | High-resolution 3D point clouds and surface models |

Introduction to Depth Sensing and Lidar Scanning

Depth sensing technology captures the distance between the sensor and objects in the environment using infrared light or stereo cameras, enabling accurate 3D mapping in augmented reality applications. Lidar scanning employs laser pulses to measure precise distances by timing the reflection of light off surfaces, producing highly detailed spatial data for real-time AR experiences. Both methods enhance environmental understanding, but Lidar generally offers superior range and resolution, crucial for complex AR scenarios.

Core Technologies Behind Depth Sensing

Depth sensing in augmented reality relies primarily on structured light, time-of-flight (ToF) sensors, and stereoscopic vision to capture spatial information. Structured light projects a known infrared pattern and infers depth from how the pattern deforms on surfaces, ToF sensors measure how long emitted light takes to return, and stereoscopic vision triangulates depth from the offset between two camera views. Unlike LiDAR scanning, which uses laser pulses for distance measurement, these depth sensors prioritize real-time data acquisition in compact, low-power packages suited to AR devices.
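Of the three methods, stereoscopic vision is the simplest to illustrate. The sketch below assumes an idealized, rectified stereo pair, where depth follows from the standard relation Z = f · B / d (focal length in pixels, baseline in meters, disparity in pixels). The function name and the example values are hypothetical and not taken from any particular AR SDK.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters of a point seen by a rectified stereo pair.

    focal_px     -- focal length expressed in pixels
    baseline_m   -- distance between the two camera centers, in meters
    disparity_px -- horizontal pixel offset of the point between the two views
    """
    if disparity_px <= 0:
        # Zero disparity means the point is at infinity; negative is invalid.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical camera: 700 px focal length, 6 cm baseline.
    for disparity in (84.0, 42.0, 21.0):
        depth = depth_from_disparity(700.0, 0.06, disparity)
        print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

Halving the disparity doubles the estimated depth, which is why distant objects, whose disparity is only a few pixels, are harder to measure reliably.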

How Lidar Scanning Works in Augmented Reality

Lidar scanning in augmented reality works by emitting rapid laser pulses to measure distances between the sensor and surrounding objects, creating precise 3D maps of the environment. This technology enhances AR experiences by providing real-time spatial awareness and accurate depth information, crucial for object placement and interaction. Integrating Lidar with AR devices improves scene understanding, enabling more immersive and responsive applications in navigation, gaming, and industrial design.
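The pulse-timing principle can be sketched in a few lines. This is a minimal illustration that assumes a single clean echo and light traveling at its vacuum speed; the function names are hypothetical, and real sensors add calibration and noise filtering that are not shown here.

```python
# Speed of light in vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to a surface given the pulse's round-trip time in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the surface and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def range_resolution(timing_resolution_s: float) -> float:
    """Smallest range step distinguishable for a given timing resolution."""
    return SPEED_OF_LIGHT_M_PER_S * timing_resolution_s / 2.0


if __name__ == "__main__":
    # A hypothetical echo arriving 20 nanoseconds after emission.
    print(f"{range_from_round_trip(20e-9):.3f} m")  # prints "2.998 m"
```

The same relation shows why timing matters so much: resolving range steps of about 15 cm already requires timing on the order of one nanosecond.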

Accuracy and Precision: Depth Sensing vs Lidar

Depth sensing technology typically uses structured light or time-of-flight techniques to measure distances, offering moderate accuracy suitable for indoor augmented reality applications. Lidar scanning employs laser pulses to create highly accurate and precise 3D maps with finer spatial resolution, making it ideal for outdoor and large-scale AR environments. The enhanced precision of lidar enables better object detection and environmental mapping compared to standard depth sensors.
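One way to see why accuracy differs with range is to compare simplified first-order error models. The sketch below assumes that a triangulation-based sensor (structured light or stereo) has depth error growing with the square of distance, ΔZ ≈ Z² · Δd / (f · B), while a pulse-timing sensor has range error set by its timing error, c · Δt / 2. The parameter values are hypothetical, and effects such as signal loss at distance are ignored.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def triangulation_depth_error(depth_m: float, focal_px: float,
                              baseline_m: float, disparity_error_px: float) -> float:
    """First-order depth error of a triangulation sensor at a given depth."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


def time_of_flight_range_error(timing_error_s: float) -> float:
    """First-order range error of a pulse-timing sensor; independent of depth."""
    return SPEED_OF_LIGHT_M_PER_S * timing_error_s / 2.0


if __name__ == "__main__":
    # Hypothetical sensors: 700 px focal length, 6 cm baseline, 0.25 px
    # matching error, versus 100 ps of timing error.
    tof_error = time_of_flight_range_error(100e-12)
    for depth in (1.0, 2.0, 5.0, 10.0):
        tri_error = triangulation_depth_error(depth, 700.0, 0.06, 0.25)
        print(f"{depth:4.1f} m: triangulation ±{tri_error * 100:.1f} cm, "
              f"time of flight ±{tof_error * 100:.1f} cm")
```

Under these assumptions the triangulation error is small at arm's length but grows quickly with distance, which is consistent with depth sensors being favored indoors and lidar at longer range.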

Real-Time Performance and Processing Speed

Depth sensing methods, such as structured light or time-of-flight sensors, provide rapid real-time performance crucial for responsive augmented reality (AR) applications by capturing depth data at high frame rates. LiDAR scanning offers high-precision spatial mapping but typically involves heavier processing loads, resulting in slower update rates compared to depth sensors optimized for speed. For AR experiences requiring immediate interaction and fluid user engagement, depth sensing technology currently delivers superior processing speed and real-time responsiveness.

Use Cases of Depth Sensing in AR Applications

Depth sensing in augmented reality enables precise environmental mapping for applications like interactive gaming, virtual try-ons, and spatial measurement. Unlike LiDAR scanning, depth sensors offer real-time data capture with lower power consumption, making them ideal for mobile AR devices. This technology enhances user experiences by accurately detecting object distances and surface geometry within a device's immediate surroundings.
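Spatial measurement is a good example of how a depth map turns into real-world numbers. The sketch below assumes a pinhole camera model with known intrinsics and back-projects two pixels into 3D before measuring the distance between them. The `Intrinsics` type, the function names, and the example values are hypothetical and are not part of any specific AR framework.

```python
import math
from typing import NamedTuple, Tuple


class Intrinsics(NamedTuple):
    """Pinhole camera intrinsics, all in pixels."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def back_project(u: float, v: float, depth_m: float,
                 k: Intrinsics) -> Tuple[float, float, float]:
    """Convert pixel (u, v) with a depth reading into a 3D point in meters."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def measure_between_pixels(pixel_a: Tuple[float, float], depth_a_m: float,
                           pixel_b: Tuple[float, float], depth_b_m: float,
                           k: Intrinsics) -> float:
    """Straight-line distance in meters between two pixels with known depth."""
    point_a = back_project(pixel_a[0], pixel_a[1], depth_a_m, k)
    point_b = back_project(pixel_b[0], pixel_b[1], depth_b_m, k)
    return math.dist(point_a, point_b)


if __name__ == "__main__":
    # Hypothetical 640x480 depth camera.
    k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
    length = measure_between_pixels((320, 240), 1.0, (420, 240), 1.0, k)
    print(f"{length:.3f} m")  # prints "0.200 m"
```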

Lidar Scanning Applications in Augmented Reality

Lidar scanning enhances augmented reality by providing precise 3D spatial mapping and depth measurement, enabling accurate object placement and improved environmental understanding. Its applications include indoor navigation, real-time obstacle detection, and immersive AR gaming, where detailed environmental data enhances user interaction. Lidar technology's high-resolution depth sensing is essential for creating seamless and realistic AR experiences in complex environments.
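Obstacle detection can be illustrated with a simple pass over a point cloud. The sketch below assumes points are given in the sensor frame with x to the right, y down, and z forward, and it reports the nearest point inside a box-shaped corridor ahead of the device. The function name, the axis convention, and the corridor dimensions are assumptions made for illustration only.

```python
from typing import Iterable, Optional, Tuple

Point = Tuple[float, float, float]  # (x right, y down, z forward), in meters


def nearest_obstacle_distance(points: Iterable[Point],
                              half_width_m: float = 0.4,
                              half_height_m: float = 0.9,
                              max_range_m: float = 5.0) -> Optional[float]:
    """Forward distance to the closest point inside the corridor, or None."""
    nearest: Optional[float] = None
    for x, y, z in points:
        # Ignore points behind the sensor or beyond the range of interest.
        if z <= 0 or z > max_range_m:
            continue
        # Ignore points outside the corridor's cross-section.
        if abs(x) > half_width_m or abs(y) > half_height_m:
            continue
        if nearest is None or z < nearest:
            nearest = z
    return nearest


if __name__ == "__main__":
    cloud = [
        (0.1, 0.0, 3.0),   # inside the corridor, 3 m ahead
        (2.0, 0.0, 1.0),   # close, but well off to the side
        (0.0, 0.2, 1.5),   # inside the corridor, 1.5 m ahead
        (0.0, 0.0, -1.0),  # behind the sensor
    ]
    print(nearest_obstacle_distance(cloud))  # prints "1.5"
```

A production system would typically use a spatial index or a voxel grid instead of a linear scan, but the filtering logic stays the same.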

Device Compatibility and Integration Challenges

Depth sensing technology is widely compatible with smartphones and tablets because it relies on infrared or structured light sensors already embedded in consumer-grade devices. LiDAR scanning, while offering higher precision and longer range, is primarily integrated into premium devices such as Apple's iPhone Pro and iPad Pro models, which limits its adoption. Integration challenges for LiDAR include the need for specialized hardware and software optimization, whereas depth sensing benefits from broader OS support and easier deployment across diverse mobile platforms.

Future Trends in Depth Sensing and Lidar for AR

Future trends in depth sensing and LiDAR scanning for augmented reality (AR) emphasize enhanced spatial understanding and improved environmental mapping accuracy. Emerging technologies will integrate machine learning algorithms to refine depth data interpretation, enabling more immersive and interactive AR experiences. Miniaturization and cost reduction of LiDAR sensors are also anticipated to drive widespread adoption in consumer AR devices, expanding real-world applications.

Which Technology Reigns Supreme for AR?

Depth sensing excels in close-range object detection and real-time interaction, making it ideal for augmented reality applications requiring precise spatial mapping and immediate environmental feedback. LiDAR scanning offers superior long-range accuracy and detailed 3D mapping, enhancing AR experiences in expansive or outdoor environments by capturing intricate surface geometry. For AR technology, depth sensing dominates indoor and user-centric scenarios, while LiDAR reigns supreme in large-scale or outdoor spatial analysis.

Depth Sensing vs Lidar Scanning Infographic

techiny.com
