Gaze input in augmented reality enables hands-free interaction by tracking where the user looks, offering intuitive navigation without physical contact. Tap input requires direct physical touch, providing precise control but limiting mobility and ease of use in complex environments. Combining gaze and tap inputs can enhance user experience by balancing natural interaction with accuracy and reliability.

Table of Comparison

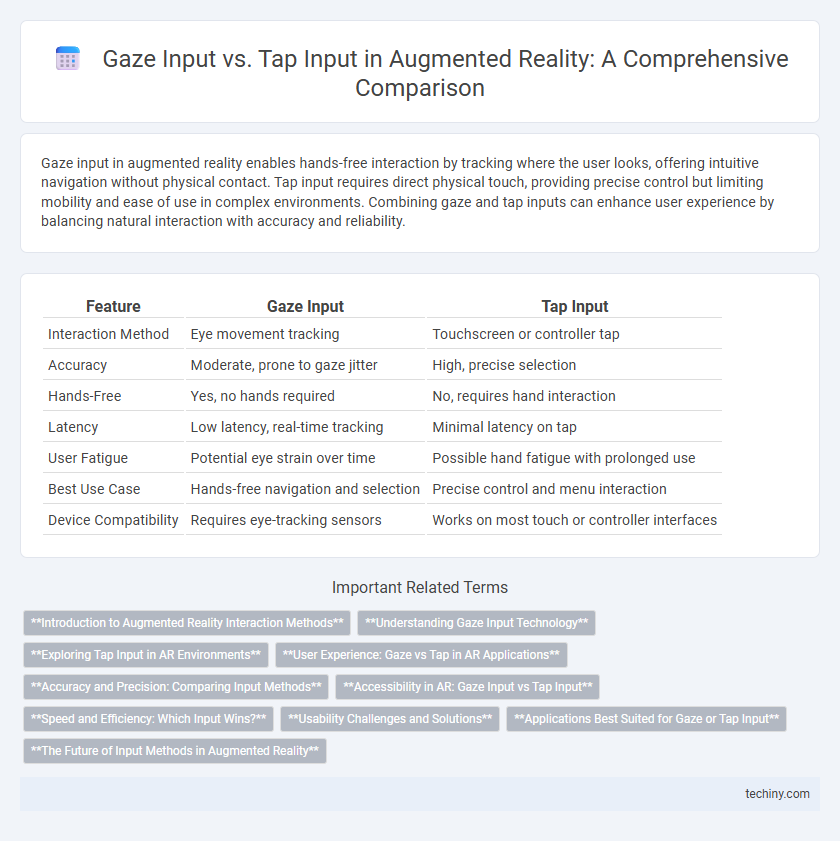

| Feature | Gaze Input | Tap Input |
|---|---|---|
| Interaction Method | Eye movement tracking | Touchscreen or controller tap |
| Accuracy | Moderate, prone to gaze jitter | High, precise selection |
| Hands-Free | Yes, no hands required | No, requires hand interaction |
| Latency | Low latency, real-time tracking | Minimal latency on tap |
| User Fatigue | Potential eye strain over time | Possible hand fatigue with prolonged use |
| Best Use Case | Hands-free navigation and selection | Precise control and menu interaction |
| Device Compatibility | Requires eye-tracking sensors | Works on most touch or controller interfaces |

Introduction to Augmented Reality Interaction Methods

Gaze input and tap input represent two primary interaction methods in augmented reality (AR) environments, each leveraging distinct user engagement techniques. Gaze input utilizes eye-tracking technology to enable hands-free navigation and selection, enhancing accessibility and immersive experiences in AR applications. Tap input, often implemented on handheld or wearable devices, relies on touch gestures for precise control, making it intuitive for tasks requiring direct manipulation within AR interfaces.

Understanding Gaze Input Technology

Gaze input technology uses eye-tracking sensors to estimate where the user is looking and maps that gaze direction onto the augmented reality (AR) interface. By continuously monitoring gaze direction, it enables hands-free object selection and navigation within AR environments. Because the eyes usually reach a target before the hand does, gaze can shorten pointing time and reduce physical effort compared with tap input, which makes it a good fit for immersive and accessible AR applications.
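As a rough illustration of how a gaze direction can be turned into an object selection, the sketch below picks the target whose direction from the eye lies closest to the gaze ray, within an angular tolerance. The function names, the `targets` mapping, and the 2-degree default are assumptions made for this example; real eye-tracking SDKs expose their own ray and hit-testing APIs.

```python
import math
from typing import Mapping, Optional, Tuple

Vec3 = Tuple[float, float, float]


def angle_between_deg(a: Vec3, b: Vec3) -> float:
    """Angle in degrees between two non-zero 3D vectors."""
    len_a = math.sqrt(sum(c * c for c in a))
    len_b = math.sqrt(sum(c * c for c in b))
    dot = sum(x * y for x, y in zip(a, b)) / (len_a * len_b)
    # Clamp to guard against rounding slightly outside [-1, 1].
    dot = max(-1.0, min(1.0, dot))
    return math.degrees(math.acos(dot))


def pick_gaze_target(
    eye_pos: Vec3,
    gaze_dir: Vec3,
    targets: Mapping[str, Vec3],
    max_angle_deg: float = 2.0,  # hypothetical tolerance, tune per device
) -> Optional[str]:
    """Return the name of the target closest to the gaze ray, or None."""
    best_name: Optional[str] = None
    best_angle = max_angle_deg
    for name, pos in targets.items():
        to_target = (pos[0] - eye_pos[0], pos[1] - eye_pos[1], pos[2] - eye_pos[2])
        if to_target == (0.0, 0.0, 0.0):
            continue  # target coincides with the eye; direction is undefined
        angle = angle_between_deg(gaze_dir, to_target)
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name
```

Using an angular tolerance rather than an exact ray hit is one simple way to make small or distant targets easier to acquire; the right tolerance depends on the tracker's accuracy.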

Exploring Tap Input in AR Environments

Tap input in AR environments offers precise interaction by allowing users to select and manipulate virtual objects with direct touch, enhancing control and intuitiveness. This method reduces reliance on gaze tracking accuracy, making it effective in dynamic settings where eye tracking may struggle due to lighting or movement. Tap input supports complex commands and multi-touch gestures, expanding the range of user interactions in augmented reality applications.

User Experience: Gaze vs Tap in AR Applications

Gaze input in AR applications enables hands-free interaction: users navigate interfaces through natural eye movements, which reduces physical effort and can increase engagement. Tap input offers precise control and immediate feedback, which is essential for tasks requiring accuracy, but it may interrupt immersion because it requires a physical gesture. Combining gaze tracking with tap input, for example looking at a target and tapping to confirm, can create intuitive, efficient user experiences that leverage the strengths of both interaction methods.
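One common way to combine the two methods is to let gaze choose the target and let a tap confirm it. The sketch below is a minimal, hypothetical version of that pattern; the class name, method names, and the 0.2-second grace window are assumptions for illustration, not part of any particular AR SDK. The grace window covers the case where the eyes have already moved on by the time the tap arrives.

```python
from typing import Optional


class GazeTapSelector:
    """Gaze picks the target; a tap confirms it (illustrative sketch)."""

    def __init__(self, grace_seconds: float = 0.2) -> None:
        # How long a target stays confirmable after gaze last rested on it.
        self.grace_seconds = grace_seconds
        self._last_target: Optional[str] = None
        self._last_seen = 0.0

    def on_gaze(self, target: Optional[str], now: float) -> None:
        """Call every frame with the currently gazed target (or None)."""
        if target is not None:
            self._last_target = target
            self._last_seen = now

    def on_tap(self, now: float) -> Optional[str]:
        """Return the confirmed target, or None if nothing was gazed recently."""
        if self._last_target is None:
            return None
        if now - self._last_seen <= self.grace_seconds:
            return self._last_target
        return None


selector = GazeTapSelector(grace_seconds=0.2)
selector.on_gaze("play_button", now=1.0)
print(selector.on_tap(now=1.05))  # tap shortly after looking at the button
```

Because nothing is selected until the tap arrives, simply looking around the scene never triggers an action.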

Accuracy and Precision: Comparing Input Methods

Gaze input lets users point at small or distant virtual elements without reaching for them, but eye-tracker noise and natural gaze jitter limit how finely it can resolve targets. Tap input relies on direct touch, providing tactile feedback and often higher accuracy in densely populated user interfaces where deliberate selections are required. In general, gaze input tends to be faster for pointing but less accurate in cluttered layouts, whereas tap input keeps a more consistent level of precision as interface complexity grows.
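Gaze jitter is often reduced by smoothing the raw gaze samples before hit-testing. The sketch below uses a simple exponential moving average as one possible filter; the class name and the `alpha` default are assumptions for this example, and production systems may use more elaborate filters.

```python
from typing import Optional, Tuple


class GazeSmoother:
    """Exponential moving average over 2D gaze points (illustrative sketch)."""

    def __init__(self, alpha: float = 0.3) -> None:
        # alpha near 1.0 follows the raw signal closely (less smoothing);
        # alpha near 0.0 smooths heavily but makes the cursor lag.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._state: Optional[Tuple[float, float]] = None

    def update(self, x: float, y: float) -> Tuple[float, float]:
        """Feed one raw gaze sample and return the smoothed position."""
        if self._state is None:
            self._state = (x, y)  # first sample initializes the filter
        else:
            sx, sy = self._state
            self._state = (
                sx + self.alpha * (x - sx),
                sy + self.alpha * (y - sy),
            )
        return self._state
```

The trade-off is between stability and responsiveness: heavier smoothing steadies the pointer on small targets but delays it when the user looks somewhere new.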

Accessibility in AR: Gaze Input vs Tap Input

Gaze input in augmented reality enhances accessibility by enabling hands-free interaction, particularly benefiting users with limited motor skills or disabilities. Unlike tap input, which requires precise finger coordination, gaze tracking allows intuitive navigation through eye movement, reducing physical strain and increasing usability. Integrating gaze input with adaptive feedback systems improves overall accessibility and user experience in AR environments.

Speed and Efficiency: Which Input Wins?

Gaze input offers faster interaction by reducing the need for physical movement: users select items simply by looking, which can noticeably speed up pointing in augmented reality environments. Tap input, while more precise, adds the time needed to move a hand or finger to the target, which can slow task completion. For rapid, hands-free operations, gaze input is generally the faster choice, provided the application can tolerate its lower precision.

Usability Challenges and Solutions

Gaze input in augmented reality presents usability challenges such as the "Midas touch" problem, where the system treats ordinary looking as a command, so merely resting the eyes on an element can trigger an unintended selection. It also offers limited precision compared to tap input, which is more deliberate but requires physical reach and dexterity. Solutions include dwell-time thresholds, combinations of gaze with a confirming gesture, and adaptive calibration techniques to improve accuracy and reduce fatigue. Tap input can be optimized with ergonomic controllers and haptic feedback to improve user comfort and interaction reliability in diverse AR environments.
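A dwell-time threshold can be sketched as a small state machine: a selection fires only after gaze has rested on the same target for a minimum duration, and only once per continuous look. The class name, the `update` signature, and the 0.8-second default below are assumptions chosen for illustration.

```python
from typing import Optional


class DwellSelector:
    """Fire a selection after gaze rests on one target long enough (sketch)."""

    def __init__(self, dwell_seconds: float = 0.8) -> None:
        self.dwell_seconds = dwell_seconds
        self._target: Optional[str] = None
        self._since = 0.0
        self._fired = False

    def update(self, target: Optional[str], now: float) -> Optional[str]:
        """Call every frame; returns the target name when a selection fires."""
        if target != self._target:
            # Gaze moved to a new target (or away): restart the dwell timer.
            self._target = target
            self._since = now
            self._fired = False
            return None
        if (
            target is not None
            and not self._fired
            and now - self._since >= self.dwell_seconds
        ):
            self._fired = True  # fire once per continuous look
            return target
        return None
```

A longer dwell time reduces accidental selections at the cost of slower interaction, so the threshold is typically tuned per application.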

Applications Best Suited for Gaze or Tap Input

Applications involving hands-free control, such as medical procedures or industrial maintenance, are best suited for gaze input due to its ability to allow users to navigate interfaces without physical contact. Tap input excels in environments requiring precise selections and interactions, like gaming or detailed design tasks, where tactile feedback is crucial for accuracy. Mixed reality applications often combine both gaze and tap inputs to optimize user experience by leveraging the strengths of each method.

The Future of Input Methods in Augmented Reality

Gaze input is likely to remain the main route to hands-free interaction and deeper immersion in augmented reality environments as eye-tracking hardware improves. Tap input remains intuitive and reliable, particularly for quick commands or selections, but may be limited in complex AR scenarios requiring dynamic control. Emerging hybrid input systems combining gaze and tap are poised to define the future of augmented reality, offering more natural, efficient, and context-aware user experiences.

Gaze Input vs Tap Input Infographic

techiny.com