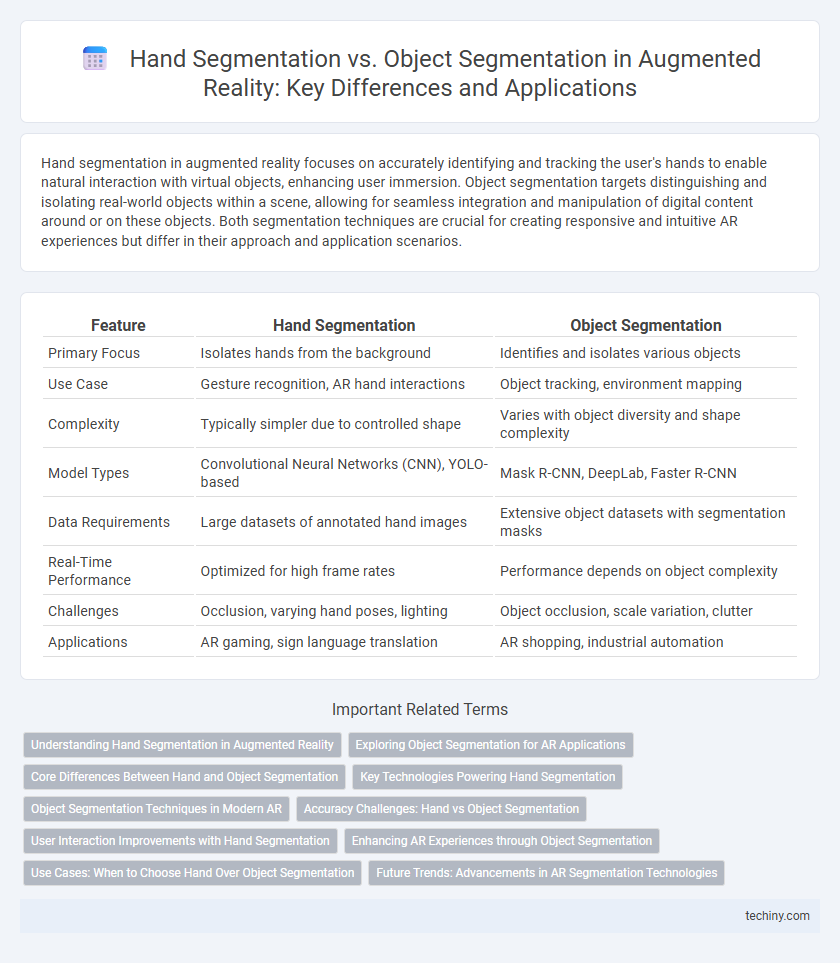

Hand segmentation in augmented reality focuses on accurately identifying and tracking the user's hands to enable natural interaction with virtual objects, enhancing user immersion. Object segmentation targets distinguishing and isolating real-world objects within a scene, allowing for seamless integration and manipulation of digital content around or on these objects. Both segmentation techniques are crucial for creating responsive and intuitive AR experiences but differ in their approach and application scenarios.

Table of Comparison

| Feature | Hand Segmentation | Object Segmentation |
|---|---|---|
| Primary Focus | Isolates hands from the background | Identifies and isolates various objects |
| Use Case | Gesture recognition, AR hand interactions | Object tracking, environment mapping |
| Complexity | Typically simpler due to controlled shape | Varies with object diversity and shape complexity |
| Model Types | Convolutional Neural Networks (CNN), YOLO-based | Mask R-CNN, DeepLab, Faster R-CNN |
| Data Requirements | Large datasets of annotated hand images | Extensive object datasets with segmentation masks |
| Real-Time Performance | Optimized for high frame rates | Performance depends on object complexity |
| Challenges | Occlusion, varying hand poses, lighting | Object occlusion, scale variation, clutter |
| Applications | AR gaming, sign language translation | AR shopping, industrial automation |

Understanding Hand Segmentation in Augmented Reality

Hand segmentation in augmented reality involves precisely isolating the user's hands from the background to enable natural interaction and gesture recognition. This process leverages deep learning models and computer vision techniques to accurately map hand shapes, movements, and positions in real-time. Unlike object segmentation, which focuses on detecting and classifying various physical items, hand segmentation requires high temporal resolution and fine detail to interpret complex hand gestures for immersive AR experiences.

Exploring Object Segmentation for AR Applications

Object segmentation in augmented reality enhances scene understanding by accurately isolating and identifying various objects within the environment, enabling more realistic and interactive AR experiences. Unlike hand segmentation, which focuses solely on detecting and tracking hand movements, object segmentation allows AR systems to interact with a wide range of physical items, improving spatial awareness and context-driven content placement. Advanced deep learning models leveraging convolutional neural networks (CNNs) and transformers enable precise object segmentation, critical for applications such as furniture visualization, immersive gaming, and industrial maintenance.

Core Differences Between Hand and Object Segmentation

Hand segmentation in augmented reality specifically isolates human hands by leveraging anatomical features such as fingers, palm contours, and skin texture, whereas object segmentation focuses on identifying and separating various non-hand items within the environment. The challenge in hand segmentation lies in managing frequent occlusions, articulation, and skin tone variability, while object segmentation deals with diverse shapes, sizes, and textures of numerous objects, requiring more generalized models. Effective hand segmentation often employs specialized neural networks trained on datasets like EgoHands or Hand-Object Interaction to capture precise hand dynamics. Broader object segmentation models, by contrast, are typically trained on multi-class datasets such as COCO, which provides per-instance masks, often on top of backbones pretrained on ImageNet.

Key Technologies Powering Hand Segmentation

Hand segmentation in augmented reality relies heavily on convolutional neural networks (CNNs) and depth-sensing technologies to accurately distinguish hand contours from complex backgrounds. Segmentation models such as U-Net (semantic segmentation) and Mask R-CNN (instance segmentation) enable fine-grained differentiation of hand parts for interactive AR applications. Integrating infrared sensors and stereo cameras adds depth information, which helps separate hands from the background more precisely than color alone.
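To make the role of depth sensing concrete, here is a minimal sketch of a depth-band filter that proposes hand candidates before any learned model runs. It assumes a depth map in metres where missing readings are `0` or `NaN`; the function name `depth_hand_mask` and the `near`/`far` defaults are illustrative choices, not values taken from any particular headset or SDK.

```python
import numpy as np

def depth_hand_mask(depth_m: np.ndarray, near: float = 0.2, far: float = 0.7) -> np.ndarray:
    """Return a boolean mask of pixels whose depth lies within arm's reach.

    Assumption: hands are the closest surfaces to a head-mounted camera, so a
    simple depth band is a plausible first-pass candidate region.
    """
    depth_m = np.asarray(depth_m, dtype=np.float32)
    # Treat zero and non-finite readings as missing depth.
    valid = np.isfinite(depth_m) & (depth_m > 0)
    return valid & (depth_m >= near) & (depth_m <= far)

# Tiny 2x2 depth map: missing reading, hand-range pixel, far wall, NaN.
depth = np.array([[0.0, 0.4], [1.5, np.nan]])
mask = depth_hand_mask(depth)
```

In practice a mask like this would only narrow the search region; a CNN would still be needed to reject other nearby objects and to refine the hand contour.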

Object Segmentation Techniques in Modern AR

Object segmentation techniques in modern augmented reality leverage deep learning models such as convolutional neural networks (CNNs) and transformers to accurately identify and isolate 3D objects within complex environments. Advanced methods use instance segmentation algorithms like Mask R-CNN and point cloud processing to enable real-time overlay and interaction with virtual content. Semantic understanding combined with depth-sensing technology enhances object boundary detection, improving AR experiences in applications ranging from industrial maintenance to gaming.
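As a rough illustration of how instance segmentation output can feed an AR overlay, the sketch below merges per-instance masks and confidence scores into a single label map. The input layout (a stack of boolean masks plus one score per instance) mirrors what Mask R-CNN-style models commonly return, but the function name `instances_to_label_map` and the `score_threshold` default are assumptions made for this example.

```python
import numpy as np

def instances_to_label_map(masks, scores, score_threshold: float = 0.5) -> np.ndarray:
    """Combine N instance masks of shape (N, H, W) into one (H, W) label map.

    Label 0 is background; instance i is written as label i + 1. Where masks
    overlap, the higher-scoring instance wins because it is painted last.
    Assumes at least one instance is supplied.
    """
    masks = np.asarray(masks, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    label_map = np.zeros(masks.shape[1:], dtype=np.int32)
    for idx in np.argsort(scores):  # ascending: best instance painted last
        if scores[idx] < score_threshold:
            continue  # drop low-confidence detections
        label_map[masks[idx]] = idx + 1
    return label_map

# Two overlapping instances plus one low-confidence detection.
masks = [
    [[1, 1], [0, 0]],
    [[0, 1], [0, 1]],
    [[0, 0], [1, 0]],
]
scores = [0.9, 0.6, 0.3]
labels = instances_to_label_map(masks, scores)
```

An AR renderer could then look up `labels` per pixel to decide which real object a virtual annotation should attach to.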

Accuracy Challenges: Hand vs Object Segmentation

Hand segmentation in augmented reality faces greater accuracy challenges than object segmentation because human hands are complex and highly articulated, which leads to frequent self-occlusion and widely varying poses. Object segmentation typically benefits from more rigid shapes and consistent textures, making it easier to achieve precise boundaries and reduce false positives. The dynamic variability and fine-grained details of hands require advanced machine learning models and higher-resolution sensor data to improve segmentation accuracy.
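Segmentation accuracy for either task is commonly reported as intersection over union (IoU) between a predicted mask and a ground-truth mask. The sketch below shows one way to compute it with NumPy; the function name `mask_iou` and the convention of returning 1.0 when both masks are empty are choices made for this example.

```python
import numpy as np

def mask_iou(pred, target) -> float:
    """Intersection over union of two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        # Both masks are empty: treat as perfect agreement (a convention).
        return 1.0
    intersection = np.logical_and(pred, target).sum()
    return float(intersection / union)

# The prediction covers two pixels, the ground truth only one of them.
iou = mask_iou([[1, 1], [0, 0]], [[1, 0], [0, 0]])
```

Thin structures such as fingers contribute few pixels, so small boundary errors lower IoU more sharply for hands than for large rigid objects.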

User Interaction Improvements with Hand Segmentation

Hand segmentation in augmented reality significantly enhances user interaction by enabling precise tracking of finger movements and gestures, leading to more intuitive and natural control. Unlike object segmentation, which focuses on identifying and isolating physical items within the environment, hand segmentation allows AR systems to interpret complex hand poses and dynamic actions in real-time. This results in improved responsiveness and seamless manipulation of digital elements, fostering immersive and user-friendly AR experiences.
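One concrete way a hand mask improves interaction is occlusion handling: virtual content is hidden wherever the user's hand is in front of it. The following is a minimal sketch under the simplifying assumption that the hand is always closer to the camera than the virtual object; the function name `composite_with_hand_occlusion` and its argument layout are hypothetical.

```python
import numpy as np

def composite_with_hand_occlusion(camera_rgb, virtual_rgb, virtual_alpha, hand_mask):
    """Alpha-blend a virtual layer over a camera frame, except on hand pixels.

    camera_rgb, virtual_rgb: (H, W, 3) uint8 images.
    virtual_alpha: (H, W) opacity of the virtual layer in [0, 1].
    hand_mask: (H, W) boolean mask, True where the hand is visible.
    Assumption: the hand always occludes the virtual content.
    """
    camera = np.asarray(camera_rgb, dtype=np.float32)
    virtual = np.asarray(virtual_rgb, dtype=np.float32)
    hand = np.asarray(hand_mask, dtype=bool)
    # Force the virtual layer to be fully transparent on hand pixels.
    alpha = np.where(hand, 0.0, np.asarray(virtual_alpha, dtype=np.float32))[..., None]
    blended = alpha * virtual + (1.0 - alpha) * camera
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)

camera = np.full((1, 2, 3), 10, dtype=np.uint8)     # dark camera frame
virtual = np.full((1, 2, 3), 200, dtype=np.uint8)   # bright virtual object
alpha = np.ones((1, 2))                             # fully opaque overlay
hand = np.array([[True, False]])                    # hand covers left pixel
frame = composite_with_hand_occlusion(camera, virtual, alpha, hand)
```

A fuller implementation would compare per-pixel depth of the hand and the virtual object instead of assuming the hand is always in front.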

Enhancing AR Experiences through Object Segmentation

Object segmentation enhances AR experiences by accurately identifying and isolating specific items within a scene, enabling precise interaction and realistic overlays. Unlike hand segmentation, which focuses solely on tracking hand movements, object segmentation allows AR applications to recognize a wide range of objects, facilitating complex tasks such as virtual object placement and environment mapping. This capability significantly improves user immersion and interaction fidelity in AR environments.

Use Cases: When to Choose Hand Over Object Segmentation

Hand segmentation excels in applications requiring fine-grained interaction detection, such as gesture control in augmented reality (AR) interfaces and virtual object manipulation. It offers precise hand movement tracking crucial for immersive AR gaming and sign language recognition systems. Object segmentation is preferable for static or known items, but hand segmentation dominates when user inputs and dynamic hand poses are central to user experience.

Future Trends: Advancements in AR Segmentation Technologies

Future trends in augmented reality emphasize enhanced hand segmentation accuracy using deep learning models with real-time processing capabilities and broader datasets for diverse hand poses and lighting conditions. Object segmentation advancements leverage multi-modal sensor fusion, integrating RGB, depth, and thermal data to improve precision in dynamic environments and occlusions. Emerging techniques focus on contextual understanding and adaptive segmentation, enabling more intuitive AR interactions and seamless integration of virtual and physical elements.
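Multi-modal fusion can take many forms. As a simple illustration, the sketch below performs late fusion: each sensor branch (for example RGB, depth, or thermal) is assumed to produce its own per-pixel foreground probability map, and the maps are combined by a weighted average. The function name `fuse_probabilities` and the example weights are assumptions, not a description of any specific AR system.

```python
import numpy as np

def fuse_probabilities(prob_maps, weights) -> np.ndarray:
    """Weighted average of M per-pixel probability maps, each of shape (H, W)."""
    probs = np.stack([np.asarray(p, dtype=np.float64) for p in prob_maps])  # (M, H, W)
    w = np.asarray(weights, dtype=np.float64)
    w = w / w.sum()  # normalize so the result stays in [0, 1]
    # Contract the modality axis: result has shape (H, W).
    return np.tensordot(w, probs, axes=1)

rgb_prob = [[0.8]]     # RGB branch is fairly confident
depth_prob = [[0.4]]   # depth branch is less sure
fused = fuse_probabilities([rgb_prob, depth_prob], weights=[3.0, 1.0])
fused_mask = fused >= 0.5  # final binary decision
```

Learned fusion inside the network is generally more expressive, but a weighted average makes the basic idea easy to inspect and tune.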

Hand segmentation vs Object segmentation Infographic

techiny.com
