Scene understanding enables augmented reality systems to interpret and interact with real-world objects by recognizing shapes, surfaces, and spatial relationships. Environment mapping captures the physical surroundings by creating detailed 3D representations to anchor virtual elements accurately. Combining scene understanding with environment mapping enhances AR experiences by improving object placement and realistic interaction within dynamic spaces.

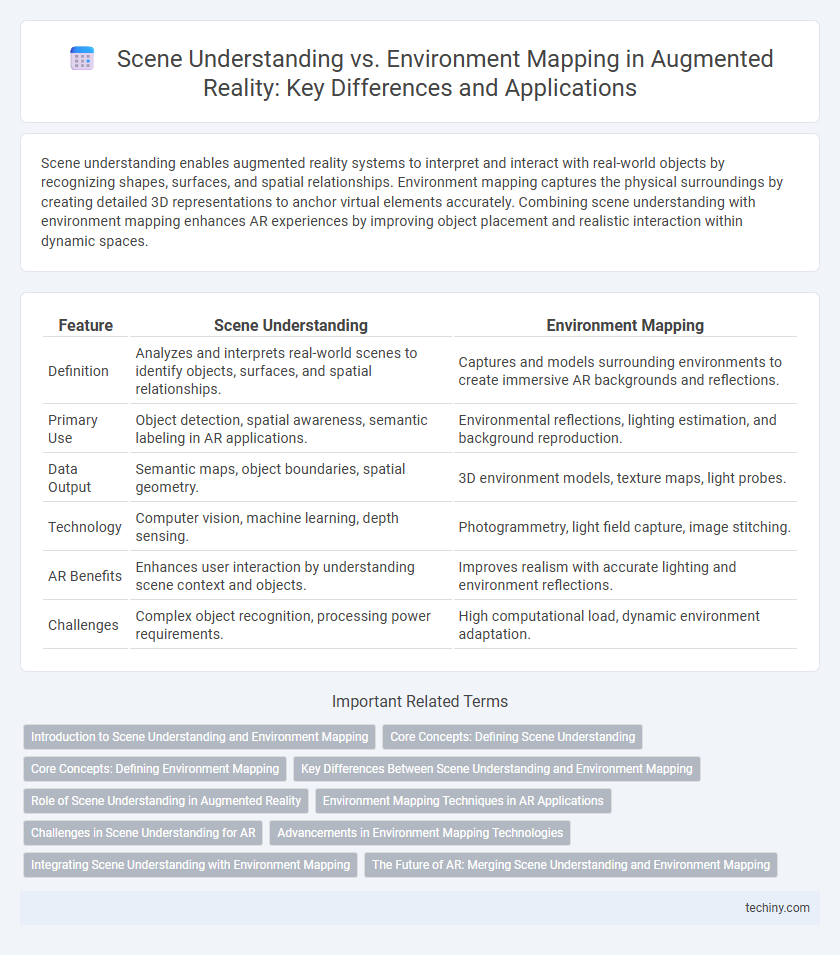

Table of Comparison

| Feature | Scene Understanding | Environment Mapping |
|---|---|---|
| Definition | Analyzes and interprets real-world scenes to identify objects, surfaces, and spatial relationships. | Captures and models surrounding environments to create immersive AR backgrounds and reflections. |
| Primary Use | Object detection, spatial awareness, semantic labeling in AR applications. | Environmental reflections, lighting estimation, and background reproduction. |
| Data Output | Semantic maps, object boundaries, spatial geometry. | 3D environment models, texture maps, light probes. |
| Technology | Computer vision, machine learning, depth sensing. | Photogrammetry, light field capture, image stitching. |
| AR Benefits | Enhances user interaction by understanding scene context and objects. | Improves realism with accurate lighting and environment reflections. |
| Challenges | Complex object recognition, processing power requirements. | High computational load, dynamic environment adaptation. |

Introduction to Scene Understanding and Environment Mapping

Scene understanding in augmented reality involves interpreting and analyzing the physical environment to identify objects, surfaces, and spatial relationships, enabling realistic and interactive digital content placement. Environment mapping captures detailed visual information of surroundings to create dynamic reflections and lighting effects on virtual objects, enhancing immersion. Both techniques are crucial for achieving accurate alignment and realistic integration of virtual elements within real-world scenes.
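To make the reflection idea concrete, here is a minimal sketch of how a renderer might look up a reflected color from a captured environment map. It assumes an equirectangular (latitude-longitude) map, a +Y-up axis, and -Z as the forward direction; the function names `reflect` and `direction_to_equirect_uv` are hypothetical and not taken from any particular AR SDK.

```python
import math

def reflect(incident, normal):
    """Reflect an incident direction about a unit normal: R = I - 2 (N . I) N."""
    d = sum(i * n for i, n in zip(incident, normal))
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))

def direction_to_equirect_uv(direction):
    """Map a 3D direction to (u, v) in [0, 1] on a latitude-longitude map.

    Assumed convention: +Y is up, -Z is forward, and u = 0.5 faces forward.
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    y = max(-1.0, min(1.0, y))  # guard asin against rounding error
    u = 0.5 + math.atan2(x, -z) / (2.0 * math.pi)
    v = 0.5 - math.asin(y) / math.pi
    return u, v
```

The reflected direction is computed per surface point from the view direction and the surface normal, and the resulting (u, v) pair selects the texel of the environment map that the virtual object appears to mirror.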

Core Concepts: Defining Scene Understanding

Scene understanding in augmented reality is a system's ability to recognize and interpret objects, surfaces, and spatial relationships within a physical environment, enabling interactive and context-aware experiences. Unlike environment mapping, which primarily captures the geometry and lighting of a space so that virtual content can be overlaid on it, scene understanding adds semantic analysis that lets AR systems identify and classify elements in real time. Its core concepts include depth sensing, object detection, and spatial awareness, which together support accurate placement of, and interaction with, virtual objects in the user's environment.
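One small piece of this semantic analysis can be sketched as follows: given three depth-sensed points on a surface, estimate the surface normal and label the surface as horizontal, vertical, or sloped. This is only an illustration under stated assumptions (a gravity-aligned +Y up axis, a 15-degree tolerance, and hypothetical function names); production systems typically fit planes to many points and use learned classifiers.

```python
import math

UP = (0.0, 1.0, 0.0)  # assumed gravity-aligned up axis

def plane_normal(p0, p1, p2):
    """Unit normal of the plane through three non-collinear 3D points."""
    u = [p1[i] - p0[i] for i in range(3)]
    v = [p2[i] - p0[i] for i in range(3)]
    n = (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )
    length = math.sqrt(sum(c * c for c in n))
    if length == 0.0:
        raise ValueError("points are collinear")
    return tuple(c / length for c in n)

def classify_surface(normal, tolerance_deg=15.0):
    """Label a surface by how closely its normal aligns with the up axis."""
    alignment = abs(sum(n * u for n, u in zip(normal, UP)))
    if alignment >= math.cos(math.radians(tolerance_deg)):
        return "horizontal"  # floor, table top, or ceiling
    if alignment <= math.sin(math.radians(tolerance_deg)):
        return "vertical"    # wall or door
    return "sloped"
```

A label like this is what lets an application decide, for example, that a virtual lamp belongs on a horizontal surface and a virtual poster on a vertical one.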

Core Concepts: Defining Environment Mapping

Environment mapping in augmented reality refers to the process of capturing and reconstructing the physical surroundings to create a digital model that accurately represents spatial geometry and surface properties. It involves techniques such as photogrammetry, depth sensing, and spatial meshing to generate detailed 3D representations used for realistic object placement and interaction. Environment mapping serves as the foundational layer enabling context-aware AR experiences by providing precise environmental data for scene rendering and occlusion handling.

Key Differences Between Scene Understanding and Environment Mapping

Scene understanding in augmented reality involves interpreting and classifying objects, surfaces, and spatial relationships within a user's environment to enable meaningful interaction and context-aware experiences. Environment mapping focuses on capturing and creating a dynamic 3D representation of the physical surroundings, primarily used for accurate lighting, reflections, and spatial anchoring. Key differences include scene understanding's emphasis on semantic analysis and object recognition, while environment mapping centers on spatial geometry and environmental texture reconstruction.

Role of Scene Understanding in Augmented Reality

Scene understanding in augmented reality enables the device to recognize objects, surfaces, and spatial relationships within the physical environment, facilitating accurate and context-aware digital content placement. It uses computer vision and machine learning algorithms to interpret real-world scenes, allowing AR applications to interact naturally with users and their surroundings. This capability supports immersive experiences by aligning virtual elements with real-world cues in real time, going beyond environment mapping alone, which captures geometry and lighting but not the meaning of what it captures.

Environment Mapping Techniques in AR Applications

Environment mapping techniques in augmented reality (AR) involve capturing and representing the physical surroundings to create immersive and context-aware experiences. Methods such as photogrammetry, depth sensing, and LiDAR scanning enable precise reconstruction of spatial geometry, allowing AR systems to accurately overlay virtual objects in real-world environments. Real-time environment mapping enhances occlusion, lighting estimation, and interaction fidelity, crucial for applications in gaming, navigation, and industrial maintenance.
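Occlusion is the easiest of these effects to illustrate. The sketch below assumes the mapping stage yields a per-pixel depth image of the real scene (in metres) and that the renderer produces a matching depth image for virtual content; the function name and the use of `None` for missing values are hypothetical choices for this example.

```python
def visible_virtual_pixels(real_depth, virtual_depth):
    """Per-pixel depth test between real and virtual surfaces.

    Returns a grid of booleans that is True where virtual content should be
    drawn, i.e. where it is closer to the camera than the real surface.
    None in virtual_depth means no virtual content at that pixel.
    None in real_depth means a missing sensor reading, treated as not occluding.
    """
    mask = []
    for real_row, virtual_row in zip(real_depth, virtual_depth):
        row = []
        for real_d, virtual_d in zip(real_row, virtual_row):
            if virtual_d is None:
                row.append(False)
            elif real_d is None:
                row.append(True)
            else:
                row.append(virtual_d < real_d)
        mask.append(row)
    return mask
```

Pixels where the mask is False are left showing the camera image, so a real chair in front of a virtual character hides the part of the character behind it.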

Challenges in Scene Understanding for AR

Scene understanding in augmented reality involves accurately interpreting and reconstructing complex spatial layouts, a task made harder by changing lighting conditions, occlusions, and varying object textures. Unlike environment mapping, which primarily captures geometry, lighting, and surface appearance, scene understanding requires real-time semantic segmentation and depth estimation to enable meaningful interactions. Ensuring robustness and low latency in diverse and unpredictable real-world settings remains a critical hurdle for AR applications.

Advancements in Environment Mapping Technologies

Advancements in environment mapping technologies have significantly enhanced augmented reality by enabling more accurate and dynamic representations of physical spaces. Techniques such as photogrammetry and LiDAR enable high-resolution 3D environment captures, improving spatial awareness and occlusion handling in AR applications. These innovations allow seamless integration of virtual objects with real-world surroundings, elevating user immersion and interaction fidelity.

Integrating Scene Understanding with Environment Mapping

Integrating scene understanding with environment mapping enhances augmented reality by enabling devices to recognize and interpret real-world objects and spaces while simultaneously creating detailed 3D models of the surroundings. This fusion allows for more accurate placement of virtual elements, improved occlusion handling, and realistic interactions between digital content and the physical environment. Leveraging advanced computer vision algorithms and sensor data, the combined approach significantly boosts spatial awareness and user immersion in AR applications.
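As a rough sketch of what this fusion can look like in application code, the example below pairs a semantic label (from scene understanding) with surface geometry (from environment mapping) and picks a surface on which to place a virtual object. The `MappedSurface` type, its fields, and `pick_placement_surface` are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

@dataclass
class MappedSurface:
    label: str                          # semantic class, e.g. "table" or "floor"
    center: Tuple[float, float, float]  # position in metres from the mapped geometry
    width: float                        # extent in metres
    depth: float                        # extent in metres

def pick_placement_surface(
    surfaces: Sequence[MappedSurface],
    wanted_label: str,
    min_width: float,
    min_depth: float,
) -> Optional[MappedSurface]:
    """Return the largest surface with the wanted label that fits the object."""
    candidates = [
        s for s in surfaces
        if s.label == wanted_label and s.width >= min_width and s.depth >= min_depth
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda s: s.width * s.depth)
```

Neither input is sufficient alone: the label without geometry cannot say whether the object fits, and the geometry without the label cannot distinguish a table from a floor.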

The Future of AR: Merging Scene Understanding and Environment Mapping

Scene understanding in augmented reality involves interpreting spatial relationships and recognizing objects within a user's environment, while environment mapping captures the geometry and texture of the physical surroundings. The future of AR lies in fusing these technologies to create more immersive and context-aware experiences, with real-time interaction with dynamic elements and precise spatial awareness. Advancements in machine learning and sensor fusion are expected to accelerate this integration, enhancing AR applications across gaming, navigation, and remote collaboration.

Scene Understanding vs Environment Mapping Infographic

techiny.com
