Direct rendering in augmented reality draws virtual objects straight into the user's view with minimal latency, which supports real-time interaction and immersion. Indirect rendering processes virtual content separately before compositing it with the real-world scene; this can add delay but may improve visual quality and compatibility with diverse hardware. The choice between the two depends on the application's performance demands and the trade-off between speed and graphical fidelity.

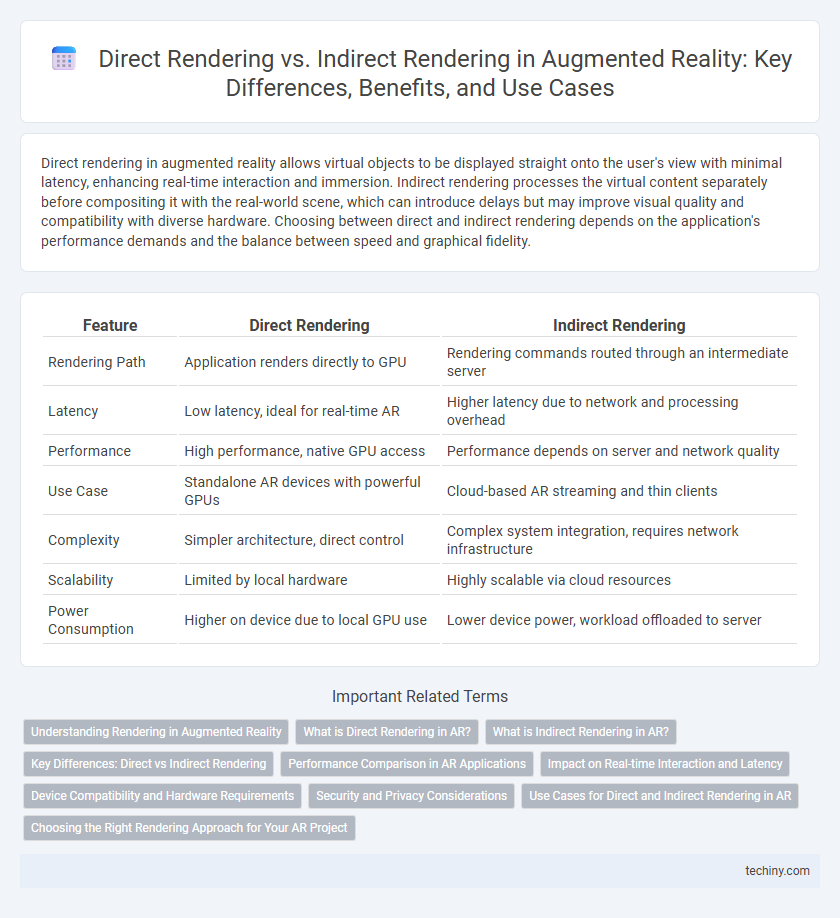

Table of Comparison

| Feature | Direct Rendering | Indirect Rendering |
|---|---|---|
| Rendering Path | Application renders directly to GPU | Rendering commands routed through an intermediate server |
| Latency | Low latency, ideal for real-time AR | Higher latency due to network and processing overhead |
| Performance | High performance, native GPU access | Performance depends on server and network quality |
| Use Case | Standalone AR devices with powerful GPUs | Cloud-based AR streaming and thin clients |
| Complexity | Simpler architecture, direct control | Complex system integration, requires network infrastructure |
| Scalability | Limited by local hardware | Highly scalable via cloud resources |
| Power Consumption | Higher on device due to local GPU use | Lower device power, workload offloaded to server |

Understanding Rendering in Augmented Reality

Direct rendering in augmented reality (AR) involves the immediate visualization of virtual objects by directly outputting graphics to the display, ensuring minimal latency and a more immersive user experience. Indirect rendering relies on intermediate processing steps, such as offloading rendering tasks to external servers or using buffered frames, which can introduce latency but allows for complex computations and enhanced detail. Understanding these rendering approaches is critical for optimizing AR applications in terms of performance, visual fidelity, and real-time interaction.

What is Direct Rendering in AR?

Direct rendering in AR is the process in which graphics are generated and sent to the device's display with no intermediate compositing or transfer step. Because the application accesses the hardware rendering pipeline directly, frames appear sooner and latency stays low, which improves real-time interaction and immersion. This matters most for AR applications that need rapid response and high frame rates, such as gaming and live navigation.

What is Indirect Rendering in AR?

Indirect rendering in augmented reality (AR) refers to the process where virtual content is first rendered off-screen into an intermediate buffer before being composited with the real-world camera feed. This technique enables complex visual effects and precise alignment of virtual objects with real environments by allowing additional processing steps such as image filtering or depth-based adjustments. Indirect rendering improves the visual integration of AR elements but can introduce latency compared to direct rendering, where content is rendered straight to the display.
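The compositing step described above can be illustrated with a small sketch. It assumes the virtual layer has already been rendered off-screen as RGBA pixels and that the camera frame is plain RGB at the same resolution; the function name `composite_over` and the nested-list pixel layout are hypothetical choices for illustration, not part of any AR SDK. Real pipelines do this blend on the GPU.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def composite_over(camera: List[List[RGB]], virtual: List[List[RGBA]]) -> List[List[RGB]]:
    """Blend an off-screen RGBA layer over a camera frame (source-over blend)."""
    same_rows = len(camera) == len(virtual)
    if not same_rows or any(len(c) != len(v) for c, v in zip(camera, virtual)):
        raise ValueError("camera frame and virtual layer must have the same size")

    out: List[List[RGB]] = []
    for cam_row, virt_row in zip(camera, virtual):
        row: List[RGB] = []
        for (cr, cg, cb), (vr, vg, vb, va) in zip(cam_row, virt_row):
            alpha = va / 255.0  # 0.0 = fully transparent, 1.0 = fully opaque
            row.append((
                round(vr * alpha + cr * (1.0 - alpha)),
                round(vg * alpha + cg * (1.0 - alpha)),
                round(vb * alpha + cb * (1.0 - alpha)),
            ))
        out.append(row)
    return out


# One-row frame: an opaque red virtual pixel next to a fully transparent one.
camera_frame = [[(100, 100, 100), (100, 100, 100)]]
virtual_layer = [[(200, 0, 0, 255), (0, 0, 0, 0)]]
blended = composite_over(camera_frame, virtual_layer)
```

Where the virtual layer is opaque the virtual pixel replaces the camera pixel, and where it is transparent the camera pixel passes through unchanged. Keeping the layer in its own buffer is what makes extra passes such as filtering or depth-based adjustment possible before this blend runs.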

Key Differences: Direct vs Indirect Rendering

Direct rendering in augmented reality (AR) systems has the GPU render graphics straight to the display frame buffer, which yields the lower latency and higher performance that real-time AR experiences depend on. Indirect rendering instead routes rendering commands through an intermediate layer or server before they reach the display; this can add latency but allows more complex processing or remote rendering. The key differences are latency, processing efficiency, and suitability for different AR applications: direct rendering is favored for time-sensitive tasks, while indirect rendering pays off when centralized resources are available.

Performance Comparison in AR Applications

Direct rendering in augmented reality (AR) applications generally performs better because the application communicates with the GPU directly, which minimizes latency and keeps interaction real-time. Indirect rendering adds intermediate processing layers that can introduce delays and reduce frame rates, which hurts the user experience in dynamic AR environments. In general, direct rendering achieves higher throughput and lower jitter, both of which are essential for seamless, responsive AR visuals.

Impact on Real-time Interaction and Latency

Direct rendering in augmented reality reduces latency by bypassing intermediate processing layers, which allows faster frame updates and more responsive real-time interaction. Indirect rendering adds compositing steps that lengthen the path from input to display and can make the experience feel less fluid. Optimizing the rendering pipeline to favor direct rendering where possible keeps virtual content better synchronized with the physical environment, which is crucial for immersive AR applications.
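A rough way to reason about this is to add up per-stage timings and compare the total with the time available per frame. The sketch below does that arithmetic. The stage names and millisecond values are assumed, illustrative numbers rather than measurements, and the model treats stages as strictly sequential, which is a simplification because real pipelines overlap work across frames.

```python
from typing import Dict

# Illustrative stage timings in milliseconds (assumed values, not measurements).
DIRECT_STAGES: Dict[str, float] = {"tracking": 2.0, "render": 6.0, "scanout": 8.0}
INDIRECT_STAGES: Dict[str, float] = {
    "tracking": 2.0,
    "render_offscreen": 6.0,
    "composite": 4.0,  # the extra step that indirect rendering adds
    "scanout": 8.0,
}


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per frame at a given display refresh rate."""
    if refresh_hz <= 0:
        raise ValueError("refresh_hz must be positive")
    return 1000.0 / refresh_hz


def pipeline_latency_ms(stages: Dict[str, float]) -> float:
    """Total latency when every stage runs one after another."""
    return sum(stages.values())


def fits_budget(stages: Dict[str, float], refresh_hz: float) -> bool:
    """True if the sequential pipeline finishes within one frame period."""
    return pipeline_latency_ms(stages) <= frame_budget_ms(refresh_hz)


added_ms = pipeline_latency_ms(INDIRECT_STAGES) - pipeline_latency_ms(DIRECT_STAGES)
```

With these assumed numbers the direct path fits inside a 60 Hz frame period and the indirect path does not, and the whole difference comes from the compositing stage. Substituting measured timings from a real device is the intended use.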

Device Compatibility and Hardware Requirements

Direct rendering in augmented reality uses the device's own GPU to render graphics in real time, which delivers high performance but requires capable hardware such as a dedicated graphics processor and sufficient memory bandwidth. Indirect rendering relies on cloud or external servers to process and render visuals before streaming them to the device, which broadens compatibility with lower-spec hardware but introduces potential latency and network dependency. Device compatibility therefore varies significantly: direct rendering favors high-end AR headsets and smartphones with robust GPUs, while indirect rendering supports a wider range of devices, including low-power AR glasses and mobile devices with limited computational resources.

Security and Privacy Considerations

Direct rendering in augmented reality (AR) systems processes visual data locally on the device, minimizing data transmission and reducing exposure to external threats, thereby enhancing user privacy and security. Indirect rendering involves offloading rendering tasks to cloud servers, which can introduce vulnerabilities such as data interception, unauthorized access, and potential breaches of sensitive user information during transmission or storage. Implementing robust encryption protocols and secure authentication methods is critical in indirect rendering to protect user data and maintain integrity in AR applications.
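One piece of that protection, checking that a streamed frame was not altered in transit, can be sketched with Python's standard `hmac` and `hashlib` modules. This is a minimal illustration under stated assumptions: the server and device already share a secret key, frames carry a numeric ID, and the helper names `sign_frame` and `verify_frame` are hypothetical. It covers integrity and authenticity only; confidentiality would still need transport encryption such as TLS.

```python
import hashlib
import hmac


def sign_frame(key: bytes, frame_id: int, payload: bytes) -> bytes:
    """Return an HMAC-SHA256 tag over the frame ID and the frame bytes."""
    message = frame_id.to_bytes(8, "big") + payload
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_frame(key: bytes, frame_id: int, payload: bytes, tag: bytes) -> bool:
    """Check the tag in constant time; False means tampering or a wrong key."""
    expected = sign_frame(key, frame_id, payload)
    return hmac.compare_digest(expected, tag)


# Hypothetical shared key and frame contents, for illustration only.
shared_key = b"example-shared-secret"
frame_bytes = b"encoded frame data"
frame_tag = sign_frame(shared_key, 1, frame_bytes)
```

Including the frame ID in the signed message means a tag cannot be reused to pass off an old frame under a new ID. A production system would also need key exchange and rotation, which this sketch leaves out.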

Use Cases for Direct and Indirect Rendering in AR

Direct rendering in augmented reality is ideal for applications requiring real-time interaction and low latency, such as gaming, medical visualization, and industrial maintenance, where immediate response and high frame rates are critical. Indirect rendering suits use cases involving complex lighting and detailed reflections, like architectural visualization and product design, where photorealistic quality enhances user experience but can tolerate higher processing delays. The choice between direct and indirect rendering hinges on the balance between visual fidelity and performance constraints specific to each AR scenario.

Choosing the Right Rendering Approach for Your AR Project

Direct rendering delivers real-time visuals by processing graphics data immediately on AR devices, ensuring minimal latency and high performance essential for immersive experiences. Indirect rendering, involving a separate server or cloud processing unit to handle graphics before sending the output to the AR device, offers scalability and complex scene handling but may introduce latency. Selecting the right rendering approach depends on project requirements such as device capability, network conditions, and desired visual fidelity to balance performance with user experience in augmented reality applications.
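The selection criteria above can be written down as a small decision helper. The sketch below is one possible encoding, not an established rule: the `ProjectProfile` fields, the `choose_rendering` function, and the 50 ms round-trip threshold are all assumptions made for illustration and should be tuned to the project.

```python
from dataclasses import dataclass


@dataclass
class ProjectProfile:
    device_gpu_capable: bool   # can the device render the scene locally?
    network_rtt_ms: float      # measured round-trip time to the render server
    needs_high_fidelity: bool  # is photorealistic quality a hard requirement?


def choose_rendering(profile: ProjectProfile, max_rtt_ms: float = 50.0) -> str:
    """Return "direct" or "indirect" based on a few coarse project traits.

    max_rtt_ms is an assumed tolerance for network delay, not a standard value.
    """
    if not profile.device_gpu_capable:
        # Without a capable local GPU, remote rendering is the only option.
        return "indirect"
    if profile.needs_high_fidelity and profile.network_rtt_ms <= max_rtt_ms:
        # The network is fast enough to trade some latency for visual quality.
        return "indirect"
    # A capable device with a slow network or modest fidelity needs stays local.
    return "direct"


headset = ProjectProfile(device_gpu_capable=True, network_rtt_ms=80.0, needs_high_fidelity=True)
glasses = ProjectProfile(device_gpu_capable=False, network_rtt_ms=30.0, needs_high_fidelity=False)
```

Here the headset profile resolves to direct rendering because its network is slower than the assumed tolerance, while the low-power glasses profile resolves to indirect rendering because the device cannot render locally.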

Direct Rendering vs Indirect Rendering Infographic

techiny.com
