Gesture recognition in augmented reality offers intuitive and precise control by tracking hand movements, enabling users to interact with digital objects naturally within their environment. Voice commands provide a hands-free interface, allowing seamless multitasking and accessibility, especially in scenarios where physical gestures are impractical. Balancing these input methods enhances user experience by combining the physical immediacy of gestures with the convenience of vocal instructions.

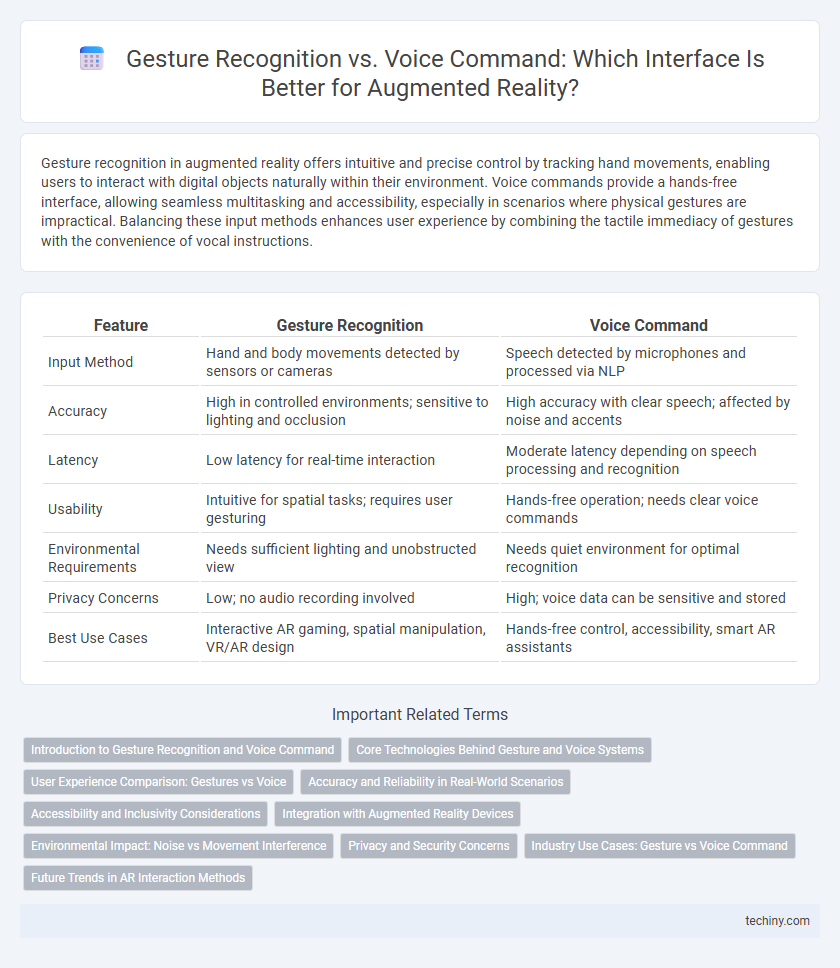

Table of Comparison

| Feature | Gesture Recognition | Voice Command |
|---|---|---|
| Input Method | Hand and body movements detected by sensors or cameras | Speech detected by microphones and processed via NLP |
| Accuracy | High in controlled environments; sensitive to lighting and occlusion | High accuracy with clear speech; affected by noise and accents |
| Latency | Low latency for real-time interaction | Moderate latency depending on speech processing and recognition |
| Usability | Intuitive for spatial tasks; requires user gesturing | Hands-free operation; needs clear voice commands |
| Environmental Requirements | Needs sufficient lighting and unobstructed view | Needs quiet environment for optimal recognition |
| Privacy Concerns | Low; no audio recording involved | High; voice data can be sensitive and stored |
| Best Use Cases | Interactive AR gaming, spatial manipulation, VR/AR design | Hands-free control, accessibility, smart AR assistants |

Introduction to Gesture Recognition and Voice Command

Gesture recognition in augmented reality (AR) systems enables users to interact with digital content through hand and body movements detected by sensors or cameras, offering an intuitive, controller-free control method. Voice command technology processes spoken language to execute commands within AR environments, providing hands-free interaction without physical input devices. Both methods enhance AR usability by providing natural, immersive interfaces that accommodate different user preferences and situational needs.

Core Technologies Behind Gesture and Voice Systems

Gesture recognition systems in augmented reality primarily rely on computer vision techniques, employing camera sensors and machine learning algorithms to interpret hand movements and spatial gestures. Voice command technologies utilize automatic speech recognition (ASR) and natural language processing (NLP) frameworks to convert spoken language into actionable commands within AR environments. Both systems integrate advanced sensor fusion and AI-driven pattern analysis to enhance accuracy and responsiveness in interactive AR applications.

User Experience Comparison: Gestures vs Voice

Gesture recognition in augmented reality offers intuitive, controller-free control that enhances user immersion by allowing natural physical movements to manipulate virtual objects. Voice commands provide a convenient, multitasking-friendly interface, enabling users to issue complex instructions without physical effort or interruption. User experience favors gestures for precision and spatial interaction, while voice commands excel in accessibility and in reducing physical effort, provided the environment is quiet enough for reliable recognition.

Accuracy and Reliability in Real-World Scenarios

Gesture recognition in augmented reality often achieves high accuracy in controlled environments, but its reliability in real-world applications can drop under poor lighting or when hands are occluded. Voice command systems rely on natural language processing and noise-cancellation technologies to maintain performance amid ambient noise, which improves their robustness in dynamic settings, although loud environments and unfamiliar accents still reduce recognition rates. Combining multimodal inputs can improve overall interaction accuracy and reliability, because each modality can compensate for the other's limitations.

Accessibility and Inclusivity Considerations

Gesture recognition in augmented reality enhances accessibility by enabling voice-free control for users with speech impairments or those in noisy environments, while voice commands provide an inclusive interface for individuals with mobility challenges or limited hand dexterity. Incorporating both modalities helps AR systems accommodate diverse user needs, promoting equitable interaction regardless of physical or environmental constraints. Optimizing AR interfaces for multimodal input supports broader usability and inclusivity, which is crucial for accessible technology design.

Integration with Augmented Reality Devices

Gesture recognition offers precise, real-time interaction within augmented reality (AR) environments by tracking hand and body movements, enabling intuitive control without physical controllers. Voice command integration provides hands-free operation through natural language processing, allowing users to execute complex commands and access information seamlessly within AR devices. Combining gesture recognition and voice commands enhances AR device usability, delivering a multimodal interface that adapts to diverse user contexts and improves immersive experiences.

Environmental Impact: Noise vs Movement Interference

Gesture recognition in augmented reality minimizes noise pollution by eliminating the need for vocal input, making it ideal for quiet environments and reducing auditory distractions. Voice commands, while hands-free, can contribute to noise interference in shared or public spaces, potentially disrupting others and affecting system accuracy. Movement interference from gestures can be mitigated with precise tracking technology, ensuring reliable interaction without environmental disturbance.
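The trade-off between noise and movement interference suggests letting the application pick its input mode from the surroundings. The following sketch assumes the device exposes an ambient noise level and an illuminance reading; the function name, the 70 dB and 50 lux thresholds, and the `quiet_zone` flag are hypothetical values for illustration, not measured limits.

```python
def preferred_modality(noise_db: float, lux: float, quiet_zone: bool = False,
                       noise_limit_db: float = 70.0, min_lux: float = 50.0) -> str:
    """Pick an input mode from ambient conditions (all thresholds are assumed).

    noise_db   -- ambient sound level; above the limit, speech recognition suffers
    lux        -- illuminance; below the minimum, camera-based tracking suffers
    quiet_zone -- True where speaking aloud would disturb others (e.g. a library)
    """
    voice_ok = noise_db < noise_limit_db and not quiet_zone
    gesture_ok = lux >= min_lux
    if voice_ok and gesture_ok:
        return "both"
    if gesture_ok:
        return "gesture"
    if voice_ok:
        return "voice"
    return "fallback"  # e.g. a handheld controller or touch input
```

A shared office would typically set `quiet_zone=True` and fall back to gestures, while a dim but quiet room would favor voice.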

Privacy and Security Concerns

Gesture recognition in augmented reality can offer enhanced privacy when movements are processed locally on the device, which reduces exposure to cloud-based data breaches, although camera imagery is itself sensitive if it is stored or transmitted. Voice command systems, while convenient, often transmit audio recordings to external servers, increasing risks related to unauthorized access and data interception. Securing AR interfaces demands robust encryption and user consent protocols to mitigate vulnerabilities linked to both input methods.

Industry Use Cases: Gesture vs Voice Command

Gesture recognition in augmented reality offers precise control in industrial settings such as assembly lines and quality inspections, enabling controller-free operation where manual dexterity is essential. Voice command enhances efficiency in settings such as warehouses or maintenance sites, where workers' hands are often occupied, by allowing them to interact with AR systems without physical contact; in loud areas it depends on noise-robust microphones and recognition. Both technologies optimize workflow, but gesture recognition excels in tasks requiring fine motor skills, while voice command supports multitasking and accessibility in dynamic industrial scenarios.

Future Trends in AR Interaction Methods

Future trends in AR interaction methods emphasize increasingly sophisticated gesture recognition systems using AI-driven computer vision, enabling precise, natural hand movements for immersive user experiences. Voice command integration advances with context-aware, multi-language processing, allowing seamless, hands-free AR control in diverse environments. Combining gesture and voice interfaces creates hybrid models that improve accessibility, responsiveness, and customization in next-generation augmented reality platforms.

Gesture Recognition vs Voice Command Infographic

techiny.com
