Real-world occlusion occurs when physical objects block the view of virtual elements, enhancing the realism of augmented reality by integrating digital content seamlessly into the environment. Virtual occlusion is occlusion resolved entirely in the rendering pipeline: virtual objects hide one another, or cover real content, according to their depth in the scene, with no physical object doing the blocking. Mastering both occlusion types is essential for creating immersive and believable AR experiences that accurately reflect spatial relationships between real and digital objects.

Comparison Table

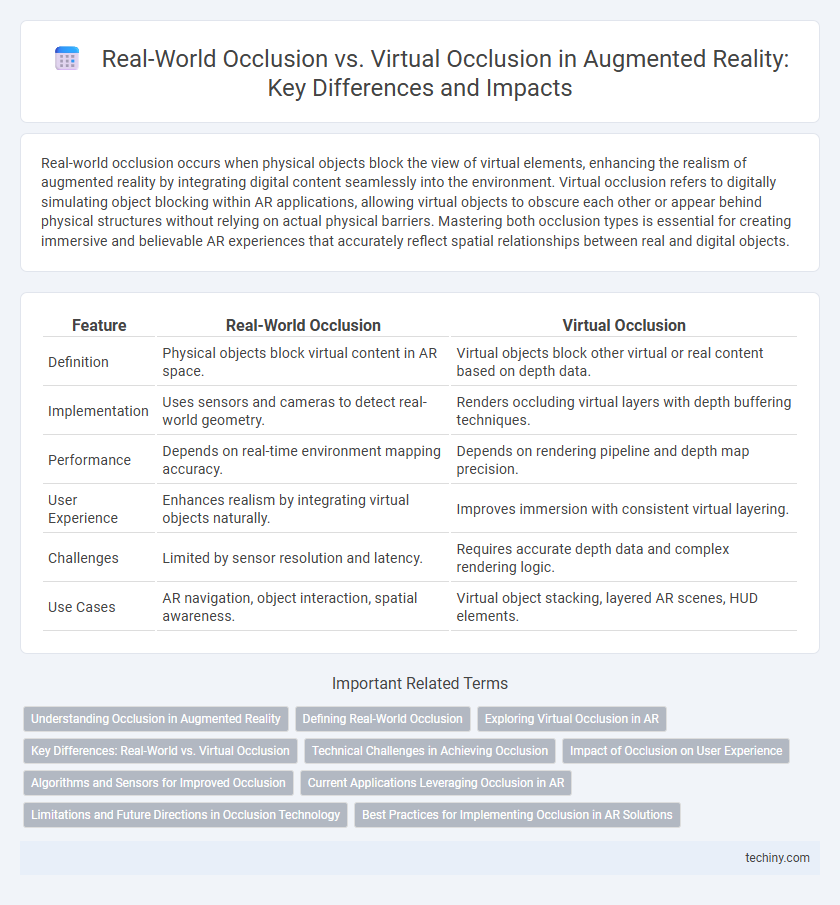

| Feature | Real-World Occlusion | Virtual Occlusion |
|---|---|---|
| Definition | Physical objects block virtual content in AR space. | Virtual objects block other virtual or real content based on depth data. |
| Implementation | Uses sensors and cameras to detect real-world geometry. | Renders occluding virtual layers with depth buffering techniques. |
| Performance | Depends on real-time environment mapping accuracy. | Depends on rendering pipeline and depth map precision. |
| User Experience | Enhances realism by integrating virtual objects naturally. | Improves immersion with consistent virtual layering. |
| Challenges | Limited by sensor resolution and latency. | Requires accurate depth data and complex rendering logic. |
| Use Cases | AR navigation, object interaction, spatial awareness. | Virtual object stacking, layered AR scenes, HUD elements. |

Understanding Occlusion in Augmented Reality

Understanding occlusion in augmented reality involves distinguishing real-world occlusion, where physical objects block virtual elements, from virtual occlusion, where digital objects mask other virtual content. Accurate occlusion handling enhances the realism and immersion of AR experiences by ensuring proper depth perception and spatial alignment. Advanced computer vision and depth-sensing technologies enable AR systems to dynamically detect and render occlusions, creating seamless integration between virtual and physical environments.

Defining Real-World Occlusion

Real-world occlusion in augmented reality refers to accurately rendering virtual objects so they are naturally hidden or partially obscured by physical objects in the user's environment. This process relies on spatial mapping and depth sensing technologies, such as LiDAR or stereo cameras, to detect real-world surfaces and their geometries. Proper implementation of real-world occlusion enhances immersion by ensuring virtual content interacts seamlessly with the physical space.
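At its core, real-world occlusion is a per-pixel depth comparison: a virtual pixel is drawn only where the virtual surface is closer to the camera than the real surface the sensor measured at that pixel. The Python sketch below shows that test on tiny hand-made depth maps. The function name `occlusion_mask`, the use of metres, and the rule that a sensor value of 0 or NaN means "no reading" are assumptions for illustration, not the behaviour of any particular SDK.

```python
import math
from typing import List, Optional


def occlusion_mask(
    real_depth: List[List[float]],
    virtual_depth: List[List[Optional[float]]],
) -> List[List[bool]]:
    """Return True where a virtual pixel should be drawn.

    real_depth: per-pixel distance (metres) to the nearest physical surface,
        as reported by a depth sensor. Values <= 0 or NaN are treated as
        "no reading" (an assumption; sensors differ in how they flag this).
    virtual_depth: per-pixel distance of the rendered virtual object,
        or None where no virtual content covers the pixel.
    """
    mask: List[List[bool]] = []
    for real_row, virt_row in zip(real_depth, virtual_depth):
        row: List[bool] = []
        for real_z, virt_z in zip(real_row, virt_row):
            if virt_z is None:
                row.append(False)  # nothing virtual here to draw
            elif math.isnan(real_z) or real_z <= 0:
                row.append(True)  # no real depth: fall back to drawing the virtual pixel
            else:
                row.append(virt_z < real_z)  # draw only if the virtual surface is nearer
        mask.append(row)
    return mask


# Hypothetical 2x2 example: a real object 0.8 m away covers the top-right pixel.
real = [[2.0, 0.8], [2.0, 0.0]]
virt = [[1.5, 1.5], [None, 1.5]]
print(occlusion_mask(real, virt))  # [[True, False], [False, True]]
```

Falling back to drawing the virtual pixel when the sensor has no reading is one design choice; an app could instead hide it, trading missing content for fewer false overlaps.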

Exploring Virtual Occlusion in AR

Virtual occlusion in augmented reality governs how digital objects hide one another: when several virtual elements overlap on screen, the renderer compares their depths and draws only the nearest surface at each pixel, so layered content such as stacked objects or HUD elements keeps a consistent front-to-back order. When depth information about the real scene is also available, the same depth test lets virtual objects be drawn in front of real-world elements wherever they are nearer to the camera. This consistent layering improves user immersion and interaction fidelity, making AR applications in gaming, design, and training more convincing and effective.

Key Differences: Real-World vs. Virtual Occlusion

Real-world occlusion in augmented reality hides virtual objects behind physical ones using depth sensing and environmental understanding, so virtual content sits convincingly in the user's surroundings. Virtual occlusion, by contrast, is computed in software from the application's own scene, typically by depth-testing pre-defined virtual object models against one another to resolve how virtual elements overlap; it needs no sensor data, but it also knows nothing about the physical scene. The key difference is that real-world occlusion relies on live sensor data for dynamic, context-aware interaction, whereas virtual occlusion depends only on the virtual scene description and receives no real-time feedback from the environment.
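Overlaps between virtual elements are commonly resolved with a depth buffer (z-buffer): for each pixel, the renderer keeps the nearest fragment seen so far. The sketch below is a minimal software version of that idea. The `Fragment` tuple (pixel coordinates, depth, and a text label standing in for colour) and the function name are hypothetical; real renderers perform this test on the GPU.

```python
from typing import List, Optional, Tuple

# One rasterised piece of a virtual object: (x, y, depth_m, label).
Fragment = Tuple[int, int, float, str]


def composite_virtual(
    width: int, height: int, fragments: List[Fragment]
) -> List[List[Optional[str]]]:
    """Resolve overlaps between virtual objects with a per-pixel depth test."""
    depth = [[float("inf")] * width for _ in range(height)]
    image: List[List[Optional[str]]] = [[None] * width for _ in range(height)]
    for x, y, z, label in fragments:
        # Keep a fragment only if it is nearer than what the buffer already holds,
        # so (apart from exact depth ties) the result does not depend on draw order.
        if z < depth[y][x]:
            depth[y][x] = z
            image[y][x] = label
    return image


# Hypothetical scene: a cube 2 m away partly covered by a floating label 1 m away.
frags = [(0, 0, 2.0, "cube"), (1, 0, 2.0, "cube"), (1, 0, 1.0, "label"), (2, 0, 1.0, "label")]
print(composite_virtual(3, 1, frags))  # [['cube', 'label', 'label']]
```

Because the virtual geometry is known exactly, this test is precise among virtual objects; the precision problems described elsewhere in the article arise only when real-world depth enters the comparison.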

Technical Challenges in Achieving Occlusion

Achieving accurate real-world occlusion in augmented reality involves complex depth sensing and spatial mapping to correctly identify and render objects behind physical barriers. Virtual occlusion demands precise alignment of digital objects with real-world geometry, requiring advanced algorithms to handle varying lighting conditions and dynamic environments. Technical challenges include latency in depth data processing and limited sensor resolution, which often result in imperfect blending and visual artifacts.
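A rough calculation shows why latency and sensor resolution matter. If the head keeps rotating while depth is captured and processed, the occlusion edge is drawn where the real edge used to be; and a low-resolution depth map limits how finely that edge can be placed in the camera image. The helper names and all numbers below (rotation speed, latency, pixels per degree, image widths) are hypothetical values chosen to illustrate the arithmetic, not measurements of any device.

```python
def latency_misregistration_px(
    angular_velocity_deg_s: float, latency_s: float, pixels_per_degree: float
) -> float:
    """Approximate on-screen drift of an occlusion edge caused by pipeline latency.

    Small-angle, pure-rotation approximation: the occlusion mask is applied
    against a head pose that is already latency_s seconds out of date.
    """
    angle_error_deg = angular_velocity_deg_s * latency_s
    return angle_error_deg * pixels_per_degree


def depth_sample_footprint_px(colour_width_px: int, depth_width_px: int) -> float:
    """Colour pixels spanned horizontally by one depth sample after upsampling.

    Without edge-aware refinement, occlusion edges snap to a grid this coarse.
    """
    return colour_width_px / depth_width_px


# Hypothetical numbers, chosen only to illustrate the arithmetic:
print(latency_misregistration_px(100.0, 0.020, 20.0))  # about 40 px of edge drift
print(depth_sample_footprint_px(1920, 256))  # 7.5
```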

Impact of Occlusion on User Experience

Real-world occlusion in augmented reality ensures virtual objects are accurately hidden or revealed based on their interaction with physical environments, significantly improving spatial realism and user immersion. Virtual occlusion, by contrast, relies on pre-defined layering and can create discrepancies that break the illusion of integration between real and digital elements. Effective occlusion handling reduces visual confusion and enhances depth perception, leading to a seamless AR experience that closely mimics natural sight.

Algorithms and Sensors for Improved Occlusion

Depth estimation algorithms and machine learning-based segmentation enable accurate real-world occlusion by separating physical objects, such as people or furniture, from the rest of an augmented reality scene. Sensors including LiDAR, time-of-flight cameras, and stereo vision systems capture the depth information that both occlusion types rely on, ensuring digital elements correctly appear behind or in front of real-world objects. Integrating these technologies improves spatial coherence and immersion, making occlusion handling more realistic.
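Two of the sensor types above turn raw measurements into depth with simple formulas. A rectified stereo pair gives depth Z = f * B / d from the focal length f (in pixels), the baseline B between the cameras, and the disparity d (in pixels); a direct time-of-flight sensor halves the distance light travels during the measured round trip. The sketch below implements both. The function names and example camera parameters are hypothetical, and indirect time-of-flight cameras, which infer the round trip from a phase shift, are not covered.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity: the point is effectively at infinity (or was not matched).
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


def direct_tof_depth_m(round_trip_time_s: float) -> float:
    """Depth from a direct time-of-flight measurement: light covers the distance twice."""
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


# Hypothetical camera: 700 px focal length, 6 cm baseline, 21 px disparity.
print(stereo_depth_m(700.0, 0.06, 21.0))  # about 2.0 m
print(direct_tof_depth_m(10e-9))  # about 1.5 m for a 10 ns round trip
```

Because stereo depth is inversely proportional to disparity, a one-pixel matching error costs more depth accuracy for distant surfaces than for nearby ones.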

Current Applications Leveraging Occlusion in AR

Current applications leveraging occlusion in augmented reality enhance user immersion by accurately blending virtual objects with the physical environment. Real-world occlusion enables digital elements to be hidden behind real objects, improving depth perception and realism in AR navigation, gaming, and retail experiences. Virtual occlusion, where real objects are obscured by virtual ones, is utilized in training simulations and design prototyping to facilitate interaction between physical and digital assets.

Limitations and Future Directions in Occlusion Technology

Real-world occlusion in augmented reality faces significant limitations because complex environments are hard to map accurately and occlusion must be updated in real time, which often results in visual artifacts or misaligned overlays. Virtual occlusion techniques must balance computational efficiency against realism, as current algorithms may not fully capture the depth and texture variability of real objects. Future directions emphasize better sensor fusion, AI-driven depth estimation, and adaptive occlusion frameworks to create more seamless and immersive AR experiences.
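One common way to fuse depth from several sources, for example a LiDAR reading and an AI-driven depth estimate, is inverse-variance weighting: each estimate counts in proportion to how certain it is. The sketch below assumes each source supplies a per-pixel variance and that their errors are independent. The names and numbers are illustrative, and this is one fusion technique among many, not a method the article prescribes.

```python
from typing import Optional, Sequence, Tuple

# (depth in metres, variance in m^2); the variances are assumed to be known.
Estimate = Tuple[float, float]


def fuse_depth(estimates: Sequence[Optional[Estimate]]) -> Optional[float]:
    """Inverse-variance weighted fusion of independent depth estimates for one pixel.

    Missing estimates (None) and non-positive variances are skipped;
    returns None if nothing usable remains.
    """
    usable = [e for e in estimates if e is not None and e[1] > 0]
    if not usable:
        return None
    weights = [1.0 / variance for _, variance in usable]
    weighted_sum = sum(w * depth for w, (depth, _) in zip(weights, usable))
    return weighted_sum / sum(weights)


# Hypothetical pixel: a confident LiDAR reading and a noisier learned estimate.
lidar = (2.0, 0.01)
learned = (2.3, 0.09)
print(fuse_depth([lidar, learned]))  # about 2.03 m, pulled toward the LiDAR value
print(fuse_depth([None, learned]))  # falls back to the learned estimate (about 2.3 m)
```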

Best Practices for Implementing Occlusion in AR Solutions

Effective augmented reality occlusion requires precise depth sensing and environment mapping to distinguish real-world objects from virtual elements. Machine learning models can further improve occlusion accuracy by predicting object boundaries and motion in dynamic scenes. Best practices include integrating sensor depth into the renderer's depth buffer in real time, calibrating sensors, and minimizing latency so the physical and digital layers stay aligned.
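Integrating sensor depth into the depth buffer means converting a metric distance into the non-linear value the GPU stores, so real surfaces and virtual geometry can share one depth test. The sketch below assumes an OpenGL-style perspective projection with the default 0 to 1 depth range; other graphics APIs and reversed-Z setups use different mappings, and many AR SDKs perform this step for you. The function name is hypothetical.

```python
def metric_to_depth_buffer(distance_m: float, near_m: float, far_m: float) -> float:
    """Convert a positive eye-space distance to a [0, 1] depth-buffer value.

    Assumes an OpenGL-style perspective projection and the default 0..1
    depth range, so the value is comparable with what the GPU writes for
    virtual geometry rendered with the same near/far planes.
    """
    if not 0 < near_m < far_m:
        raise ValueError("expected 0 < near_m < far_m")
    # Clamp readings outside the view frustum. Invalid sensor values (e.g. 0)
    # should be filtered out beforehand; here they would clamp to the near plane.
    d = min(max(distance_m, near_m), far_m)
    return far_m * (d - near_m) / (d * (far_m - near_m))


# Hypothetical frustum: near = 0.1 m, far = 10 m.
for metres in (0.1, 0.5, 1.0, 5.0, 10.0):
    print(metres, round(metric_to_depth_buffer(metres, 0.1, 10.0), 4))
```

Printing these values shows that most of the buffer's range is spent close to the near plane, which is why choosing a near plane no closer than necessary helps depth precision for occlusion edges farther away.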

Real-World Occlusion vs Virtual Occlusion Infographic

techiny.com
