Visual Simultaneous Localization and Mapping (V-SLAM) uses camera data to build real-time 3D maps while tracking the device's position in augmented reality environments. LiDAR-based mapping uses laser sensors to measure depth directly, which gives it high precision across lighting conditions, usually at a higher hardware cost and power draw. Choosing between V-SLAM and LiDAR depends on the requirements of the specific AR application and means balancing accuracy, environmental adaptability, and hardware constraints.

Table of Comparison

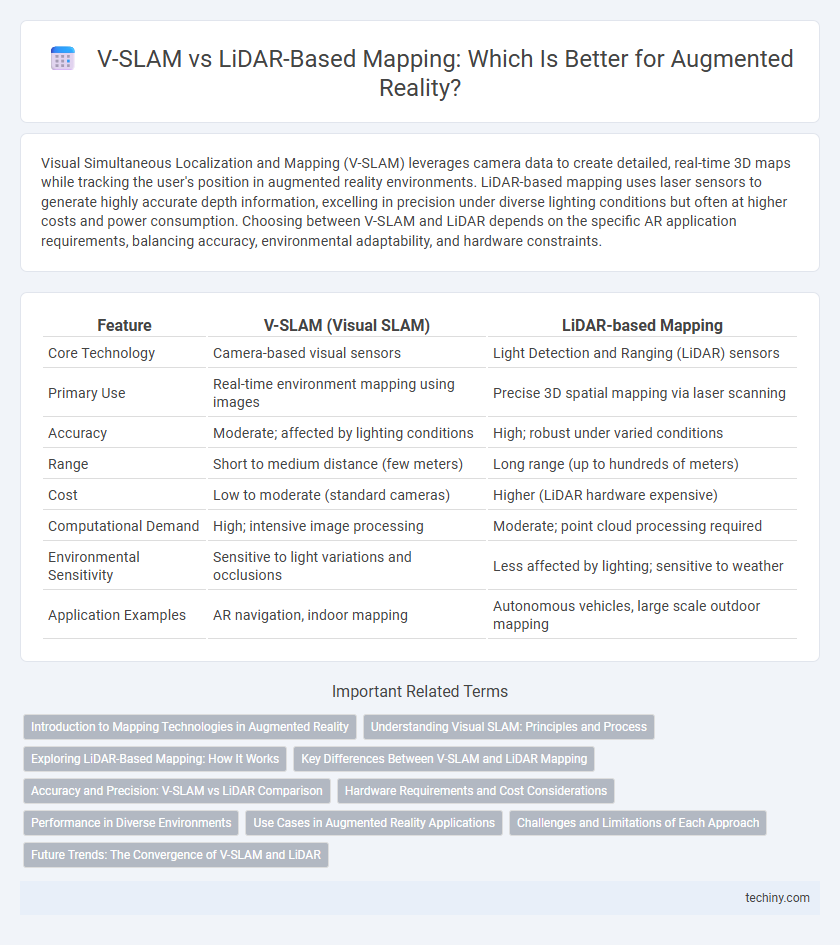

| Feature | V-SLAM (Visual SLAM) | LiDAR-based Mapping |
|---|---|---|
| Core Technology | Camera-based visual sensors | Light Detection and Ranging (LiDAR) sensors |
| Primary Use | Real-time environment mapping using images | Precise 3D spatial mapping via laser scanning |
| Accuracy | Moderate; affected by lighting conditions | High; robust under varied conditions |
| Range | Short to medium distance (few meters) | Long range (up to hundreds of meters) |
| Cost | Low to moderate (standard cameras) | Higher (LiDAR hardware expensive) |
| Computational Demand | High; intensive image processing | Moderate; point cloud processing required |
| Environmental Sensitivity | Sensitive to light variations and occlusions | Less affected by lighting; sensitive to weather |
| Application Examples | AR navigation, indoor mapping | Autonomous vehicles, large scale outdoor mapping |

Introduction to Mapping Technologies in Augmented Reality

V-SLAM uses camera data to build real-time 3D maps of an environment and to localize the device within them. LiDAR-based mapping uses laser scanning to produce precise depth measurements and detailed spatial models. Both technologies provide the spatial awareness that AR systems depend on: V-SLAM performs best in texture-rich environments, while LiDAR is more accurate in geometrically complex or low-light conditions.

Understanding Visual SLAM: Principles and Process

V-SLAM uses camera input to construct a real-time 3D map by detecting and tracking visual features in the environment, which lets it localize the device without prior knowledge of the surroundings. A typical V-SLAM pipeline consists of feature extraction, motion estimation, loop closure detection, and map optimization, which together keep the map consistent as the scene changes and estimation errors accumulate. Compared to LiDAR-based mapping, V-SLAM captures richer contextual information from visual data but can struggle in low visibility or on textureless surfaces.
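To make the motion estimation and loop closure steps more concrete, here is a minimal 2D sketch in Python. It is an illustration under simplifying assumptions, not how any particular V-SLAM system works: the frame-to-frame motions are made-up numbers rather than estimates from image features, the loop is assumed to end at the starting pose, and the correction simply spreads the position error linearly along the path instead of running a real map optimization. The function names `compose`, `integrate`, and `close_loop` are hypothetical.

```python
import math

def compose(pose, delta):
    """Apply a relative motion (dx, dy, dtheta), given in the device frame, to a world pose."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    return (
        x + dx * math.cos(theta) - dy * math.sin(theta),
        y + dx * math.sin(theta) + dy * math.cos(theta),
        theta + dtheta,
    )

def integrate(start, deltas):
    """Chain frame-to-frame motion estimates into a trajectory."""
    trajectory = [start]
    for delta in deltas:
        trajectory.append(compose(trajectory[-1], delta))
    return trajectory

def close_loop(trajectory):
    """Naive correction: pull the last pose back onto the first and
    spread the position error linearly over the poses in between."""
    x_ref, y_ref, _ = trajectory[0]
    x_end, y_end, _ = trajectory[-1]
    err_x, err_y = x_end - x_ref, y_end - y_ref
    n = len(trajectory) - 1
    return [
        (x - err_x * i / n, y - err_y * i / n, theta)
        for i, (x, y, theta) in enumerate(trajectory)
    ]

# Hypothetical walk around a 2 m square where every turn is overestimated by 2 degrees.
turn = math.radians(90 + 2)
deltas = [(2.0, 0.0, turn)] * 4
raw = integrate((0.0, 0.0, 0.0), deltas)

# Accumulated drift: distance between the estimated end pose and the true start.
drift = math.hypot(raw[-1][0] - raw[0][0], raw[-1][1] - raw[0][1])
corrected = close_loop(raw)
print(f"drift before loop closure: {drift:.3f} m")
```

The point of the sketch is that small per-frame errors compound into a visible gap when the device returns to a known place, and recognizing that place gives the system a constraint it can use to correct the whole trajectory.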

Exploring LiDAR-Based Mapping: How It Works

LiDAR-based mapping utilizes laser pulses to measure distances by illuminating targets and analyzing the reflected light, creating high-resolution 3D spatial maps with precise depth information. This technology captures detailed environmental geometry even in low-light or feature-poor conditions, making it highly effective for autonomous navigation and complex augmented reality applications. Unlike V-SLAM, which relies on camera images and visual features for localization and mapping, LiDAR provides direct metric measurements, enhancing accuracy and robustness in dynamic or visually challenging environments.
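The distance measurement described above can be sketched in a few lines of Python. This assumes a simple pulsed time-of-flight sensor that reports a round-trip time plus the beam's azimuth and elevation; the sample timings, the angle convention, and the names `tof_range` and `to_point` are illustrative assumptions, not a specific device's interface.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def tof_range(round_trip_seconds):
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0

def to_point(range_m, azimuth_rad, elevation_rad):
    """Convert one return (range plus beam angles) to x, y, z in the sensor frame."""
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )

# Hypothetical returns: (round-trip time in seconds, azimuth, elevation)
returns = [
    (20e-9, 0.0, 0.0),
    (33.4e-9, math.radians(45), math.radians(10)),
]

# Each return becomes one metric 3D point; many returns form the point cloud.
cloud = [to_point(tof_range(t), az, el) for t, az, el in returns]
```

A 20 ns round trip corresponds to roughly 3 m, which shows why the timing electronics need sub-nanosecond resolution to reach centimetre-level depth accuracy.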

Key Differences Between V-SLAM and LiDAR Mapping

V-SLAM generates 3D maps and tracks device position by analyzing visual features in camera images, which allows a low-cost, lightweight implementation in AR applications. LiDAR-based mapping measures distances with laser pulses, providing precise depth information and robust performance in low-light or textureless environments. The key difference is that V-SLAM depends on lighting conditions and feature richness, whereas LiDAR offers better depth accuracy and is less affected by the visual appearance of the scene.

Accuracy and Precision: V-SLAM vs LiDAR Comparison

V-SLAM builds detailed 3D maps from camera data and can be highly precise in texture-rich environments, but its accuracy can degrade under poor lighting or when the scene contains repetitive patterns. LiDAR-based mapping measures depth directly with laser pulses, so it delivers better depth accuracy and precision and performs reliably across lighting conditions and complex geometries. Combining V-SLAM and LiDAR sensor data improves overall mapping robustness by balancing visual detail with depth accuracy in AR applications.
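One simple way to picture how the two depth sources could be combined is an inverse-variance weighted average, sketched below in Python. This is a textbook fusion rule for two independent noisy measurements, offered as an illustration rather than as the method any particular AR system uses; the depth readings, the noise levels, and the function name `fuse` are hypothetical.

```python
def fuse(depth_a, sigma_a, depth_b, sigma_b):
    """Inverse-variance weighted average of two independent depth estimates.

    Returns the fused depth and its standard deviation.
    """
    w_a = 1.0 / sigma_a ** 2
    w_b = 1.0 / sigma_b ** 2
    fused = (w_a * depth_a + w_b * depth_b) / (w_a + w_b)
    fused_sigma = (1.0 / (w_a + w_b)) ** 0.5
    return fused, fused_sigma

# Hypothetical readings for the same surface point, in metres.
camera_depth, camera_sigma = 2.10, 0.10  # visual estimate, assumed noisier
lidar_depth, lidar_sigma = 2.02, 0.02    # LiDAR estimate, assumed tighter

depth, sigma = fuse(camera_depth, camera_sigma, lidar_depth, lidar_sigma)
print(f"fused depth: {depth:.3f} m, sigma: {sigma:.4f} m")
```

With these numbers the fused depth lands close to the LiDAR reading because that measurement is assumed to be less noisy, and the fused uncertainty is slightly smaller than either input's. Where LiDAR returns are missing or unreliable, the same rule would lean on the camera estimate instead.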

Hardware Requirements and Cost Considerations

Visual Simultaneous Localization and Mapping (V-SLAM) systems primarily rely on RGB cameras and standard processors, resulting in lower hardware costs and broader device compatibility compared to LiDAR-based mapping. LiDAR sensors require specialized, often expensive components such as laser emitters and photodetectors, driving up the overall system cost and power consumption. For AR applications prioritizing affordability and ease of integration, V-SLAM offers a more cost-effective and hardware-efficient solution than LiDAR-based approaches.

Performance in Diverse Environments

V-SLAM leverages camera data to create real-time 3D maps, excelling in texture-rich, indoor settings but struggling under low-light or feature-poor conditions. LiDAR-based mapping provides superior accuracy and reliability in diverse environments, including outdoor, low-visibility, or dynamic scenes, due to its active sensing and precise distance measurements. In mixed or challenging environments, integrating V-SLAM with LiDAR yields robust performance by combining visual detail with comprehensive spatial awareness.

Use Cases in Augmented Reality Applications

V-SLAM suits AR applications that need real-time environment mapping from standard RGB cameras, which makes it a good fit for mobile AR experiences such as indoor navigation and gaming. LiDAR-based mapping offers higher accuracy and better depth perception, which benefits applications in complex outdoor settings such as autonomous vehicles and large-scale construction site visualization. Combining V-SLAM with LiDAR can improve robustness and precision in AR, supporting interaction with both dynamic and static environments.

Challenges and Limitations of Each Approach

V-SLAM is sensitive to lighting conditions, motion blur, and textureless environments, all of which can degrade accuracy and robustness in AR applications. LiDAR-based mapping offers precise depth measurements and is robust to lighting variations, but it comes with higher costs and bulkier hardware, and it performs worse on reflective or transparent surfaces. Both approaches require significant computational resources, but LiDAR typically draws more power, which complicates real-time processing and device miniaturization for AR deployments.

Future Trends: The Convergence of V-SLAM and LiDAR

Future trends in augmented reality emphasize the convergence of Visual Simultaneous Localization and Mapping (V-SLAM) and LiDAR-based mapping to enhance spatial accuracy and environmental understanding. Integrating V-SLAM's real-time visual data processing with LiDAR's precise depth sensing enables robust, scalable mapping solutions for AR applications in complex indoor and outdoor environments. Advances in sensor fusion algorithms and edge computing hardware will drive improvements in latency, reliability, and power efficiency, unlocking new possibilities for immersive AR experiences.

Visual Simultaneous Localization and Mapping (V-SLAM) vs LiDAR-based Mapping Infographic

techiny.com
