Pose estimation identifies the position and orientation of a specific object or person in 3D space, enabling precise spatial understanding for applications like AR gaming and virtual try-ons. Object detection locates and classifies objects within a 2D image or video frame, providing essential information for overlaying digital content onto real-world scenes. While object detection determines what is present, pose estimation reveals how it is positioned, offering deeper interaction possibilities in augmented reality environments.

Table of Comparison

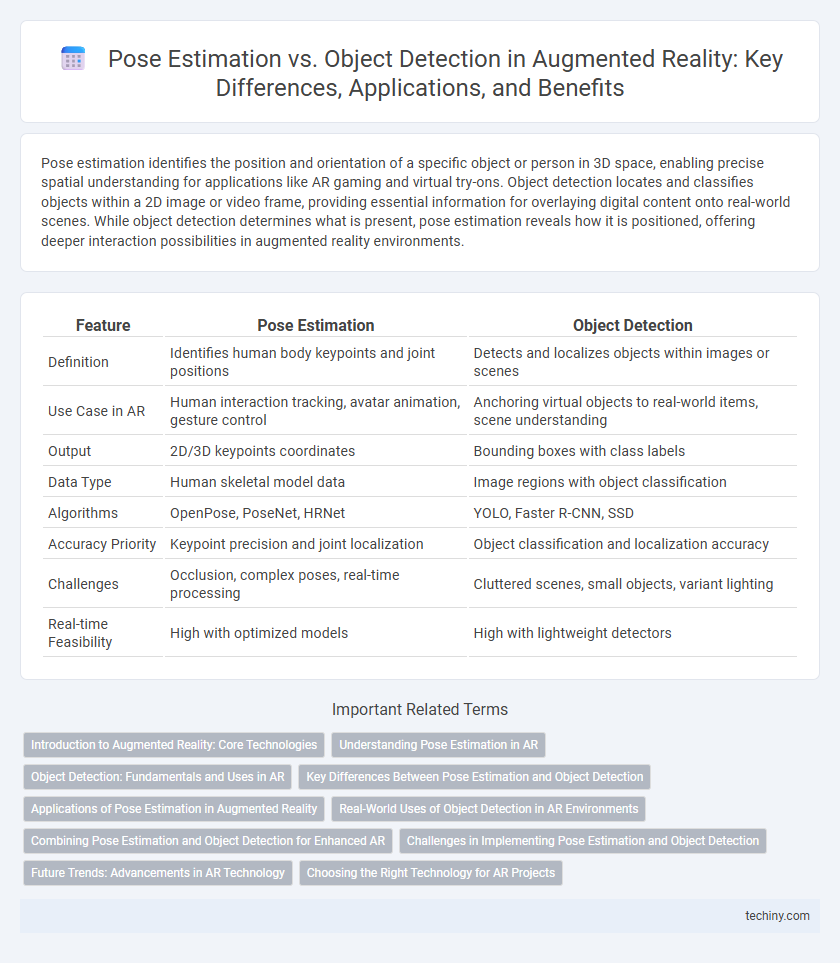

| Feature | Pose Estimation | Object Detection |
|---|---|---|
| Definition | Estimates the position and orientation of a person or object, often as body keypoints and joint positions | Detects and localizes objects within images or scenes |
| Use Case in AR | Human interaction tracking, avatar animation, gesture control | Anchoring virtual objects to real-world items, scene understanding |
| Output | 2D/3D keypoint coordinates | Bounding boxes with class labels |
| Data Type | Human skeletal model data | Image regions with object classification |
| Algorithms | OpenPose, PoseNet, HRNet | YOLO, Faster R-CNN, SSD |
| Accuracy Priority | Keypoint precision and joint localization | Object classification and localization accuracy |
| Challenges | Occlusion, complex poses, real-time processing | Cluttered scenes, small objects, varying lighting |
| Real-time Feasibility | High with optimized models | High with lightweight detectors |

Introduction to Augmented Reality: Core Technologies

Pose estimation captures the orientation and position of objects or users in 3D space, which is crucial for seamless interaction in augmented reality environments. Object detection identifies and classifies objects within a scene, so that relevant virtual information can be overlaid on physical objects. Together, these core technologies enhance spatial awareness and interaction fidelity, driving immersive AR experiences.

Understanding Pose Estimation in AR

Pose estimation in augmented reality determines the position and orientation of a user or object in 3D space, enabling seamless integration of virtual elements with the real world for immersive experiences. Unlike object detection, which identifies and classifies objects within a frame, pose estimation tracks spatial coordinates and angular rotations to interpret movement and gestures accurately. Pose estimation pipelines often combine depth sensors, inertial measurement units (IMUs), and machine learning models to support AR applications in gaming, navigation, and remote assistance.
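To make "spatial coordinates and angular rotations" concrete, the following is a minimal sketch of how an estimated pose could be used to place a virtual point on screen. It is deliberately simplified: the pose uses a single rotation angle (yaw about the vertical axis) plus a translation, whereas a full pose has three rotational degrees of freedom. The function names, the focal length, and the image centre are illustrative assumptions, not part of any particular AR SDK.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def transform_point(point: Vec3, yaw_rad: float, translation: Vec3) -> Vec3:
    """Rotate a point about the vertical (y) axis by yaw, then translate it."""
    x, y, z = point
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    # Standard rotation about the y axis.
    xr = c * x + s * z
    zr = -s * x + c * z
    tx, ty, tz = translation
    return (xr + tx, y + ty, zr + tz)


def project(point_cam: Vec3, focal_px: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection of a camera-space point (z > 0) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (focal_px * x / z + cx, focal_px * y / z + cy)


# Hypothetical values: a point on the object, the object's estimated pose,
# and assumed camera intrinsics for a 640x480 image.
corner = transform_point((1.0, 0.0, 0.0), math.pi / 2, (0.0, 0.0, 2.0))
pixel = project(corner, focal_px=500.0, cx=320.0, cy=240.0)
```

If the estimated yaw or translation changes from frame to frame, re-running the two steps moves the overlay with the object, which is the behaviour a bounding box alone cannot provide.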

Object Detection: Fundamentals and Uses in AR

Object detection in augmented reality (AR) involves identifying and localizing specific objects within a real-world environment, enabling AR systems to overlay digital content precisely on physical items. It relies on machine learning models, most commonly convolutional neural networks (CNNs), to analyze visual data and detect objects accurately and quickly enough for interactive use. This technology is fundamental for interactive AR applications such as retail visualization, industrial maintenance, and gaming, where real-time object recognition enhances user engagement and operational efficiency.
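Detectors typically emit several overlapping candidate boxes for the same object, and a common post-processing step is to keep only the highest-scoring box in each overlapping group. The sketch below shows intersection over union (IoU) and a simple greedy non-maximum suppression in plain Python. It is an illustration of the general idea, not the implementation used by any specific detector; the box format and the 0.5 threshold are assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap a better box too much.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept
```

A lower threshold removes more near-duplicates but risks merging two genuinely adjacent objects, which matters in cluttered AR scenes.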

Key Differences Between Pose Estimation and Object Detection

Pose estimation estimates the spatial orientation and coordinates of a subject, for a person typically the positions of body joints, enabling precise tracking of movement in augmented reality applications. Object detection locates and classifies objects within an image but does not provide detailed information about the object's pose or articulation. Pose estimation must model the structure of the body or object (for example, a skeleton of connected joints), while object detection primarily focuses on bounding box placement and object categorization.
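The difference in outputs can be sketched with two small data structures. The class and field names below are illustrative assumptions rather than the types of any real library. The sketch also shows that a set of keypoints can always be collapsed into a bounding box, while a bounding box carries no information from which joints could be recovered.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Detection:
    """Object detection output: what the object is and where it is."""
    label: str
    score: float
    box: Box


@dataclass
class Keypoint:
    """One named landmark, such as a wrist or elbow, with a confidence score."""
    name: str
    x: float
    y: float
    score: float


@dataclass
class Pose:
    """Pose estimation output: a set of keypoints for one subject."""
    keypoints: List[Keypoint]

    def bounding_box(self) -> Box:
        """Collapse the keypoints into the smallest box that contains them."""
        xs = [k.x for k in self.keypoints]
        ys = [k.y for k in self.keypoints]
        return (min(xs), min(ys), max(xs), max(ys))


pose = Pose([
    Keypoint("left_wrist", 10.0, 20.0, 0.9),
    Keypoint("right_wrist", 30.0, 5.0, 0.8),
])
as_detection = Detection("person", 0.8, pose.bounding_box())
```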

Applications of Pose Estimation in Augmented Reality

Pose estimation in augmented reality enables accurate tracking of a user's body, hands, or facial expressions, which is essential for immersive interactive experiences such as virtual try-ons, fitness coaching, and gesture-based control systems. Unlike object detection, which identifies and classifies objects within a scene, pose estimation provides detailed spatial orientation and movement data necessary for overlaying digital content that adapts dynamically to user actions. This technology enhances real-time AR applications by delivering precise alignment between virtual elements and the user's physical environment, improving engagement and usability.
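As an example of how keypoints feed an application such as fitness coaching, the sketch below computes the angle at a joint from three 2D keypoints (for instance shoulder, elbow, wrist). The function name and the choice of 2D image coordinates are assumptions for illustration; a real coaching app would likely also consider keypoint confidence and 3D coordinates.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(v1[0], v1[1])
    n2 = math.hypot(v2[0], v2[1])
    if n1 == 0 or n2 == 0:
        raise ValueError("keypoints must be distinct from the joint")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))


# Hypothetical keypoints in pixels: shoulder, elbow, wrist.
elbow_angle = joint_angle((0.0, 100.0), (0.0, 0.0), (100.0, 0.0))
```

An app could compare such an angle against a target range to give feedback on an exercise, something a bounding box around the person would not support.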

Real-World Uses of Object Detection in AR Environments

Object detection enhances augmented reality applications by recognizing and tracking physical objects in real time, enabling interactive overlays and context-aware experiences. It supports practical uses like furniture placement visualization, real-time translation of text on signs, and interactive gaming by anchoring digital content to detected items. Unlike pose estimation, which focuses on the orientation and position of devices or body parts, object detection drives the identification of, and contextual interaction with, real-world items in AR environments.
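A simple form of anchoring digital content to a detected item is to position a 2D label relative to the item's bounding box. The sketch below places a label just above the box and keeps it inside the frame. It works purely in screen space, which is an assumption made for brevity; anchoring content in 3D would additionally need depth or pose information. The function name and the default margin are illustrative.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def label_anchor(box: Box, frame_width: float, frame_height: float,
                 margin: float = 8.0) -> Tuple[float, float]:
    """Return a screen position centred above the box, clamped to the frame."""
    x_min, y_min, x_max, _y_max = box
    x = (x_min + x_max) / 2.0
    y = y_min - margin
    # Clamp so the label never leaves the visible frame.
    x = min(max(x, 0.0), frame_width)
    y = min(max(y, 0.0), frame_height)
    return (x, y)


# Hypothetical detection of a chair in a 640x480 frame.
anchor = label_anchor((100.0, 50.0, 200.0, 150.0), 640.0, 480.0)
```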

Combining Pose Estimation and Object Detection for Enhanced AR

Combining pose estimation and object detection significantly enhances augmented reality experiences by enabling more accurate and context-aware interactions. Pose estimation provides precise spatial orientation and movement tracking of users or objects, while object detection identifies and classifies items within the environment. Integrating both technologies allows AR systems to deliver seamless overlays and dynamic responses, improving realism and user engagement in applications such as gaming, industrial maintenance, and medical training.
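One way the two outputs might be combined is to test whether a tracked hand keypoint falls inside a detected object's bounding box, and treat that as a cue that the user is reaching for or touching the object. The sketch below assumes 2D pixel coordinates for both outputs; the function names and the padding parameter are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Point = Tuple[float, float]


def point_in_box(point: Point, box: Box, padding: float = 0.0) -> bool:
    """True if the point lies inside the box, optionally grown by padding."""
    x, y = point
    x_min, y_min, x_max, y_max = box
    return (x_min - padding <= x <= x_max + padding
            and y_min - padding <= y <= y_max + padding)


def touched_objects(hand: Point, detections: List[Tuple[str, Box]],
                    padding: float = 0.0) -> List[str]:
    """Labels of detected objects whose box contains the hand keypoint."""
    return [label for label, box in detections if point_in_box(hand, box, padding)]


# Hypothetical frame: two detected objects and one wrist keypoint.
detections = [
    ("cup", (100.0, 100.0, 150.0, 160.0)),
    ("laptop", (300.0, 200.0, 500.0, 350.0)),
]
touching = touched_objects((120.0, 130.0), detections)
```

Padding gives some tolerance for keypoint jitter, at the cost of occasional false positives when the hand merely passes near an object.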

Challenges in Implementing Pose Estimation and Object Detection

Pose estimation in augmented reality faces challenges such as accurately determining the 3D orientation and position of objects despite occlusions, varying lighting conditions, and dynamic backgrounds. Object detection struggles with real-time performance constraints and the need for extensive training datasets to handle diverse object appearances and environments. Both tasks require robust algorithms and optimized hardware to ensure seamless integration and user experience in AR applications.
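Occlusion and frame-to-frame jitter are often handled with lightweight filtering on top of the model output. The sketch below shows one simple approach, under the assumption that each keypoint arrives with a confidence score: low-confidence readings are ignored and the last good position is held, while accepted readings are blended with an exponential moving average. The class name, `alpha`, and `min_score` are illustrative choices, not values from the article or a specific library.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]


class KeypointSmoother:
    """Confidence-gated exponential smoothing for a single keypoint."""

    def __init__(self, alpha: float = 0.5, min_score: float = 0.3) -> None:
        self.alpha = alpha          # weight given to the newest reading
        self.min_score = min_score  # readings below this are treated as occluded
        self._state: Optional[Point] = None

    def update(self, x: float, y: float, score: float) -> Optional[Point]:
        """Feed one reading and return the smoothed position (None until first valid reading)."""
        if score < self.min_score:
            return self._state  # hold the last good position
        if self._state is None:
            self._state = (x, y)
        else:
            sx, sy = self._state
            a = self.alpha
            self._state = (a * x + (1 - a) * sx, a * y + (1 - a) * sy)
        return self._state
```

A smaller `alpha` gives steadier overlays but adds lag, so the value is a trade-off between stability and responsiveness.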

Future Trends: Advancements in AR Technology

Future trends in augmented reality technology emphasize enhanced pose estimation techniques that leverage deep learning and sensor fusion for more precise spatial understanding. Advancements in real-time object detection algorithms will improve AR interactions by enabling faster and more accurate recognition of dynamic environments. Integration of AI-driven pose estimation with object detection is anticipated to create seamless and immersive AR experiences, pushing the boundaries of virtual and physical world blending.

Choosing the Right Technology for AR Projects

Pose estimation excels in augmented reality projects requiring precise tracking of human or object positions for interactive experiences, offering detailed spatial orientation and movement data. Object detection provides efficient identification and localization of multiple objects within a scene, making it ideal for applications focused on recognizing items or environments rather than detailed pose information. Selecting the right technology depends on the AR project's goals: use pose estimation for immersive user interactions and object detection for scene understanding and item recognition.

Pose Estimation vs Object Detection Infographic

techiny.com
