Unoccluded rendering in augmented reality ensures virtual objects remain fully visible regardless of real-world obstructions, enhancing user engagement and clarity. Occluded rendering, by contrast, integrates virtual elements behind real-world objects, creating a more immersive and realistic experience by accurately simulating depth and spatial relationships. Effective use of occlusion requires precise environmental scanning and depth sensing to seamlessly blend digital content with physical surroundings.

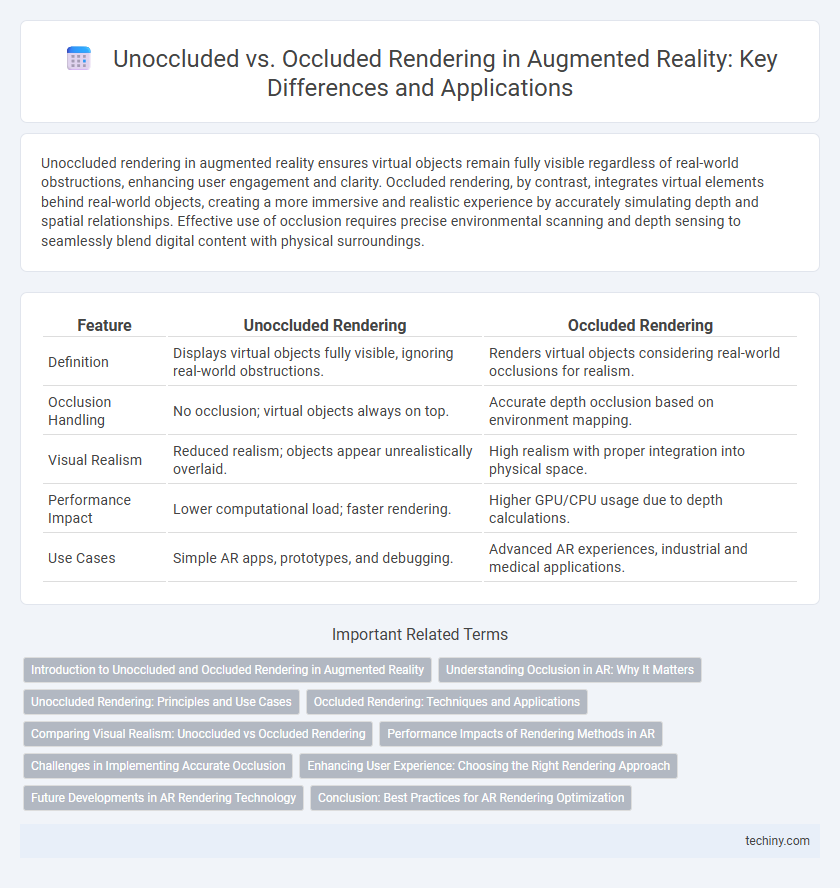

Table of Comparison

| Feature | Unoccluded Rendering | Occluded Rendering |
|---|---|---|
| Definition | Displays virtual objects fully visible, ignoring real-world obstructions. | Renders virtual objects considering real-world occlusions for realism. |
| Occlusion Handling | No occlusion; virtual objects always on top. | Accurate depth occlusion based on environment mapping. |
| Visual Realism | Reduced realism; objects appear unrealistically overlaid. | High realism with proper integration into physical space. |
| Performance Impact | Lower computational load; faster rendering. | Higher GPU/CPU usage due to depth calculations. |
| Use Cases | Simple AR apps, prototypes, and debugging. | Advanced AR experiences, industrial and medical applications. |

Introduction to Unoccluded and Occluded Rendering in Augmented Reality

Unoccluded rendering in augmented reality displays virtual objects clearly without any obstruction by real-world elements, enhancing visibility and user interaction. Occluded rendering, conversely, involves accurately layering virtual objects behind real physical objects, creating a realistic depth perception crucial for immersive AR experiences. Effective occlusion processing relies on depth sensing technologies like LiDAR or stereo cameras to map the environment and ensure virtual objects blend seamlessly with the real world.

Understanding Occlusion in AR: Why It Matters

Occlusion in augmented reality (AR) refers to the accurate layering of virtual objects behind real-world elements to create a convincing depth perception. Unoccluded rendering displays virtual objects without considering real-world obstructions, leading to unrealistic visuals and breaking immersion. Correct occluded rendering enhances spatial awareness and user interaction by integrating virtual content naturally within the physical environment.

Unoccluded Rendering: Principles and Use Cases

Unoccluded rendering in augmented reality (AR) involves displaying virtual objects without any real-world objects blocking or intersecting with them, ensuring these elements remain fully visible to the user. This technique is essential for applications such as virtual product visualization, architectural design previews, and educational demonstrations where clear visibility of AR content enhances user engagement and comprehension. By bypassing complex occlusion detection algorithms, unoccluded rendering offers higher performance and simpler implementation in scenarios where real-world obstruction is negligible or irrelevant.

Occluded Rendering: Techniques and Applications

Occluded rendering in augmented reality employs depth sensing, environmental mapping, and real-time object recognition to hide the parts of virtual objects that lie behind real-world elements, enhancing spatial realism and user immersion. Techniques such as depth buffer testing, stencil buffers, and machine learning-based segmentation keep the occlusion boundary aligned with the real scene by dynamically updating scene geometry and depth information. Applications span from immersive gaming and industrial training simulations to advanced medical visualization, where realistic layering of virtual and physical objects is critical for user interaction and situational awareness.
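As a minimal sketch of the depth-test idea, the Python function below composites a virtual layer over a camera frame one pixel at a time. It assumes both the real scene and the virtual layer come with per-pixel depth in meters; the function name, its parameters, and the list-of-lists frame format are illustrative assumptions rather than any AR framework's API, and a real pipeline would normally run this comparison on the GPU.

```python
from typing import List, Optional, Tuple

Color = Tuple[int, int, int]


def composite_with_occlusion(
    camera_rgb: List[List[Color]],
    real_depth_m: List[List[float]],
    virtual_rgb: List[List[Optional[Color]]],
    virtual_depth_m: List[List[float]],
    occlusion_enabled: bool = True,
) -> List[List[Color]]:
    """Composite a virtual layer over a camera frame, pixel by pixel.

    A virtual pixel is drawn when it exists (is not None) and, if occlusion
    is enabled, when it is closer to the camera than the real surface.
    """
    out: List[List[Color]] = []
    for y, row in enumerate(camera_rgb):
        out_row: List[Color] = []
        for x, cam_px in enumerate(row):
            v_px = virtual_rgb[y][x]
            if v_px is None:
                # No virtual content here: show the camera image.
                out_row.append(cam_px)
            elif not occlusion_enabled or virtual_depth_m[y][x] < real_depth_m[y][x]:
                # Unoccluded mode, or the virtual surface is nearer: draw it.
                out_row.append(v_px)
            else:
                # A real surface is nearer: it hides the virtual pixel.
                out_row.append(cam_px)
        out.append(out_row)
    return out


# Hypothetical 1x3 frame: a real object at 0.5 m covers the first pixel.
GRAY, RED = (10, 10, 10), (255, 0, 0)
camera = [[GRAY, GRAY, GRAY]]
real_depth = [[0.5, 2.0, 2.0]]
virtual = [[RED, RED, None]]
virtual_depth = [[1.0, 1.0, float("inf")]]

occluded = composite_with_occlusion(camera, real_depth, virtual, virtual_depth)
unoccluded = composite_with_occlusion(
    camera, real_depth, virtual, virtual_depth, occlusion_enabled=False
)
```

With occlusion enabled, the first pixel keeps the camera color because the real surface at 0.5 m is nearer than the virtual one at 1.0 m. With it disabled, the virtual pixel is drawn on top regardless, which is the unoccluded behavior described earlier.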

Comparing Visual Realism: Unoccluded vs Occluded Rendering

Unoccluded rendering in augmented reality presents virtual objects fully visible without environmental obstruction, keeping them clear and legible but potentially breaking immersion by ignoring real-world occlusions. Occluded rendering integrates depth sensing to correctly hide or partially obscure virtual elements behind real-world objects, significantly improving visual realism and spatial understanding. The accuracy of occlusion handling directly impacts user perception, making occluded rendering essential for seamless AR experiences that convincingly blend virtual content with physical surroundings.

Performance Impacts of Rendering Methods in AR

Unoccluded rendering in augmented reality places the lighter load on the device because it draws virtual objects without testing them against real-world geometry. Occluded rendering adds per-frame work for depth sensing, environmental mapping, and depth comparison, which raises GPU/CPU usage and can cause frame rate drops on constrained hardware. Balancing these methods is critical for achieving seamless AR experiences while maintaining device battery life and responsiveness.

Challenges in Implementing Accurate Occlusion

Accurate occlusion in augmented reality requires precise depth sensing and real-time environmental mapping to correctly identify which parts of a virtual object should appear hidden. Challenges include integrating data from diverse sensors such as LiDAR, stereo cameras, and other depth sensors while minimizing latency to prevent visual discrepancies. Complex lighting conditions and dynamic scenes further complicate maintaining seamless occlusion that aligns with the user's perspective.
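One common way to cope with noisy or missing depth readings is to blend each new depth frame into a running estimate. The sketch below uses an exponential moving average; this is a swapped-in illustration, not a method the article prescribes. The function name, the `alpha` weight, and the convention that `0.0` marks a missing reading are all assumptions made for the example.

```python
from typing import List, Optional


def smooth_depth(
    previous: Optional[List[List[float]]],
    current: List[List[float]],
    alpha: float = 0.5,
    invalid: float = 0.0,
) -> List[List[float]]:
    """Blend a new depth frame (meters) into a running per-pixel estimate.

    Pixels equal to `invalid` are treated as missing readings and keep the
    previous estimate. Without a previous frame, the input is copied as-is.
    """
    if previous is None:
        return [row[:] for row in current]
    smoothed: List[List[float]] = []
    for prev_row, cur_row in zip(previous, current):
        out_row: List[float] = []
        for prev, cur in zip(prev_row, cur_row):
            if cur == invalid:
                # Sensor dropout: hold the last known depth.
                out_row.append(prev)
            elif prev == invalid:
                # First valid reading for this pixel: take it directly.
                out_row.append(cur)
            else:
                # Weighted blend of new and old depth.
                out_row.append(alpha * cur + (1.0 - alpha) * prev)
        smoothed.append(out_row)
    return smoothed


# Hypothetical frames: the middle pixel gains a reading, the last one drops out.
estimate = smooth_depth([[2.0, 0.0, 1.0]], [[1.0, 3.0, 0.0]], alpha=0.5)
```

The weight is a trade-off tied to the latency concern above: a higher `alpha` follows moving objects more quickly but passes through more sensor noise, while a lower `alpha` gives steadier occlusion edges at the cost of lag.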

Enhancing User Experience: Choosing the Right Rendering Approach

Unoccluded rendering in augmented reality (AR) provides clear visibility of virtual objects by allowing them to appear in front of all real-world elements, enhancing user engagement with unobstructed visuals. Occluded rendering improves realism by correctly layering virtual objects behind real-world surfaces, enabling seamless integration and depth perception crucial for immersive experiences. Selecting the appropriate rendering approach depends on the AR application's goals, balancing visual clarity and spatial accuracy to optimize user experience.

Future Developments in AR Rendering Technology

Future developments in AR rendering technology will strengthen occluded rendering by improving real-time depth sensing and spatial mapping accuracy, enabling seamless integration of virtual objects with the physical environment. Advances in machine learning algorithms will further refine occlusion, allowing for precise layering and natural interactions between virtual elements and real-world objects. These innovations will drive more immersive and realistic augmented reality experiences across various applications, from gaming to industrial design.

Conclusion: Best Practices for AR Rendering Optimization

Unoccluded rendering in augmented reality keeps content legible by ensuring virtual objects remain fully visible, while occluded rendering improves realism and immersion by accurately integrating virtual elements with physical world obstructions. Best practices for AR rendering optimization balance performance and visual fidelity through efficient occlusion culling, depth sensing technologies, and adaptive rendering techniques tailored to device capabilities. Prioritizing scene understanding and leveraging hardware acceleration enables seamless AR experiences with optimized computational resources.
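To illustrate what an adaptive rendering technique might look like, the sketch below switches occlusion off when the recent average frame time exceeds a budget and switches it back on only once there is clear headroom. The class name, the 16.7 ms default budget (roughly 60 frames per second), the headroom factor, and the window size are hypothetical values chosen for the example, not recommendations from any AR SDK.

```python
from collections import deque
from typing import Deque


class OcclusionGovernor:
    """Toggle occlusion based on a rolling average of frame times."""

    def __init__(self, budget_ms: float = 16.7, headroom: float = 0.8, window: int = 30):
        self.budget_ms = budget_ms      # assumed per-frame time budget
        self.headroom = headroom        # fraction of budget needed to re-enable
        self.samples: Deque[float] = deque(maxlen=window)
        self.occlusion_enabled = True

    def update(self, frame_ms: float) -> bool:
        """Record one frame time and return whether occlusion should run."""
        self.samples.append(frame_ms)
        avg = sum(self.samples) / len(self.samples)
        if self.occlusion_enabled and avg > self.budget_ms:
            # Over budget: fall back to cheaper unoccluded rendering.
            self.occlusion_enabled = False
        elif not self.occlusion_enabled and avg < self.budget_ms * self.headroom:
            # Comfortably under budget again: restore occlusion.
            self.occlusion_enabled = True
        return self.occlusion_enabled


# Hypothetical run with a 16 ms budget and a two-frame window.
governor = OcclusionGovernor(budget_ms=16.0, headroom=0.75, window=2)
decisions = [governor.update(ms) for ms in (10.0, 30.0, 14.0, 8.0)]
```

The gap between the switch-off threshold and the lower switch-on threshold is deliberate: it keeps the renderer from flickering between modes when frame times hover near the budget.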

Unoccluded Rendering vs Occluded Rendering Infographic

techiny.com
