Latency measures the time it takes for data to travel from the source to the destination, affecting the overall speed of network communication. Jitter refers to the variation in packet arrival times; these irregular delays can disrupt real-time applications such as voice and video calls. Minimizing both latency and jitter is crucial for maintaining a smooth and responsive networking experience for anyone using connected devices.

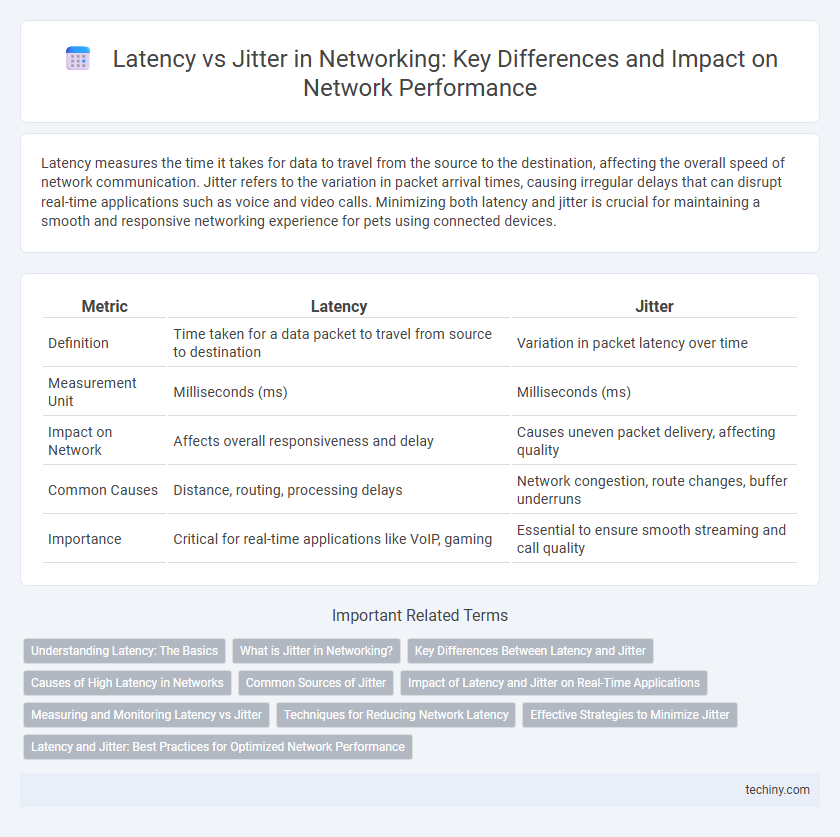

Table of Comparison

| Aspect | Latency | Jitter |
|---|---|---|
| Definition | Time taken for a data packet to travel from source to destination | Variation in packet latency over time |
| Measurement Unit | Milliseconds (ms) | Milliseconds (ms) |
| Impact on Network | Affects overall responsiveness and delay | Causes uneven packet delivery, degrading quality |
| Common Causes | Distance, routing, congestion, processing delays | Network congestion, route changes, inadequate buffering |
| Importance | Critical for real-time applications such as VoIP and gaming | Essential for smooth streaming and call quality |

Understanding Latency: The Basics

Latency refers to the time delay experienced in data transmission between a source and destination, typically measured in milliseconds (ms). It is influenced by factors such as physical distance, network congestion, and processing delays at routers or switches. Understanding latency is crucial for optimizing network performance, especially in real-time applications like VoIP and online gaming where low latency ensures smoother user experiences.
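To make those factors concrete, here is a minimal Python sketch that adds up the four delay components commonly used to describe one-way latency: propagation, transmission (serialization), queuing, and processing. The function name and example inputs are hypothetical. It assumes a signal speed in fiber of roughly 2 × 10^8 m/s (about two-thirds of the speed of light); real queuing and processing delays vary and would have to be measured rather than passed in as constants.

```python
# Assumption: light in optical fiber travels at roughly 2e8 m/s (about 2/3 of c).
SPEED_IN_FIBER_M_PER_S = 2e8


def one_way_latency_ms(distance_km, packet_bytes, link_bps,
                       queuing_ms=0.0, processing_ms=0.0):
    """Estimate one-way latency in ms as the sum of four delay components.

    queuing_ms and processing_ms are supplied by the caller (hypothetical
    values in the example); they are not modelled here.
    """
    propagation_ms = distance_km * 1000 / SPEED_IN_FIBER_M_PER_S * 1000
    transmission_ms = packet_bytes * 8 / link_bps * 1000  # time to put the bits on the wire
    return propagation_ms + transmission_ms + queuing_ms + processing_ms


# Hypothetical path: 4,000 km of fiber, a 1,500-byte packet on a 100 Mb/s link,
# 2 ms of queuing and 0.5 ms of router processing.
print(round(one_way_latency_ms(4000, 1500, 100e6, queuing_ms=2.0, processing_ms=0.5), 2))  # 22.62
```

Propagation accounts for 20 ms of the 22.62 ms in this example, which is why physical distance sets a floor that faster hardware alone cannot remove.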

What is Jitter in Networking?

Jitter in networking refers to the variation in packet arrival times during data transmission, causing inconsistent delays between packets. It directly impacts real-time communications like VoIP and video conferencing by causing disruptions and reduced quality. Measuring and minimizing jitter is crucial to maintain smooth and reliable network performance.
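Jitter has several formal definitions. The minimal Python sketch below uses one simple variant, the mean absolute change in delay between consecutive packets; the delay values are made up for illustration, and other tools instead report the standard deviation of delay or the smoothed estimator defined in RFC 3550.

```python
from statistics import mean


def jitter_ms(delays_ms):
    """Mean absolute difference between consecutive packet delays (ms)."""
    if len(delays_ms) < 2:
        return 0.0  # no consecutive pair to compare; report zero
    return mean(abs(b - a) for a, b in zip(delays_ms, delays_ms[1:]))


delays = [40, 42, 39, 55, 41, 40]  # hypothetical one-way delays in ms
print(jitter_ms(delays))  # 7.2
```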

Key Differences Between Latency and Jitter

Latency measures the time delay for data to travel from source to destination, typically expressed in milliseconds, while jitter refers to the variability in packet arrival times during transmission. High latency affects overall network responsiveness, causing noticeable lag, whereas high jitter impacts the consistency of data flow, leading to disruptions in real-time applications like VoIP and online gaming. Understanding the distinction between latency and jitter is crucial for optimizing network performance and ensuring smooth communication experiences.
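The distinction is easiest to see with two hypothetical paths that have identical average latency but very different jitter. This short Python sketch uses a simple jitter definition (mean absolute change between consecutive delays); the sample values are invented.

```python
from statistics import mean


def mean_abs_delta(values):
    """Simple jitter metric: average absolute change between consecutive samples."""
    return mean(abs(b - a) for a, b in zip(values, values[1:]))


# Hypothetical one-way delays (ms); both paths average 50 ms.
paths = {
    "steady": [50, 50, 50, 50, 50, 50],
    "erratic": [30, 70, 35, 65, 40, 60],
}

for name, delays in paths.items():
    print(f"{name}: latency {mean(delays):.1f} ms, jitter {mean_abs_delta(delays):.1f} ms")
```

Both paths report 50.0 ms of latency, but the erratic one shows 30.0 ms of jitter, so a voice call over it would suffer far more than its average latency suggests.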

Causes of High Latency in Networks

High latency in networks is often caused by congestion: when routers and switches receive traffic faster than they can forward it, packets wait in queues before they are processed. Long physical distances between devices increase propagation delay, significantly impacting response times. Inefficient routing, which sends packets over longer paths or through more hops than necessary, and hardware limitations also contribute to increased latency, affecting overall network performance.
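Congestion delay grows non-linearly as a link fills up. The sketch below uses the textbook M/M/1 queue (Poisson arrivals, exponentially distributed service times, a single server), whose mean time in system is W = 1 / (μ - λ). That model is an assumption chosen for illustration; real router queues are not M/M/1, and the point is only the sharp rise in delay as utilization approaches 100%. The link rate is hypothetical.

```python
def mm1_mean_delay_ms(arrival_pps, service_pps):
    """Mean time in system (waiting + service) for an M/M/1 queue, in ms.

    arrival_pps: average packet arrival rate (lambda), packets per second.
    service_pps: average forwarding rate (mu), packets per second.
    """
    if arrival_pps >= service_pps:
        raise ValueError("queue is unstable: arrival rate must be below service rate")
    return 1000.0 / (service_pps - arrival_pps)


SERVICE_PPS = 10_000  # hypothetical link that forwards 10,000 packets per second

for utilization in (0.5, 0.8, 0.9, 0.99):
    delay = mm1_mean_delay_ms(utilization * SERVICE_PPS, SERVICE_PPS)
    print(f"utilization {utilization:.0%}: mean delay {delay:.2f} ms")
```

In this model, going from 50% to 99% utilization multiplies the mean delay by 50 (from 0.2 ms to 10 ms), even though the link is never "full".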

Common Sources of Jitter

Common sources of jitter in networking include network congestion, route changes, and improper buffering, which cause variations in packet arrival times. Hardware issues such as malfunctioning routers or switches and wireless interference from other devices also contribute to jitter. Understanding these factors helps optimize quality of service (QoS) for real-time applications like VoIP and video conferencing.
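To see how congestion turns into jitter, the following Python sketch simulates a single first-in, first-out (FIFO) output link shared by a steady voice flow and bursty bulk traffic. All timings are hypothetical: a voice packet every 20 ms that takes 1 ms to transmit, and two bursts of bulk packets that take 3 ms each. The voice packets leave the sender perfectly evenly spaced, yet their delays vary because some of them queue behind a burst.

```python
from statistics import mean


def fifo_delays(packets):
    """Simulate one FIFO output link.

    packets: iterable of (arrival_ms, transmit_ms, flow) tuples.
    Returns (flow, delay_ms) pairs in departure order, where delay is the
    time spent waiting in the queue plus the time to transmit the packet.
    """
    link_free_at = 0
    results = []
    for arrival, transmit, flow in sorted(packets):
        start = max(arrival, link_free_at)  # wait if the link is still busy
        link_free_at = start + transmit
        results.append((flow, link_free_at - arrival))
    return results


voice = [(t, 1, "voice") for t in range(0, 100, 20)]  # one packet every 20 ms
bulk = [(35, 3, "bulk")] * 4 + [(75, 3, "bulk")] * 2  # two bursts of cross-traffic

voice_delays = [d for flow, d in fifo_delays(voice + bulk) if flow == "voice"]
jitter = mean(abs(b - a) for a, b in zip(voice_delays, voice_delays[1:]))
print(voice_delays, jitter)  # [1, 1, 8, 1, 2] 3.75
```

The voice packet sent at 40 ms waits behind the first burst, so its delay jumps from 1 ms to 8 ms even though nothing about the voice flow itself changed.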

Impact of Latency and Jitter on Real-Time Applications

Latency directly affects the responsiveness of real-time applications like VoIP and online gaming, causing noticeable delays in communication. Jitter, the variation in packet arrival times, leads to inconsistent performance, resulting in choppy audio, video distortions, and impaired user experience. Both increased latency and jitter degrade the quality of real-time interactions, making network optimization essential for maintaining seamless connectivity.
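A simple fixed jitter buffer plays each packet at a set offset after it was sent (the playout delay); any packet that arrives after that deadline is as good as lost, which is what produces choppy audio. The Python sketch below counts such late packets for a hypothetical trace. It assumes sender and receiver timestamps share a common clock, which real systems only approximate, and the function name is illustrative.

```python
def late_fraction(send_ms, arrive_ms, playout_delay_ms):
    """Fraction of packets that arrive after their scheduled playout time.

    Packet i is scheduled to play at send_ms[i] + playout_delay_ms; if it
    arrives later than that, the decoder has to skip or conceal it.
    """
    late = sum(1 for s, a in zip(send_ms, arrive_ms) if a > s + playout_delay_ms)
    return late / len(send_ms)


send = [i * 20 for i in range(8)]          # one packet every 20 ms
delays = [40, 42, 39, 75, 41, 60, 40, 43]  # hypothetical one-way delays (ms)
arrive = [s + d for s, d in zip(send, delays)]

for playout in (50, 70, 80):
    print(f"playout delay {playout} ms: {late_fraction(send, arrive, playout):.1%} late")
```

The trade-off between the two metrics is visible directly: a deeper buffer hides more jitter (25% late at 50 ms, none at 80 ms) but adds that same delay to every packet.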

Measuring and Monitoring Latency vs Jitter

Measuring latency typically involves calculating the round-trip time (RTT) between sender and receiver, using ICMP echo requests (ping) or TCP timestamps to assess network delay. Monitoring jitter requires analyzing the variation in packet arrival times, often with packet capture analyzers or specialized network performance monitoring systems that track real-time fluctuations. Accurate latency and jitter measurements are critical for optimizing Quality of Service (QoS) in VoIP, video conferencing, and real-time gaming applications.
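Two small Python helpers illustrate these measurements. `tcp_connect_rtt_ms` times a TCP handshake as a rough RTT sample; it is a stand-in for ICMP ping that needs no special privileges, and it also includes DNS lookup time if given a hostname. `rfc3550_jitter` implements the running interarrival jitter estimate that RFC 3550 defines for RTP, J += (|D| - J) / 16, where D is the change in transit time between consecutive packets. The function names and sample values are hypothetical, not taken from any particular monitoring tool.

```python
import socket
import time


def tcp_connect_rtt_ms(host, port, timeout=2.0):
    """Time a TCP handshake as an approximate round-trip time (ms).

    Includes name resolution if `host` is a hostname rather than an IP address.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # connect() returned, so the client has received the SYN/ACK
    return (time.perf_counter() - start) * 1000


def rfc3550_jitter(send_ms, recv_ms):
    """Running interarrival jitter estimate in the style of RFC 3550 (RTP).

    Only differences in transit time are used, so a constant clock offset
    between sender and receiver cancels out.
    """
    jitter = 0.0
    prev_transit = None
    for sent, received in zip(send_ms, recv_ms):
        transit = received - sent
        if prev_transit is not None:
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16  # exponential smoothing with gain 1/16
        prev_transit = transit
    return jitter


# Hypothetical trace: the receiver clock is 1,000 ms ahead of the sender's,
# and the third packet is delayed by an extra 16 ms.
print(rfc3550_jitter([0, 20, 40], [1050, 1070, 1106]))  # 1.0
```

The 1/16 gain makes the estimate react gradually, so a single delayed packet nudges it rather than dominating it.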

Techniques for Reducing Network Latency

Techniques for reducing network latency include optimizing routing paths, deploying edge computing to process data closer to users and data sources, and using Quality of Service (QoS) protocols to prioritize time-sensitive traffic. Network engineers also apply TCP/IP stack optimizations and use content delivery networks (CDNs), which serve content from locations near the user to cut propagation delay. Hardware upgrades such as low-latency switches and higher-bandwidth fiber links reduce per-hop processing and serialization delay, further lowering overall network latency.
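As one example of a host-side TCP/IP stack optimization (an illustrative choice; the techniques above are not tied to a specific setting), interactive applications often disable Nagle's algorithm, which otherwise holds back small writes while earlier data is still unacknowledged. A minimal Python sketch using the standard `TCP_NODELAY` socket option:

```python
import socket


def low_latency_tcp_socket():
    """Return a TCP socket with Nagle's algorithm disabled.

    With TCP_NODELAY set, small writes are no longer held back waiting for
    earlier segments to be acknowledged.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock


sock = low_latency_tcp_socket()
print(sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0)  # True
sock.close()
```

The trade-off is more small packets on the wire, so this suits latency-sensitive traffic such as games or request/response protocols better than bulk transfers.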

Effective Strategies to Minimize Jitter

Minimizing jitter in networking requires prioritizing consistent packet delivery by implementing Quality of Service (QoS) mechanisms that allocate bandwidth efficiently and reduce congestion. Using traffic shaping and buffering techniques helps smooth packet flows, while deploying jitter buffers in VoIP systems compensates for packet arrival time variations. Network infrastructure improvements like upgrading to low-latency routers and optimizing routing protocols further stabilize latency and reduce jitter impact on real-time communications.
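Traffic shaping is commonly implemented with a token bucket: tokens accumulate at a fixed rate up to a burst limit, and a packet may leave only when enough tokens are available. Shaping bursty bulk traffic this way keeps it from suddenly filling the queues that latency-sensitive packets share. The class below is a minimal, single-threaded Python sketch with hypothetical names and parameters, not the implementation used by any particular router or operating system.

```python
class TokenBucketShaper:
    """Delay (rather than drop) packets so output conforms to a token bucket."""

    def __init__(self, rate_bytes_per_s, burst_bytes):
        self.rate = rate_bytes_per_s  # token refill rate
        self.burst = burst_bytes      # bucket capacity (largest allowed burst)
        self.tokens = burst_bytes     # start with a full bucket
        self.last = 0.0               # time of the previous departure (s)

    def departure_time(self, arrival_s, size_bytes):
        """Return when a packet may be sent; packets must be offered in arrival order."""
        if size_bytes > self.burst:
            raise ValueError("packet larger than bucket capacity can never conform")
        t = max(arrival_s, self.last)  # FIFO: never send before the previous packet
        # Refill tokens for the time elapsed since the last departure, capped at burst.
        self.tokens = min(self.burst, self.tokens + (t - self.last) * self.rate)
        if self.tokens < size_bytes:
            t += (size_bytes - self.tokens) / self.rate  # wait for the shortfall
            self.tokens = size_bytes
        self.tokens -= size_bytes
        self.last = t
        return t


# Hypothetical: 1,000 bytes/s with a 1,500-byte burst; three 1,000-byte packets at t = 0.
shaper = TokenBucketShaper(rate_bytes_per_s=1000, burst_bytes=1500)
print([shaper.departure_time(0.0, 1000) for _ in range(3)])  # [0.0, 0.5, 1.5]
```

The first packet uses the stored burst allowance; the later ones are spaced out to the configured rate instead of reaching the bottleneck queue all at once.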

Latency and Jitter: Best Practices for Optimized Network Performance

Latency measures the time it takes for data to travel from source to destination, while jitter represents the variation in packet arrival times, both crucial for real-time applications like VoIP and online gaming. Minimizing latency involves optimizing routing paths, using high-speed hardware, and implementing Quality of Service (QoS) policies to prioritize critical traffic. Reducing jitter requires stable transmission channels, consistent packet timing, and buffering techniques to maintain smooth data flow and enhance overall network performance.
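QoS prioritization usually starts with marking: the sender or an edge device sets the DSCP field in the IP header, and routers configured to honor it queue marked packets ahead of bulk traffic. The Python sketch below marks a UDP socket's traffic with Expedited Forwarding (DSCP 46), the class commonly used for voice. It assumes a Unix-like OS where the `IP_TOS` socket option is available; whether the marking has any effect depends on network policy, since many networks ignore or rewrite DSCP values they do not trust.

```python
import socket

DSCP_EF = 46           # Expedited Forwarding per-hop behavior
TOS_EF = DSCP_EF << 2  # DSCP sits in the upper six bits of the former TOS byte: 184 (0xB8)


def voice_udp_socket():
    """Return an IPv4 UDP socket whose outgoing packets carry the EF DSCP marking."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, TOS_EF)
    return sock


sock = voice_udp_socket()
print(sock.getsockopt(socket.IPPROTO_IP, socket.IP_TOS))
sock.close()
```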

Latency vs Jitter Infographic

techiny.com
