Depth sensing employs specialized sensors such as LiDAR or structured light to capture precise distance measurements, enabling accurate three-dimensional mapping in augmented reality environments. Stereo vision relies on dual cameras to simulate human binocular vision, calculating depth by comparing disparities between two images, which offers cost-effective depth estimation but may struggle in low-texture or low-light conditions. Choosing between depth sensing and stereo vision depends on the required accuracy, environmental conditions, and hardware constraints within an augmented reality application.

Table of Comparison

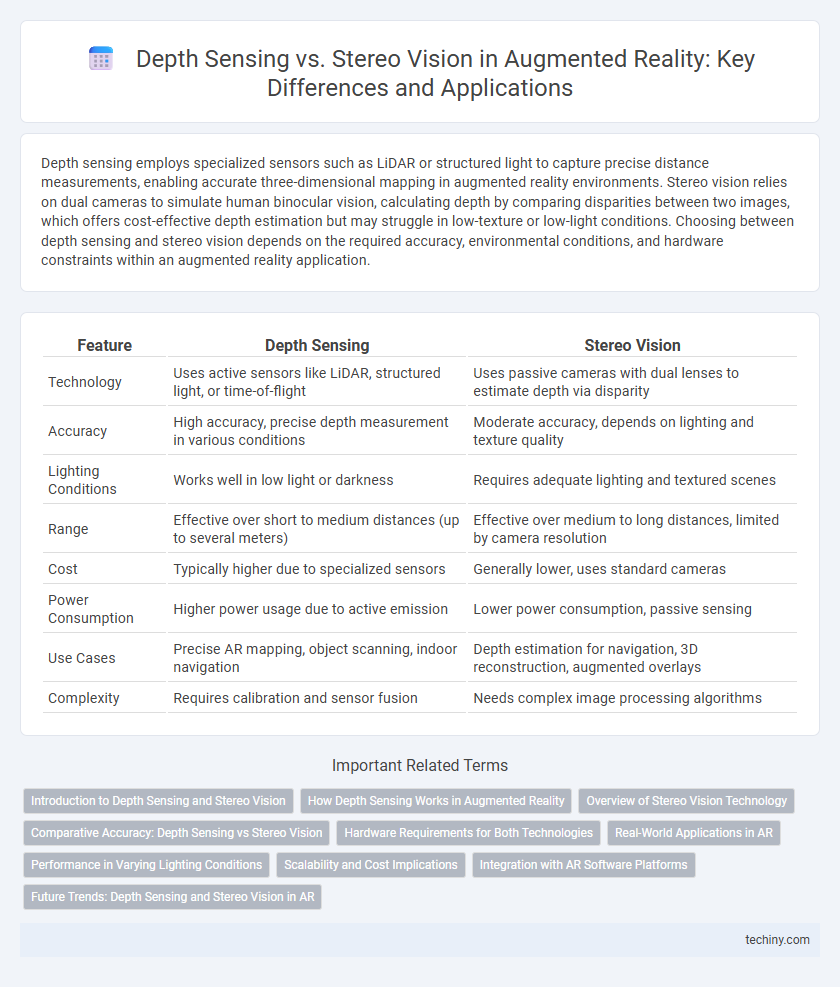

| Feature | Depth Sensing | Stereo Vision |
|---|---|---|
| Technology | Uses active sensors such as LiDAR, structured light, or time-of-flight | Uses two passive cameras (or a dual-lens camera) to estimate depth from disparity |
| Accuracy | High; precise depth measurement in most conditions | Moderate; depends on lighting and texture |
| Lighting Conditions | Works well in low light or darkness; strong sunlight can reduce range | Requires adequate lighting and textured scenes |
| Range | Effective over short to medium distances (up to several meters) | Effective over medium to long distances, limited by camera resolution and baseline |
| Cost | Typically higher due to specialized sensors | Generally lower; uses standard cameras |
| Power Consumption | Higher due to active light emission | Lower; passive sensing |
| Use Cases | Precise AR mapping, object scanning, indoor navigation | Depth estimation for navigation, 3D reconstruction, augmented overlays |
| Complexity | Requires calibration and sensor fusion | Requires calibration and compute-intensive image processing |

Introduction to Depth Sensing and Stereo Vision

Depth sensing in augmented reality uses specialized sensors such as time-of-flight cameras and structured light projectors to measure the distance between the device and objects in the scene, enabling precise spatial mapping. Stereo vision processes images from two cameras separated by a known horizontal distance (the baseline) to simulate human binocular vision, deriving depth from the disparity between the two views. Both methods contribute to immersive AR experiences by enhancing environmental awareness and object interaction.

How Depth Sensing Works in Augmented Reality

Depth sensing in augmented reality relies on active sensors, such as LiDAR, structured light, or time-of-flight cameras, that emit light and analyze how it returns. Time-of-flight sensors and LiDAR measure the round-trip travel time (or phase shift) of the emitted light, while structured light systems project a known pattern and triangulate depth from how that pattern deforms on surfaces. The result is a 3D map of the environment that enables accurate spatial understanding and real-time object placement in AR experiences. Because it measures depth actively rather than inferring it from image content, depth sensing is generally more accurate and reliable than stereo vision in poorly lit or texture-poor scenes.
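The two time-of-flight principles reduce to short formulas: a pulsed sensor halves the round-trip path, and a continuous-wave sensor converts the phase shift of a modulated signal into distance, which wraps once the phase exceeds 2π. The sketch below assumes ideal, noise-free measurements with no multipath or calibration offsets; the function names and the 20 MHz modulation frequency are illustrative assumptions, not values from any particular sensor. Structured light triangulation is not shown.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def pulsed_tof_distance(round_trip_time_s: float) -> float:
    """Distance from a pulsed time-of-flight measurement.

    The pulse travels to the surface and back, so the one-way
    distance is half of the round-trip path.
    """
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def cw_tof_distance(phase_shift_rad: float, modulation_hz: float) -> float:
    """Distance from a continuous-wave (phase-based) measurement.

    The phase shift is phi = 4 * pi * f_mod * d / c, so
    d = c * phi / (4 * pi * f_mod).
    """
    return SPEED_OF_LIGHT * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def cw_unambiguous_range(modulation_hz: float) -> float:
    """Largest distance measurable before the phase wraps past 2 * pi."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)


if __name__ == "__main__":
    # A 20 ns round trip corresponds to roughly 3 m.
    print(f"{pulsed_tof_distance(20e-9):.3f} m")
    # With a hypothetical 20 MHz modulation, readings wrap at about 7.5 m.
    print(f"{cw_unambiguous_range(20e6):.3f} m")
```

Raising the modulation frequency improves depth resolution but shortens the unambiguous range, which is one reason phase-based sensors often combine several frequencies.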

Overview of Stereo Vision Technology

Stereo vision technology in augmented reality uses two cameras to capture images from slightly different viewpoints, enabling depth perception through disparity calculation. This method mimics human binocular vision, providing detailed 3D information for object recognition and spatial mapping. Stereo vision works best in environments with sufficient texture and lighting, where it can deliver the real-time depth estimates that immersive AR experiences need.
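The disparity calculation can be made concrete with a minimal sketch, assuming the two images are already rectified (so matching points lie on the same row) and grayscale. It uses naive sum-of-absolute-differences (SAD) block matching along one scanline, then triangulates depth with Z = f·B/d, where f is the focal length in pixels, B the baseline in meters, and d the disparity in pixels. The function names, window size, camera parameters, and synthetic data are illustrative assumptions; production systems typically use more robust methods such as semi-global matching.

```python
def sad(left_row, right_row, x_left, x_right, half_window):
    """Sum of absolute differences between two windows on the same scanline."""
    return sum(
        abs(left_row[x_left + k] - right_row[x_right + k])
        for k in range(-half_window, half_window + 1)
    )


def scanline_disparity(left_row, right_row, max_disparity, half_window=2):
    """Naive block matching along one rectified scanline.

    For each left-image pixel x, test right-image positions x - d for
    d in [0, max_disparity] and keep the d with the lowest SAD cost.
    Border pixels without a full window get None.
    """
    width = len(left_row)
    disparities = [None] * width
    for x in range(half_window, width - half_window):
        best_d, best_cost = None, float("inf")
        for d in range(max_disparity + 1):
            xr = x - d
            if xr - half_window < 0:
                break  # the window would fall off the right image
            cost = sad(left_row, right_row, x, xr, half_window)
            if cost < best_cost:
                best_d, best_cost = d, cost
        disparities[x] = best_d
    return disparities


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Triangulate depth with Z = f * B / d for rectified pinhole cameras."""
    if disparity_px is None or disparity_px <= 0:
        return None  # zero disparity corresponds to a point at infinity
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Synthetic textured scanline; the left view sees it shifted 4 px right.
    right = [(i * 37) % 101 for i in range(40)]
    left = [0] * 4 + right[:-4]
    disp = scanline_disparity(left, right, max_disparity=8)
    print(disp[10])  # 4
    # Hypothetical camera: 700 px focal length, 6 cm baseline.
    print(depth_from_disparity(disp[10], focal_px=700, baseline_m=0.06))  # 10.5
```

The synthetic scanline has no repeated values, so the correct shift is the only zero-cost match; on a blank wall every candidate would cost roughly the same, which is exactly the low-texture failure described above.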

Comparative Accuracy: Depth Sensing vs Stereo Vision

Depth sensing uses specialized sensors such as LiDAR and structured light to capture precise distance measurements, offering higher accuracy in complex environments. Stereo vision estimates depth by calculating the disparity between two camera images, but it can struggle with textureless surfaces and poor or changing lighting. Depth sensing generally outperforms stereo vision in accuracy because it measures depth actively instead of inferring it from image content, although active sensors can still return errors on highly reflective, transparent, or very dark surfaces.
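One reason stereo accuracy falls off with distance follows directly from Z = f·B/d: differentiating gives dZ/dd = -Z²/(f·B), so a fixed disparity error produces a depth error that grows with the square of depth. The sketch below evaluates that approximation; the 700 px focal length, 6 cm baseline, and 0.25 px sub-pixel matching error are hypothetical values chosen for illustration.

```python
def stereo_depth_error(depth_m, focal_px, baseline_m, disparity_error_px=0.25):
    """Approximate depth uncertainty of a rectified stereo pair.

    From Z = f * B / d, a small disparity error dd yields a depth error
    dZ ~= Z**2 / (f * B) * dd, so the error scales with depth squared.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    # Hypothetical camera: 700 px focal length, 6 cm baseline.
    for z in (0.5, 1.0, 2.0, 4.0):
        err_mm = 1000 * stereo_depth_error(z, focal_px=700, baseline_m=0.06)
        print(f"Z = {z:.1f} m -> about {err_mm:.1f} mm")
```

Under this model the error at 4 m is sixteen times the error at 1 m; widening the baseline or increasing the focal length reduces it proportionally.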

Hardware Requirements for Both Technologies

Depth sensing in augmented reality relies on specialized hardware such as LiDAR sensors, structured light emitters, or time-of-flight cameras to accurately capture spatial information and measure distances. Stereo vision uses a pair of standard cameras spaced at a specific baseline to simulate human binocular vision, requiring fewer specialized components but demanding increased processing power to compute disparity maps. Depth sensing hardware often provides more precise depth data with lower computational load, while stereo vision systems depend heavily on software algorithms and higher processing capabilities to extract depth information from dual camera inputs.
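The processing demand of stereo vision can be estimated with simple arithmetic. For naive block matching, each pixel compares every candidate disparity using a full window of pixels, so the work per frame is width × height × disparities × window². The sketch below assumes a hypothetical 640 × 480 stream at 30 fps with 64 disparity levels and a 5 × 5 window; real implementations cut this substantially with incremental box filtering, SIMD, or GPU acceleration, so treat the figure as an upper-bound illustration.

```python
def block_matching_ops(width, height, num_disparities, window):
    """Absolute-difference operations for naive block matching on one frame.

    Every pixel evaluates `num_disparities` candidates, each comparing a
    window x window patch. Border effects are ignored.
    """
    return width * height * num_disparities * window * window


if __name__ == "__main__":
    per_frame = block_matching_ops(640, 480, num_disparities=64, window=5)
    per_second = per_frame * 30  # hypothetical 30 fps stream
    print(f"{per_frame:,} ops per frame")
    print(f"{per_second:,} ops per second")
```

Roughly 4.9 × 10⁸ operations per frame, or about 1.5 × 10¹⁰ per second, is why stereo systems lean on dedicated hardware, whereas an active depth sensor delivers its depth map without this matching step.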

Real-World Applications in AR

Depth sensing technologies, such as LiDAR and other time-of-flight (ToF) sensors, provide precise distance measurements that are critical for accurate spatial mapping and object placement in augmented reality (AR) environments. Stereo vision systems use two cameras to simulate human binocular vision, perceiving depth by analyzing disparities between the images; this suits applications that need real-time interaction on low-cost hardware. Real-world AR applications, including indoor navigation, industrial maintenance, and interactive gaming, can combine both technologies to improve environment understanding and user experience.
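A common way to combine two depth readings for the same point is inverse-variance weighting: each estimate is weighted by 1/σ², so the more certain sensor dominates and the fused uncertainty is lower than either input. The sketch below assumes each source reports a depth and a standard deviation in meters; the `fuse_depth` name and the example numbers are hypothetical, and real AR pipelines usually fuse whole depth maps with more elaborate filters.

```python
from typing import Optional, Tuple

Estimate = Tuple[float, float]  # (depth_m, std_dev_m)


def fuse_depth(
    active: Optional[Estimate], stereo: Optional[Estimate]
) -> Optional[Estimate]:
    """Fuse an active-sensor and a stereo depth estimate.

    Uses inverse-variance weighting. If only one source is available
    it is returned unchanged; if neither is, the result is None.
    """
    sources = [s for s in (active, stereo) if s is not None]
    if not sources:
        return None
    if len(sources) == 1:
        return sources[0]
    if any(sigma <= 0 for _, sigma in sources):
        raise ValueError("standard deviations must be positive")
    weights = [1.0 / (sigma * sigma) for _, sigma in sources]
    total = sum(weights)
    depth = sum(w * z for w, (z, _) in zip(weights, sources)) / total
    return depth, (1.0 / total) ** 0.5


if __name__ == "__main__":
    # Hypothetical readings: precise ToF value, noisier stereo value.
    print(fuse_depth((2.00, 0.01), (2.10, 0.03)))
```

With these inputs the fused depth lands at 2.01 m, close to the ToF reading because its variance is nine times smaller.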

Performance in Varying Lighting Conditions

Depth sensing technology emits its own light, typically infrared, so it captures spatial information accurately in low-light and dark environments and is largely independent of ambient visible lighting. Strong sunlight is the main exception: its infrared component can overwhelm the emitted signal and shorten the effective range outdoors. Stereo vision estimates depth from the disparity between two passive cameras, so it is significantly affected by challenging lighting conditions such as glare, shadows, or low illumination. In applications that need reliable depth data across diverse lighting conditions, depth sensing is generally more robust than stereo vision.
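In a hybrid system, a simple heuristic can decide when a stereo match is trustworthy: a dark patch (low mean intensity) or a flat patch (low intensity variance) gives the matcher little to lock onto, so the system falls back to the active sensor. The sketch below assumes 8-bit grayscale patches; the function names and both thresholds are hypothetical and would need tuning for a real camera.

```python
from statistics import fmean, pvariance


def patch_is_reliable_for_stereo(patch, min_mean=20.0, min_variance=25.0):
    """Heuristic check on a grayscale patch (values 0-255).

    A low mean suggests a dark scene; a low variance suggests little
    texture. Either condition makes stereo matching unreliable.
    """
    return fmean(patch) >= min_mean and pvariance(patch) >= min_variance


def choose_depth(patch, stereo_depth, active_depth):
    """Prefer stereo where the patch looks matchable, else use the active sensor."""
    if stereo_depth is not None and patch_is_reliable_for_stereo(patch):
        return stereo_depth
    return active_depth


if __name__ == "__main__":
    blank_wall = [120] * 25
    dim_corner = [2, 4, 6, 8, 10, 12, 14, 16, 18]
    textured = [10, 200, 50, 180, 90, 240, 30, 160, 70]
    for name, patch in [("wall", blank_wall), ("dim", dim_corner), ("textured", textured)]:
        print(name, patch_is_reliable_for_stereo(patch))
```

Production systems usually rely on the matcher's own confidence measures (for example, how distinct the best cost is from the runner-up), but the fallback logic is the same.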

Scalability and Cost Implications

Stereo vision keeps per-unit hardware cost low because it uses standard camera modules, which makes it attractive for large-scale, cost-sensitive deployments. The trade-off is computation: generating dense depth maps from two images requires complex algorithms and significant processing power, which raises processor and power requirements. Depth sensing adds the cost of specialized emitters and sensors, but it delivers depth data with little computation, reducing software complexity. Where cost-effective depth sensors are available, they enable broader adoption in AR devices by balancing performance and affordability; in each product, the balance between sensor cost and compute cost determines which approach scales better.

Integration with AR Software Platforms

Depth sensing technology employs time-of-flight or structured light sensors to capture precise spatial data, enhancing AR software platforms with accurate environment mapping and object placement. Stereo vision leverages dual cameras to simulate human binocular vision, enabling AR applications to interpret depth through disparity calculations but often requires complex calibration for seamless integration. Effective AR solutions balance the sensor accuracy of depth sensing with the natural scene interpretation of stereo vision, optimizing user experience and interaction within augmented environments.
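Whichever sensor produces the depth map, AR software typically converts it into 3D geometry before mapping surfaces or placing content. The sketch below back-projects each pixel with the pinhole camera model, X = (u - cx)·Z/fx and Y = (v - cy)·Z/fy, assuming the intrinsics fx, fy (focal lengths in pixels) and cx, cy (principal point) come from calibration. It uses the image convention of u to the right and v downward; the function name and sample values are hypothetical, and it does not model any particular AR platform's API.

```python
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]


def depth_map_to_points(
    depth: List[List[Optional[float]]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Back-project a depth map (meters) into camera-space 3D points.

    Pixel (u, v) with depth Z maps to
    X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Missing (None) or non-positive depths are skipped.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None or z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


if __name__ == "__main__":
    # Tiny hypothetical 2 x 2 depth map with one missing reading.
    depth_map = [[1.0, None], [2.0, 2.0]]
    for p in depth_map_to_points(depth_map, fx=100.0, fy=100.0, cx=0.5, cy=0.5):
        print(p)
```

Accurate intrinsics matter here: an error in fx or cx shifts every reconstructed point, which is one concrete reason calibration is central to integrating either technology.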

Future Trends: Depth Sensing and Stereo Vision in AR

Future trends in augmented reality emphasize advancements in depth sensing technology, leveraging LiDAR and time-of-flight sensors to provide more accurate and real-time environmental mapping. Stereo vision continues to evolve with AI-enhanced algorithms that improve 3D object recognition and spatial understanding, enabling richer and more interactive AR experiences. Integration of hybrid systems combining depth sensing and stereo vision is expected to optimize performance, reduce latency, and enhance AR device versatility across various applications.

Depth Sensing vs Stereo Vision Infographic

techiny.com