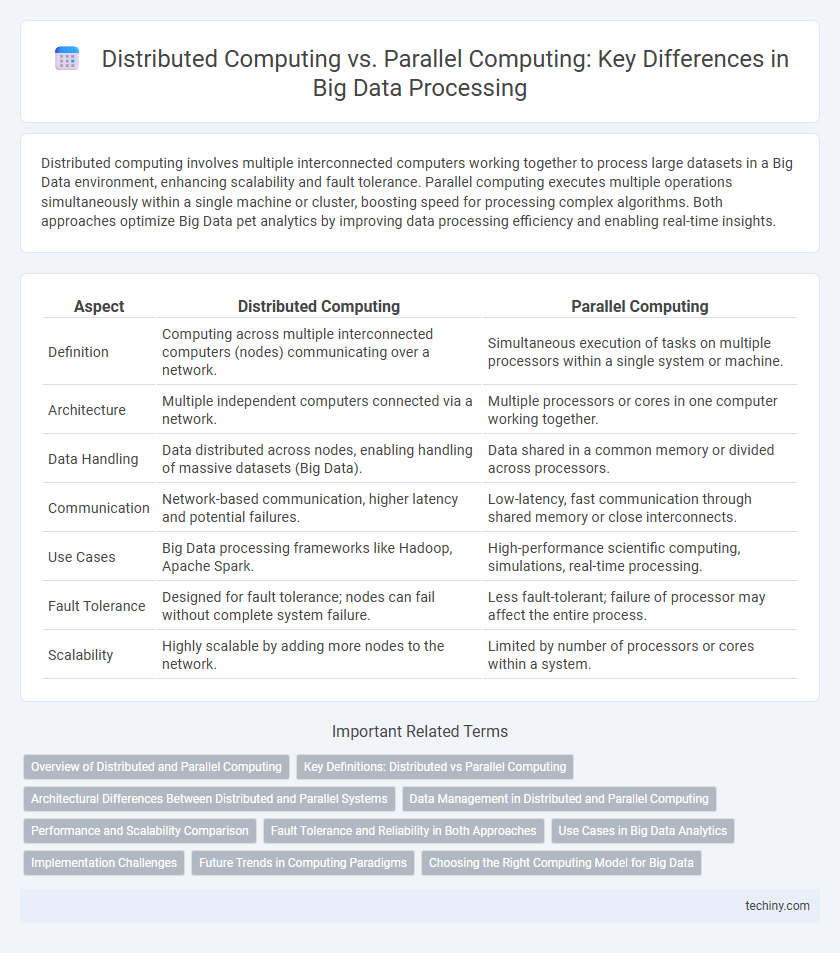

Distributed computing involves multiple interconnected computers working together to process large datasets in a Big Data environment, enhancing scalability and fault tolerance. Parallel computing executes multiple operations simultaneously within a single machine or cluster, boosting speed for processing complex algorithms. Both approaches optimize Big Data pet analytics by improving data processing efficiency and enabling real-time insights.

Table of Comparison

| Aspect | Distributed Computing | Parallel Computing |

|---|---|---|

| Definition | Computing across multiple interconnected computers (nodes) communicating over a network. | Simultaneous execution of tasks on multiple processors within a single system or machine. |

| Architecture | Multiple independent computers connected via a network. | Multiple processors or cores in one computer working together. |

| Data Handling | Data distributed across nodes, enabling handling of massive datasets (Big Data). | Data shared in a common memory or divided across processors. |

| Communication | Network-based communication, higher latency and potential failures. | Low-latency, fast communication through shared memory or close interconnects. |

| Use Cases | Big Data processing frameworks like Hadoop, Apache Spark. | High-performance scientific computing, simulations, real-time processing. |

| Fault Tolerance | Designed for fault tolerance; nodes can fail without complete system failure. | Less fault-tolerant; failure of processor may affect the entire process. |

| Scalability | Highly scalable by adding more nodes to the network. | Limited by number of processors or cores within a system. |

Overview of Distributed and Parallel Computing

Distributed computing involves multiple interconnected computers working together to solve a problem by dividing tasks across a network, enhancing scalability and fault tolerance. Parallel computing executes multiple processes simultaneously within a single machine or tightly-coupled systems, optimizing performance for computationally intensive tasks. Both paradigms are essential in big data analytics for efficient processing and managing large datasets.

Key Definitions: Distributed vs Parallel Computing

Distributed computing involves multiple interconnected computers working on different parts of a task simultaneously, often across diverse locations, enhancing scalability and fault tolerance. Parallel computing refers to the simultaneous execution of multiple calculations within a single computer system using multiple processors or cores to speed up processing time. Both approaches optimize data processing efficiency but differ in architecture, with distributed computing emphasizing networked systems and parallel computing focusing on multi-core or multi-processor hardware configurations.

Architectural Differences Between Distributed and Parallel Systems

Distributed computing systems consist of multiple autonomous computers connected via a network, each with its own memory and processors, working collaboratively to solve large-scale problems. Parallel computing architectures feature multiple processors within a single machine sharing a common memory space, enabling simultaneous execution of tasks with tightly coupled communication. The key architectural difference lies in communication latency and memory sharing: distributed systems rely on message passing across network nodes, while parallel systems benefit from high-speed shared memory for efficient inter-processor communication.

Data Management in Distributed and Parallel Computing

Distributed computing manages data by distributing tasks and storage across multiple networked nodes, enabling scalable data processing and fault tolerance in big data environments. Parallel computing processes large data sets by dividing them into smaller chunks executed simultaneously on multi-core processors, optimizing speed but often relying on shared memory architectures. Effective data management in distributed computing demands robust synchronization and consistency protocols, while parallel computing requires efficient data partitioning and minimizing communication overhead to enhance performance.

Performance and Scalability Comparison

Distributed computing excels in scalability by leveraging multiple networked machines to handle large-scale data processing, enabling efficient management of big data workloads. Parallel computing enhances performance through simultaneous execution of tasks on multiple processors within a single machine, optimizing speed for complex computations. While distributed systems offer superior scalability for expanding data volumes, parallel computing provides faster processing for tightly coupled tasks with lower communication overhead.

Fault Tolerance and Reliability in Both Approaches

Distributed computing enhances fault tolerance by distributing tasks across multiple nodes, allowing the system to continue functioning even if some nodes fail, thereby increasing overall reliability. Parallel computing relies on simultaneous processing within a single system or closely connected systems, which can limit fault tolerance since failures in key components may halt the entire process. In big data environments, distributed computing frameworks like Hadoop and Spark are preferred for their superior fault tolerance and reliability compared to traditional parallel computing architectures.

Use Cases in Big Data Analytics

Distributed computing enables large-scale data processing across multiple networked machines, making it ideal for big data analytics tasks like real-time streaming analysis and large-scale batch processing. Parallel computing, operating on multiple processors within a single machine, excels in handling complex algorithms such as machine learning model training and image recognition with high-speed computation. These computing paradigms complement each other by addressing different performance and scalability needs in big data analytics workflows.

Implementation Challenges

Distributed computing faces implementation challenges such as network latency, data consistency across nodes, and fault tolerance due to the need for synchronization among geographically dispersed systems. Parallel computing struggles with workload balancing, memory access contention, and debugging complexities within shared-memory architectures. Both paradigms require sophisticated coordination mechanisms and optimized resource management to efficiently handle Big Data processing demands.

Future Trends in Computing Paradigms

Distributed computing systems will increasingly leverage edge computing and AI integration to enhance data processing scalability and reduce latency in big data applications. Parallel computing architectures are evolving with advanced GPUs and quantum processors to accelerate large-scale machine learning tasks and simulations. Emerging hybrid paradigms combining distributed and parallel computing are expected to optimize resource utilization and enable real-time analytics in complex data environments.

Choosing the Right Computing Model for Big Data

Distributed computing processes large-scale Big Data by dividing tasks across multiple networked machines, enhancing data storage and computational capacity. Parallel computing accelerates data processing by simultaneously executing tasks on multiple cores within a single system, optimizing speed for intensive analytical workloads. Selecting the right computing model depends on data size, latency requirements, resource availability, and complexity, with distributed systems favored for scalability and parallel systems for high-speed data crunching.

Distributed Computing vs Parallel Computing Infographic

techiny.com

techiny.com