F1 score measures the balance between precision and recall, making it ideal for imbalanced classification problems where false positives and false negatives carry different costs. ROC-AUC evaluates the trade-off between true positive rate and false positive rate across thresholds, providing a comprehensive view of a model's ability to distinguish classes. Selecting F1 score or ROC-AUC depends on whether the priority is on misclassification costs or overall ranking performance.

Table of Comparison

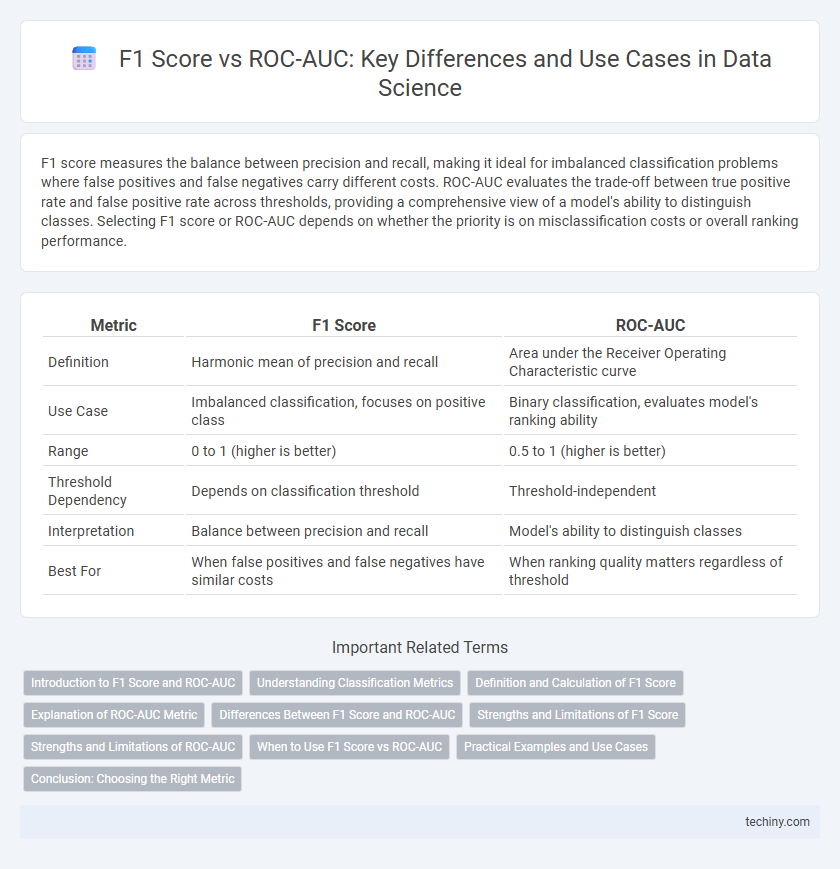

| Metric | F1 Score | ROC-AUC |

|---|---|---|

| Definition | Harmonic mean of precision and recall | Area under the Receiver Operating Characteristic curve |

| Use Case | Imbalanced classification, focuses on positive class | Binary classification, evaluates model's ranking ability |

| Range | 0 to 1 (higher is better) | 0.5 to 1 (higher is better) |

| Threshold Dependency | Depends on classification threshold | Threshold-independent |

| Interpretation | Balance between precision and recall | Model's ability to distinguish classes |

| Best For | When false positives and false negatives have similar costs | When ranking quality matters regardless of threshold |

Introduction to F1 Score and ROC-AUC

F1 Score measures a model's accuracy by calculating the harmonic mean of precision and recall, making it essential for imbalanced classification tasks. ROC-AUC evaluates the performance of a binary classifier by plotting true positive rate against false positive rate across threshold values, summarizing the model's ability to distinguish between classes. Both metrics provide critical insights into classification model effectiveness, with F1 Score focusing on balance between false positives and negatives, while ROC-AUC emphasizes overall separability.

Understanding Classification Metrics

The F1 score balances precision and recall, making it ideal for imbalanced classification datasets where false positives and false negatives carry different costs. ROC-AUC evaluates the overall ability of a classifier to distinguish between classes by measuring the trade-off between true positive rate and false positive rate across thresholds. Selecting between F1 score and ROC-AUC depends on the specific goals of the classification task and the importance of error types in the dataset.

Definition and Calculation of F1 Score

The F1 score is a harmonic mean of precision and recall, providing a balanced measure that evaluates a model's accuracy in binary classification. It is calculated as 2 * (Precision * Recall) / (Precision + Recall), where precision measures the ratio of true positive predictions to all positive predictions and recall measures the ratio of true positive predictions to all actual positives. Unlike ROC-AUC, which assesses the trade-off between true positive rate and false positive rate across thresholds, the F1 score focuses specifically on the performance of positive class predictions.

Explanation of ROC-AUC Metric

The ROC-AUC metric evaluates a classification model's ability to distinguish between classes by measuring the area under the Receiver Operating Characteristic curve, which plots the true positive rate against the false positive rate at various threshold settings. A higher AUC value indicates better model performance in terms of ranking positive instances higher than negative ones, independent of classification thresholds. This metric is especially useful in imbalanced datasets where balanced evaluation across all classification thresholds is critical.

Differences Between F1 Score and ROC-AUC

F1 score evaluates the balance between precision and recall, making it crucial for imbalanced classification problems where false positives and false negatives carry different costs. ROC-AUC measures the ability of a model to distinguish between classes across all classification thresholds, focusing on the trade-off between true positive rate and false positive rate. While F1 score targets specific classification thresholds, ROC-AUC provides a threshold-independent performance metric.

Strengths and Limitations of F1 Score

F1 score excels in measuring a model's accuracy by balancing precision and recall, making it highly effective for imbalanced classification tasks where false positives and false negatives are critical. However, F1 score does not take true negatives into account, limiting its ability to provide a comprehensive performance overview compared to metrics like ROC-AUC. This limitation makes F1 less suitable for applications where the overall class distribution and specificity are important for decision-making.

Strengths and Limitations of ROC-AUC

ROC-AUC excels in evaluating classification models by measuring the ability to distinguish between classes across all threshold levels, providing a comprehensive performance metric independent of class distribution. Its strength lies in capturing the trade-off between true positive rate (sensitivity) and false positive rate, making it robust to imbalanced datasets. However, ROC-AUC can be misleading in highly skewed data as it may overestimate performance by giving equal weight to false positives and false negatives, which might not align with specific business or application costs.

When to Use F1 Score vs ROC-AUC

F1 score is preferred in imbalanced classification, emphasizing a harmonic mean of precision and recall to balance false positives and false negatives. ROC-AUC evaluates overall model discrimination capability across different threshold values, suitable for understanding ranking performance in balanced or moderately imbalanced datasets. Use F1 score when precise classification matters and ROC-AUC for assessing model robustness and threshold-independent performance.

Practical Examples and Use Cases

F1 score is critical in imbalanced classification scenarios like fraud detection, where precision and recall balance prevents costly false positives and negatives. ROC-AUC excels in medical diagnosis tasks, such as cancer detection, by evaluating model performance across all classification thresholds to capture true positive rates against false positives. Selecting between F1 score and ROC-AUC depends on specific use cases, emphasizing precision-recall balance for F1 and overall ranking performance for ROC-AUC.

Conclusion: Choosing the Right Metric

Selecting the right metric depends on the specific problem context: F1 score emphasizes the balance between precision and recall, making it ideal for imbalanced classification tasks where false positives and false negatives have different costs. ROC-AUC measures the ability of a model to discriminate between classes across all thresholds, providing a comprehensive view of performance in binary classification. Prioritize F1 score when accurate positive class prediction matters most, and rely on ROC-AUC for overall classification capability assessment.

F1 score vs ROC-AUC Infographic

techiny.com

techiny.com