Parallel processing raises computational throughput by dividing a task into smaller operations that run simultaneously across multiple processors or cores, which suits hardware designs that need high throughput. Serial processing executes instructions one at a time, which simplifies hardware design but is often slower for data-heavy workloads or for work that could otherwise be split across threads. Choosing a hardware architecture means balancing the two against application needs, power consumption, and system complexity.

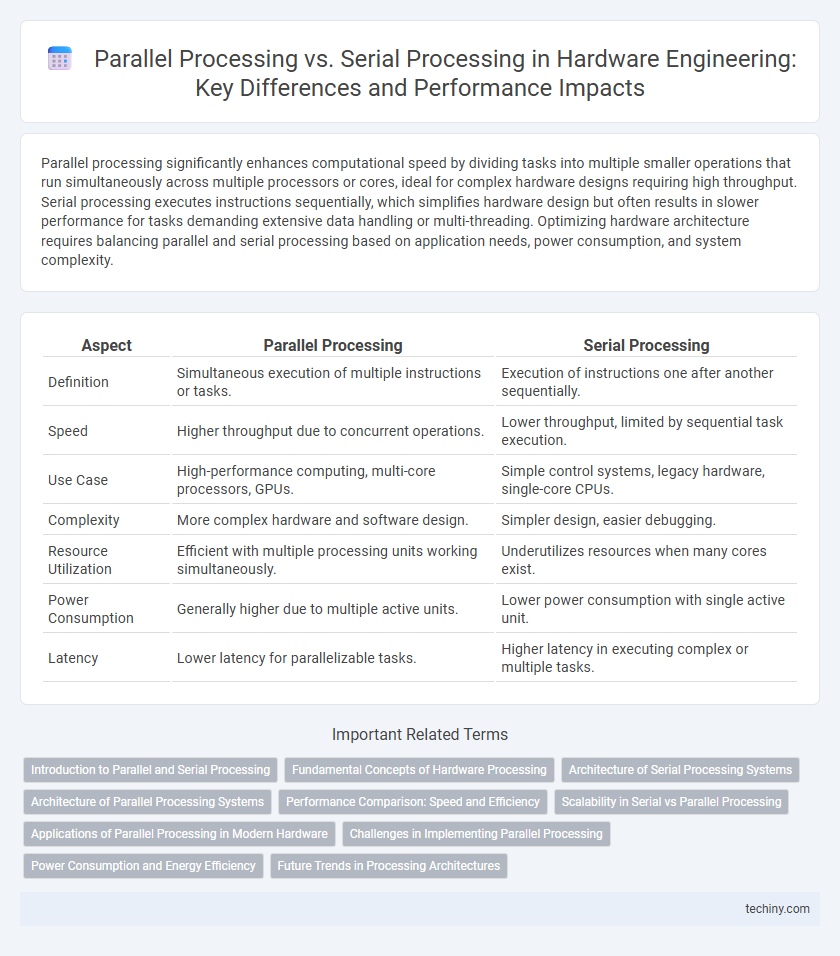

Table of Comparison

| Aspect | Parallel Processing | Serial Processing |
|---|---|---|
| Definition | Simultaneous execution of multiple instructions or tasks. | Execution of instructions one after another sequentially. |
| Speed | Higher throughput due to concurrent operations. | Lower throughput, limited by sequential task execution. |
| Use Case | High-performance computing, multi-core processors, GPUs. | Simple control systems, legacy hardware, single-core CPUs. |
| Complexity | More complex hardware and software design. | Simpler design, easier debugging. |
| Resource Utilization | Efficient with multiple processing units working simultaneously. | Underutilizes resources when many cores exist. |
| Power Consumption | Generally higher due to multiple active units. | Lower power consumption with single active unit. |
| Latency | Lower latency for parallelizable tasks. | Higher latency in executing complex or multiple tasks. |

Introduction to Parallel and Serial Processing

Parallel processing splits a task into sub-tasks that run at the same time on multiple processors, which can shorten computation time when the sub-tasks are largely independent. Serial processing completes one operation before starting the next, which limits performance on complex computations. Knowing where each method fits is the starting point for hardware design decisions and for meeting a system's performance targets.

Fundamental Concepts of Hardware Processing

At the hardware level, the two approaches differ in how operations are scheduled. In parallel processing, operations run simultaneously on multiple processing units. In serial processing, each operation finishes before the next begins, which limits speed but simplifies design and control. Modern computing architectures trade these properties against each other along three axes: complexity, power consumption, and processing speed.
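The difference between the two execution styles can be sketched in software. The example below is a minimal illustration, not a hardware model: the function names `serial_sum` and `parallel_sum` are hypothetical, and it uses Python threads only to show the split, compute, and combine structure. In CPython, threads do not speed up CPU-bound work of this kind, so the sketch shows the shape of the decomposition rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def serial_sum(values):
    """Add the values one after another on a single execution unit."""
    total = 0
    for v in values:
        total += v
    return total


def parallel_sum(values, workers=4):
    """Split the input into chunks, sum each chunk concurrently, then combine."""
    chunk = max(1, (len(values) + workers - 1) // workers)  # ceiling division
    chunks = [values[i:i + chunk] for i in range(0, len(values), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(serial_sum, chunks))
    # Combining the partial results is itself a serial step.
    return serial_sum(partials)


data = list(range(100_000))
assert parallel_sum(data) == serial_sum(data)
```

Both functions return the same result. The parallel version adds a partitioning step and a final combine step, which is the extra design complexity the text refers to.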

Architecture of Serial Processing Systems

The architecture of serial processing systems relies on a single processing unit executing instructions sequentially, which simplifies control design and reduces hardware complexity. This design limits throughput but offers deterministic performance and ease of debugging due to its straightforward instruction flow. Serial processors are commonly found in embedded systems where simplicity, low power consumption, and predictable timing are critical.

Architecture of Parallel Processing Systems

Parallel processing systems utilize multiple processing units working simultaneously to execute tasks, significantly enhancing computational speed and efficiency. These architectures often include multi-core processors, symmetric multiprocessing (SMP), and massively parallel processors (MPP), which coordinate tasks through shared or distributed memory models. Designing effective communication protocols and synchronization mechanisms is crucial to maximize throughput and minimize latency in parallel processing systems.
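The shared and distributed memory models mentioned above lead to two different coordination styles. The sketch below imitates them in software, assuming threads stand in for processing units: `shared_memory_sum` and `message_passing_sum` are hypothetical names, a lock plays the role of a synchronization mechanism, and a queue plays the role of an interconnect. It is an analogy for the programming model, not a description of any particular hardware.

```python
import queue
import threading


def shared_memory_sum(chunks):
    """Workers update one shared total; a lock serializes the updates."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)      # independent work, no coordination needed
        with lock:                # synchronization point on shared state
            total += partial

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_sum(chunks):
    """Workers keep private state and send their results over a channel."""
    results = queue.Queue()

    def worker(chunk):
        results.put(sum(chunk))   # communication instead of shared state

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # One message per worker is collected and combined by the caller.
    return sum(results.get() for _ in chunks)
```

In the first style the cost shows up as contention on the lock. In the second it shows up as the time spent moving messages, which is why both synchronization and communication design affect throughput and latency.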

Performance Comparison: Speed and Efficiency

Parallel processing improves performance by executing multiple tasks simultaneously, which reduces overall computation time and increases throughput relative to serial processing. The gains are most visible in multi-core and GPU architectures, where concurrent execution keeps more hardware resources busy. Parallel processing also incurs synchronization and communication overhead, which lowers efficiency for tasks with complex dependencies, whereas serial processing offers predictable performance for straightforward, linear workloads.
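The trade-off between concurrent execution and coordination overhead can be made concrete with a toy timing model. The model below is an assumption made for illustration, not a measured or standard result: run time is a fixed serial part, plus a parallel part divided evenly among the units, plus a hypothetical overhead that grows linearly with each additional unit. The names `run_time` and `best_unit_count` are likewise illustrative.

```python
def run_time(n_units, serial_time, parallel_time, overhead_per_unit):
    """Estimated run time under an assumed linear-overhead model."""
    if n_units < 1:
        raise ValueError("n_units must be at least 1")
    return (serial_time
            + parallel_time / n_units
            + overhead_per_unit * (n_units - 1))


def best_unit_count(max_units, serial_time, parallel_time, overhead_per_unit):
    """Return the unit count in 1..max_units with the lowest estimated time."""
    return min(
        range(1, max_units + 1),
        key=lambda n: run_time(n, serial_time, parallel_time, overhead_per_unit),
    )


# Hypothetical workload: 10 time units serial, 90 parallelizable,
# 0.9 units of coordination overhead per extra processing unit.
print(best_unit_count(64, 10, 90, 0.9))
print(run_time(1, 10, 90, 0.9), run_time(10, 10, 90, 0.9), run_time(64, 10, 90, 0.9))
```

Under these assumed numbers the estimated time falls as units are added, reaches a minimum, and then rises again once overhead outweighs the shrinking parallel share. Real overhead rarely grows exactly linearly, so the model indicates the shape of the curve rather than actual values.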

Scalability in Serial vs Parallel Processing

Parallel processing improves scalability by distributing workloads across multiple processors or cores, which allows simultaneous execution and reduces bottlenecks. Serial processing faces inherent scalability constraints because its performance gains depend largely on making the single processor faster. As core counts grow, parallel architectures can put the added hardware to use, provided the workload has enough independent work to distribute, which makes them preferable for high-performance computing and large-scale data processing tasks.
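Two standard models are commonly used to reason about how far parallel scaling can go. Amdahl's law treats the problem size as fixed, and Gustafson's law lets the problem grow with the number of units. The sketch below implements both formulas. The 90% parallel fraction is an arbitrary illustrative value, and the two laws define that fraction differently (as a share of the serial run time for Amdahl, and of the parallel run time for Gustafson).

```python
def amdahl_speedup(parallel_fraction, n_units):
    """Fixed-size speedup: 1 / ((1 - p) + p / n)."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / n_units)


def gustafson_speedup(parallel_fraction, n_units):
    """Scaled-size speedup: (1 - p) + p * n."""
    return (1.0 - parallel_fraction) + parallel_fraction * n_units


for n in (1, 2, 8, 64, 1024):
    print(n,
          round(amdahl_speedup(0.9, n), 2),
          round(gustafson_speedup(0.9, n), 2))
```

With a fixed problem size, the speedup can never exceed `1 / (1 - p)`, which is 10x for a 90% parallel workload no matter how many units are added. When the workload grows along with the hardware, as in large-scale data processing, the scaled speedup keeps increasing with the unit count.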

Applications of Parallel Processing in Modern Hardware

Parallel processing enables modern hardware systems to execute multiple tasks simultaneously, significantly improving computational speed and efficiency in applications such as graphics rendering, scientific simulations, and real-time data analysis. High-performance computing clusters and multi-core processors leverage parallelism to accelerate machine learning algorithms, big data processing, and complex mathematical computations. This approach is critical in advanced hardware architectures like GPUs and ARM big.LITTLE designs, optimizing power consumption and throughput for diverse workloads.

Challenges in Implementing Parallel Processing

Implementing parallel processing in hardware engineering faces significant challenges including synchronization overhead, data dependency conflicts, and increased complexity in designing scalable architectures. Managing communication latency and ensuring efficient load balancing across multiple processing units are critical to achieving optimal performance. These issues often lead to increased development costs and require advanced algorithms to maximize parallelism while minimizing bottlenecks.
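Load balancing is one of the challenges above that is easy to illustrate in a few lines. The sketch below uses a greedy longest-processing-time-first heuristic, chosen here only as a simple example and not as the method any particular system uses. The names `assign_tasks` and `makespan` and the task costs are hypothetical.

```python
import heapq


def assign_tasks(task_costs, n_units):
    """Greedily give each task, largest first, to the least-loaded unit.

    Returns the total load assigned to each unit.
    """
    if n_units < 1:
        raise ValueError("n_units must be at least 1")
    heap = [(0, unit) for unit in range(n_units)]  # (current load, unit id)
    heapq.heapify(heap)
    loads = [0] * n_units
    for cost in sorted(task_costs, reverse=True):
        load, unit = heapq.heappop(heap)           # least-loaded unit so far
        loads[unit] = load + cost
        heapq.heappush(heap, (loads[unit], unit))
    return loads


def makespan(loads):
    """Finish time of the whole job: the most heavily loaded unit decides it."""
    return max(loads)


loads = assign_tasks([8, 7, 6, 5, 4], n_units=2)
print(loads, makespan(loads))  # prints [17, 13] 17
```

The total work here is 30, so a perfect split across two units would finish in 15, and one exists (8 + 7 and 6 + 5 + 4). The greedy heuristic finishes in 17, which shows why uneven task sizes leave some units idle and why better balancing algorithms add development cost.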

Power Consumption and Energy Efficiency

Parallel processing does not reduce instantaneous power draw: more active units generally consume more power, as the comparison table notes. It can, however, reduce total energy for parallelizable workloads, because the work finishes sooner and the processors can enter low-power states earlier, or because several units can run at a lower clock frequency and voltage than one fast unit. Serial processing keeps a single unit active for longer, which can raise energy use and heat dissipation on large computations. Whether a parallel architecture delivers better performance per watt therefore depends on how well the workload parallelizes and on how much idle and static power the extra units draw.
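A rough way to check energy claims like these is to compute energy as power multiplied by time. The sketch below is a simplified model under stated assumptions, not a description of any real processor: dynamic power per unit is taken as proportional to the cube of clock frequency (a common approximation when voltage scales with frequency), all units stay powered for the whole run, and `static_power` is a hypothetical per-unit constant. All quantities are in relative units.

```python
def energy(work, n_units, frequency, parallel_fraction=1.0, static_power=0.0):
    """Relative energy = power * time under an assumed cubic power model."""
    # Time: the serial share runs on one unit, the rest is split evenly.
    serial_time = (1.0 - parallel_fraction) * work / frequency
    parallel_time = parallel_fraction * work / (n_units * frequency)
    time = serial_time + parallel_time
    # Power: every unit draws dynamic (f^3) plus static power for the whole run.
    power = n_units * (frequency ** 3 + static_power)
    return power * time


one_fast_unit = energy(work=100, n_units=1, frequency=1.0)
four_slow_units = energy(work=100, n_units=4, frequency=0.5)
print(one_fast_unit, four_slow_units)  # prints 100.0 25.0

# Less favorable case: only half the work parallelizes and units leak power.
print(energy(100, 1, 1.0, static_power=0.2),
      energy(100, 4, 0.5, parallel_fraction=0.5, static_power=0.2))
```

In the ideal case, four units at half the clock speed finish in half the time and use a quarter of the energy. In the second case the same four units use more energy than the single fast unit, because the serial portion runs slowly while all four units continue to draw static power.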

Future Trends in Processing Architectures

Future trends in hardware engineering emphasize hybrid architectures that combine parallel and serial processing to optimize performance and energy efficiency. Emerging technologies such as quantum computing and neuromorphic processors are often described as pairing massively parallel operations with sequential control flows to tackle complex computational problems. Advances in materials science and chip design are expected to support more scalable parallelism, with lower latency and power consumption in next-generation processors.

Parallel Processing vs Serial Processing Infographic

techiny.com
