Dead reckoning estimates a robot's position by integrating its measured velocity and heading over time from a known starting point, but errors from sensor drift and uncorrected deviations accumulate with every step. Sensor fusion combines data from multiple sensors, such as GPS, IMUs, and LiDAR, so that each sensor compensates for the limitations of the others, which yields more accurate and reliable localization. In complex and dynamic environments, this integration significantly improves navigation precision compared with dead reckoning alone.

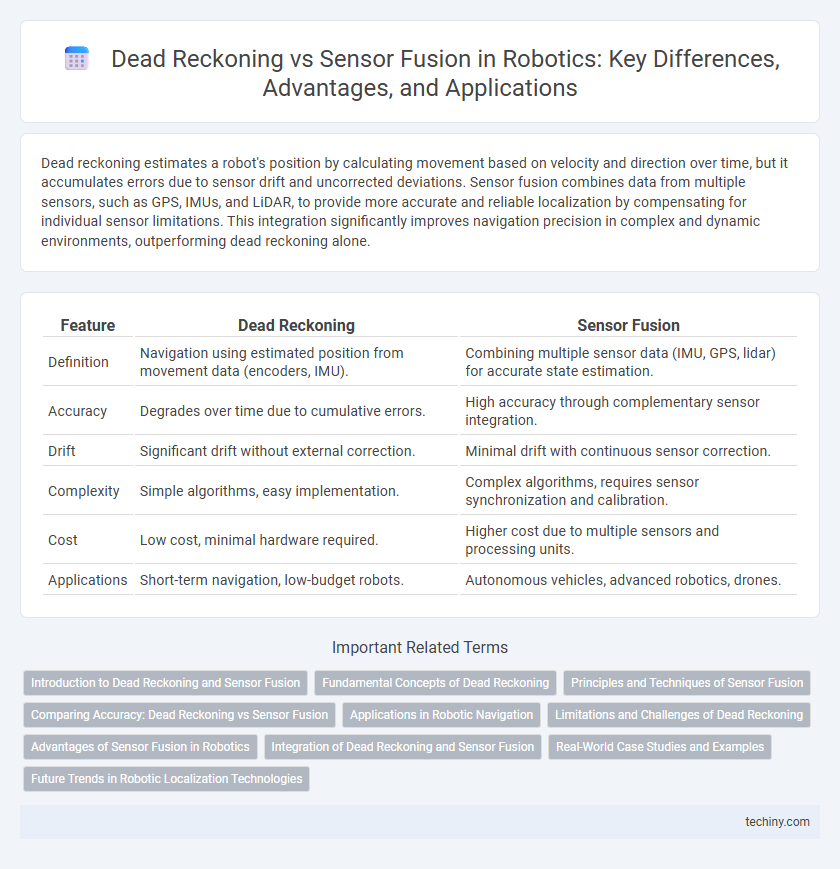

Table of Comparison

| Feature | Dead Reckoning | Sensor Fusion |
|---|---|---|
| Definition | Estimates position by integrating motion data (wheel encoders, IMU) from a known starting point. | Combines data from multiple sensors (IMU, GPS, LiDAR) for accurate state estimation. |
| Accuracy | Degrades over time due to cumulative errors. | High accuracy through complementary sensor integration. |
| Drift | Grows without bound unless corrected externally. | Kept small by continuous correction from absolute references (e.g., GPS, LiDAR). |
| Complexity | Simple algorithms, easy to implement. | Complex algorithms; requires sensor synchronization and calibration. |
| Cost | Low cost, minimal hardware required. | Higher cost due to multiple sensors and processing units. |
| Applications | Short-term navigation, low-budget robots. | Autonomous vehicles, advanced robotics, drones. |

Introduction to Dead Reckoning and Sensor Fusion

Dead reckoning calculates a robot's position by estimating its displacement from a previously known location using velocity and elapsed time, typically measured with wheel encoders and inertial measurement units (IMUs). Sensor fusion combines data from multiple sensors, such as LiDAR, GPS, cameras, and IMUs, to enhance localization accuracy and reduce the cumulative errors inherent in dead reckoning. Integrating sensor fusion techniques enables robust navigation by compensating for individual sensor limitations and environmental uncertainties.

Fundamental Concepts of Dead Reckoning

Dead reckoning in robotics estimates a robot's current position by adding the displacement measured by motion sensors, such as wheel encoders and inertial measurement units (IMUs), to its previous position. The method continuously integrates velocity and orientation data to update the position without any external reference. Although straightforward, it accumulates errors over time due to sensor drift and noise, which makes it less accurate over long runs than sensor fusion techniques that combine multiple data sources for improved localization.
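The position update described above can be sketched for a differential-drive robot with one encoder per wheel. This is a minimal sketch under stated assumptions: the `Pose` class, the `odometry_step` function, the 0.5 m wheel base, and the midpoint-heading integration are illustrative choices, not a specific library's API.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Planar robot pose (hypothetical helper type)."""
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # heading, radians


def odometry_step(pose: Pose, d_left: float, d_right: float, wheel_base: float) -> Pose:
    """Dead-reckoning update for a differential-drive robot (hypothetical helper).

    d_left / d_right: distance each wheel travelled since the last update (m),
    e.g. encoder ticks multiplied by metres per tick.
    wheel_base: distance between the two drive wheels (m).
    """
    d_center = (d_left + d_right) / 2.0        # forward displacement of the robot centre
    d_theta = (d_right - d_left) / wheel_base  # change in heading
    # Midpoint integration: move along the average heading of the step.
    mid_theta = pose.theta + d_theta / 2.0
    return Pose(
        x=pose.x + d_center * math.cos(mid_theta),
        y=pose.y + d_center * math.sin(mid_theta),
        theta=pose.theta + d_theta,
    )


wheel_base = 0.5                                   # hypothetical robot geometry (m)
pose = Pose()
pose = odometry_step(pose, 1.0, 1.0, wheel_base)   # drive straight for 1 m
arc = (math.pi / 2) * (wheel_base / 2)             # wheel travel for a 90° spin in place
pose = odometry_step(pose, -arc, arc, wheel_base)  # rotate left by 90°
print(pose)
```

Because each call builds on the previous pose, any error in `d_left`, `d_right`, or `wheel_base` (for example from wheel slippage) is carried into every later estimate, which is exactly how dead-reckoning error accumulates.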

Principles and Techniques of Sensor Fusion

Sensor fusion in robotics integrates data from multiple sensors such as IMUs, cameras, and LiDAR to enhance position and orientation accuracy beyond dead reckoning alone. It employs probabilistic techniques like Kalman filters and particle filters to combine and correct sensor measurements, reducing drift and noise inherent in dead reckoning. This multi-sensor approach enables robust navigation and mapping in dynamic and uncertain environments.
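A one-dimensional Kalman filter shows the core idea: a dead-reckoning prediction increases the uncertainty, a measurement reduces it, and each source is weighted by its variance. This is a minimal sketch assuming a robot moving along a single axis with Gaussian noise of known variance; `Gaussian1D`, `predict`, `update`, and the numbers used are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Gaussian1D:
    """Position estimate along one axis: mean (m) and variance (m^2)."""
    mean: float
    var: float


def predict(state: Gaussian1D, displacement: float, motion_var: float) -> Gaussian1D:
    # Dead-reckoning step: shift by the odometry displacement; uncertainty grows.
    return Gaussian1D(state.mean + displacement, state.var + motion_var)


def update(state: Gaussian1D, measurement: float, meas_var: float) -> Gaussian1D:
    # Correction step: the Kalman gain weights the measurement by relative confidence.
    gain = state.var / (state.var + meas_var)
    return Gaussian1D(
        mean=state.mean + gain * (measurement - state.mean),
        var=(1.0 - gain) * state.var,
    )


state = Gaussian1D(mean=0.0, var=1.0)                     # initial belief
state = predict(state, displacement=1.0, motion_var=0.5)  # odometry: "moved 1 m"
state = update(state, measurement=1.2, meas_var=0.5)      # absolute sensor reads 1.2 m
print(state)
```

With these values the gain is 0.75, so the estimate moves three quarters of the way toward the measurement, and the fused variance (0.375) is lower than both the predicted variance (1.5) and the measurement variance (0.5).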

Comparing Accuracy: Dead Reckoning vs Sensor Fusion

Dead reckoning relies on internal sensors like wheel encoders and inertial measurement units to estimate position, but it accumulates errors over time due to drift and sensor noise. Sensor fusion combines data from multiple sources such as GPS, LiDAR, cameras, and IMUs to correct these inaccuracies and provide more robust, precise localization in dynamic environments. Consequently, sensor fusion outperforms dead reckoning in accuracy and reliability for real-time robotic navigation and mapping.
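The accuracy gap can be illustrated with a deterministic toy simulation: odometry that over-reports speed by 2 % makes the dead-reckoning error grow steadily, while periodic absolute position fixes (standing in for GPS or LiDAR map matching) keep a fused estimate close to the truth. The `simulate` function, the 2 % scale error, the variances, and the noise-free fixes are assumptions chosen for illustration, and the filter deliberately does not model the scale error itself.

```python
def simulate(duration_s: int = 60, fix_every_s: int = 10):
    """Return per-second position errors (m) for dead reckoning and for fusion."""
    true_v = 1.0        # actual speed (m/s)
    odom_v = 1.02       # hypothetical odometry reading with a 2 % scale error
    motion_var = 0.05   # assumed variance added by each 1 s prediction step
    fix_var = 0.25      # variance the filter assumes for each absolute fix

    true_x = 0.0
    dr_x = 0.0                      # dead reckoning: integrate odometry only
    fused_x, fused_var = 0.0, 0.0   # same prediction, plus periodic corrections
    dr_err, fused_err = [], []

    for t in range(1, duration_s + 1):
        true_x += true_v
        dr_x += odom_v
        fused_x += odom_v
        fused_var += motion_var
        if t % fix_every_s == 0:
            z = true_x  # noise-free fix keeps the illustration deterministic
            gain = fused_var / (fused_var + fix_var)
            fused_x += gain * (z - fused_x)
            fused_var *= 1.0 - gain
        dr_err.append(abs(dr_x - true_x))
        fused_err.append(abs(fused_x - true_x))
    return dr_err, fused_err


dr_err, fused_err = simulate()
print(f"dead reckoning error after 60 s: {dr_err[-1]:.2f} m")
print(f"largest fused error:             {max(fused_err):.2f} m")
```

With these settings the dead-reckoning error reaches about 1.2 m after 60 s and keeps growing, whereas the fused error stays below 0.3 m because each fix pulls the estimate back toward the true position.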

Applications in Robotic Navigation

Dead reckoning relies on internal sensors such as wheel odometers and inertial measurement units to estimate a robot's position. It is simple, but because its errors accumulate over time it is best suited to short-term navigation in controlled environments. Sensor fusion combines data from multiple sensors, such as LiDAR, GPS, cameras, and IMUs, to provide the robust and accurate localization that autonomous vehicles and drones need in dynamic, uncertain environments. Advanced robotic navigation systems integrate sensor fusion algorithms to improve reliability and precision, enabling complex tasks such as simultaneous localization and mapping (SLAM) in real-world applications.

Limitations and Challenges of Dead Reckoning

Dead reckoning in robotics faces significant limitations due to cumulative errors from sensor drift and wheel slippage, which degrade position accuracy over time. It relies solely on internal sensors like encoders and gyroscopes, making it vulnerable to environmental disturbances and sensor noise without external correction inputs. These challenges necessitate complementary techniques such as sensor fusion with GPS or LiDAR to enhance reliability and mitigate error accumulation in autonomous navigation systems.
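The effect of an uncorrected gyroscope bias can be quantified with a short sketch: integrating a constant bias produces a heading error that grows linearly with time, and that heading error turns straight-line motion into a sideways position error that grows roughly quadratically. The function name, the 0.1 °/s bias, the 1 m/s speed, and the 60 s duration are hypothetical values chosen for illustration.

```python
import math


def drive_straight_with_gyro_bias(speed: float, gyro_bias_dps: float,
                                  duration_s: float, dt: float = 0.01):
    """Integrate heading from a biased gyro while the robot truly drives straight.

    Hypothetical helper. The true path is the x-axis, so any heading and
    y-position in the estimate are pure error. Returns (heading_rad, lateral_m).
    """
    bias = math.radians(gyro_bias_dps)  # constant gyro bias (rad/s)
    heading = 0.0
    y = 0.0
    for _ in range(int(round(duration_s / dt))):
        heading += bias * dt                 # the bias is integrated into heading
        y += speed * math.sin(heading) * dt  # position follows the wrong heading
    return heading, y


heading_err, lateral_err = drive_straight_with_gyro_bias(
    speed=1.0, gyro_bias_dps=0.1, duration_s=60.0)  # hypothetical values
print(f"heading error: {math.degrees(heading_err):.1f} deg, "
      f"lateral error: {lateral_err:.2f} m")
```

For small angles the lateral error is approximately v × b × t² / 2 (speed v, bias b in rad/s, time t), so doubling the driving time roughly quadruples it; here a 6° heading error after 60 s produces about 3.1 m of sideways drift.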

Advantages of Sensor Fusion in Robotics

Sensor fusion in robotics combines data from multiple sensors such as LiDAR, IMU, and cameras to enhance localization accuracy and environmental perception beyond what dead reckoning alone can achieve. This approach mitigates cumulative errors and drift commonly associated with dead reckoning by continuously correcting estimates using diverse sensor inputs. Integrating sensor fusion improves real-time decision-making and robustness in complex, dynamic environments, enabling more reliable autonomous navigation.
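Continuous correction can be illustrated with a complementary filter, a lightweight fusion technique often used for IMU tilt estimation. It is named here as a simpler alternative to the Kalman and particle filters discussed in this article, not as the article's own method. The gyroscope is accurate over short intervals but drifts, while the accelerometer's gravity reading does not drift; blending them keeps the strengths of both. The function name, the `atan2(ax, az)` axis convention, `alpha = 0.98`, the 100 Hz rate, and the 0.01 rad/s gyro bias are assumptions.

```python
import math


def complementary_filter(gyro_rates, accel_samples, dt, alpha=0.98, initial=0.0):
    """Fuse gyro rate (rad/s) with accelerometer tilt (rad). Hypothetical helper.

    accel_samples: (ax, az) gravity components; tilt = atan2(ax, az) is an
    assumed axis convention.
    """
    angle = initial
    estimates = []
    for rate, (ax, az) in zip(gyro_rates, accel_samples):
        gyro_angle = angle + rate * dt      # short term: integrate the gyro
        accel_angle = math.atan2(ax, az)    # long term: direction of gravity
        angle = alpha * gyro_angle + (1.0 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


dt = 0.01                        # 100 Hz (assumed)
steps = 1000                     # 10 s of data
true_tilt = math.radians(10.0)   # sensor held still at a 10° tilt
gyro_bias = 0.01                 # rad/s, hypothetical
gyro = [gyro_bias] * steps       # stationary: the gyro reads only its bias
accel = [(math.sin(true_tilt), math.cos(true_tilt))] * steps  # noise-free, in g

fused = complementary_filter(gyro, accel, dt, initial=true_tilt)
gyro_only = true_tilt + sum(rate * dt for rate in gyro)
print(f"gyro-only error: {abs(gyro_only - true_tilt):.4f} rad")
print(f"fused error:     {abs(fused[-1] - true_tilt):.4f} rad")
```

In this noise-free, stationary test the gyro-only estimate is off by 0.1 rad after 10 s and keeps drifting, whereas the fused estimate settles about 0.005 rad from the true tilt. That small residual comes from the bias passing through the gyro path and shrinks as `alpha` decreases, at the cost of trusting the noisier accelerometer more.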

Integration of Dead Reckoning and Sensor Fusion

Integrating dead reckoning with sensor fusion improves navigation accuracy by combining the relative motion estimates from dead reckoning (wheel encoders, IMUs) with absolute measurements from sensors such as GPS, or LiDAR matched against a map. Sensor fusion algorithms such as the Extended Kalman Filter (EKF) or the particle filter continuously correct dead-reckoning errors caused by wheel slippage or drift, keeping localization robust. This hybrid approach supports reliable path planning and obstacle avoidance, which is crucial for autonomous robots operating in dynamic or GPS-denied environments.
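A compact Extended Kalman Filter shows how the hybrid works: odometry drives the prediction step, and an absolute position fix (GPS-like) corrects it. This is a minimal sketch assuming a planar robot with state [x, y, θ], odometry reported as distance travelled and heading change, constant process noise, and position-only measurements; `PoseEKF`, its methods, and all noise values are hypothetical. It requires NumPy.

```python
import numpy as np


class PoseEKF:
    """Hypothetical EKF for a planar robot with state [x, y, theta]."""

    def __init__(self, x0, P0, Q, R):
        self.x = np.asarray(x0, dtype=float)  # state estimate [x, y, theta]
        self.P = np.asarray(P0, dtype=float)  # state covariance
        self.Q = np.asarray(Q, dtype=float)   # process noise per step (assumed constant)
        self.R = np.asarray(R, dtype=float)   # noise of the absolute position fix
        self.H = np.array([[1.0, 0.0, 0.0],   # the fix observes x and y only
                           [0.0, 1.0, 0.0]])

    def predict(self, distance, d_theta):
        """Dead-reckoning step driven by odometry (distance travelled, heading change)."""
        x, y, th = self.x
        new_th = th + d_theta
        self.x = np.array([x + distance * np.cos(th),
                           y + distance * np.sin(th),
                           np.arctan2(np.sin(new_th), np.cos(new_th))])  # wrap to [-pi, pi]
        # Jacobian of the motion model with respect to the state.
        F = np.array([[1.0, 0.0, -distance * np.sin(th)],
                      [0.0, 1.0, distance * np.cos(th)],
                      [0.0, 0.0, 1.0]])
        self.P = F @ self.P @ F.T + self.Q

    def update(self, z):
        """Correct the prediction with an absolute [x, y] measurement."""
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.x = self.x + K @ innovation
        self.P = (np.eye(3) - K @ self.H) @ self.P


ekf = PoseEKF(x0=[0.0, 0.0, 0.0],
              P0=np.diag([0.01, 0.01, 0.01]),
              Q=np.diag([0.05, 0.05, 0.001]),
              R=np.diag([0.25, 0.25]))
true_x = dr_x = 0.0
for step in range(1, 21):
    true_x += 1.0          # robot really moves 1 m along x
    odom = 1.02            # hypothetical odometry over-reporting by 2 %
    dr_x += odom           # pure dead reckoning for comparison
    ekf.predict(odom, 0.0)
    if step % 5 == 0:      # absolute fix (noise-free here) every 5 steps
        ekf.update([true_x, 0.0])
print(f"true x: {true_x:.2f}  dead reckoning: {dr_x:.2f}  EKF: {ekf.x[0]:.2f}")
```

In this run odometry alone ends at 20.4 m against a true 20 m, while the EKF estimate finishes within 0.1 m of the truth. A particle filter would replace the Gaussian covariance `P` with a set of weighted samples, which handles multimodal uncertainty better at a higher computational cost.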

Real-World Case Studies and Examples

Dead reckoning in robotics relies on wheel encoders and inertial measurement units to estimate position but accumulates significant error over time; in warehouse automation, for example, this drift degrades navigation accuracy. Sensor fusion techniques combine data from LiDAR, GPS, IMUs, and cameras to enhance localization, as in autonomous vehicles such as Waymo's self-driving cars, which achieve centimeter-level positioning even in complex urban environments. Real-world case studies highlight sensor fusion's superior robustness and accuracy compared with dead reckoning alone, particularly in dynamic and GPS-denied environments.

Future Trends in Robotic Localization Technologies

Future trends in robotic localization technologies emphasize the transition from traditional dead reckoning methods to advanced sensor fusion approaches, integrating data from LiDAR, IMUs, and vision sensors for improved accuracy and reliability. Machine learning algorithms increasingly enhance sensor fusion by dynamically adapting to changing environments and mitigating sensor drift, crucial for autonomous navigation in complex settings. The convergence of edge computing and 5G networks further accelerates real-time processing and data exchange, boosting the efficiency and scalability of robotic localization systems.

Dead Reckoning vs Sensor Fusion Infographic

techiny.com
