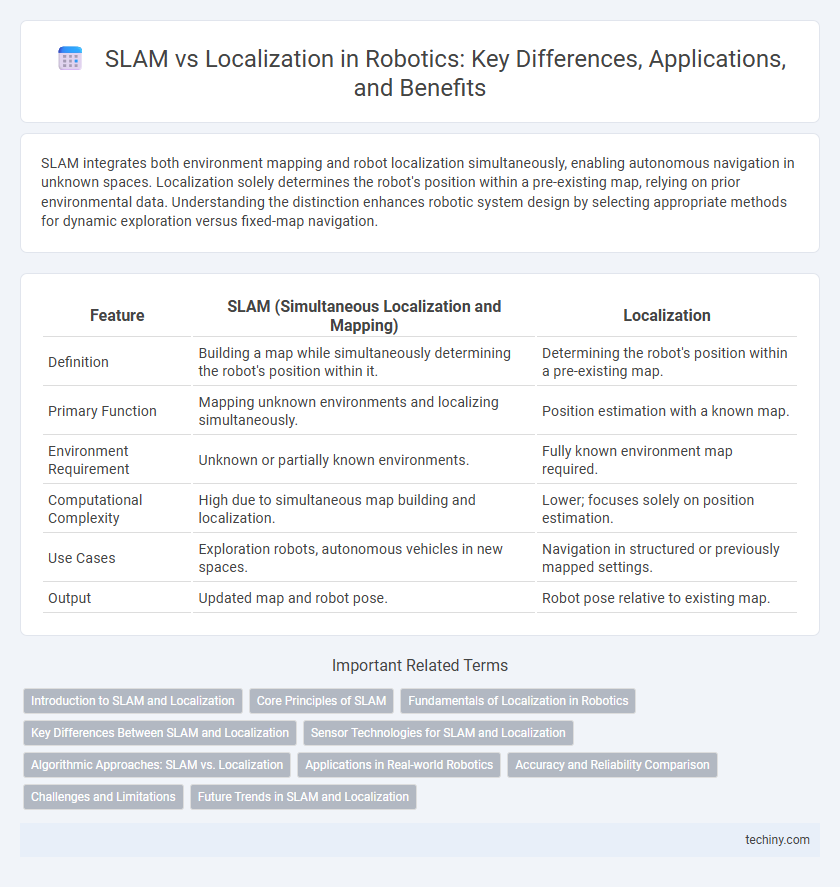

SLAM (Simultaneous Localization and Mapping) builds a map of the environment and estimates the robot's position within it at the same time, which enables autonomous navigation in unknown spaces. Localization only estimates the robot's position within a pre-existing map, so it depends on prior environmental data. Understanding the distinction helps system designers choose the right method: SLAM for exploring unknown or changing spaces, localization for navigating with a fixed map.

Table of Comparison

| Feature | SLAM (Simultaneous Localization and Mapping) | Localization |
|---|---|---|
| Definition | Building a map while simultaneously determining the robot's position within it. | Determining the robot's position within a pre-existing map. |
| Primary Function | Mapping unknown environments and localizing simultaneously. | Position estimation with a known map. |
| Environment Requirement | Unknown or partially known environments. | Fully known environment map required. |
| Computational Complexity | High due to simultaneous map building and localization. | Lower; focuses solely on position estimation. |
| Use Cases | Exploration robots, autonomous vehicles in new spaces. | Navigation in structured or previously mapped settings. |
| Output | Updated map and robot pose. | Robot pose relative to existing map. |

Introduction to SLAM and Localization

Simultaneous Localization and Mapping (SLAM) is a process where a robot constructs a map of an unknown environment while simultaneously determining its position within that environment. Localization, by contrast, assumes an existing map and focuses solely on estimating the robot's current position relative to that predefined map. SLAM integrates sensor data, motion models, and algorithms such as Extended Kalman Filters or Particle Filters to achieve real-time mapping and positioning, making it essential for autonomous navigation in dynamic or unfamiliar spaces.

Core Principles of SLAM

SLAM (Simultaneous Localization and Mapping) integrates real-time mapping and localization to enable robots to build a map of an unknown environment while simultaneously determining their position within it. The core principles of SLAM involve sensor data acquisition, feature extraction, state estimation using probabilistic algorithms such as Extended Kalman Filter (EKF) or Particle Filter, and loop closure to reduce accumulated errors. Unlike pure localization, which assumes a known map, SLAM addresses the challenge of mapping unknown terrains, making it critical for autonomous navigation in dynamic and unstructured settings.
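To make the state-estimation principle concrete, here is a minimal sketch of joint pose and map estimation in one dimension. It is an illustration under simplifying assumptions, not a production SLAM system: the world is a line with a single landmark, the motion and measurement models are linear (so the EKF reduces to a plain Kalman filter), and the names `predict`, `update`, and `run_demo` as well as all noise values are hypothetical.

```python
def predict(state, cov, u, q):
    """Motion step: the robot moves by u, so only its own variance grows by q."""
    x, m = state
    (pxx, pxm), (pmx, pmm) = cov
    return [x + u, m], [[pxx + q, pxm], [pmx, pmm]]


def update(state, cov, z, r):
    """Measurement step: z is the observed range (landmark minus robot)."""
    x, m = state
    (pxx, pxm), (pmx, pmm) = cov
    # Measurement model h(state) = m - x, so the Jacobian is H = [-1, 1].
    innovation = z - (m - x)
    s = pxx - pxm - pmx + pmm + r        # S = H P H^T + r
    kx = (-pxx + pxm) / s                # Kalman gain, robot component
    km = (-pmx + pmm) / s                # Kalman gain, landmark component
    new_state = [x + kx * innovation, m + km * innovation]
    # P' = (I - K H) P, written out for the 2x2 case.
    new_cov = [
        [(1 + kx) * pxx - kx * pmx, (1 + kx) * pxm - kx * pmm],
        [km * pxx + (1 - km) * pmx, km * pxm + (1 - km) * pmm],
    ]
    return new_state, new_cov


def run_demo(steps=5, landmark=10.0):
    """Drive toward a landmark whose position is initially unknown."""
    state = [0.0, 0.0]                   # [robot position, landmark guess]
    cov = [[0.0, 0.0], [0.0, 1e6]]       # robot start known, landmark unknown
    true_x = 0.0
    for _ in range(steps):
        true_x += 1.0
        state, cov = predict(state, cov, u=1.0, q=0.01)
        z = landmark - true_x            # noise-free range, for a repeatable demo
        state, cov = update(state, cov, z, r=0.1)
    return state, cov


if __name__ == "__main__":
    state, cov = run_demo()
    print("robot:", state[0], "landmark:", state[1])
```

The landmark is part of the state vector, which is what distinguishes this from pure localization: the filter corrects the map estimate and the pose estimate with the same measurement. Loop closure is not shown here.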

Fundamentals of Localization in Robotics

Localization in robotics refers to the process where a robot determines its position and orientation within a known map or environment using sensor data such as LiDAR, cameras, or odometry. Unlike SLAM, which constructs the map while localizing, localization relies on a pre-existing map to estimate the robot's pose. Kalman filters and particle filters are commonly used for this; Monte Carlo localization is the particle-filter approach applied to this problem.
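A Monte Carlo localization step can be sketched in a few lines. This example assumes a one-dimensional corridor whose only map feature is a wall at a known position, and a sensor that reports the range to that wall. The function names `mcl_step` and `localize`, the noise parameters, and the corridor itself are hypothetical choices for illustration.

```python
import math
import random


def mcl_step(particles, u, z, wall, rng, motion_sigma=0.1, meas_sigma=0.3):
    """One predict / weight / resample cycle of a particle filter."""
    # 1. Motion update: move every particle by the commanded step plus noise.
    moved = [p + u + rng.gauss(0.0, motion_sigma) for p in particles]
    # 2. Weighting: compare the measured range with the range each particle
    #    would expect given the known wall position (the "map").
    weights = [
        math.exp(-0.5 * ((z - (wall - p)) / meas_sigma) ** 2) + 1e-300
        for p in moved
    ]
    # 3. Resampling: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def localize(true_start=2.0, steps=5, n_particles=500, wall=10.0, seed=0):
    """Start with no idea where the robot is and converge on its position."""
    rng = random.Random(seed)
    particles = [rng.uniform(0.0, wall) for _ in range(n_particles)]
    true_x = true_start
    for _ in range(steps):
        true_x += 1.0
        z = wall - true_x                # noise-free reading, for a repeatable demo
        particles = mcl_step(particles, 1.0, z, wall, rng)
    estimate = sum(particles) / len(particles)
    return estimate, true_x


if __name__ == "__main__":
    estimate, truth = localize()
    print("estimated position:", estimate, "true position:", truth)
```

Note that the wall position is an input and is never updated: the map is trusted as given, which is the defining assumption of localization.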

Key Differences Between SLAM and Localization

SLAM (Simultaneous Localization and Mapping) creates a map of an unknown environment while simultaneously determining the robot's position within it, integrating sensor data for real-time mapping and pose estimation. Localization assumes a pre-existing map and focuses solely on estimating the robot's pose relative to it, using algorithms such as Monte Carlo Localization (a particle-filter method) or Kalman-filter variants. The key difference is that SLAM solves two coupled problems, mapping and localization, while localization solves only one. This makes SLAM more computationally intensive and better suited to exploration, whereas localization provides rapid pose updates in known environments.

Sensor Technologies for SLAM and Localization

SLAM integrates sensor technologies such as LiDAR, RGB-D cameras, and IMUs to simultaneously build maps and determine the robot's position, enabling dynamic environment understanding. Localization within a known map typically matches LiDAR or camera observations against that map, with wheel encoders and IMUs providing motion estimates between updates; outdoors, GPS can supply an absolute position fix. Sensor fusion techniques enhance the accuracy and robustness of both SLAM and localization by combining data from multiple sensor modalities.
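As one illustration of why combining sensors helps, the sketch below fuses two independent Gaussian estimates of the same position by inverse-variance weighting, which is the scalar form of a Kalman update. The function name `fuse` and the example numbers are assumptions made for this illustration.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Combine two independent Gaussian estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so the more
    certain sensor dominates and the fused variance is smaller than either input.
    """
    weight_a = 1.0 / var_a
    weight_b = 1.0 / var_b
    fused_var = 1.0 / (weight_a + weight_b)
    fused_mean = fused_var * (weight_a * mean_a + weight_b * mean_b)
    return fused_mean, fused_var


if __name__ == "__main__":
    # Hypothetical readings: odometry says 5.0 m (variance 0.4),
    # a LiDAR-based estimate says 5.6 m (variance 0.1).
    mean, var = fuse(5.0, 0.4, 5.6, 0.1)
    print(mean, var)  # approximately 5.48 and 0.08
```

The fused variance (about 0.08) is lower than that of either sensor alone, which is the sense in which fusion improves accuracy.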

Algorithmic Approaches: SLAM vs. Localization

SLAM algorithms build a map of an unknown environment while localizing the robot within it, using estimation techniques such as Kalman filters, particle filters, or graph-based optimization. Localization algorithms, in contrast, rely on pre-existing maps and focus on estimating the robot's pose by matching sensor data to the known environment using methods like Monte Carlo localization or extended Kalman filters. SLAM's computational complexity is higher because it solves both problems at once, whereas localization is cheaper to compute but depends heavily on map accuracy.
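Graph-based optimization can be illustrated with a tiny one-dimensional pose graph. In this sketch each edge says "pose j should be this far from pose i"; three odometry edges overestimate each step, and one loop-closure edge contradicts their sum. Real systems typically use Gauss-Newton or Levenberg-Marquardt with sparse solvers; plain gradient descent is swapped in here for brevity, and the function name `optimize_pose_graph`, the step size, and the edge values are all hypothetical.

```python
def optimize_pose_graph(n_poses, edges, iterations=500, step=0.2):
    """Least-squares pose adjustment by gradient descent.

    edges: list of (i, j, offset) meaning x[j] - x[i] should equal offset.
    Pose 0 is held fixed at 0.0 to anchor the graph.
    The step size suits this small example; denser graphs may need a smaller one.
    """
    x = [0.0] * n_poses
    for _ in range(iterations):
        grad = [0.0] * n_poses
        for i, j, offset in edges:
            residual = (x[j] - x[i]) - offset
            grad[j] += residual
            grad[i] -= residual
        for k in range(1, n_poses):      # skip pose 0, the anchor
            x[k] -= step * grad[k]
    return x


if __name__ == "__main__":
    edges = [
        (0, 1, 1.1),   # odometry, drifting high
        (1, 2, 1.1),
        (2, 3, 1.1),
        (0, 3, 3.0),   # loop closure: pose 3 is 3.0 from the start
    ]
    print(optimize_pose_graph(4, edges))
    # converges toward [0.0, 1.025, 2.05, 3.075]
```

Odometry alone would place the last pose at 3.3. With all four edges weighted equally, the 0.3 disagreement is spread evenly across them, pulling the final pose back to about 3.075.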

Applications in Real-world Robotics

SLAM (Simultaneous Localization and Mapping) enables robots to build and update maps of unknown environments while simultaneously tracking their position, crucial for autonomous navigation in dynamic or previously unexplored areas. Localization relies on pre-existing maps to determine a robot's position, making it suitable for structured environments like warehouses or factories where the layout is stable. In real-world robotics, SLAM is essential for applications such as autonomous drones and self-driving vehicles, whereas localization supports tasks like automated material handling and indoor service robots.

Accuracy and Reliability Comparison

SLAM continuously updates the robot's position relative to an evolving map, which lets it cope with unknown or changing settings, although its pose estimate accumulates drift until a loop closure corrects it. Standalone localization depends on a pre-existing map: when that map is accurate, localization is faster and computationally lighter and its error stays bounded, but accuracy and adaptability suffer when the environment changes and the map goes out of date. SLAM systems fuse data from LiDAR, cameras, and IMUs to improve reliability in diverse and unknown settings, including GPS-denied environments.

Challenges and Limitations

SLAM faces challenges in dynamic environments where moving objects cause map inconsistencies and require complex data association techniques. Localization relies heavily on accurate pre-built maps, limiting effectiveness in unexplored or changing areas. Both methods struggle with sensor noise, computational resource demands, and real-time processing constraints in complex, unstructured environments.
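Data association is the step of deciding which known landmark, if any, each new observation belongs to. A minimal sketch is nearest-neighbour matching with a distance gate, shown below for 2D points. The function name `associate`, the gate value, and the coordinates are assumptions for illustration; practical systems often use statistical gating (for example, Mahalanobis distance) instead of a fixed Euclidean threshold.

```python
import math


def associate(observations, landmarks, gate=0.5):
    """Match each observation to its nearest landmark within the gate.

    Returns one entry per observation: the landmark index, or None when
    nothing lies within the gate (a new landmark or a moving object).
    """
    matches = []
    for ox, oy in observations:
        best_index, best_dist = None, gate
        for index, (lx, ly) in enumerate(landmarks):
            dist = math.hypot(ox - lx, oy - ly)
            if dist <= best_dist:
                best_index, best_dist = index, dist
        matches.append(best_index)
    return matches


if __name__ == "__main__":
    landmarks = [(0.0, 0.0), (5.0, 0.0), (5.0, 5.0)]
    observations = [(0.1, -0.1), (4.8, 5.1), (2.5, 2.5)]
    print(associate(observations, landmarks))  # [0, 2, None]
```

The third observation matches nothing. In a static world it would be added as a new landmark; in a dynamic one it may be a moving object that should be kept out of the map, which is where the difficulty described above comes from.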

Future Trends in SLAM and Localization

Future trends in SLAM emphasize the integration of deep learning techniques to improve real-time environment mapping and accuracy in dynamic settings. Localization advancements focus on leveraging 5G connectivity and multi-sensor fusion, combining LiDAR, visual, and inertial data to achieve centimeter-level precision in complex, GPS-denied environments. Emerging technologies such as edge AI, and more speculatively quantum computing, could reduce computation latency for both SLAM and localization and enable more autonomous robotic navigation.

SLAM (Simultaneous Localization and Mapping) vs localization Infographic

techiny.com
