Tokenization replaces sensitive data with non-sensitive substitutes called tokens, which have no exploitable value outside the specific environment, reducing the risk of data breaches. Encryption transforms data into unreadable ciphertext using algorithms and keys, requiring decryption to restore original information, making it effective for protecting data in transit or at rest. Choosing between tokenization and encryption depends on data use cases, with tokenization often preferred for minimizing stored sensitive data and encryption for securing data across various platforms.

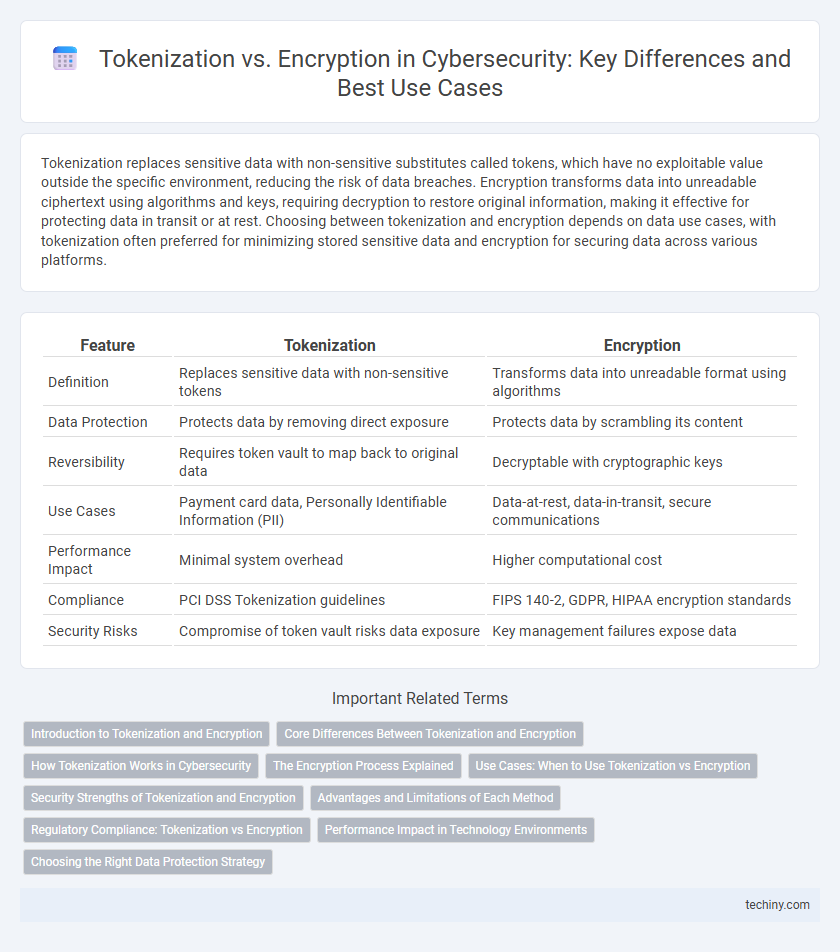

Table of Comparison

| Feature | Tokenization | Encryption |
|---|---|---|
| Definition | Replaces sensitive data with non-sensitive tokens | Transforms data into unreadable format using algorithms |
| Data Protection | Protects data by removing direct exposure | Protects data by scrambling its content |
| Reversibility | Requires token vault to map back to original data | Decryptable with cryptographic keys |
| Use Cases | Payment card data, Personally Identifiable Information (PII) | Data-at-rest, data-in-transit, secure communications |
| Performance Impact | Minimal system overhead | Higher computational cost |
| Compliance | PCI DSS Tokenization guidelines | FIPS 140-2, GDPR, HIPAA encryption standards |
| Security Risks | Compromise of token vault risks data exposure | Key management failures expose data |

Introduction to Tokenization and Encryption

Tokenization replaces sensitive data elements with non-sensitive equivalents, or tokens, that have no exploitable meaning, enhancing data security by limiting exposure of original information. Encryption transforms data into unreadable ciphertext using algorithms and keys, ensuring confidentiality and protection during transmission and storage. Both methods serve crucial roles in cybersecurity, with tokenization often preferred for compliance and minimizing data breach risks, while encryption remains essential for securing data in transit and at rest.

Core Differences Between Tokenization and Encryption

Tokenization replaces sensitive data with non-sensitive equivalents called tokens, which have no exploitable meaning or value outside the specific system, whereas encryption transforms data into a coded format that requires a key to decode. Because downstream systems handle only tokens, the original data needs protecting only inside the token vault rather than throughout the transaction process, which significantly reduces breach risk and compliance scope under regulations like PCI DSS. Encrypted data, by contrast, still contains the original information in a form that anyone holding the key can recover, making key management and potential decryption vulnerabilities critical challenges.

How Tokenization Works in Cybersecurity

Tokenization in cybersecurity replaces sensitive data elements with non-sensitive equivalents called tokens, which can retain the original format but have no exploitable value outside the specific system. Unlike ciphertext, a token is not mathematically derived from the original value, so no key can reverse it; the mapping exists only in a centralized token vault, where the original data is stored securely. This process reduces the risk of data breaches by minimizing the exposure of sensitive information during transactions and storage, provided the vault itself is tightly protected.
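The vault lookup described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `TokenVault` class, its in-memory dictionaries, and the choice to keep the last four digits visible are all assumptions made for the example. A real vault would be a hardened, access-controlled service.

```python
import secrets


class TokenVault:
    """Toy in-memory vault (hypothetical); maps tokens to original values."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, card_number: str) -> str:
        # Reuse the existing token so one card always maps to one token.
        if card_number in self._value_to_token:
            return self._value_to_token[card_number]
        while True:
            # Random digits replace everything except the last four, so the
            # token keeps the length and character set of the original but
            # is not derived from it.
            random_part = "".join(
                secrets.choice("0123456789")
                for _ in range(len(card_number) - 4)
            )
            token = random_part + card_number[-4:]
            if token not in self._token_to_value and token != card_number:
                break
        self._token_to_value[token] = card_number
        self._value_to_token[card_number] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only a caller with access to the vault can recover the original.
        return self._token_to_value[token]


vault = TokenVault()
pan = "4111111111111111"  # well-known test card number, not a real account
token = vault.tokenize(pan)
print(token)                          # random digits followed by "1111"
print(vault.detokenize(token) == pan)
```

A system that stores only `token` holds nothing an attacker can reverse without also reaching the vault, which is the property the paragraph above relies on.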

The Encryption Process Explained

Encryption transforms sensitive data into an unreadable format using algorithms and cryptographic keys, ensuring confidentiality during transmission or storage. The encryption process converts plaintext into ciphertext through symmetric cryptography, where the same key encrypts and decrypts, or asymmetric cryptography, where a public key encrypts and a private key decrypts, so that only parties holding the correct key can recover the information. Strong encryption standards like AES (symmetric) and RSA (asymmetric) protect data from unauthorized access, safeguarding against cyber threats and data breaches.
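To make the plaintext, ciphertext, and key relationship concrete, here is a small Python sketch using only the standard library. The standard library ships neither AES nor RSA, so the symmetric half swaps in a one-time pad (XOR with a random key as long as the message) and the asymmetric half uses textbook RSA with tiny primes. Both are teaching toys chosen for this example and must not be used to protect real data; the helper name `xor_bytes` is hypothetical.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each data byte with the matching key byte (one-time pad)."""
    if len(key) != len(data):
        raise ValueError("one-time pad key must match the message length")
    return bytes(d ^ k for d, k in zip(data, key))


# Symmetric: the same key encrypts and decrypts.
plaintext = b"card=4111111111111111"
key = secrets.token_bytes(len(plaintext))
ciphertext = xor_bytes(plaintext, key)   # unreadable without the key
recovered = xor_bytes(ciphertext, key)   # applying the key again restores it

# Asymmetric: textbook RSA with deliberately tiny primes (insecure).
p, q = 61, 53
n = p * q                      # public modulus
phi = (p - 1) * (q - 1)
e = 17                         # public exponent
d = pow(e, -1, phi)            # private exponent (needs Python 3.8+)

message = 65
rsa_ciphertext = pow(message, e, n)        # encrypt with the public key
rsa_recovered = pow(rsa_ciphertext, d, n)  # decrypt with the private key

print(recovered == plaintext)
print(rsa_recovered == message)
```

In both halves the original data is fully recoverable by whoever holds the right key, which is why key management carries so much weight in an encryption-based design.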

Use Cases: When to Use Tokenization vs Encryption

Tokenization is ideal for protecting sensitive data in payment processing and healthcare records by replacing data with non-sensitive substitutes, reducing the risk of breaches without the need for complex cryptographic operations. Encryption is best suited for securing data in transit and at rest, such as emails, cloud storage, and network communications, ensuring that only authorized parties can access the original information using cryptographic keys. Choosing between tokenization and encryption depends on whether the primary goal is to minimize the scope of compliance (tokenization) or to maintain data confidentiality and integrity across various platforms (encryption).

Security Strengths of Tokenization and Encryption

Tokenization enhances data security by replacing sensitive information with non-sensitive tokens, effectively minimizing exposure and reducing the risk of data breaches in payment systems and personal data storage. Encryption secures data through complex algorithms that convert plaintext into ciphertext, safeguarding information during transmission and storage by making it unreadable without the decryption key. Both methods provide robust protection, with tokenization excelling in limiting data scope and encryption ensuring confidentiality and integrity across diverse cybersecurity applications.

Advantages and Limitations of Each Method

Tokenization offers the advantage of reducing the scope of sensitive data exposure by replacing it with non-sensitive placeholders, which minimizes compliance burdens and lowers breach risks. Encryption protects data by converting it into unreadable code, allowing secure storage and transmission, but requires complex key management and can impact system performance. While tokenization is highly effective for specific data like payment card information, encryption remains essential for protecting broader data sets across diverse security environments.

Regulatory Compliance: Tokenization vs Encryption

Tokenization replaces sensitive data with non-sensitive equivalents, reducing the scope of regulatory requirements like PCI DSS by limiting the number of systems that handle the actual data. Encryption transforms data into an unreadable format using algorithms, necessitating strict key management to satisfy regulations such as GDPR and HIPAA. Regulatory compliance benefits from tokenization's minimized data footprint, while encryption demands rigorous controls to protect cryptographic keys and encrypted data.
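The scope reduction can be pictured with a toy inventory. The sketch below assumes a simplified rule in which a system is in scope only if it stores, processes, or transmits real card numbers. The system names and the `System` and `in_scope` helpers are hypothetical, and actual PCI DSS scoping is determined with an assessor, not by a filter like this.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    handles_card_numbers: bool  # stores, processes, or transmits real PANs


def in_scope(systems):
    """Return the names of systems that touch real card numbers."""
    return [s.name for s in systems if s.handles_card_numbers]


# Before tokenization: every system keeps the real card number.
before = [
    System("checkout", True),
    System("orders-db", True),
    System("analytics", True),
]

# After tokenization: checkout hands the card to the vault, and the
# downstream systems store only tokens.
after = [
    System("checkout", True),
    System("token-vault", True),
    System("orders-db", False),
    System("analytics", False),
]

print(in_scope(before))  # ['checkout', 'orders-db', 'analytics']
print(in_scope(after))   # ['checkout', 'token-vault']
```

The vault itself remains in scope, which matches the point that tokenization concentrates the sensitive data and does not eliminate it.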

Performance Impact in Technology Environments

Tokenization can reduce the processing load on application systems compared to encryption, because those systems handle only tokens and perform no cryptographic operations themselves. Encryption relies on computationally intensive algorithms for data transformation and adds key management work, which can slow transaction speeds and increase latency in high-volume environments. Tokenization offloads data protection to specialized token vaults, which can improve efficiency in large-scale technology deployments, although every tokenize or detokenize request then depends on a lookup against the vault.

Choosing the Right Data Protection Strategy

Tokenization replaces sensitive data with non-sensitive equivalents, minimizing exposure by keeping the original values in a separate vault, while encryption transforms data into unreadable ciphertext requiring keys for access. Selecting the right data protection strategy depends on the specific use case, regulatory requirements like PCI DSS, and performance considerations; tokenization excels in reducing breach impact without affecting database structure, since tokens can keep the original format, whereas encryption offers robust protection for data in transit and at rest. Organizations must evaluate risk tolerance, compliance obligations, and system integration complexity to implement the most effective approach for safeguarding sensitive information.

techiny.com
