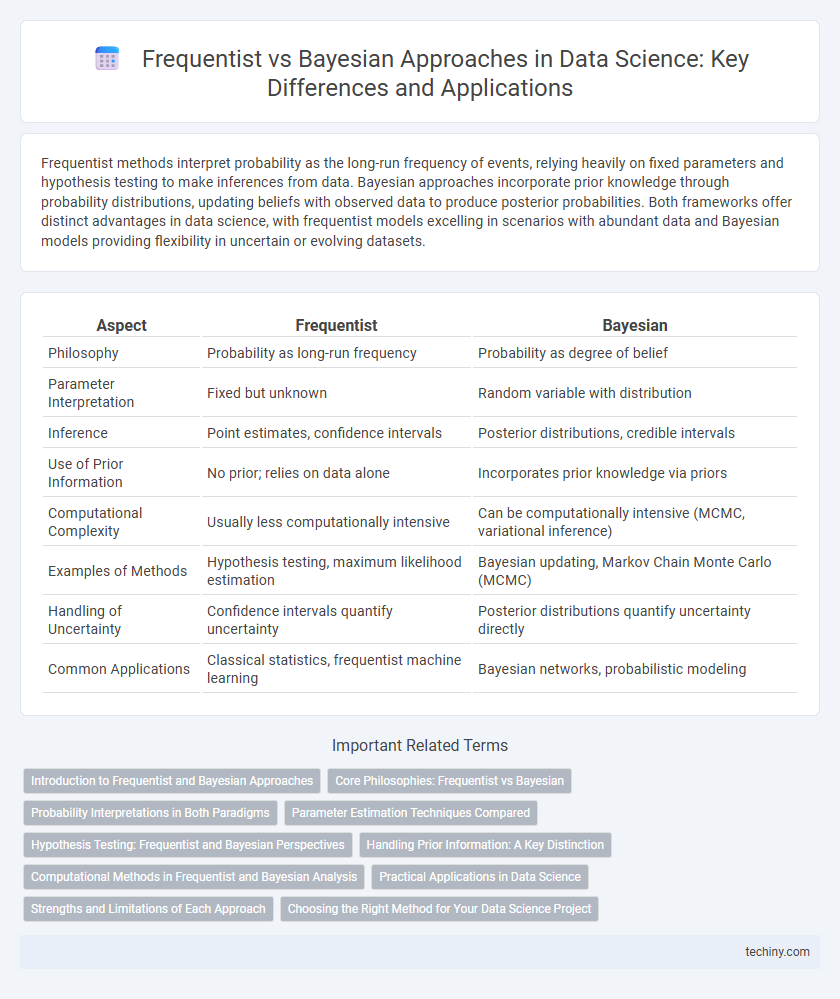

Frequentist methods interpret probability as the long-run frequency of events, relying heavily on fixed parameters and hypothesis testing to make inferences from data. Bayesian approaches incorporate prior knowledge through probability distributions, updating beliefs with observed data to produce posterior probabilities. Both frameworks offer distinct advantages in data science, with frequentist models excelling in scenarios with abundant data and Bayesian models providing flexibility in uncertain or evolving datasets.

Table of Comparison

| Aspect | Frequentist | Bayesian |

|---|---|---|

| Philosophy | Probability as long-run frequency | Probability as degree of belief |

| Parameter Interpretation | Fixed but unknown | Random variable with distribution |

| Inference | Point estimates, confidence intervals | Posterior distributions, credible intervals |

| Use of Prior Information | No prior; relies on data alone | Incorporates prior knowledge via priors |

| Computational Complexity | Usually less computationally intensive | Can be computationally intensive (MCMC, variational inference) |

| Examples of Methods | Hypothesis testing, maximum likelihood estimation | Bayesian updating, Markov Chain Monte Carlo (MCMC) |

| Handling of Uncertainty | Confidence intervals quantify uncertainty | Posterior distributions quantify uncertainty directly |

| Common Applications | Classical statistics, frequentist machine learning | Bayesian networks, probabilistic modeling |

Introduction to Frequentist and Bayesian Approaches

Frequentist and Bayesian approaches provide distinct frameworks for statistical inference, with frequentist methods relying on long-run frequency properties of estimators and hypothesis tests. Bayesian inference incorporates prior knowledge through probability distributions, updating beliefs with observed data using Bayes' theorem. This fundamental difference influences how uncertainty is quantified and decisions are made in data science applications.

Core Philosophies: Frequentist vs Bayesian

Frequentist statistics interprets probability as the long-run frequency of events, emphasizing objective analysis through repeated sampling and fixed parameters. Bayesian statistics treats probability as a degree of belief, updating prior knowledge with observed data via Bayes' theorem to derive posterior distributions. These core philosophical differences influence model interpretation, uncertainty quantification, and decision-making approaches in data science applications.

Probability Interpretations in Both Paradigms

Frequentist probability interprets probabilities as long-run frequencies of events occurring in repeated trials, emphasizing objective, data-driven inference without subjective prior beliefs. In contrast, Bayesian probability represents a degree of belief or uncertainty, updating prior knowledge with new evidence through Bayes' theorem to produce posterior probabilities. This fundamental difference shapes how each paradigm handles uncertainty, hypothesis testing, and decision-making in data science applications.

Parameter Estimation Techniques Compared

Frequentist parameter estimation relies on maximum likelihood estimation (MLE) to find point estimates by maximizing the probability of observed data, assuming fixed parameters. Bayesian parameter estimation integrates prior distributions with likelihood functions to produce posterior distributions, capturing uncertainty in parameter values. Compared to frequentist methods, Bayesian techniques provide full probabilistic characterization, enabling direct probabilistic inference and more flexible modeling in complex data scenarios.

Hypothesis Testing: Frequentist and Bayesian Perspectives

Frequentist hypothesis testing relies on p-values and fixed significance levels to determine whether to reject a null hypothesis, emphasizing long-run frequency properties. Bayesian hypothesis testing incorporates prior distributions and updates beliefs through posterior probabilities, offering a probabilistic interpretation of hypotheses. This Bayesian framework enables direct probability statements about hypotheses, contrasting with the frequentist approach's binary accept-reject decisions.

Handling Prior Information: A Key Distinction

Frequentist methods do not incorporate prior information, relying solely on observed data to estimate parameters, which limits their adaptability in contexts with scarce data. Bayesian approaches explicitly integrate prior knowledge through prior probability distributions, allowing for more flexible and informed inference. This fundamental difference in handling prior information critically impacts model accuracy and decision-making in data science applications.

Computational Methods in Frequentist and Bayesian Analysis

Frequentist computational methods primarily rely on maximum likelihood estimation and bootstrapping techniques to infer parameters from data, emphasizing long-run frequency properties. Bayesian analysis employs Markov Chain Monte Carlo (MCMC) algorithms and variational inference to approximate posterior distributions, integrating prior information with observed data. Advances in computational power and algorithmic efficiency have significantly enhanced the scalability and accuracy of both approaches in complex statistical modeling.

Practical Applications in Data Science

Frequentist methods are widely used in hypothesis testing and confidence interval estimation, providing clear, data-driven conclusions without relying on prior distributions. Bayesian approaches excel in dynamic models and real-time data analysis, updating probabilistic beliefs as new data arrives, which enhances predictive accuracy. In practice, combining Frequentist and Bayesian techniques allows data scientists to balance interpretability and flexibility in diverse applications such as A/B testing, recommendation systems, and machine learning model evaluation.

Strengths and Limitations of Each Approach

Frequentist methods provide objective, long-run frequency interpretations of probability, excelling in hypothesis testing and confidence interval estimation with large sample sizes, but they struggle with incorporating prior knowledge and updating beliefs. Bayesian approaches offer flexibility by combining prior information with observed data to produce probabilistic inferences, making them powerful for complex models and small samples, though they can be computationally intensive and sensitive to prior assumptions. Both frameworks contribute uniquely to data science, with frequentist approaches favored for standardized testing and Bayesian techniques preferred for adaptive learning and decision-making under uncertainty.

Choosing the Right Method for Your Data Science Project

Frequentist methods rely on long-run frequencies and fixed parameters, making them suitable for large datasets with well-defined sampling processes in data science projects. Bayesian approaches incorporate prior knowledge and update probabilities as new data emerges, offering flexibility in dynamic environments and smaller sample sizes. Selecting the right method depends on project objectives, available data, computational resources, and the need for interpretability in probabilistic inference.

frequentist vs Bayesian Infographic

techiny.com

techiny.com