On-chip memory offers faster access speeds and lower latency due to its proximity to the processor, enhancing overall system performance and reducing power consumption. Off-chip memory provides significantly larger storage capacity but incurs higher latency and increased power usage because of longer data transfer distances. Balancing the use of on-chip and off-chip memory is crucial in hardware engineering to optimize speed, efficiency, and cost in system design.

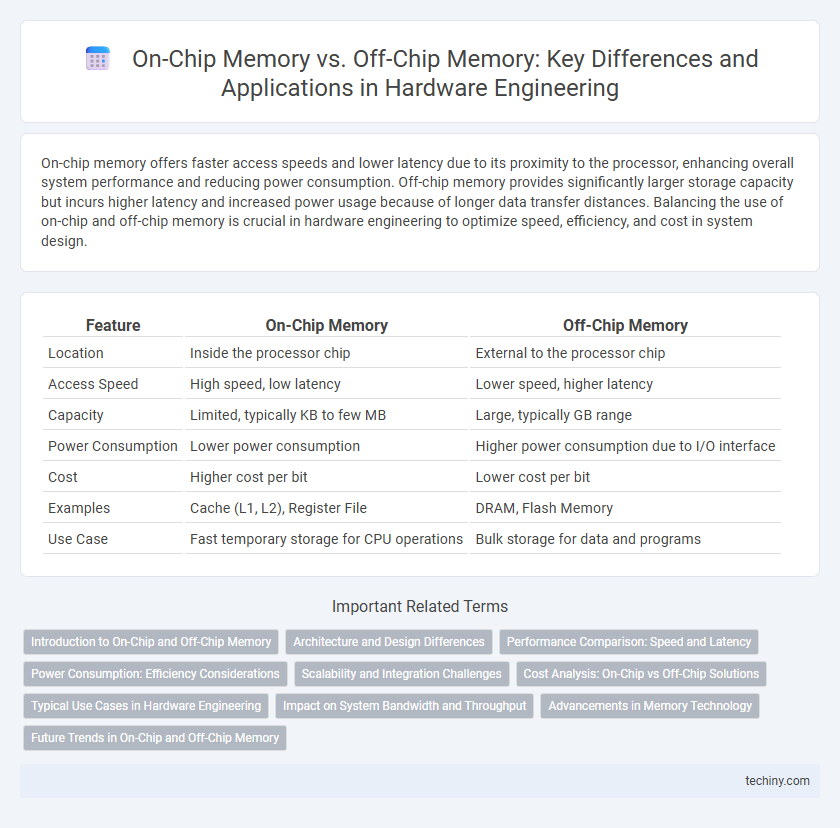

Table of Comparison

| Feature | On-Chip Memory | Off-Chip Memory |
|---|---|---|
| Location | Inside the processor chip | External to the processor chip |
| Access Speed | High speed, low latency | Lower speed, higher latency |
| Capacity | Limited, typically KB to tens of MB | Large, typically GB range |
| Power Consumption | Lower energy per access | Higher energy per access due to the I/O interface |
| Cost | Higher cost per bit | Lower cost per bit |
| Examples | Register file, caches (L1, L2, L3) | DRAM, flash memory |
| Use Case | Fast temporary storage for CPU operations | Bulk storage for data and programs |

Introduction to On-Chip and Off-Chip Memory

On-chip memory refers to the integrated memory located directly within the processor chip, typically offering faster access times and lower latency due to its proximity to the CPU cores. Off-chip memory, such as DRAM modules, is situated outside the processor die and connected via external buses, providing larger storage capacity but incurring higher latency and increased power consumption. Balancing on-chip cache sizes with off-chip memory bandwidth is critical in hardware engineering to optimize system performance and efficiency.

Architecture and Design Differences

On-chip memory is integrated directly on the processor's semiconductor die, giving it faster access and lower latency than off-chip memory, which resides in separate packages and is reached over an external bus. Because on-chip memory competes with logic for die area and power, its design favors small, power-efficient arrays placed close to the processor, whereas off-chip memory designs prioritize large capacity and scalability. The central design trade-off is between the speed that on-chip proximity provides and the capacity, flexibility, and cost-effectiveness of off-chip memory.

Performance Comparison: Speed and Latency

On-chip memory offers significantly faster access speeds and lower latency compared to off-chip memory due to its close proximity to the processor and integration within the same silicon die. Off-chip memory, such as DRAM, suffers from higher latency caused by longer electrical pathways and bus contention, impacting real-time processing efficiency. The high bandwidth and minimal latency of on-chip memory make it essential for cache storage, whereas off-chip memory is suited for larger capacity requirements despite slower performance.
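The latency gap can be quantified with the standard average memory access time (AMAT) formula, AMAT = hit time + miss rate × miss penalty, applied level by level. The Python sketch below assumes two on-chip cache levels backed by off-chip DRAM; the function names and every latency and miss-rate figure are hypothetical placeholders, not measurements of any real processor.

```python
def amat(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time: hit time plus the miss-weighted penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


def amat_two_level(l1_hit_ns: float, l1_miss_rate: float,
                   l2_hit_ns: float, l2_local_miss_rate: float,
                   dram_latency_ns: float) -> float:
    """AMAT for on-chip L1 and L2 caches backed by off-chip DRAM.

    The L2 miss rate is the local rate (misses per L2 access), so the
    L1 miss penalty is simply the AMAT of the L2-plus-DRAM subsystem.
    """
    l2_amat = amat(l2_hit_ns, l2_local_miss_rate, dram_latency_ns)
    return amat(l1_hit_ns, l1_miss_rate, l2_amat)


if __name__ == "__main__":
    # Hypothetical figures chosen only to illustrate the arithmetic.
    dram_ns = 80.0
    with_caches = amat_two_level(1.0, 0.05, 4.0, 0.20, dram_ns)
    print(f"AMAT with on-chip caches: {with_caches:.1f} ns")  # 1 + 0.05 * (4 + 0.2 * 80)
    print(f"AMAT going straight to off-chip DRAM: {dram_ns:.1f} ns")
```

Even with modest hit rates, the on-chip levels absorb most accesses, so the average latency stays far closer to the on-chip hit time than to the DRAM latency.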

Power Consumption: Efficiency Considerations

On-chip memory consumes significantly less energy per access than off-chip memory because data travels shorter distances and drives smaller capacitive loads, which improves the overall energy efficiency of an integrated circuit. Off-chip memory must drive signals across package pins, board traces, and the I/O interfaces at both ends, increasing both dynamic power consumption and latency. Keeping frequently accessed data in on-chip memory can therefore substantially reduce the power budget of portable and energy-sensitive hardware.
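A simple way to see the effect of allocation on energy is to weight each access by its cost. The sketch below assumes fixed, hypothetical per-access energies (the 5 pJ and 500 pJ values are illustrative placeholders, not datasheet figures) and computes the total memory energy of a workload as the fraction of accesses served on-chip changes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEnergy:
    """Per-access energy costs in picojoules (hypothetical placeholders)."""
    on_chip_pj: float
    off_chip_pj: float


def workload_energy_uj(total_accesses: int, on_chip_fraction: float,
                       energy: AccessEnergy) -> float:
    """Total memory-access energy in microjoules for a given on-chip fraction."""
    if not 0.0 <= on_chip_fraction <= 1.0:
        raise ValueError("on_chip_fraction must be between 0 and 1")
    on_chip = total_accesses * on_chip_fraction
    off_chip = total_accesses - on_chip
    total_pj = on_chip * energy.on_chip_pj + off_chip * energy.off_chip_pj
    return total_pj * 1e-6  # 1 uJ = 1e6 pJ


if __name__ == "__main__":
    # Illustrative only: assumes an off-chip access costs 100x an on-chip one.
    e = AccessEnergy(on_chip_pj=5.0, off_chip_pj=500.0)
    for fraction in (0.5, 0.9, 0.99):
        uj = workload_energy_uj(10_000_000, fraction, e)
        print(f"{fraction:.0%} of accesses on-chip: {uj:.1f} uJ")
```

Because the off-chip term dominates, raising the on-chip fraction from 90% to 99% cuts total energy far more than the 9-point change might suggest.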

Scalability and Integration Challenges

On-chip memory sits close to the processing units, which gives it fast access and low latency, but it scales poorly: large on-chip arrays are limited by silicon area and power consumption, posing significant design challenges. Off-chip memory scales more easily to large capacities but suffers from higher latency and adds complexity in signal integrity and board-level integration.
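The area constraint follows from the fact that an SRAM array's size grows linearly with its bit count. The rough model below, a sketch under stated assumptions, multiplies the number of bits by a bit-cell area and adds a fractional overhead for periphery (decoders, sense amplifiers, I/O). The 0.03 µm² cell area and 50% overhead are hypothetical values, not figures for any particular process.

```python
def sram_area_mm2(capacity_bytes: int, cell_area_um2: float,
                  periphery_overhead: float) -> float:
    """Rough SRAM macro area: bit-cell array plus a fractional periphery overhead."""
    if capacity_bytes < 0 or cell_area_um2 <= 0 or periphery_overhead < 0:
        raise ValueError("inputs must be non-negative (cell area positive)")
    bits = capacity_bytes * 8
    array_um2 = bits * cell_area_um2
    return array_um2 * (1.0 + periphery_overhead) / 1e6  # 1 mm^2 = 1e6 um^2


if __name__ == "__main__":
    # Hypothetical process parameters, for illustration only.
    CELL_UM2 = 0.03
    OVERHEAD = 0.5
    for mib in (1, 8, 32, 128):
        area = sram_area_mm2(mib * 2**20, CELL_UM2, OVERHEAD)
        print(f"{mib:>4} MiB of on-chip SRAM ~ {area:7.1f} mm^2")
```

Each doubling of on-chip capacity doubles the die area it consumes, which is why large working sets are pushed off-chip rather than onto ever-larger dies.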

Cost Analysis: On-Chip vs Off-Chip Solutions

On-chip memory offers lower latency and higher bandwidth at the expense of increased silicon area and manufacturing costs, impacting the overall chip price. Off-chip memory solutions, such as DRAM or SRAM modules, provide larger capacities with reduced on-die area requirements but incur higher system-level costs due to package, board space, and interface complexities. Cost optimization in hardware design must balance on-chip memory's premium silicon cost against off-chip memory's integration and interface expenses to achieve performance and budget targets.
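The balance described above can be framed as a crossover: on-chip cost rises steeply with capacity (silicon area), while off-chip cost has a fixed interface, package, and board component plus a cheap per-bit slope. The sketch below is a deliberately simplified linear model; all parameter names and dollar figures are hypothetical and ignore yield, packaging tiers, and volume pricing.

```python
def on_chip_cost(capacity_mb: float, area_mm2_per_mb: float,
                 cost_per_mm2: float) -> float:
    """Silicon cost of an on-chip array: area scales linearly with capacity."""
    return capacity_mb * area_mm2_per_mb * cost_per_mm2


def off_chip_cost(capacity_mb: float, cost_per_mb: float,
                  fixed_interface_cost: float) -> float:
    """External memory cost: fixed interface/package/board cost plus cheap bits."""
    return fixed_interface_cost + capacity_mb * cost_per_mb


def crossover_mb(area_mm2_per_mb: float, cost_per_mm2: float,
                 cost_per_mb: float, fixed_interface_cost: float) -> float:
    """Capacity above which off-chip memory becomes the cheaper option."""
    on_chip_slope = area_mm2_per_mb * cost_per_mm2
    if on_chip_slope <= cost_per_mb:
        return float("inf")  # on-chip never loses on cost under these inputs
    return fixed_interface_cost / (on_chip_slope - cost_per_mb)


if __name__ == "__main__":
    # Hypothetical inputs: 0.4 mm^2/MB, $0.10/mm^2, $0.003/MB DRAM, $2 fixed.
    x = crossover_mb(0.4, 0.10, 0.003, 2.0)
    print(f"Off-chip memory becomes cheaper above ~{x:.0f} MB")
```

Below the crossover the fixed off-chip overhead dominates; above it, the on-chip silicon premium does.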

Typical Use Cases in Hardware Engineering

On-chip memory is primarily used for caches, register files, and small buffers because its low latency and high bandwidth provide the fast data access that performance-sensitive tasks in processors and FPGA designs require. Off-chip memory, typically DRAM such as DDR SDRAM, serves as main memory for large datasets and buffers whose size exceeds on-chip limits, and is common in embedded systems, GPUs, and general-purpose computing platforms. Hardware engineers therefore reserve on-chip memory for speed-critical control and computation and rely on off-chip memory for bulk data and extended storage.
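When a design has a software-managed on-chip buffer (a scratchpad or FPGA block RAM), engineers must decide which data structures go on-chip. One common heuristic, shown here as a sketch, is greedy placement by access density (accesses per byte). This is a heuristic, not an optimal 0/1 knapsack solution, and the buffer names and access counts below are hypothetical profiling data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Buffer:
    name: str
    size_bytes: int
    accesses: int  # profiled access count (hypothetical in the example)


def place_buffers(buffers: list[Buffer],
                  on_chip_capacity: int) -> tuple[list[str], list[str]]:
    """Greedily fill on-chip memory with the most access-dense buffers first."""
    if any(b.size_bytes <= 0 for b in buffers):
        raise ValueError("buffer sizes must be positive")
    on_chip: list[str] = []
    off_chip: list[str] = []
    remaining = on_chip_capacity
    # Highest accesses-per-byte first: these save the most off-chip traffic per byte used.
    for b in sorted(buffers, key=lambda b: b.accesses / b.size_bytes, reverse=True):
        if b.size_bytes <= remaining:
            on_chip.append(b.name)
            remaining -= b.size_bytes
        else:
            off_chip.append(b.name)
    return on_chip, off_chip


if __name__ == "__main__":
    buffers = [
        Buffer("coeffs", 4 * 1024, 1_000_000),
        Buffer("line_buf", 16 * 1024, 800_000),
        Buffer("frame", 8 * 2**20, 2_000_000),
        Buffer("lut", 2 * 1024, 50_000),
    ]
    on, off = place_buffers(buffers, on_chip_capacity=32 * 1024)
    print("on-chip:", on)
    print("off-chip:", off)
```

The large frame buffer is accessed most often in absolute terms but has the lowest density, so it lands in off-chip DRAM while the small, hot buffers stay on-chip.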

Impact on System Bandwidth and Throughput

On-chip memory provides lower latency and higher bandwidth due to its close proximity to processing cores, significantly boosting system throughput by reducing data transfer delays. Off-chip memory, while offering larger capacity, incurs increased latency and limited bandwidth through external buses, which can bottleneck overall system performance. Optimizing the balance between on-chip cache and off-chip DRAM access is crucial for maximizing bandwidth efficiency and achieving higher computational throughput in hardware design.
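The roofline model is a standard way to reason about this bottleneck: attainable performance is the lesser of the peak compute rate and memory bandwidth × arithmetic intensity (FLOPs per byte moved). The sketch below compares a kernel fed from a hypothetical off-chip interface with the same kernel fed from a hypothetical on-chip buffer; the machine parameters are illustrative assumptions only.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float,
                      arithmetic_intensity: float) -> float:
    """Roofline bound: performance is capped by compute or by memory bandwidth."""
    return min(peak_gflops, bandwidth_gbs * arithmetic_intensity)


def ridge_point(peak_gflops: float, bandwidth_gbs: float) -> float:
    """Arithmetic intensity (FLOP/byte) at which a kernel becomes compute-bound."""
    return peak_gflops / bandwidth_gbs


if __name__ == "__main__":
    # Hypothetical machine: same compute peak, two memory levels.
    PEAK = 1000.0        # GFLOP/s
    OFF_CHIP_BW = 50.0   # GB/s, an assumed external DRAM interface
    ON_CHIP_BW = 1000.0  # GB/s, an assumed on-chip SRAM buffer
    for ai in (0.25, 4.0, 32.0):
        off = attainable_gflops(PEAK, OFF_CHIP_BW, ai)
        on = attainable_gflops(PEAK, ON_CHIP_BW, ai)
        print(f"AI={ai:>5} FLOP/B  off-chip: {off:7.1f}  on-chip: {on:7.1f} GFLOP/s")
    print("ridge point (off-chip):", ridge_point(PEAK, OFF_CHIP_BW), "FLOP/B")
```

Kernels with intensity below the ridge point are bandwidth-bound, which is exactly where serving data from on-chip memory raises throughput.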

Advancements in Memory Technology

Recent advancements in memory technology have significantly improved the performance of both on-chip and off-chip memory in hardware engineering. On-chip memory, such as SRAM and embedded DRAM, benefits from increased density and lower latency through advanced lithography and 3D stacking techniques, enabling faster data access within processors. Off-chip memory technologies like DDR5 and HBM2 offer higher bandwidth and reduced power consumption, supporting large-scale data processing and enhancing overall system efficiency in modern computing architectures.
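The bandwidth difference between DDR5 and HBM2 comes largely from interface width: theoretical peak bandwidth is the per-pin data rate times the bus width. The sketch below assumes DDR5-4800 on a 64-bit DIMM data path (DDR5 splits this into two 32-bit subchannels; ECC bits are not counted) and one HBM2 stack with a 1024-bit interface at an assumed 2.0 Gb/s per pin. These are nominal peaks; sustained bandwidth is lower because of refresh, bank conflicts, and bus turnaround.

```python
def peak_bandwidth_gbs(data_rate_mtps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s from transfer rate (MT/s) and bus width."""
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mtps * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    # Assumed configurations; real speed grades and pin rates vary.
    ddr5 = peak_bandwidth_gbs(4800, 64)    # one DDR5-4800 DIMM
    hbm2 = peak_bandwidth_gbs(2000, 1024)  # one HBM2 stack at 2.0 Gb/s per pin
    print(f"DDR5-4800, 64-bit: {ddr5:.1f} GB/s")
    print(f"HBM2 stack, 1024-bit: {hbm2:.1f} GB/s")
```

HBM reaches its bandwidth with a very wide, moderately clocked interface made practical by stacking next to the processor, rather than by raising per-pin speed.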

Future Trends in On-Chip and Off-Chip Memory

Emerging trends in on-chip memory emphasize greater integration of non-volatile memory technologies such as MRAM and ReRAM, aiming to reduce latency and power consumption while increasing storage density. Off-chip memory advancements focus on high-bandwidth memory (HBM) and DDR5 improvements that support faster data transfer rates and enhanced energy efficiency for large-scale data processing tasks. The convergence of chiplet architectures and 3D stacking technologies further drives innovation in balancing on-chip and off-chip memory performance for next-generation hardware systems.

On-Chip Memory vs Off-Chip Memory Infographic

techiny.com
