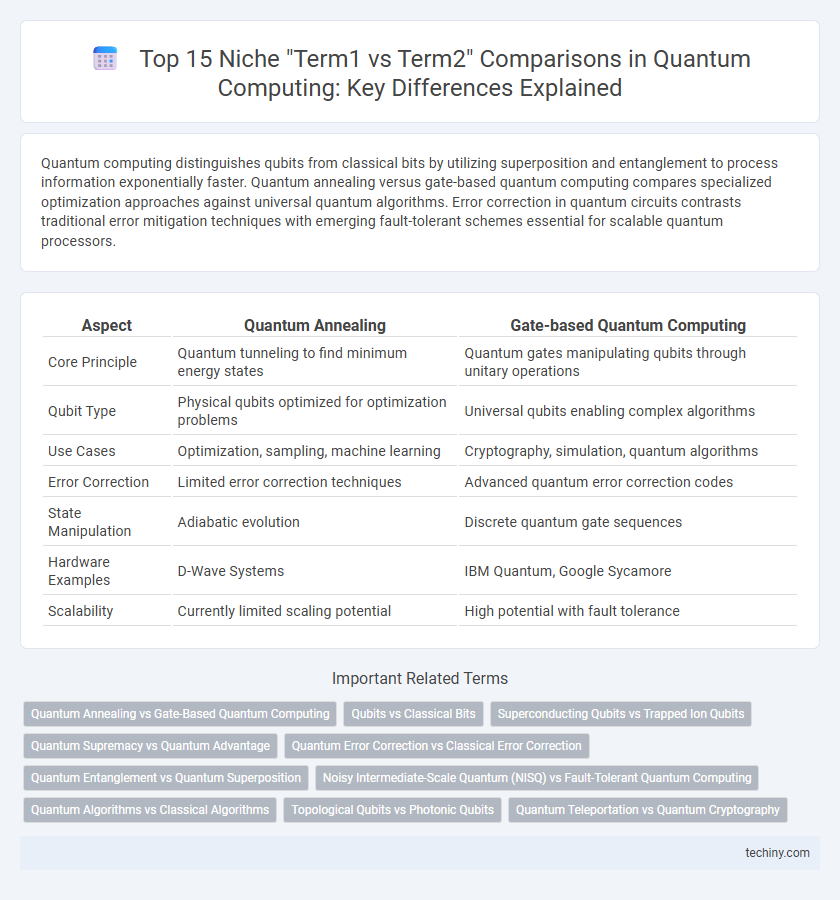

Quantum computing replaces classical bits with qubits, which use superposition and entanglement to process information in ways that can solve certain problems dramatically faster, in some cases exponentially faster, than classical computers. Comparing quantum annealing with gate-based quantum computing contrasts a specialized optimization approach with a model that supports universal quantum algorithms. In quantum circuits, error correction contrasts today's error mitigation techniques with the emerging fault-tolerant schemes that scalable quantum processors will require.

Table of Comparison

| Aspect | Quantum Annealing | Gate-based Quantum Computing |
|---|---|---|
| Core Principle | Quantum fluctuations and tunneling used to reach minimum-energy states | Quantum gates manipulating qubits through unitary operations |
| Qubit Type | Physical qubits coupled to encode optimization problems | Qubits controlled by a universal gate set, enabling complex algorithms |
| Use Cases | Optimization, sampling, machine learning | Cryptanalysis (e.g., Shor's algorithm), quantum simulation, general-purpose quantum algorithms |
| Error Correction | Limited error correction techniques | Quantum error correction codes (e.g., surface codes) |
| State Manipulation | Adiabatic evolution | Discrete quantum gate sequences |
| Hardware Examples | D-Wave Systems | IBM Quantum, Google Sycamore |
| Scalability | Thousands of qubits today, but limited connectivity and no established path to fault tolerance | High long-term potential, contingent on fault-tolerant error correction |

Quantum Annealing vs Gate-Based Quantum Computing

Quantum Annealing uses quantum fluctuations to search for the lowest-energy state (ideally the global minimum) of an optimization problem by slowly evolving a system's Hamiltonian, while Gate-Based Quantum Computing manipulates qubits with discrete quantum logic gates to run universal quantum algorithms. Quantum Annealing targets combinatorial optimization tasks and is implemented in specialized hardware such as D-Wave systems, whereas Gate-Based architectures, such as IBM's superconducting qubits and trapped-ion processors, offer the versatility needed for general algorithmic processing. The two approaches differ in their effective problem domains, coherence requirements, and error correction mechanisms, which makes Quantum Annealing better suited to heuristic optimization and Gate-Based computing to broader, eventually fault-tolerant quantum computation.
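Quantum annealers target problems written as an Ising (or equivalent QUBO) energy function. The sketch below does not simulate annealing. It defines a toy Ising energy with hypothetical local fields `h` and couplings `J` and finds the ground state by brute force, which shows what an annealer is searching for and why exhaustive search stops being feasible as the number of configurations (2^n) grows.

```python
from itertools import product

# Toy Ising model (hypothetical values): local fields h[i] and couplings J[(i, j)].
# Quantum annealers are designed to find low-energy states of energy functions of this form.

def ising_energy(spins, h, J):
    """Ising energy E(s) = sum_i h_i s_i + sum_{(i, j)} J_ij s_i s_j, with each s_i in {-1, +1}."""
    energy = sum(h[i] * s for i, s in enumerate(spins))
    energy += sum(coupling * spins[i] * spins[j] for (i, j), coupling in J.items())
    return energy

def brute_force_ground_state(h, J):
    """Enumerate all 2^n spin configurations and return (lowest energy, configuration).

    Only feasible for tiny n; an annealer tackles this search physically instead.
    """
    n = len(h)
    best = min(product((-1, 1), repeat=n), key=lambda s: ising_energy(s, h, J))
    return ising_energy(best, h, J), best

# Three spins with antiferromagnetic couplings (J > 0 favors neighboring spins pointing opposite ways)
h = [0.5, 0.0, 0.0]
J = {(0, 1): 1.0, (1, 2): 1.0}
energy, spins = brute_force_ground_state(h, J)
print(energy, spins)  # -2.5 (-1, 1, -1)
```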

Qubits vs Classical Bits

Classical bits represent data as either 0 or 1. A qubit can instead occupy a superposition of 0 and 1, described by two complex amplitudes, and measuring it returns 0 or 1 with probabilities given by the squared magnitudes of those amplitudes. Because n qubits are described by 2^n amplitudes that entanglement can correlate, quantum algorithms can use interference among these amplitudes to solve certain problems much faster than classical computers, in some cases exponentially faster. However, qubits suffer from error rates and short coherence times, which remain critical challenges compared with the stability and reliability of classical bits in conventional computing systems.
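A single qubit can be modeled as a pair of complex amplitudes. This minimal sketch (function names are illustrative) applies a Hadamard gate to |0⟩ to create an equal superposition, computes the measurement probabilities with the Born rule, and counts how many amplitudes an n-qubit register needs.

```python
import math

# A qubit is a pair of complex amplitudes (alpha, beta) for |0> and |1>,
# normalized so that |alpha|^2 + |beta|^2 = 1. A classical bit is just 0 or 1.

def hadamard(state):
    """Apply the Hadamard gate: |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2)."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))

def measurement_probabilities(state):
    """Born rule: probabilities of reading 0 or 1 are the squared magnitudes of the amplitudes."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)

def amplitudes_needed(n_qubits):
    """An n-qubit register is described by 2**n complex amplitudes, versus n values for n bits."""
    return 2 ** n_qubits

zero = (1 + 0j, 0j)
plus = hadamard(zero)
print(measurement_probabilities(plus))  # approximately (0.5, 0.5)
print(amplitudes_needed(10))            # 1024
```

Applying the Hadamard gate twice returns the qubit to |0⟩, a simple example of interference: the two paths to |1⟩ cancel.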

Superconducting Qubits vs Trapped Ion Qubits

Superconducting qubits are circuits built around Josephson junctions and driven by microwave pulses, which enables fast gate operations; because they are fabricated with chip-manufacturing techniques, they also offer scalability advantages for quantum processors. Trapped ion qubits use electromagnetic traps to isolate individual ions, providing much longer coherence times and high-fidelity gate operations, although their gates are typically slower. The trade-off between the faster gates of superconducting qubits and the longer coherence of trapped ion systems shapes current efforts to optimize quantum computing architectures.
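One rough way to frame this trade-off is to ask how many sequential gates fit inside one coherence time. The sketch below uses a simple exponential-decay model, which is an assumption (real devices are characterized by separate T1 and T2 times and by gate fidelities). The two parameter sets are hypothetical round numbers chosen only to show the arithmetic; they are not specifications of any real superconducting or trapped-ion device.

```python
import math

# Rough figure of merit for the gate-speed vs coherence trade-off,
# using a simplified exponential-decay model of coherence loss.

def gates_per_coherence_time(coherence_time_s, gate_time_s):
    """Approximate number of sequential gates that fit within one coherence time."""
    return coherence_time_s / gate_time_s

def remaining_coherence(depth, coherence_time_s, gate_time_s):
    """Fraction of coherence left after `depth` sequential gates, assuming exp(-t / T)."""
    return math.exp(-depth * gate_time_s / coherence_time_s)

# HYPOTHETICAL round numbers for illustration only, not measurements of real hardware.
platforms = {
    "fast_gates_short_coherence": {"coherence_time_s": 100e-6, "gate_time_s": 50e-9},
    "slow_gates_long_coherence": {"coherence_time_s": 1.0, "gate_time_s": 100e-6},
}
for name, params in platforms.items():
    ratio = gates_per_coherence_time(**params)
    left = remaining_coherence(1000, **params)
    print(name, round(ratio), round(left, 3))
```

What matters is the ratio, not either number alone: a platform with slower gates can still execute more operations per coherence time if its coherence is long enough.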

Quantum Supremacy vs Quantum Advantage

Quantum Supremacy refers to the milestone at which a quantum computer performs a computation that no classical computer could complete in any feasible amount of time, even if the task itself has no practical use, marking a clear computational boundary. Quantum Advantage focuses on practical scenarios in which quantum devices solve real-world problems more efficiently than the best classical methods, emphasizing useful applications. Understanding the distinction helps in assessing progress from theoretical breakthroughs to impactful quantum technologies.
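Supremacy-style experiments are hard to check classically partly because brute-force state-vector simulation needs memory that doubles with every added qubit. The sketch below assumes one double-precision complex amplitude (16 bytes) per basis state. Classical simulators also use other methods, such as tensor networks, that can avoid this cost for some circuits, which is one reason specific supremacy claims have been debated.

```python
# Memory needed to store a full n-qubit state vector on a classical computer,
# assuming one complex128 amplitude (16 bytes) per basis state.

BYTES_PER_AMPLITUDE = 16  # two 64-bit floats: real and imaginary parts

def state_vector_bytes(n_qubits):
    """Bytes required to hold all 2**n amplitudes of an n-qubit state."""
    return BYTES_PER_AMPLITUDE * 2 ** n_qubits

for n in (10, 30, 50):
    gib = state_vector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib} GiB")  # 30 qubits -> 16.0 GiB
```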

Quantum Error Correction vs Classical Error Correction

Quantum Error Correction (QEC) uses entanglement to spread quantum information across several physical qubits, then measures error syndromes that reveal which error occurred without revealing the encoded state, protecting it from decoherence and quantum noise. Classical Error Correction, by contrast, uses redundancy and parity checks to detect and correct bit errors in classical data. QEC codes, such as surface codes and other stabilizer codes, can correct both bit flips and phase flips (an error type with no classical counterpart), enabling fault-tolerant quantum computation. Classical techniques cannot be applied directly to quantum systems: the no-cloning theorem forbids copying an unknown quantum state for redundancy, and measuring qubits directly would collapse their superposition.
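The simplest illustration is the 3-qubit bit-flip code, sketched below as a small state-vector simulation. It encodes α|0⟩ + β|1⟩ as α|000⟩ + β|111⟩, which entangles the qubits without copying the unknown amplitudes. It then reads two parity checks that locate a single bit flip without revealing α or β. This code handles bit flips only; correcting phase flips as well requires larger codes such as the surface code. The parity is read directly from the simulated state for simplicity, whereas hardware measures it with ancilla qubits.

```python
import math

# State-vector sketch of the 3-qubit bit-flip code.
# Basis states are indexed 0..7; bit k of the index is the value of qubit k.

def encode(alpha, beta):
    """Encode alpha|0> + beta|1> as alpha|000> + beta|111>."""
    state = [0j] * 8
    state[0b000] = alpha
    state[0b111] = beta
    return state

def apply_x(state, qubit):
    """Pauli X (bit flip) on one qubit: swap amplitudes of basis states differing in that bit."""
    flipped = [0j] * 8
    for index, amp in enumerate(state):
        flipped[index ^ (1 << qubit)] = amp
    return flipped

def syndrome(state):
    """Parities (q0 xor q1, q1 xor q2).

    After at most one bit flip, every basis state in the support has the same parities,
    so the syndrome identifies the error but carries no information about alpha or beta.
    (Simulation shortcut: read from the state vector; hardware uses ancilla measurements.)
    """
    index = next(i for i, amp in enumerate(state) if abs(amp) > 1e-12)
    bits = [(index >> k) & 1 for k in range(3)]
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])

def correct(state):
    """Look up which qubit the syndrome points to and undo the flip."""
    lookup = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}
    qubit = lookup[syndrome(state)]
    return state if qubit is None else apply_x(state, qubit)

alpha, beta = 1 / math.sqrt(3), math.sqrt(2 / 3)
logical = encode(alpha, beta)
for q in range(3):
    corrupted = apply_x(logical, q)
    print(q, syndrome(corrupted), correct(corrupted) == logical)
```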

Quantum Entanglement vs Quantum Superposition

Quantum entanglement links particles so that their measurement outcomes are correlated in ways no classical model can reproduce, regardless of the distance between them, although these correlations cannot be used to send signals faster than light. Entanglement enables key protocols in quantum communication and cryptography. Quantum superposition allows a quantum bit (qubit) to exist in a combination of multiple states at once, forming the foundation for parallelism in quantum algorithms. Superposition lets a quantum computer represent many possibilities concurrently (with interference needed to turn this into a speedup), while entanglement provides the non-classical correlations essential for error correction and secure information transfer.
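The difference shows up clearly in a two-qubit state vector. In the sketch below, putting each qubit into superposition independently (a Hadamard gate on both) gives four equally likely, uncorrelated outcomes. Applying a Hadamard gate followed by a CNOT instead produces the Bell state (|00⟩ + |11⟩)/√2, whose individual outcomes are random but always agree. The qubit ordering (bit k of the index is qubit k) and the function names are conventions chosen for this sketch.

```python
import math

# Two-qubit state vector: index bit k is qubit k, so indices 0..3 are |q1 q0> = 00, 01, 10, 11.

def apply_h(state, qubit):
    """Hadamard on one qubit: |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2)."""
    s = 1 / math.sqrt(2)
    mask = 1 << qubit
    out = [0j] * len(state)
    for index, amp in enumerate(state):
        if index & mask:           # qubit is 1
            out[index ^ mask] += s * amp
            out[index] -= s * amp
        else:                      # qubit is 0
            out[index] += s * amp
            out[index ^ mask] += s * amp
    return out

def apply_cnot(state, control, target):
    """Flip the target qubit in every basis state where the control qubit is 1."""
    out = [0j] * len(state)
    for index, amp in enumerate(state):
        dest = index ^ (1 << target) if index & (1 << control) else index
        out[dest] += amp
    return out

def probabilities(state):
    return [abs(a) ** 2 for a in state]

def prob_equal_outcomes(state):
    """Probability that both qubits give the same result (|00> or |11>)."""
    p = probabilities(state)
    return p[0b00] + p[0b11]

zero = [1 + 0j, 0j, 0j, 0j]
product_state = apply_h(apply_h(zero, 0), 1)   # superposition on each qubit, no entanglement
bell = apply_cnot(apply_h(zero, 0), 0, 1)      # (|00> + |11>)/sqrt(2)
print([round(p, 3) for p in probabilities(product_state)])  # [0.25, 0.25, 0.25, 0.25]
print([round(p, 3) for p in probabilities(bell)])           # [0.5, 0.0, 0.0, 0.5]
print(round(prob_equal_outcomes(product_state), 3), round(prob_equal_outcomes(bell), 3))  # 0.5 1.0
```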

Noisy Intermediate-Scale Quantum (NISQ) vs Fault-Tolerant Quantum Computing

Noisy Intermediate-Scale Quantum (NISQ) devices have a limited number of qubits and operate without full error correction, so the near-term algorithms that run on them must tolerate noise and decoherence. Fault-Tolerant Quantum Computing encodes each logical qubit across many physical qubits using quantum error correction codes such as the surface code; as long as physical error rates stay below the code's threshold, errors are corrected faster than they accumulate, allowing long, reliable computations. While NISQ targets practical applications on imperfect hardware, fault-tolerant systems aim for the scalable, precise quantum computations needed for long-term quantum advantage.
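A commonly quoted heuristic for the surface code captures the threshold idea: below threshold, the logical error rate scales roughly as A·(p/p_th)^((d+1)/2) for code distance d. The sketch below assumes the illustrative values A = 0.1 and p_th = 0.01; these are assumptions, not properties of any specific device. It also counts the physical qubits in one rotated surface-code patch (d² data qubits plus d² − 1 measurement qubits).

```python
# Heuristic surface-code scaling (illustrative, not a device model):
#   p_logical ~ prefactor * (p_physical / p_threshold) ** ((d + 1) / 2)
# prefactor = 0.1 and p_threshold = 0.01 are ASSUMED illustrative values.

def logical_error_rate(p_physical, distance, p_threshold=0.01, prefactor=0.1):
    """Approximate logical error rate per error-correction round for odd code distance d."""
    return prefactor * (p_physical / p_threshold) ** ((distance + 1) / 2)

def rotated_surface_code_qubits(distance):
    """Physical qubits in one rotated surface-code patch: d^2 data + (d^2 - 1) measurement qubits."""
    return 2 * distance ** 2 - 1

for d in (3, 5, 7):
    print(d, rotated_surface_code_qubits(d), logical_error_rate(1e-3, d))
```

Below threshold (p = 0.001 here), each increase in distance suppresses the logical error rate by roughly another factor of ten. Above threshold, larger codes make things worse, which is why NISQ-era hardware cannot simply add qubits to become fault tolerant.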

Quantum Algorithms vs Classical Algorithms

Quantum algorithms exploit superposition, entanglement, and interference to solve certain problems faster than any known classical algorithm. Shor's algorithm factors integers in polynomial time, whereas the best known classical methods take super-polynomial time. Grover's algorithm searches an unsorted database of N items with about √N queries instead of about N, a quadratic rather than exponential speedup. Classical algorithms rely on deterministic or probabilistic processes, and for these same tasks they need super-polynomial time (factoring, with the best known methods) or a number of queries proportional to N (unstructured search). The unique computational capabilities of quantum algorithms stem from quantum parallelism and interference, which have no direct analog in classical computing models.
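Grover's quadratic speedup can be seen in a direct amplitude simulation. Each iteration flips the sign of the marked item (the oracle) and then reflects every amplitude about the mean (the diffusion step). After about (π/4)√N iterations, nearly all probability sits on the marked item, compared with roughly N/2 lookups on average for classical search. Because this sketch simulates the amplitudes on a classical computer, it illustrates the query count rather than delivering a real speedup.

```python
import math

def grover_search(n_items, marked):
    """Simulate Grover's algorithm starting from a uniform superposition over n_items states.

    Returns (iterations used, probability of measuring the marked item).
    All amplitudes stay real, so plain floats suffice.
    """
    amplitudes = [1 / math.sqrt(n_items)] * n_items
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        amplitudes[marked] = -amplitudes[marked]         # oracle: flip the marked item's sign
        mean = sum(amplitudes) / n_items
        amplitudes = [2 * mean - a for a in amplitudes]  # diffusion: inversion about the mean
    return iterations, amplitudes[marked] ** 2

for n in (8, 64, 1024):
    iters, p = grover_search(n, marked=3)
    print(f"N={n}: {iters} Grover iterations vs ~{n // 2} classical lookups, success prob {p:.3f}")
```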

Topological Qubits vs Photonic Qubits

Topological qubits aim to use non-Abelian anyons to encode quantum information in a system's global (topological) properties, which would give them inherent protection against the local noise that causes decoherence in other qubit types; they remain largely experimental. Photonic qubits encode information in the quantum states of photons, such as polarization or time-bin encoding, enabling high-speed transmission and scalability in optical quantum networks. While topological qubits promise robustness against environmental noise through topological protection, photonic qubits excel in long-distance quantum communication and integration with existing fiber-optic infrastructure.
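Polarization encoding can be sketched with real amplitudes. This sketch treats horizontal polarization |H⟩ as 0 and vertical |V⟩ as 1. A photon linearly polarized at angle θ is then cos θ|H⟩ + sin θ|V⟩, and the probability that it passes an analyzer at angle φ is cos²(θ − φ) (Malus's law). The sketch covers linear polarization only, and the function names are illustrative; circular polarization would need complex amplitudes.

```python
import math

# Polarization qubit (linear polarization only): |H> encodes 0, |V> encodes 1.

def polarization_state(theta):
    """Photon linearly polarized at angle theta: cos(theta)|H> + sin(theta)|V>."""
    return (math.cos(theta), math.sin(theta))

def measure_probability(state, analyzer_angle):
    """Probability the photon passes an analyzer at analyzer_angle: squared overlap, cos^2(theta - phi)."""
    a, b = state
    overlap = a * math.cos(analyzer_angle) + b * math.sin(analyzer_angle)
    return overlap ** 2

# Rectilinear (H/V) and diagonal (+45 degrees) states measured with analyzers at 0 and 45 degrees
for name, theta in [("H", 0.0), ("V", math.pi / 2), ("D (+45 deg)", math.pi / 4)]:
    state = polarization_state(theta)
    print(name, round(measure_probability(state, 0.0), 3), round(measure_probability(state, math.pi / 4), 3))
```

Measuring a diagonal photon with a horizontal analyzer gives a 50/50 outcome. Mismatched measurement bases produce random results, and QKD protocols such as BB84 build on exactly this effect.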

Quantum Teleportation vs Quantum Cryptography

Quantum Teleportation transfers a quantum state from one particle to a distant one without moving the particles themselves, using a shared entangled pair plus two bits of classical communication; the original state is destroyed in the process, consistent with the no-cloning theorem. Quantum Cryptography, particularly Quantum Key Distribution (QKD), secures data transmission by using quantum mechanics to detect eavesdropping on the exchange of encryption keys, so that a compromised key can be discarded. Teleportation always requires entanglement, while QKD can use it (as in the E91 protocol) or not (as in BB84, which sends individually prepared photons). Teleportation focuses on state transfer, whereas Cryptography emphasizes secure communication.
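How QKD detects eavesdropping can be illustrated with a simulation of BB84's sifting step over an idealized, noiseless channel. Alice and Bob each choose random bases and keep only the rounds where their bases match. An intercept-resend eavesdropper who guesses bases at random introduces an error rate of about 25% in the sifted key, which Alice and Bob can detect by comparing a sample. The function name, seed, and attack model are assumptions of this sketch, and real QKD also needs error correction and privacy amplification, both omitted here.

```python
import random

def bb84_error_rate(n_photons, eavesdrop, seed=0):
    """Simulate BB84 sifting; return (sifted key length, error rate in the sifted key).

    Bases: 0 = rectilinear (H/V), 1 = diagonal. Measuring in the wrong basis yields a random bit.
    Eavesdropping is modeled as an intercept-resend attack in a randomly chosen basis.
    """
    rng = random.Random(seed)
    errors = sifted = 0
    for _ in range(n_photons):
        bit, alice_basis = rng.randint(0, 1), rng.randint(0, 1)
        photon_bit, photon_basis = bit, alice_basis
        if eavesdrop:
            eve_basis = rng.randint(0, 1)
            if eve_basis != photon_basis:
                photon_bit = rng.randint(0, 1)  # wrong basis: Eve's result is random
            photon_basis = eve_basis            # Eve resends the photon in her own basis
        bob_basis = rng.randint(0, 1)
        bob_bit = photon_bit if bob_basis == photon_basis else rng.randint(0, 1)
        if bob_basis == alice_basis:            # sifting: keep only matching-basis rounds
            sifted += 1
            errors += bob_bit != bit
    return sifted, errors / sifted

for eve in (False, True):
    length, qber = bb84_error_rate(20_000, eavesdrop=eve)
    print("eavesdropper" if eve else "no eavesdropper", length, round(qber, 3))
```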

techiny.com