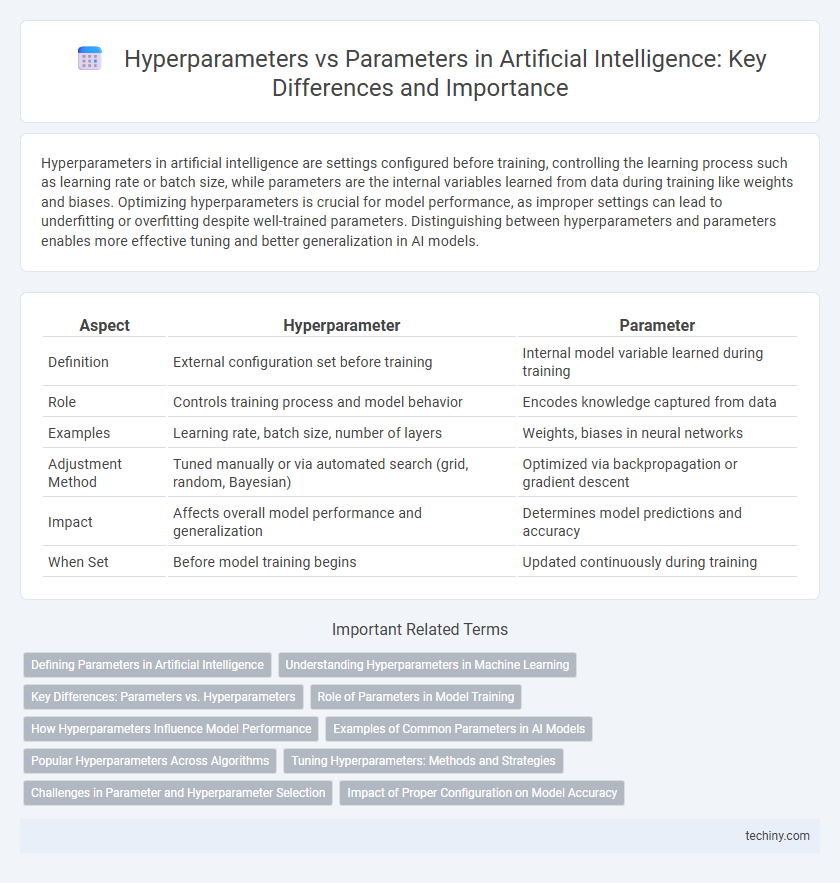

Hyperparameters in artificial intelligence are settings configured before training that control the learning process, such as the learning rate or batch size. Parameters are the internal variables learned from data during training, such as weights and biases. Optimizing hyperparameters is crucial for model performance: poor settings can cause underfitting or overfitting even when the optimizer has fit the parameters well. Distinguishing between hyperparameters and parameters enables more effective tuning and better generalization in AI models.
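To make the distinction concrete, here is a minimal sketch in plain Python (no ML library assumed) that fits a straight line with full-batch gradient descent. The learning rate and number of epochs are hyperparameters fixed before the loop runs. The slope `w` and intercept `b` are parameters that the loop learns. The function name `train_linear_model` and the toy data are illustrative assumptions.

```python
# Hyperparameters: chosen before training and never updated by the optimizer.
LEARNING_RATE = 0.05
EPOCHS = 500


def train_linear_model(xs, ys, learning_rate=LEARNING_RATE, epochs=EPOCHS):
    """Fit y ~ w * x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # parameters: initialized here, then learned from the data
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated by y = 2x + 1
w, b = train_linear_model(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Changing `LEARNING_RATE` or `EPOCHS` changes *how* training proceeds. The values of `w` and `b` are the result of that training.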

Table of Comparison

| Aspect | Hyperparameter | Parameter |
|---|---|---|
| Definition | External configuration set before training | Internal model variable learned during training |
| Role | Controls the training process and model behavior | Encodes knowledge captured from data |
| Examples | Learning rate, batch size, number of layers | Weights and biases in neural networks |
| Adjustment method | Tuned manually or via automated search (grid, random, Bayesian) | Learned by gradient-based optimization such as gradient descent, with gradients computed by backpropagation |
| Impact | Affects overall model performance and generalization | Determines the model's predictions and accuracy |
| When set | Before model training begins | Updated iteratively during training |

Defining Parameters in Artificial Intelligence

Parameters in artificial intelligence are the internal variables of a model that are learned from training data, such as the weights and biases of a neural network. Hyperparameters, in contrast, are external configurations set before training, such as learning rate, batch size, and number of layers. They guide the training process but are not learned directly. Parameters are central to model optimization because their learned values determine how well the model captures underlying patterns and makes accurate predictions.
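The relationship becomes concrete when you count learned values. In a fully connected network, the layer sizes are architectural hyperparameters fixed up front, and they determine how many weights and biases training must learn. The sketch below assumes a plain dense network with one bias per unit. `count_dense_parameters` is a hypothetical helper, not a library function.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes is an architectural hyperparameter, e.g. [784, 128, 10]
    for 784 inputs, one hidden layer of 128 units, and 10 outputs.
    """
    total = 0
    for fan_in, fan_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = fan_in * fan_out  # one weight per connection between the two layers
        biases = fan_out            # one bias per unit in the receiving layer
        total += weights + biases
    return total


print(count_dense_parameters([784, 128, 10]))  # 101770
```

Three hyperparameter values here imply 101,770 learned parameters.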

Understanding Hyperparameters in Machine Learning

Hyperparameters in machine learning are settings configured prior to the training process, such as learning rate, batch size, and number of epochs, which control the behavior and performance of algorithms. Unlike parameters, which are learned from data during training (e.g., weights and biases in neural networks), hyperparameters require manual tuning or automated optimization techniques like grid search and Bayesian optimization. Effective hyperparameter selection significantly impacts model accuracy, generalization, and convergence speed.
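Because hyperparameters are fixed before training starts, one way to keep them fixed is to store them in an immutable configuration object. The sketch below uses a frozen `dataclass` from Python's standard library. The class name `TrainingConfig`, its field names, and its default values are illustrative assumptions. Each tuning trial gets a fresh copy rather than a mutated one.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters fixed before training starts (names and defaults are illustrative)."""
    learning_rate: float = 1e-3
    batch_size: int = 32
    epochs: int = 10

    def __post_init__(self):
        # Reject obviously invalid settings before any training time is spent.
        if self.learning_rate <= 0:
            raise ValueError("learning_rate must be positive")
        if self.batch_size < 1 or self.epochs < 1:
            raise ValueError("batch_size and epochs must be at least 1")


base = TrainingConfig()
# A tuning trial builds a new configuration instead of changing the original in place.
trial = replace(base, learning_rate=1e-2, batch_size=64)
print(base)
print(trial)
```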

Key Differences: Parameters vs. Hyperparameters

Parameters in artificial intelligence models are internal variables learned from training data, such as weights and biases in neural networks, and they directly determine model predictions. Hyperparameters, by contrast, are external configurations set before training. They include training settings such as learning rate, batch size, and number of epochs, as well as architectural choices such as the number of layers. Understanding the difference between parameters and hyperparameters is crucial for optimizing model performance and ensuring effective learning and generalization.

Role of Parameters in Model Training

Parameters are the internal variables of an AI model. They are learned from training data by minimizing a loss function, which improves predictive accuracy. Hyperparameters, set before training begins, control the training process by defining the model architecture, learning rate, and regularization techniques. Optimizing the parameters with gradient descent determines what the model learns. How well the model then performs on unseen data also depends on the hyperparameters and the training data.
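The gradient-descent step can be written as the standard mini-batch update. This is shown as a general formulation, not any specific framework's implementation. Here $\theta$ collects the model parameters, $\eta$ is the learning rate, $B$ is a mini-batch, $f_\theta$ is the model, and $\ell$ is the per-example loss. The learning rate $\eta$ and the batch size $|B|$ are hyperparameters. Only $\theta$ changes from one step to the next.

```latex
\theta_{t+1} = \theta_t - \eta \, \nabla_{\theta} \mathcal{L}(\theta_t),
\qquad
\mathcal{L}(\theta) = \frac{1}{|B|} \sum_{i \in B} \ell\big(f_{\theta}(x_i), y_i\big)
```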

How Hyperparameters Influence Model Performance

Hyperparameters control the training process of machine learning models by setting values such as learning rate, batch size, and number of layers, and they directly affect model convergence and generalization. Unlike parameters, which are learned during training, hyperparameters must be tuned with techniques like grid search or Bayesian optimization to improve model accuracy and reduce overfitting. Well-chosen hyperparameters improve model performance by balancing the bias-variance trade-off and improving predictive power on unseen data.
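The effect of the learning rate on convergence can be seen on the simplest possible loss, $f(x) = x^2$. This is a toy sketch, not a real model. Each gradient step multiplies $x$ by $1 - 2\eta$, so the iterates shrink toward the minimum only when $0 < \eta < 1$. A very small rate converges slowly, and a rate above 1 diverges. The function name is a hypothetical helper.

```python
def gradient_descent_on_quadratic(learning_rate, steps=50, start=1.0):
    """Minimize f(x) = x**2 (gradient 2x) and return the final x."""
    x = start
    for _ in range(steps):
        x -= learning_rate * 2 * x  # each step multiplies x by (1 - 2 * learning_rate)
    return x


for lr in (0.01, 0.1, 0.9, 1.1):
    print(f"learning_rate={lr}: final x = {gradient_descent_on_quadratic(lr):.3g}")
```

After 50 steps, 0.01 is still far from the minimum, 0.1 and 0.9 are close to 0 (0.9 oscillates in sign on the way), and 1.1 has blown up. The loss function and the starting point are the same in every run; only the hyperparameter differs.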

Examples of Common Parameters in AI Models

Common parameters in AI models include the weights and biases of a neural network. They are adjusted during training and directly determine the model's predictions. Hyperparameters, in contrast, are set before training and include the learning rate, batch size, number of hidden layers, and choice of activation function. These settings control the training process and model complexity. An activation function is usually a design choice rather than a learned parameter, although parametric variants such as PReLU learn their slope. Knowing which values are learned and which are configured is crucial for optimizing model performance and achieving accurate results.
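A single dense layer shows both kinds of values side by side. The sketch below is plain Python under illustrative assumptions: the hypothetical `dense_forward` function and the hand-picked weights stand in for values that training would normally produce. The weight rows and biases are parameters. The `activation` argument selects a fixed function from a lookup table, which is a hyperparameter choice.

```python
import math

# Activation functions are chosen by the practitioner (a hyperparameter choice),
# not learned from data.
ACTIVATIONS = {
    "relu": lambda z: max(0.0, z),
    "sigmoid": lambda z: 1.0 / (1.0 + math.exp(-z)),
    "identity": lambda z: z,
}


def dense_forward(inputs, weights, biases, activation="relu"):
    """One fully connected layer: out[j] = act(sum_i inputs[i] * weights[j][i] + biases[j]).

    weights (one row per output unit) and biases are the learned parameters;
    activation names a fixed design choice.
    """
    act = ACTIVATIONS[activation]
    return [
        act(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


weights = [[0.5, -1.0], [2.0, 0.25]]  # parameters (hand-picked here for illustration)
biases = [0.1, -0.5]
print(dense_forward([1.0, 2.0], weights, biases, activation="relu"))  # [0.0, 2.0]
```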

Popular Hyperparameters Across Algorithms

Popular hyperparameters across artificial intelligence algorithms include learning rate, batch size, number of epochs, and regularization strength, which significantly influence model training efficiency and generalization. Parameters, in contrast, are internal model attributes like weights and biases learned directly from the training data. Optimizing hyperparameters such as dropout rate in neural networks or the maximum depth in decision trees can dramatically enhance predictive performance.
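The dropout rate is a good example of a hyperparameter that shapes training without being learned. The sketch below implements the common "inverted dropout" formulation in plain Python. During training it zeroes each activation with probability `rate` and scales the survivors by `1 / (1 - rate)` so that the expected value is unchanged. At inference time it passes inputs through unchanged. The function signature is an illustrative assumption, not a specific framework's API.

```python
import random


def dropout(activations, rate, training=True, rng=None):
    """Inverted dropout over a list of activations.

    rate is the dropout hyperparameter: the probability of zeroing each value.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # inference: no units are dropped
    if rng is None:
        rng = random.Random()
    keep_scale = 1.0 / (1.0 - rate)  # keeps the expected activation unchanged
    # A value survives when the uniform draw is >= rate, i.e. with probability 1 - rate.
    return [a * keep_scale if rng.random() >= rate else 0.0 for a in activations]


out = dropout([1.0] * 10, rate=0.5, rng=random.Random(0))
print(out)  # each entry is either 0.0 or 2.0
```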

Tuning Hyperparameters: Methods and Strategies

Hyperparameter tuning involves selecting values for the settings that control the learning process, such as learning rate, batch size, and number of layers. These differ from parameters, which the model learns during training. Techniques like grid search, random search, and Bayesian optimization systematically explore the hyperparameter space to improve model performance. Cross-validation gives a more reliable estimate of each configuration's performance than a single validation split. Early stopping halts training once validation performance stops improving. Both help reduce overfitting during tuning.
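Grid search combined with k-fold cross-validation can be sketched in a few dozen lines. To keep the example self-contained, it tunes the regularization strength `lam` of a one-dimensional ridge regression with no intercept, which has a closed-form solution. The model, the contiguous fold split, and the helper names are all illustrative assumptions. In each fold, the parameter `w` is learned on the training folds only and then scored on the held-out fold. The hyperparameter is picked by average validation error.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form 1-D ridge regression without intercept: w = sum(x*y) / (sum(x*x) + lam)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def k_fold_mse(xs, ys, lam, k):
    """Average validation MSE of a given lam over k contiguous folds."""
    n = len(xs)
    fold_size = n // k
    errors = []
    for f in range(k):
        start = f * fold_size
        stop = (f + 1) * fold_size if f < k - 1 else n  # last fold takes any remainder
        train_x = xs[:start] + xs[stop:]
        train_y = ys[:start] + ys[stop:]
        w = fit_ridge_1d(train_x, train_y, lam)  # parameter learned on training folds only
        val = list(zip(xs[start:stop], ys[start:stop]))
        errors.append(sum((w * x - y) ** 2 for x, y in val) / len(val))
    return sum(errors) / k


def grid_search(xs, ys, grid, k=5):
    """Return the grid value with the lowest cross-validated MSE, plus all scores."""
    scores = {lam: k_fold_mse(xs, ys, lam, k) for lam in grid}
    return min(scores, key=scores.get), scores


xs = [float(i) for i in range(1, 21)]
ys = [3.0 * x + (0.5 if i % 2 else -0.5) for i, x in enumerate(xs)]  # toy data
best, scores = grid_search(xs, ys, grid=[0.0, 0.1, 1.0, 10.0, 100.0])
print(best, scores)
```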

Challenges in Parameter and Hyperparameter Selection

Parameters such as model weights are learned from training data rather than chosen directly. Whether the learned values generalize depends on avoiding overfitting and underfitting. Hyperparameter tuning, involving choices like learning rate and batch size, requires extensive experimentation and computational resources to find configurations that balance accuracy and generalization. Hyperparameters also determine which parameter values training reaches, and this interplay further complicates selection. Automated hyperparameter optimization techniques help search the resulting high-dimensional space efficiently.
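The high-dimensional search space is one reason automated methods matter. The number of grid points multiplies with every hyperparameter added. The sketch below assumes a small illustrative search space and uses only Python's standard library. It contrasts the cost of an exhaustive grid with random search, which evaluates a fixed budget of sampled configurations. Bayesian optimization, which chooses each new trial based on earlier results, is not shown. The helper names are hypothetical.

```python
import math
import random


def grid_size(search_space):
    """Number of configurations an exhaustive grid search must evaluate."""
    return math.prod(len(values) for values in search_space.values())


def sample_random_configs(search_space, n_trials, seed=0):
    """Random search: draw n_trials configurations, choosing each value uniformly."""
    rng = random.Random(seed)
    return [
        {name: rng.choice(values) for name, values in search_space.items()}
        for _ in range(n_trials)
    ]


# Illustrative search space: 5 candidate values for each of 4 hyperparameters.
space = {
    "learning_rate": [1e-4, 3e-4, 1e-3, 3e-3, 1e-2],
    "batch_size": [16, 32, 64, 128, 256],
    "dropout": [0.0, 0.1, 0.2, 0.3, 0.5],
    "weight_decay": [0.0, 1e-5, 1e-4, 1e-3, 1e-2],
}
print(grid_size(space))  # 625
configs = sample_random_configs(space, n_trials=20)
print(len(configs))  # 20
```

Adding a fifth hyperparameter with five values would raise the grid to 3,125 trials. The random-search budget stays at whatever `n_trials` is set to.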

Impact of Proper Configuration on Model Accuracy

Proper configuration of hyperparameters, such as learning rate, batch size, and number of epochs, directly influences model accuracy by making training effective and preventing overfitting or underfitting. Parameters, which are learned during training, depend on these hyperparameters to converge to values that minimize the loss function. Fine-tuning hyperparameters with methods like grid search or Bayesian optimization can significantly improve the predictive performance and robustness of AI models.
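The number of epochs is often chosen with early stopping instead of being fixed in advance. Training stops once validation loss has not improved for a set number of epochs, and the best epoch seen so far is kept. The sketch below works on a precomputed list of validation losses. The loss values are hypothetical, and `patience` and `min_delta` are themselves hyperparameters of the stopping rule.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the (0-based) epoch with the best validation loss, stopping once the loss
    has failed to improve by more than min_delta for patience consecutive epochs.
    """
    best_epoch, best_loss = 0, float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss = epoch, loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # training would stop here
    return best_epoch


# Hypothetical validation curve: improves, then rises as the model starts to overfit.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60, 0.65]
print(early_stopping_epoch(losses, patience=3))  # 3
```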

Hyperparameter vs Parameter Infographic

techiny.com
