SRAM stores each bit in a bistable latching circuit, which gives it lower access latency than DRAM. DRAM stores each bit as charge on a capacitor. Because that charge leaks away, the cells must be refreshed periodically, but the simpler cell gives DRAM higher density and lower cost per bit. SRAM is therefore ideal for cache memory, where speed matters most, while DRAM is preferred for main system memory, where capacity matters most.

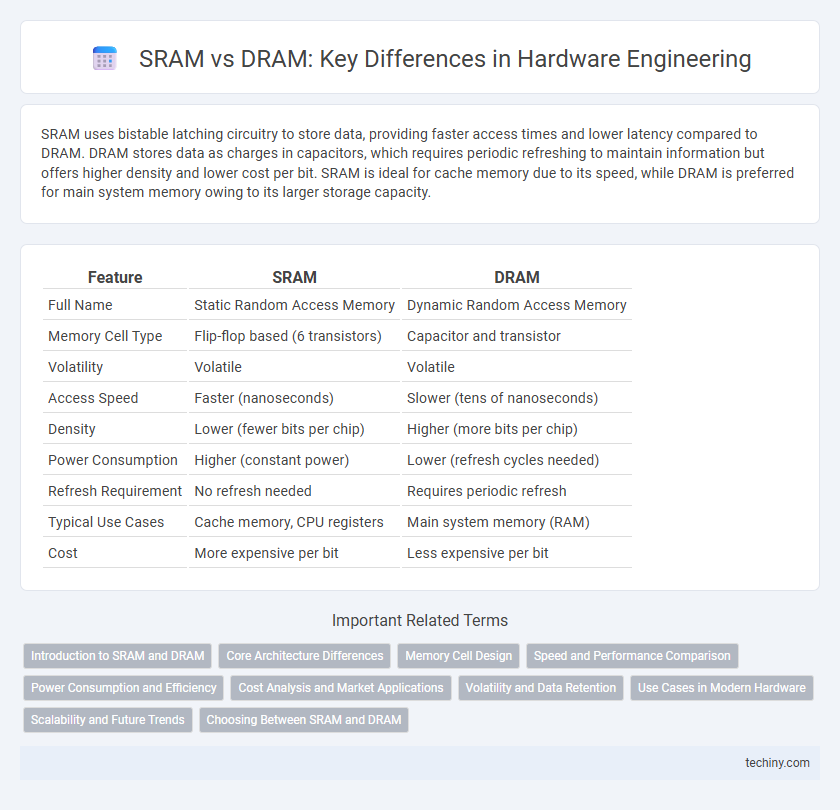

Comparison Table

| Feature | SRAM | DRAM |
|---|---|---|
| Full Name | Static Random-Access Memory | Dynamic Random-Access Memory |
| Memory Cell Type | Cross-coupled latch (typically 6 transistors, 6T) | One transistor and one capacitor (1T1C) |
| Volatility | Volatile | Volatile |
| Access Speed | Faster (nanoseconds) | Slower (tens of nanoseconds) |
| Density | Lower (fewer bits per chip) | Higher (more bits per chip) |
| Power Consumption | Higher per bit (every cell leaks while powered; no refresh) | Lower per bit (but refresh draws power even when idle) |
| Refresh Requirement | No refresh needed | Requires periodic refresh |
| Typical Use Cases | Cache memory, CPU registers | Main system memory (RAM) |
| Cost | More expensive per bit | Less expensive per bit |

Introduction to SRAM and DRAM

SRAM (Static Random-Access Memory) uses bistable latching circuitry to store each bit, so it offers fast access and holds its data without periodic refreshing for as long as power is applied. DRAM (Dynamic Random-Access Memory) stores each bit in a capacitor that must be refreshed periodically, but its simpler cell allows higher density at a lower cost per bit. SRAM is typically used for cache memory because of its speed, while DRAM serves as the main system memory in computers.

Core Architecture Differences

SRAM (Static Random-Access Memory) stores each bit in a bistable latch formed by two cross-coupled inverters (a flip-flop), which holds its state stably without periodic refresh cycles. DRAM (Dynamic Random-Access Memory) uses a single transistor and capacitor pair per bit and requires periodic refresh because charge leaks from the capacitor. SRAM's architecture provides lower latency, while DRAM's simpler cell structure offers higher density and better cost efficiency.
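The difference between a latch that keeps driving its value and a capacitor that slowly leaks can be illustrated with a small behavioral model. This is a minimal Python sketch, not a circuit simulation: the class names `SRAMCell` and `DRAMCell`, the exponential leakage model, the 200 ms time constant, and the 50% sense threshold are hypothetical values chosen for illustration. The 64 ms refresh interval in the demo mirrors the retention window commonly used by DDR DRAM.

```python
import math


class SRAMCell:
    """Latch-based cell: the cross-coupled inverters keep driving the stored
    value, so it persists for as long as the cell is powered."""

    def __init__(self) -> None:
        self.bit = 0

    def write(self, bit: int) -> None:
        self.bit = 1 if bit else 0

    def elapse(self, ms: float) -> None:
        # Nothing decays in a powered latch, so time has no effect.
        pass

    def read(self) -> int:
        return self.bit


class DRAMCell:
    """1T1C cell: stored charge leaks away, and a read compares the remaining
    charge against a sense threshold. All constants are hypothetical."""

    def __init__(self, tau_ms: float = 200.0, threshold: float = 0.5) -> None:
        self.tau_ms = tau_ms          # assumed leakage time constant
        self.threshold = threshold    # assumed sense threshold (fraction of full charge)
        self.charge = 0.0             # 0.0 = empty, 1.0 = fully charged

    def write(self, bit: int) -> None:
        self.charge = 1.0 if bit else 0.0

    def elapse(self, ms: float) -> None:
        # Simple exponential leakage model.
        self.charge *= math.exp(-ms / self.tau_ms)

    def read(self) -> int:
        bit = 1 if self.charge >= self.threshold else 0
        # A real DRAM read is destructive; the sense amplifier writes the
        # sensed value back, which this model mirrors by rewriting it.
        self.write(bit)
        return bit

    def refresh(self) -> None:
        # Refresh is essentially a read that restores full charge.
        self.read()


sram = SRAMCell()
sram.write(1)
sram.elapse(10_000)
print(sram.read())        # 1: the latch never needs refreshing

refreshed = DRAMCell()
refreshed.write(1)
for _ in range(10):       # 640 ms in total, refreshed every 64 ms
    refreshed.elapse(64)
    refreshed.refresh()
print(refreshed.read())   # 1: each refresh restores the charge in time

neglected = DRAMCell()
neglected.write(1)
neglected.elapse(200)
print(neglected.read())   # 0: the charge fell below the threshold
```

In this model the charge drops to about 73% of full after 64 ms, still above the threshold, so each refresh rescues the bit; left alone for 200 ms it falls to about 37% and reads back as 0.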

Memory Cell Design

SRAM memory cells typically use six transistors: four form two cross-coupled inverters that hold the bit, and two access transistors connect the cell to the bit lines. The cell keeps its value without refresh cycles, enabling faster access and lower latency than DRAM. DRAM memory cells use a single transistor and a capacitor to store each bit and require periodic refresh because of charge leakage, which costs some bandwidth and power. The six-transistor SRAM cell occupies much more silicon area per bit and leaks through more transistors, while DRAM's simpler cell allows higher density and lower cost per bit.
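To put the density difference in numbers, the sketch below converts a cell area, expressed in multiples of F² (F being the process feature size), into raw bits per square millimetre. The area factors (120 F² for a 6T SRAM cell, 6 F² for a 1T1C DRAM cell) and the 20 nm feature size are illustrative assumptions, not figures from this article; real values vary by process, and the calculation ignores sense amplifiers, decoders, and other peripheral circuitry.

```python
# Illustrative cell-area factors in units of F^2 (F = process feature size).
SRAM_6T_CELL_F2 = 120.0   # assumed area of a six-transistor SRAM cell
DRAM_1T1C_CELL_F2 = 6.0   # assumed area of a one-transistor, one-capacitor DRAM cell


def bits_per_mm2(cell_area_f2: float, feature_nm: float) -> float:
    """Raw bits per mm^2 of cell array, ignoring sense amps, decoders and wiring."""
    cell_area_nm2 = cell_area_f2 * feature_nm ** 2
    return 1e12 / cell_area_nm2  # 1 mm^2 = 1e12 nm^2


FEATURE_NM = 20.0  # hypothetical feature size, used for both arrays for simplicity

sram = bits_per_mm2(SRAM_6T_CELL_F2, FEATURE_NM)
dram = bits_per_mm2(DRAM_1T1C_CELL_F2, FEATURE_NM)
print(f"SRAM: {sram / 1e6:.1f} Mbit/mm^2")       # SRAM: 20.8 Mbit/mm^2
print(f"DRAM: {dram / 1e6:.1f} Mbit/mm^2")       # DRAM: 416.7 Mbit/mm^2
print(f"DRAM/SRAM density: {dram / sram:.0f}x")  # DRAM/SRAM density: 20x
```

Under these assumptions the DRAM array stores about 20 times as many bits in the same area, which is the root of its lower cost per bit.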

Speed and Performance Comparison

SRAM offers significantly faster access times than DRAM. During a read, the SRAM cell's inverters actively drive the bit lines and the read does not destroy the stored value, and processor caches built from SRAM sit on the same die as the cores. A DRAM read must activate a row, let each capacitor share its small charge with a bit line, amplify the resulting signal, and write the value back, and main-memory DRAM usually sits on separate chips; refresh operations add occasional further delays. In exchange, DRAM provides higher density and lower cost per bit, making it suitable for larger memories. In performance-sensitive designs, SRAM is preferred for cache memory where speed is critical, whereas DRAM is used for main system memory to balance performance and capacity.
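The speed gap matters because an SRAM cache hides most DRAM accesses. The sketch below uses the standard average memory access time (AMAT) formula, hit time + miss rate × miss penalty, together with the usual conversion of DDR CAS latency from clock cycles to nanoseconds. The function names and all example numbers (a 1 ns SRAM hit, a 5% miss rate, an 80 ns DRAM penalty, DDR4-3200 CL22) are hypothetical illustrations, not measurements.

```python
def cas_latency_ns(cl_cycles: int, data_rate_mts: int) -> float:
    """First-word CAS latency of DDR memory in nanoseconds.
    DDR transfers twice per clock, so the clock runs at data_rate / 2 MHz
    and one clock period is 2000 / data_rate nanoseconds."""
    return cl_cycles * 2000.0 / data_rate_mts


def amat_ns(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time for one cache level backed by slower memory."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical example values, not measurements.
print(cas_latency_ns(22, 3200))   # 13.75 (DDR4-3200 with CL22)
print(amat_ns(1.0, 0.05, 80.0))   # 5.0 (1 ns SRAM hit, 5% misses, 80 ns DRAM penalty)
```

CAS latency is only one part of a DRAM access; row activation, the memory controller, and the on-chip interconnect add more, which is why the example miss penalty is much larger than 13.75 ns. With the cache, the average access in this example costs 5 ns instead of the 80 ns every access would pay if it went straight to DRAM.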

Power Consumption and Efficiency

SRAM needs no refresh, so a small SRAM array can retain data with low standby power, which makes it attractive for caches and for low-capacity embedded memories in low-power hardware. Every SRAM cell still leaks current through its transistors while powered, however, so per-bit static power is higher than DRAM's and grows with array size. DRAM must refresh every row periodically (DDR4 devices, for example, refresh all rows within a 64 ms window at normal operating temperatures), so it draws refresh power even when idle, and each row activation also costs energy. DRAM therefore suits high-capacity storage, where its lower cost and area per bit outweigh the refresh overhead.
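Because refresh runs on a fixed schedule, DRAM's refresh power can be estimated as the refresh-command rate multiplied by the energy of each refresh. The sketch below assumes the DDR4 scheme of 8192 refresh commands per retention window, with the window shrinking from 64 ms to 32 ms at extended (hot) operating temperatures. The 100 nJ energy per refresh command is a hypothetical parameter, not a datasheet value.

```python
REFRESH_COMMANDS_PER_WINDOW = 8192  # DDR4: 8192 REF commands per retention window


def refresh_commands_per_second(window_ms: float) -> float:
    """How many refresh commands the controller issues each second."""
    return REFRESH_COMMANDS_PER_WINDOW * 1000.0 / window_ms


def refresh_power_mw(window_ms: float, energy_per_refresh_nj: float) -> float:
    """Average refresh power in milliwatts (nJ per second = nW, so divide by 1e6).
    This power is drawn even when no reads or writes occur."""
    return refresh_commands_per_second(window_ms) * energy_per_refresh_nj / 1e6


ENERGY_PER_REFRESH_NJ = 100.0  # hypothetical energy per refresh command

normal = refresh_power_mw(64.0, ENERGY_PER_REFRESH_NJ)  # normal temperature range
hot = refresh_power_mw(32.0, ENERGY_PER_REFRESH_NJ)     # extended temperature range
print(f"{refresh_commands_per_second(64.0):.0f} refreshes/s")  # 128000 refreshes/s
print(f"normal: {normal:.1f} mW, hot: {hot:.1f} mW")           # normal: 12.8 mW, hot: 25.6 mW
```

Halving the retention window doubles the refresh rate and, for a fixed energy per command, the refresh power, which is one reason hot DRAM costs more to keep alive.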

Cost Analysis and Market Applications

SRAM offers lower latency than DRAM but costs significantly more per bit, because its six-transistor cell needs much more silicon area, which makes it uneconomical for large memory arrays. DRAM's simpler, denser cell makes it the economical choice for bulk memory, and it is widely used as main memory in consumer electronics and data centers. The market therefore favors SRAM for cache memory and embedded systems where speed is critical, while DRAM dominates cost-sensitive applications that need large memory capacity.

Volatility and Data Retention

SRAM (Static Random-Access Memory) is volatile memory that retains data as long as power is supplied, using a latch to store each bit, so it provides fast access without periodic refresh. DRAM (Dynamic Random-Access Memory) is also volatile, but the charge stored in its capacitors must be refreshed periodically to preserve data, and refresh operations temporarily block normal accesses, adding to DRAM's longer access times. Both memories lose their data when power is removed; while powered, SRAM holds data stably with no maintenance, which, together with its speed, makes it preferable for processor cache memory.
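The access-time cost of refresh can be estimated from two DDR timing parameters: while a refresh command executes (the refresh cycle time, tRFC) the device cannot serve reads or writes, and one command is issued every refresh interval (tREFI). The sketch below assumes DDR4 timing at normal temperature, tREFI = 64 ms / 8192 = 7.8125 µs, and tRFC = 350 ns, a typical value for 8 Gb DDR4 devices; treat both as example inputs rather than figures for any particular part.

```python
def refresh_busy_fraction(trfc_ns: float, trefi_ns: float) -> float:
    """Fraction of time a DRAM device is busy refreshing and cannot serve accesses."""
    return trfc_ns / trefi_ns


T_REFI_NS = 64e6 / 8192   # 64 ms window split into 8192 commands = 7812.5 ns
T_RFC_NS = 350.0          # assumed refresh cycle time

busy = refresh_busy_fraction(T_RFC_NS, T_REFI_NS)
print(f"tREFI = {T_REFI_NS} ns, busy refreshing {busy:.2%} of the time")
# tREFI = 7812.5 ns, busy refreshing 4.48% of the time
```

Under these assumptions the device spends roughly 4.5% of its time refreshing, time that an SRAM array never loses.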

Use Cases in Modern Hardware

SRAM excels as CPU cache memory because of its high speed and low latency, making it ideal for storing frequently accessed data in modern processors. DRAM is widely used for main memory and, in the form of GDDR, for graphics card memory, where high density and cost-effectiveness are critical for holding large data sets in servers and consumer devices. Mobile devices likewise pair on-chip SRAM caches with DRAM (typically LPDDR) for bulk memory, balancing power consumption and performance.

Scalability and Future Trends

SRAM offers faster access and lower latency, making it ideal for cache memory, but its lower density and higher area and leakage per bit limit how far it can scale compared to DRAM. DRAM's higher density and lower manufacturing cost let it scale to the large capacities needed for main memory in modern computing systems. Emerging technologies such as 3D-stacked DRAM (for example, High Bandwidth Memory, HBM) and advanced materials aim to extend DRAM scaling, while SRAM innovations focus on improving power efficiency and integration in heterogeneous computing architectures.

Choosing Between SRAM and DRAM

Choosing between SRAM and DRAM depends on speed, density, and power consumption requirements in hardware engineering. SRAM offers faster access times and lower latency, ideal for CPU caches and registers, but consumes more silicon area and power per bit. DRAM provides higher density and lower cost per bit, making it suitable for main memory despite slower access speeds and the need for periodic refresh.

SRAM vs DRAM Infographic

techiny.com